Fine-tuning machine learning models starts with having well-prepared datasets. This guide will walk you through how to create these datasets, from gathering data to making instruction files. By the end, you’ll be equipped with practical knowledge and tools to prepare high-quality datasets for your fine-tuning tasks.

This post continues our detailed guides on preparing data for RAG and building end-to-end RAG applications with Couchbase vector search.

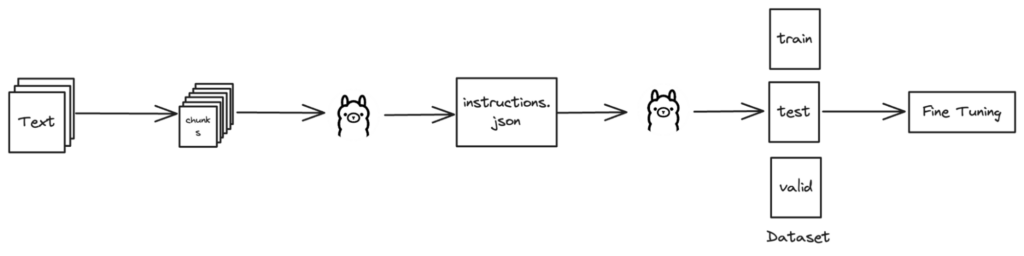

High-Level Overview

Data collection/gathering

The first step is gathering data from various sources. This involves collecting raw information that will later be cleaned and organized into structured datasets.

For an in-depth, step-by-step guide on preparing data for retrieval augmented generation, please refer to our comprehensive blog post: “Step by Step Guide to Prepare Data for Retrieval Augmented Generation”.

Our approach to data collection

In our approach, we utilized multiple methods to gather all relevant data:

- Web scraping using Scrapy:
  - Scrapy is a powerful Python framework for extracting data from websites. It lets you write spiders that crawl websites and scrape data efficiently.

- Extracting documents from Confluence:
  - We downloaded documents directly from our Confluence workspace. This step can also be automated with scripts that use the Confluence API.

- Retrieving relevant files from Git repositories:
  - We wrote custom scripts to clone repositories and pull the relevant files, which ensured we gathered all the necessary data stored in our version control systems.

By combining these methods, we ensured a comprehensive and efficient data collection process, covering all necessary sources.
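The Git step can be sketched as follows. This is a minimal illustration under stated assumptions, not the scripts we used: the helper names `clone_repo` and `collect_files`, the shallow clone, and the default extension filter are all hypothetical choices.

```python
import subprocess
from pathlib import Path


def clone_repo(repo_url, dest_dir):
    """Shallow-clone a repository (hypothetical helper; requires git on PATH)."""
    subprocess.run(["git", "clone", "--depth", "1", repo_url, str(dest_dir)], check=True)


def collect_files(root_dir, extensions=(".md", ".txt", ".rst")):
    """Return files under root_dir whose suffix is in `extensions`, skipping .git internals."""
    root = Path(root_dir)
    files = []
    for path in sorted(root.rglob("*")):
        if ".git" in path.relative_to(root).parts:
            continue  # ignore Git's own metadata
        if path.is_file() and path.suffix.lower() in extensions:
            files.append(path)
    return files
```

After cloning, calling `collect_files` on the checkout directory returns the documentation-style files in a stable, sorted order, ready for text extraction.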

Text content extraction

Once data is collected, the next crucial step is extracting text from documents such as web pages and PDFs. This process involves parsing these documents to obtain clean, structured text data.

For detailed steps and code examples on extracting text from these sources, refer to our comprehensive guide in the blog post: “Step by Step Guide to Prepare Data for Retrieval Augmented Generation”.

Libraries used for text extraction

- HTML: BeautifulSoup is used to navigate HTML structures and extract text content.

- PDFs: PyPDF2 facilitates reading PDF files and extracting text from each page.

These tools enable us to transform unstructured documents into organized text data ready for further processing.
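A minimal sketch of the extraction step, assuming `bs4` and `PyPDF2` are installed. The function names are hypothetical, and removing `<script>`/`<style>` tags and collapsing whitespace is one reasonable cleaning choice rather than the exact procedure from the companion guide. The third-party imports are done inside the functions so `normalize_text` works on its own.

```python
def extract_html_text(html):
    """Return the visible text of an HTML document using BeautifulSoup."""
    from bs4 import BeautifulSoup  # lazy import: only needed for HTML input
    soup = BeautifulSoup(html, "html.parser")
    for tag in soup(["script", "style"]):
        tag.decompose()  # drop content that is never rendered as text
    return normalize_text(soup.get_text(separator="\n"))


def extract_pdf_text(pdf_path):
    """Concatenate the text of every page in a PDF using PyPDF2."""
    from PyPDF2 import PdfReader  # lazy import: only needed for PDF input
    reader = PdfReader(pdf_path)
    pages = [page.extract_text() or "" for page in reader.pages]
    return normalize_text("\n".join(pages))


def normalize_text(text):
    """Collapse runs of whitespace within each line and drop empty lines."""
    lines = (" ".join(line.split()) for line in text.splitlines())
    return "\n".join(line for line in lines if line)
```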

Creating sample JSON data

This section focuses on generating instructions for dataset creation using functions like generate_content() and generate_instructions(), which derive questions based on domain knowledge.

Generating instructions (questions)

To generate instruction questions, we’ll follow these steps:

- Chunk sections: the text is chunked semantically to ensure meaningful and contextually relevant questions.

- Formulate questions: each chunk is sent to a large language model (LLM), which generates a question based on that chunk.

- Create JSON format: finally, we structure the questions and associated information in a JSON format for easy access and use.
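The code later in this post splits the text one sentence at a time with NLTK. If you want larger chunks that give the LLM more context per question, one simple option is to group consecutive sentences up to a size budget. This sketch is an assumption, not the post's method: it uses a regex sentence splitter instead of NLTK and a character limit instead of true semantic similarity, and `chunk_sentences` and `max_chars` are hypothetical names.

```python
import re


def chunk_sentences(text, max_chars=500):
    """Group consecutive sentences into chunks of at most max_chars characters.

    Sentences are split with a simple regex (end punctuation followed by
    whitespace); a single sentence longer than max_chars becomes its own chunk.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Each returned chunk could then be passed to `generate_instructions` in place of a single sentence.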

Sample instructions.json

Here’s an example of what the instructions.json file might look like after generating and saving the instructions:

```json
[
    "What is the significance of KV-Engine in the context of Magma Storage Engine?",
    "What is the significance of Architecture in the context of Magma Storage Engine?"
]
```

Implementation

To implement this process:

- Load domain knowledge: retrieve domain-specific information from a designated file.

- Generate instructions: use generate_content() to break down the data, and generate_instructions() to formulate questions.

- Save questions: use save_instructions() to store the generated questions in a JSON file.

generate_content function

The generate_content function tokenizes the domain knowledge into sentences and then generates logical questions based on those sentences:

```python
import nltk

# nltk.sent_tokenize needs the Punkt sentence models; download them once with
# nltk.download("punkt") (newer NLTK releases look for "punkt_tab" instead).

def generate_content(domain_knowledge, context):
    questions = []
    # Tokenize domain knowledge into sentences
    sentences = nltk.sent_tokenize(domain_knowledge)
    # Generate one question per sentence using the LLM
    for sentence in sentences:
        question = generate_instructions(sentence, context)
        questions.append(question)
    return questions
```

generate_instructions function

This function generates an instruction question by sending the domain knowledge, the context, and a fixed prompt to a locally running Ollama server:

```python
import requests


def generate_instructions(domain, context, model='llama2'):
    # Default model assumed to be llama2, the same model used by query_ollama
    prompt = ("Generate a question from the domain knowledge provided which can be answered "
              "with the domain knowledge given. Don't create or print any numbered lists, "
              "no greetings, directly print the question.")
    url = 'http://localhost:11434/api/generate'  # Ollama serves plain HTTP by default
    data = {"model": model, "stream": False,
            "prompt": f"[DOMAIN] {domain} [/DOMAIN] [CONTEXT] {context} [/CONTEXT] {prompt}"}
    response = requests.post(url, json=data)
    response.raise_for_status()
    return response.json()['response'].strip()
```
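Even with the "directly print the question" instruction, a model may still number its reply, wrap it in quotes, or add a short preamble. A small post-processing step can normalize the reply before it is stored. This is a hypothetical helper (`clean_question` is not part of the original scripts), and its heuristics (prefer the first line ending in "?", strip list markers and quotes) are assumptions you may want to tune.

```python
import re

# Matches a leading list marker such as "1.", "2)", "-" or "*"
_LIST_MARKER = re.compile(r"^(\d+[.)]|[-*])\s*")


def clean_question(raw):
    """Reduce an LLM reply to a single question line."""
    lines = []
    for line in raw.splitlines():
        line = _LIST_MARKER.sub("", line.strip()).strip().strip('"\'')
        if line:
            lines.append(line)
    # Prefer the first line that is actually phrased as a question
    for line in lines:
        if line.endswith("?"):
            return line
    return lines[0] if lines else ""
```

For example, `clean_question(generate_instructions(sentence, context))` would keep only the question text.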

Loading and saving domain knowledge

We use two additional functions: load_domain_knowledge() to load the domain knowledge from a file and save_instructions() to save the generated instructions to a JSON file.

load_domain_knowledge function

This function loads domain knowledge from a specified file.

```python
def load_domain_knowledge(domain_file):
    with open(domain_file, 'r') as file:
        domain_knowledge = file.read()
    return domain_knowledge
```

save_instructions function

This function saves the generated instructions to a JSON file:

```python
import json


def save_instructions(instructions, filename):
    with open(filename, 'w') as file:
        json.dump(instructions, file, indent=4)
```

Example usage

Here’s an example demonstrating how these functions work together:

```python
# Example usage
domain_file = "domain_knowledge.txt"
context = "sample context"

domain_knowledge = load_domain_knowledge(domain_file)
instructions = generate_content(domain_knowledge, context)
save_instructions(instructions, "instructions.json")
```

This workflow allows for efficient creation and storage of questions for dataset preparation.
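Because generate_content produces one question per sentence, closely related sentences may yield the same question with only cosmetic differences. One optional step is to deduplicate before calling save_instructions. This is a hypothetical helper, and treating questions as duplicates when they differ only in case and whitespace is an assumption.

```python
def deduplicate_questions(questions):
    """Drop empty and duplicate questions, ignoring case and extra whitespace.

    The first occurrence of each question is kept, in its original order.
    """
    seen = set()
    unique = []
    for question in questions:
        key = " ".join(question.split()).casefold()
        if key and key not in seen:
            seen.add(key)
            unique.append(question.strip())
    return unique
```

In the example above, this would sit between the two calls: `save_instructions(deduplicate_questions(instructions), "instructions.json")`.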

Generating datasets (train, test, validate)

This section guides you through creating datasets to fine-tune models such as Mistral 7B, using Llama 2 served locally by Ollama to generate the answers. To keep those answers accurate, you need domain knowledge stored in a file such as domain.txt.

Python functions for dataset creation

query_ollama function

This function sends a prompt, together with the domain knowledge and optional context, to Llama 2 running on Ollama, and then asks the model for a likely follow-up question to its own answer:

```python
import requests


def query_ollama(prompt, domain, context='', model='llama2'):
    url = 'http://localhost:11434/api/generate'  # Ollama's default local endpoint (plain HTTP)
    data = {"model": model, "stream": False,
            "prompt": f"[DOMAIN] {domain} [/DOMAIN] [CONTEXT] {context} [/CONTEXT] {prompt}"}
    response = requests.post(url, json=data)
    response.raise_for_status()
    answer = response.json()['response'].strip()

    # Ask the model for a plausible follow-up question to the answer it just gave
    followup_data = {"model": model, "stream": False,
                     "prompt": answer + "\n\nWhat is a likely follow-up question or request? "
                               "Return just the text of one question or request."}
    followup_response = requests.post(url, json=followup_data)
    followup_response.raise_for_status()
    followup = followup_response.json()['response'].replace('"', '').strip()
    return answer, followup
```

create_validation_file function

This function divides the data into training, testing, and validation sets using an 80/10/10 split, and saves each set to a separate file for model training:

```python
def create_validation_file(temp_file, train_file, valid_file, test_file):
    with open(temp_file, 'r') as file:
        lines = file.readlines()

    # 80% train, 10% test, 10% validation, in file order (no shuffling)
    train_end = int(len(lines) * 0.8)
    test_end = int(len(lines) * 0.9)
    train_lines = lines[:train_end]
    test_lines = lines[train_end:test_end]
    valid_lines = lines[test_end:]

    # 'w' overwrites earlier output so re-running does not duplicate records
    with open(train_file, 'w') as file:
        file.writelines(train_lines)
    with open(valid_file, 'w') as file:
        file.writelines(valid_lines)
    with open(test_file, 'w') as file:
        file.writelines(test_lines)
```
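create_validation_file splits the lines in file order, so questions generated from the same part of the domain file end up in the same split. If you want each split to be a random sample, one option is to shuffle with a fixed seed first. The sketch below is an assumption rather than the post's method; `split_records` and its parameters are hypothetical.

```python
import random


def split_records(records, train_frac=0.8, test_frac=0.1, seed=42):
    """Shuffle a copy of the records and split them into train/test/validation lists."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    train_end = int(len(shuffled) * train_frac)
    test_end = train_end + int(len(shuffled) * test_frac)
    # Whatever remains after train and test becomes the validation set
    return shuffled[:train_end], shuffled[train_end:test_end], shuffled[test_end:]
```

To use it with the temporary JSONL file, read the lines with `readlines()`, pass them to `split_records`, and write each returned list with `writelines()`.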

Managing dataset creation

main function

The main function coordinates dataset generation, from querying Llama 2 through Ollama to formatting the results as JSONL files for model training:

```python
import json
import sys
from pathlib import Path


def main(temp_file, instructions_file, train_file, valid_file, test_file, domain_file, context=''):
    # Check that the input files exist
    if not Path(instructions_file).is_file():
        sys.exit(f'{instructions_file} not found.')
    if not Path(domain_file).is_file():
        sys.exit(f'{domain_file} not found.')

    # Load domain knowledge
    domain = load_domain_knowledge(domain_file)

    # Load instructions from file
    with open(instructions_file, 'r') as file:
        instructions = json.load(file)

    # 'w' starts a fresh temporary file on every run
    with open(temp_file, 'w') as temp:
        for i, instruction in enumerate(instructions, start=1):
            print(f"Processing ({i}/{len(instructions)}): {instruction}")
            # Query Llama 2 via Ollama for an answer and a follow-up question
            answer, followup_question = query_ollama(instruction, domain, context)
            # Format the result as one JSONL record
            result = json.dumps({
                'text': f'<s>[INST] {instruction}[/INST] {answer}</s>[INST]{followup_question}[/INST]'
            }) + "\n"
            temp.write(result)

    # Create train, test, and validation files
    create_validation_file(temp_file, train_file, valid_file, test_file)
    print("Done! Training, testing, and validation JSONL files created.")
```
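Before handing the JSONL files to a fine-tuning job, it can help to confirm that every line parses and follows the `<s>[INST] ... [/INST]` template that main writes. This checker is a hypothetical helper, not part of the original scripts, and its checks cover only the record format used in main.

```python
import json


def validate_jsonl(path):
    """Check that every non-empty line is a JSON object with a string 'text' field.

    Returns the number of valid records; raises ValueError naming the first bad line.
    """
    count = 0
    with open(path, "r", encoding="utf-8") as file:
        for line_number, line in enumerate(file, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError as err:
                raise ValueError(f"{path}:{line_number}: invalid JSON ({err.msg})") from err
            if not isinstance(record, dict) or not isinstance(record.get("text"), str):
                raise ValueError(f"{path}:{line_number}: missing string 'text' field")
            if not record["text"].startswith("<s>[INST]"):
                raise ValueError(f"{path}:{line_number}: text does not start with '<s>[INST]'")
            count += 1
    return count
```

Running it on each of the training, testing, and validation files gives a quick record count and catches malformed lines early.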

Using these tools

To start building fine-tuning datasets for models like Mistral 7B with Llama 2 running on Ollama:

- Prepare domain knowledge: store domain-specific details in domain.txt.

- Generate instructions: create a JSON file, instructions.json, with the prompts for dataset creation.

- Run the main function: call main() with the file paths to create the training, testing, and validation datasets.

Together, these Python functions turn raw domain knowledge into instruction-style JSONL training, testing, and validation files that you can use to fine-tune models such as Mistral 7B.

Conclusion

That’s all for today! With these steps, you now have the knowledge and tools to improve your machine learning model training process. Thank you for reading, and we hope you’ve found this guide valuable. Be sure to explore our other blogs for more insights. Stay tuned for the next part in this series and check out other vector search-related blogs. Happy modeling, and see you next time!

Contributors

Sanjivani Patra – Nishanth VM – Ashok Kumar Alluri