Data preprocessing is a vital step in machine learning that transforms raw, messy data into a clean and structured format for model training. It involves cleaning, transforming, encoding, and splitting data to improve model accuracy, prevent data leakage, and ensure compatibility with algorithms. While often confused with data cleaning, preprocessing encompasses a broader set of tasks critical to reliable machine learning pipelines. Using tools like Pandas, Scikit-learn, and Apache Spark helps streamline this process, making it scalable and effective across different project sizes and complexities.

What is data preprocessing in machine learning?

Data preprocessing in machine learning refers to the steps taken to clean, organize, and transform raw data into a format that machine learning algorithms can use effectively. Real-world data is often messy because it includes missing values, inconsistent formats, outliers, and irrelevant features. Without proper preprocessing, even the most sophisticated machine learning models can struggle to find patterns or may produce misleading results.

Effective data preprocessing not only improves the accuracy and efficiency of ML models but also helps uncover deeper insights hidden within the data. It sets the foundation for any successful ML project by ensuring the input data is high quality, consistent, and relevant.

Data preprocessing vs. data cleaning

While data preprocessing and data cleaning are often used interchangeably, they refer to different stages in the data preparation pipeline. Data cleaning is actually a subset of the broader data preprocessing process. Understanding the differences between the two is crucial to building reliable machine learning models, as each plays a unique role in preparing raw data for analysis. The table below clarifies their specific purposes, tasks, and importance.

| Aspect | Data Cleaning | Data Preprocessing |
| --- | --- | --- |
| Scope | Narrow – focuses on removing data issues | Broad – includes cleaning, transforming, and preparing data for machine learning |
| Main Goal | Improve data quality | Make data suitable for model training and evaluation |
| Typical Tasks | Removing duplicates, handling missing values | Cleaning, normalization, encoding, feature engineering, and splitting |
| Involves Transformation? | Rarely | Frequently (e.g., scaling, encoding, aggregation) |
| Used In | Data wrangling, early analysis | Full machine learning pipeline – from raw data to model-ready format |
| Tools Used | Pandas, OpenRefine, Excel | Scikit-learn, Pandas, TensorFlow, NumPy |
| Example | Filling in missing values with the mean | Filling in missing values and one-hot encoding, along with standardization and train/test split |

Why data preprocessing is important in machine learning

Effective data preprocessing is a critical step in the machine learning pipeline. It ensures that the data fed into a model is clean, consistent, and informative, directly impacting its performance and reliability. Here are some key reasons why data preprocessing is important in machine learning:

- Improves model accuracy: Clean and well-structured data enables algorithms to learn patterns more effectively, leading to better predictions and outcomes.

- Reduces noise and inconsistencies: Removing irrelevant or erroneous data helps prevent misleading insights and model confusion.

- Handles missing or incomplete data: Preprocessing techniques such as imputation or deletion ensure that gaps in data don’t degrade model performance.

- Ensures data compatibility: Many machine learning algorithms require data in specific formats; preprocessing steps like normalization or encoding make the data compatible with these requirements.

- Prevents data leakage: Splitting the data into training, validation, and test sets, and fitting imputers, scalers, and encoders on the training set only, keeps information from the evaluation data out of training and ensures fair model evaluation.

- Saves time and resources: Clean, organized data streamlines model training, reduces computational costs, and shortens development cycles.

Data preprocessing techniques

Data preprocessing involves various techniques designed to prepare raw data for use in machine learning models. Each technique addresses specific challenges in the dataset and contributes to cleaner, more reliable inputs. Below are some of the most commonly used data preprocessing techniques:

- Data cleaning: Detects and corrects errors, removes duplicates, and handles missing values through strategies like imputation or deletion.

- Normalization and scaling: Adjusts numeric values to a common scale without distorting differences in the ranges, often essential for algorithms like KNN or gradient descent-based models.

- Encoding categorical variables: Converts non-numeric data (e.g., labels or categories) into numeric formats using one-hot encoding or label encoding.

- Outlier detection and removal: Identifies data points that deviate significantly from others, which can negatively impact model performance if left unaddressed.

- Dimensionality reduction: Reduces the number of input features while preserving important information, using methods like principal component analysis (PCA).

- Data splitting: Divides the dataset into training, validation, and test sets to evaluate the model effectively and prevent overfitting.
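As one illustration of the outlier detection technique listed above, here is a minimal sketch using the interquartile range (IQR) rule in Pandas. The values are made up, and the 1.5 × IQR cutoff is a common convention rather than a requirement; other thresholds or methods may suit your data better.

```python
import pandas as pd

# Hypothetical monthly charges; 400.0 is an injected extreme value.
charges = pd.Series([70.5, 85.0, 65.5, 72.0, 68.0, 400.0], name="Monthly_Charges")

# Compute the interquartile range and the conventional 1.5 * IQR fences.
q1 = charges.quantile(0.25)
q3 = charges.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Flag values outside the fences, then keep only the inliers.
is_outlier = (charges < lower) | (charges > upper)
cleaned = charges[~is_outlier]

print(charges[is_outlier])
print(cleaned)
```

Whether to remove, cap, or keep a flagged value depends on the domain; an extreme charge may be a data entry error or a genuine high-value customer.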

Data preprocessing steps in machine learning

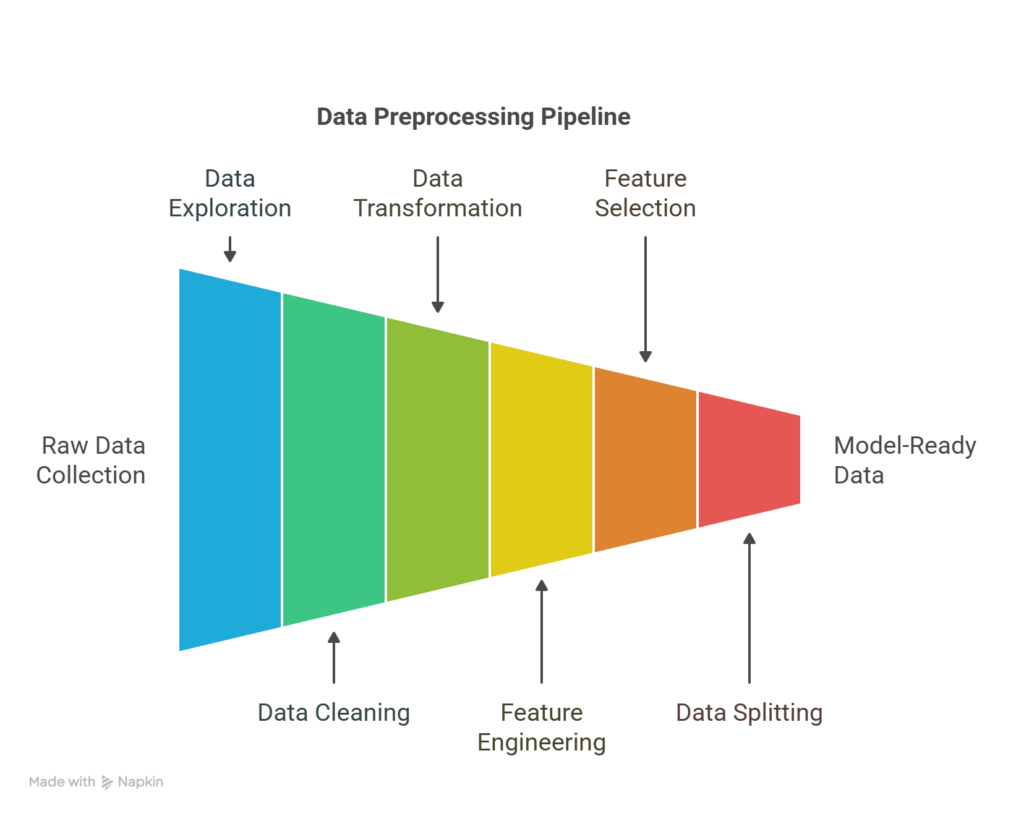

Steps in the data preprocessing pipeline

Data preprocessing is a multi-step process that prepares raw data for machine learning. Each step helps ensure the dataset is accurate, consistent, and optimized for model performance. Here’s a step-by-step breakdown of the typical data preprocessing workflow:

Data collection

The process begins with gathering data from relevant sources such as databases, APIs, sensors, or files. The quality and relevance of collected data directly influence the success of downstream tasks.

Data exploration

Before making changes, it’s essential to understand the dataset through exploratory data analysis (EDA). This step involves summarizing data characteristics, visualizing distributions, detecting patterns, and identifying anomalies or inconsistencies.
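A first pass at exploration might look like the sketch below. The small DataFrame is a stand-in for your own data, and the three calls shown are only a starting point, not a complete EDA.

```python
import numpy as np
import pandas as pd

# Hypothetical sample data with a few gaps.
df = pd.DataFrame({
    "Age": [34, np.nan, 45, 29],
    "Gender": ["Male", "Female", "Female", "Male"],
    "Monthly_Charges": [70.5, 85.0, np.nan, 65.5],
})

# Summary statistics (count, mean, std, quartiles) for numeric columns.
summary = df.describe()

# Number of missing values in each column.
missing_per_column = df.isna().sum()

# Frequency of each category in a categorical column.
gender_counts = df["Gender"].value_counts()

print(summary)
print(missing_per_column)
print(gender_counts)
```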

Data cleaning

This step addresses missing values, duplicate records, inconsistent formatting, and outliers. Cleaning ensures the dataset is reliable and free of noise or errors that could interfere with model training.
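The sketch below shows one way these cleaning tasks could be handled in Pandas. The data and column names are illustrative, and median imputation is just one of several reasonable strategies.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: one duplicated row, inconsistent text, one missing value.
raw = pd.DataFrame({
    "Customer_ID": [1, 2, 2, 3],
    "Gender": [" male", "Female", "Female", "FEMALE "],
    "Monthly_Charges": [70.5, 85.0, 85.0, np.nan],
})

# Remove exact duplicate rows.
clean = raw.drop_duplicates().copy()

# Standardize text formatting: trim whitespace, then normalize capitalization.
clean["Gender"] = clean["Gender"].str.strip().str.capitalize()

# Fill the missing numeric value with the column median.
median_charge = clean["Monthly_Charges"].median()
clean["Monthly_Charges"] = clean["Monthly_Charges"].fillna(median_charge)

print(clean)
```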

Data transformation

At this stage, the data is formatted for model compatibility. This process includes normalizing or scaling numerical values, encoding categorical variables, and transforming skewed distributions to improve model learning.
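As a rough sketch of these transformations, the example below applies a log transform to a skewed column, standardizes a numeric column with Scikit-learn's `StandardScaler`, and one-hot encodes a categorical column with `pandas.get_dummies`. The data is invented, and for brevity the scaler is fit on the full table; in a real project it would typically be fit on the training split only.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical data: Monthly_Charges is right-skewed by one large value.
df = pd.DataFrame({
    "Monthly_Charges": [20.0, 25.0, 30.0, 400.0],
    "Age": [34.0, 36.0, 45.0, 29.0],
    "Contract_Type": ["Month-to-month", "One year", "Month-to-month", "Two year"],
})

# Compress the long right tail with log(1 + x).
df["Monthly_Charges_log"] = np.log1p(df["Monthly_Charges"])

# Standardize Age to zero mean and unit variance.
scaler = StandardScaler()
df["Age_scaled"] = scaler.fit_transform(df[["Age"]]).ravel()

# One-hot encode the categorical column into indicator columns.
encoded = pd.get_dummies(df, columns=["Contract_Type"], prefix="Contract")

print(encoded.columns.tolist())
```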

Feature engineering

New features are created based on existing data to better capture underlying patterns. This process might include extracting time-based variables, combining fields, or applying domain knowledge to enrich the dataset.
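For instance, time-based and combined features could be derived as in the sketch below. The columns `signup_date` and `tenure_months` are hypothetical and not part of any dataset described in this article.

```python
import pandas as pd

# Hypothetical customer data with a signup date and tenure.
df = pd.DataFrame({
    "signup_date": pd.to_datetime(["2023-01-15", "2022-06-01", "2021-11-20"]),
    "Monthly_Charges": [70.5, 85.0, 65.5],
    "tenure_months": [12, 24, 36],
})

# Extract time-based variables from the date column.
df["signup_month"] = df["signup_date"].dt.month
df["signup_dayofweek"] = df["signup_date"].dt.dayofweek  # Monday = 0, Sunday = 6

# Combine two fields into a rough estimate of total spend.
df["total_charges_est"] = df["Monthly_Charges"] * df["tenure_months"]

print(df)
```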

Feature selection

Not all features contribute equally to model performance. This step involves selecting the most relevant variables and removing redundant or irrelevant ones, which helps reduce overfitting and improve efficiency.
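One possible approach, sketched below on synthetic data, is to drop zero-variance features with Scikit-learn's `VarianceThreshold` and then keep the highest-scoring feature with `SelectKBest`. The choice of `f_classif` as the scoring function and `k=1` are assumptions made for illustration.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, VarianceThreshold, f_classif

# Synthetic data: one informative feature, one noise feature, one constant.
rng = np.random.default_rng(0)
n = 200
y = rng.integers(0, 2, size=n)
informative = y + rng.normal(scale=0.5, size=n)  # correlated with the target
noise = rng.normal(size=n)                       # unrelated to the target
constant = np.ones(n)                            # carries no information
X = np.column_stack([informative, noise, constant])

# Step 1: remove features whose variance is zero.
variance_filter = VarianceThreshold(threshold=0.0)
X_var = variance_filter.fit_transform(X)

# Step 2: keep the single feature with the highest ANOVA F-score.
selector = SelectKBest(score_func=f_classif, k=1)
X_best = selector.fit_transform(X_var, y)

print(variance_filter.get_support())  # which of the 3 features survive step 1
print(selector.get_support())         # which of the remaining features is kept
```

Because `SelectKBest` uses the target, it should be fit on training data only when used in a real evaluation.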

Data splitting

The cleaned and engineered dataset is divided into training, validation, and test sets. This lets you evaluate the model on data it has not seen during training and estimate how well it will generalize to real-world scenarios.
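A 60/20/20 split could be produced with two calls to Scikit-learn's `train_test_split`, as in this sketch. The data is synthetic and the ratios and `random_state` are arbitrary choices. The scaler is fit on the training set only and then applied to the other two sets, so no statistics from the validation or test data reach training.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic features and a balanced binary target.
rng = np.random.default_rng(42)
X = rng.normal(loc=50, scale=10, size=(100, 2))
y = np.array([0, 1] * 50)

# First split: 60% train, 40% held out (stratified to preserve class balance).
X_train, X_tmp, y_train, y_tmp = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=42
)

# Second split: divide the held-out 40% evenly into validation and test.
X_val, X_test, y_val, y_test = train_test_split(
    X_tmp, y_tmp, test_size=0.5, stratify=y_tmp, random_state=42
)

# Fit the scaler on the training data only, then reuse it everywhere.
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_val_s = scaler.transform(X_val)
X_test_s = scaler.transform(X_test)

print(len(X_train), len(X_val), len(X_test))
```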

Final review

Before modeling, a final check ensures that all preprocessing steps were correctly applied. This stage involves verifying distributions, feature quality, and data splits to prevent issues like data leakage or imbalance.
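Some of these checks can be automated. The helper below is a hypothetical sketch, not a standard API: `review` and its parameters are names invented for this example, and the checks shown are a small subset of what a real review might cover.

```python
import pandas as pd

# Hypothetical preprocessed splits.
train = pd.DataFrame({
    "Customer_ID": [1, 2, 3, 4],
    "Age": [34.0, 36.0, 45.0, 29.0],
    "Churn": [1, 0, 1, 0],
})
test = pd.DataFrame({
    "Customer_ID": [5, 6],
    "Age": [41.0, 52.0],
    "Churn": [0, 1],
})


def review(train, test, id_col, target_col):
    """Run a few basic sanity checks on the train/test splits."""
    return {
        # No missing values should remain after imputation.
        "no_missing": not (train.isna().any().any() or test.isna().any().any()),
        # Both splits should expose the same columns in the same order.
        "same_columns": list(train.columns) == list(test.columns),
        # The same record in both splits would be a form of leakage.
        "no_id_overlap": set(train[id_col]).isdisjoint(test[id_col]),
        # Class proportions help reveal imbalance in the training set.
        "train_class_balance": train[target_col].value_counts(normalize=True).to_dict(),
    }


report = review(train, test, id_col="Customer_ID", target_col="Churn")
print(report)
```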

Data preprocessing example

Suppose you’re building a model to predict whether a customer will churn from a subscription service. Imagine you have a dataset from a telecom company with the following columns:

| Customer_ID | Age | Gender | Monthly_Charges | Contract_Type | Churn |
| --- | --- | --- | --- | --- | --- |
| 1 | 34 | Male | 70.5 | Month-to-month | Yes |
| 2 | NaN | Female | 85 | One year | No |
| 3 | 45 | Female | NaN | Month-to-month | Yes |
| 4 | 29 | Male | 65.5 | Two year | No |

Let’s walk through the preprocessing steps:

- Handling missing values

  - Fill in the missing Age with the average age (36).

  - Fill in the missing Monthly_Charges with the column median (70.5).

- Encoding categorical variables

  - Gender (Male/Female) and Contract_Type (Month-to-month, One year, Two year) are categorical.

  - Apply:

    - Label encoding for Gender (Male = 0, Female = 1)

    - One-hot encoding for Contract_Type, resulting in Contract_Month_to_month, Contract_One_year, and Contract_Two_year

- Feature scaling

  - Normalize Age and Monthly_Charges to bring them to the same scale (this is especially useful for distance-based models like KNN).

- Target encoding

  - Convert Churn (Yes/No) to binary:

    - Yes = 1

    - No = 0

Cleaned and preprocessed dataset

The table below omits Customer_ID, which is an identifier rather than a feature, and shows Age and Monthly_Charges before scaling for readability.

| Age | Gender | Monthly_Charges | Contract_Month_to_month | Contract_One_year | Contract_Two_year | Churn |
| --- | --- | --- | --- | --- | --- | --- |
| 34 | 0 | 70.5 | 1 | 0 | 0 | 1 |
| 36 | 1 | 85 | 0 | 1 | 0 | 0 |
| 45 | 1 | 70.5 | 1 | 0 | 0 | 1 |
| 29 | 0 | 65.5 | 0 | 0 | 1 | 0 |

Now the dataset is clean, numeric, and ready for model training.
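The walkthrough above could be reproduced in Pandas roughly as follows. This is a sketch under a few assumptions: the fill values are computed from the columns rather than hard-coded, the one-hot column names are whatever `pandas.get_dummies` generates from the category labels, and min-max scaling is used as the normalization method.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# The raw telecom dataset from the example.
df = pd.DataFrame({
    "Customer_ID": [1, 2, 3, 4],
    "Age": [34, np.nan, 45, 29],
    "Gender": ["Male", "Female", "Female", "Male"],
    "Monthly_Charges": [70.5, 85.0, np.nan, 65.5],
    "Contract_Type": ["Month-to-month", "One year", "Month-to-month", "Two year"],
    "Churn": ["Yes", "No", "Yes", "No"],
})

# 1. Handle missing values: mean for Age, median for Monthly_Charges.
df["Age"] = df["Age"].fillna(df["Age"].mean())
df["Monthly_Charges"] = df["Monthly_Charges"].fillna(df["Monthly_Charges"].median())

# 2. Encode categorical variables.
df["Gender"] = df["Gender"].map({"Male": 0, "Female": 1})
df = pd.get_dummies(df, columns=["Contract_Type"], prefix="Contract", dtype=int)

# 3. Encode the target as binary.
df["Churn"] = df["Churn"].map({"Yes": 1, "No": 0})

# 4. Drop the identifier, which carries no predictive signal.
df = df.drop(columns=["Customer_ID"])

# 5. Scale the numeric features to the [0, 1] range (kept in a separate copy).
scaled = df.copy()
numeric_cols = ["Age", "Monthly_Charges"]
scaled[numeric_cols] = MinMaxScaler().fit_transform(scaled[numeric_cols])

print(df)
print(scaled)
```

With only four rows, the scaler here is fit on the whole table for simplicity. In a real project, you would split the data first and fit the imputation and scaling steps on the training set only.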

Data preprocessing tools

Choosing the right tools for data preprocessing can impact the effectiveness of your machine learning workflow. Below is a list of commonly used tools, along with their strengths and limitations:

Pandas (Python)

Best suited for:

- Handling structured data (e.g., CSVs, Excel, SQL tables)

- Data cleaning, filtering, and transformation

- Quick exploratory data analysis

Not suited for:

- Large-scale distributed processing

- Complex ETL pipelines or unstructured data (e.g., images, audio)

NumPy (Python)

Best suited for:

- Numerical operations and handling multidimensional arrays

- Performance-optimized matrix computations

Not suited for:

- High-level data manipulation or cleaning

- Working directly with labeled datasets (Pandas is more appropriate)

Scikit-learn (Python)

Best suited for:

- Feature scaling, encoding, and selection

- Data splitting (train/test/validation)

- Integration with ML models and pipelines

Not suited for:

- Deep learning tasks

- Heavy data manipulation (use with Pandas)

OpenRefine

Best suited for:

- Cleaning messy, unstructured, or inconsistent data

- Reconciling and transforming data from different sources

- Non-programmers needing a GUI-based tool

Not suited for:

- Large datasets

- Integration into automated machine learning workflows

Apache Spark (with PySpark or Scala)

Best suited for:

- Processing large-scale datasets in a distributed environment

- Data preprocessing in big data pipelines

- Integration with cloud platforms (AWS, Azure, GCP)

Not suited for:

- Small-to-medium datasets (overhead may not be justified)

- Fine-grained, interactive data manipulation

Dataiku

Best suited for:

- End-to-end ML workflows, including preprocessing, modeling, and deployment

- Teams with both technical and non-technical users

- Visual programming and automation

Not suited for:

- Deep customization or low-level data control

- Lightweight personal projects or code-only workflows

TensorFlow Data Validation (TFDV)

Best suited for:

- Validating data pipelines in production ML workflows

- Detecting schema anomalies and data drift at scale

- Use within the TensorFlow Extended (TFX) ecosystem

Not suited for:

- General-purpose data cleaning

- Use outside TensorFlow or TFX environments

Which of these tools fits best ultimately depends on the size and complexity of your project and on your technical environment. Combining tools (e.g., Pandas for cleaning and Scikit-learn for feature scaling) usually provides the best results.
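As a sketch of how such a combination might look, the example below uses Pandas for loading and basic cleaning, then hands the data to a Scikit-learn `ColumnTransformer` for imputation, scaling, and encoding. The data, column groupings, and the choice of median imputation are assumptions for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Pandas: hold the tabular data and do basic cleaning.
df = pd.DataFrame({
    "Age": [34, np.nan, 45, 29],
    "Gender": ["Male", "Female", "Female", "Male"],
    "Monthly_Charges": [70.5, 85.0, np.nan, 65.5],
    "Contract_Type": ["Month-to-month", "One year", "Month-to-month", "Two year"],
})
df = df.drop_duplicates()

numeric_cols = ["Age", "Monthly_Charges"]
categorical_cols = ["Gender", "Contract_Type"]

# Scikit-learn: impute and scale numeric columns, one-hot encode categoricals.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
preprocess = ColumnTransformer([
    ("num", numeric_steps, numeric_cols),
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
])

X = preprocess.fit_transform(df[numeric_cols + categorical_cols])
print(X.shape)
```

Wrapping the steps in a single transformer makes it easier to fit them on training data and reapply the same fitted steps to validation and test data.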

Key takeaways and resources

Data preprocessing is crucial to the machine learning process. It transforms raw, messy data into a clean, structured dataset ready for model training. It includes tasks like handling missing values, encoding categorical variables, scaling features, and engineering new ones, all of which help improve model accuracy and reliability. Skipping preprocessing often leads to poor performance and misleading outcomes.

Tools like Pandas, Scikit-learn, and TensorFlow simplify the process, while OpenRefine or Excel are useful for lighter or visual tasks.

You can continue learning about different approaches to preparing data through the resources below: