This hands-on tutorial covers setting up the Couchbase Kubernetes Operator on a laptop or desktop running minikube. It walks through custom TLS certs and persistent volumes, scaling the cluster up and down, backing up and restoring the Couchbase cluster, and running a sample application with the Python SDK.

The setup uses Couchbase Operator 1.2 on open source Kubernetes via minikube, which can run on a laptop. The deployment uses command-line tools on macOS.

Overview of the hands-on tutorial

Prerequisites

The CLI commands below are for macOS. Update the package manager using the command below

    $ brew update

Install the hypervisor (VirtualBox) from the link below

https://download.virtualbox.org/virtualbox/6.0.10/VirtualBox-6.0.10-132072-OSX.dmg

Install minikube

    $ brew cask install minikube

Install kubectl

https://kubernetes.io/docs/tasks/tools/install-kubectl/#install-kubectl-on-macos

Start minikube using the command below

[HINT] Stop or exit any applications on the laptop that are not required for this tutorial. minikube and the Couchbase cluster running on minikube need a good amount of resources.

    $ sudo minikube start
    $ sudo kubectl cluster-info

Environment details for minikube on my laptop look like this

    minikube on macOS : v1.2.0

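Set the vCPUs and memory to 4 and 4 GiB so that the Couchbase Operator will work on a laptop. These settings apply when the minikube VM is created, so an existing VM needs a minikube delete followed by a minikube start to pick them up.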

    sudo minikube config set memory 4096
    sudo minikube config set cpus 4

    $ sudo minikube config view
    - cpus: 4
    - memory: 4096
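If you want to check these values from a script rather than by eye, the output above can be parsed in a few lines of Python. This is only a minimal sketch: the function name `parse_minikube_config` is hypothetical, and it assumes the `- key: value` output format shown above.

```python
def parse_minikube_config(text):
    """Parse '- key: value' lines (the format shown above) into a dict."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("- ") or ":" not in line:
            continue  # skip blank lines or anything in another format
        key, _, value = line[2:].partition(":")
        config[key.strip()] = value.strip()
    return config


# Sample text copied from the 'minikube config view' output above.
sample = "- cpus: 4\n- memory: 4096\n"
config = parse_minikube_config(sample)

# True when both values meet what this tutorial sets for the Operator.
enough = int(config["cpus"]) >= 4 and int(config["memory"]) >= 4096
print(enough)
```

In practice the text would come from running the command itself, for example through `subprocess`.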

minikube cluster details

    $ sudo kubectl get nodes
    NAME       STATUS   ROLES    AGE     VERSION
    minikube   Ready    master   3d11h   v1.15.0

Deploying Couchbase Autonomous Operator

Deploying Admission Controller

Download the Operator package to a local directory on the laptop, then cd into the files dir to access the required YAML files.

First we will create a namespace to localize the scope of our deployment

    $ sudo kubectl create namespace cbdb

Deploy the Admission Controller, which is a mutating webhook for schema validation

    $ sudo kubectl create -f admission.yaml --namespace cbdb

Query the deployment for Admission Controller

    $ sudo kubectl get deployments --namespace cbdb
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    couchbase-operator-admission   1/1     1            1           11m

Deploy Couchbase Autonomous Operator

Deploy the Custom Resource Definition

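A CustomResourceDefinition object is itself always cluster-wide in Kubernetes, but the Operator that acts on it can be scoped to the whole k8s cluster or localized to a namespace. The choice is up to the devops/k8s administrator. In the example below, the Operator is localized to a particular namespace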

    sudo kubectl create -f crd.yaml --namespace cbdb

Deploy Operator Role

    $ sudo kubectl create -f operator-role.yaml --namespace cbdb

Create service account

    $ sudo kubectl create serviceaccount couchbase-operator --namespace cbdb

Bind the service account ‘couchbase-operator’ with operator-role

    $ sudo kubectl create rolebinding couchbase-operator --role couchbase-operator --serviceaccount cbdb:couchbase-operator --namespace cbdb

Deploy Couchbase Autonomous Operator Deployment

    $ sudo kubectl create -f operator-deployment.yaml --namespace cbdb

Query deployment

    $ sudo kubectl get deployment --namespace cbdb
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    couchbase-operator             1/1     1            1           20m
    couchbase-operator-admission   1/1     1            1           20m

Deploying Couchbase Cluster

Deploy TLS certs in namespace cbdb

Create the TLS certs using the help file in the link, and make sure to use the appropriate namespace; here I have used ‘cbdb’. Link is provided here

Query the TLS secrets deployed via kubectl

    $ sudo kubectl get secrets --namespace cbdb
    NAME                     TYPE     DATA   AGE
    couchbase-operator-tls   Opaque   1      14h
    couchbase-server-tls     Opaque   2      14h

Deploy secret to access Couchbase UI

    $ sudo kubectl create -f secret.yaml --namespace cbdb

Get StorageClass details for minikube k8s cluster

    $ sudo kubectl get storageclass
    NAME                 PROVISIONER                AGE
    standard (default)   k8s.io/minikube-hostpath   3d14h

Deploy the Couchbase cluster

    $ sudo kubectl create -f couchbase-persistent-cluster-tls-k8s-minikube.yaml --namespace cbdb

The YAML file for the above deployment can be found here

If everything goes well, we should see the Couchbase cluster deployed with PVs and TLS certs

    $ sudo kubectl get pods --namespace cbdb
    NAME                                            READY   STATUS    RESTARTS   AGE
    cb-opensource-k8s-0000                          1/1     Running   0          5h58m
    cb-opensource-k8s-0001                          1/1     Running   0          5h58m
    cb-opensource-k8s-0002                          1/1     Running   0          5h57m
    couchbase-operator-864685d8b9-j72jd             1/1     Running   0          20h
    couchbase-operator-admission-7d7d594748-btnm9   1/1     Running   0          20h
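The "everything went well" check above can also be scripted. The sketch below scans the text of a `kubectl get pods` listing and reports any pod that is not `Running` or not fully ready. The helper name `not_ready_pods` is hypothetical, and the parsing assumes the default column layout shown above (NAME, READY, STATUS first).

```python
def not_ready_pods(kubectl_output):
    """Return names of pods that are not Running or not fully ready."""
    problems = []
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 3:
            continue  # not a pod row
        name, ready, status = fields[0], fields[1], fields[2]
        ready_count, _, total = ready.partition("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems


# Listing copied from the output above (without the command line itself).
listing = """NAME READY STATUS RESTARTS AGE
cb-opensource-k8s-0000 1/1 Running 0 5h58m
cb-opensource-k8s-0001 1/1 Running 0 5h58m
cb-opensource-k8s-0002 1/1 Running 0 5h57m
couchbase-operator-864685d8b9-j72jd 1/1 Running 0 20h
couchbase-operator-admission-7d7d594748-btnm9 1/1 Running 0 20h
"""
print(not_ready_pods(listing))
```

An empty list means every pod in the namespace is up; any name returned is worth a closer look with `kubectl describe pod`.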

Access the Couchbase UI

Get the service details for Couchbase cluster

    $ sudo kubectl get svc --namespace cbdb
    NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                          AGE
    cb-opensource-k8s-ui   NodePort   10.100.90.161   <none>        8091:30477/TCP,18091:30184/TCP   6h11m

Expose the CB cluster via CB UI service

    $ sudo kubectl port-forward service/cb-opensource-k8s-ui 8091:8091 --namespace cbdb
    Forwarding from 127.0.0.1:8091 -> 8091
    Forwarding from [::1]:8091 -> 8091

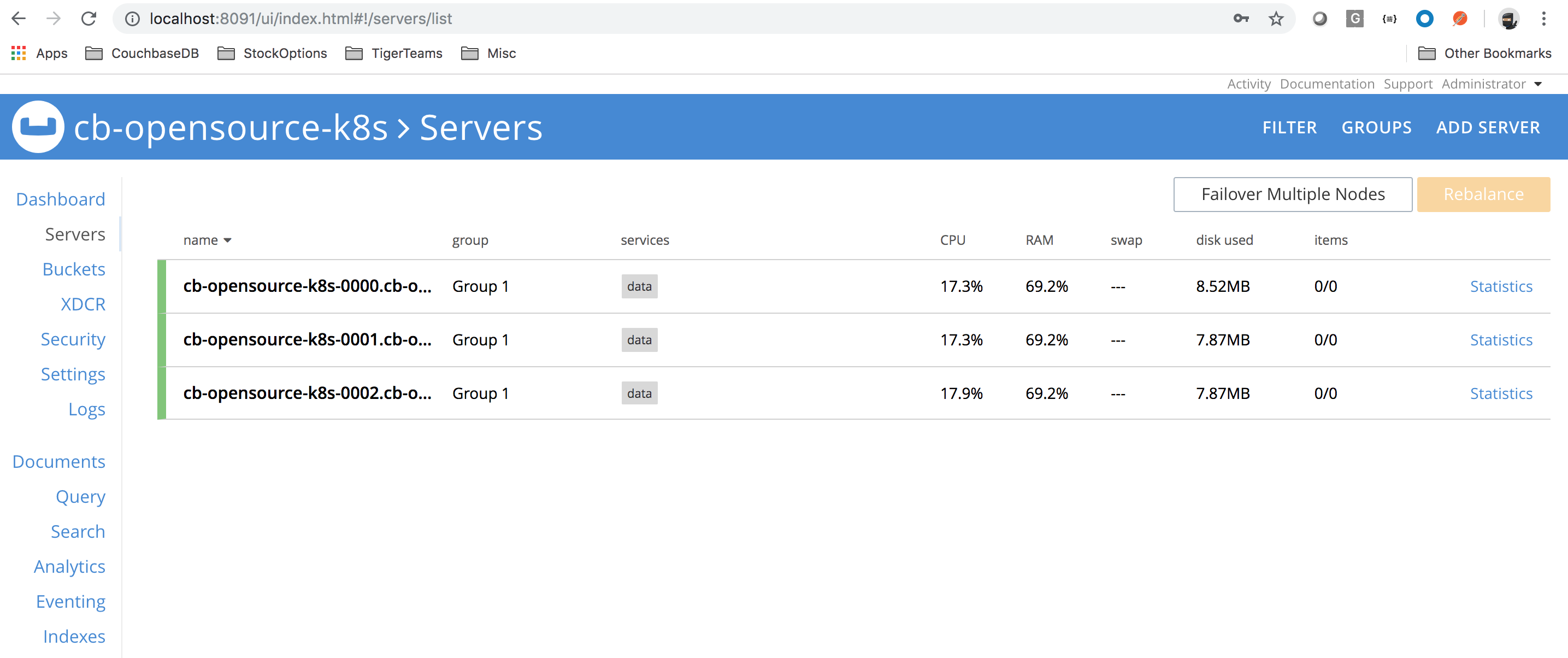

Accessing Couchbase UI

Log in at http://localhost:8091 to access the CB UI

Verify the root CA to confirm that the custom x509 cert is being used

Click Security->Root Certificate

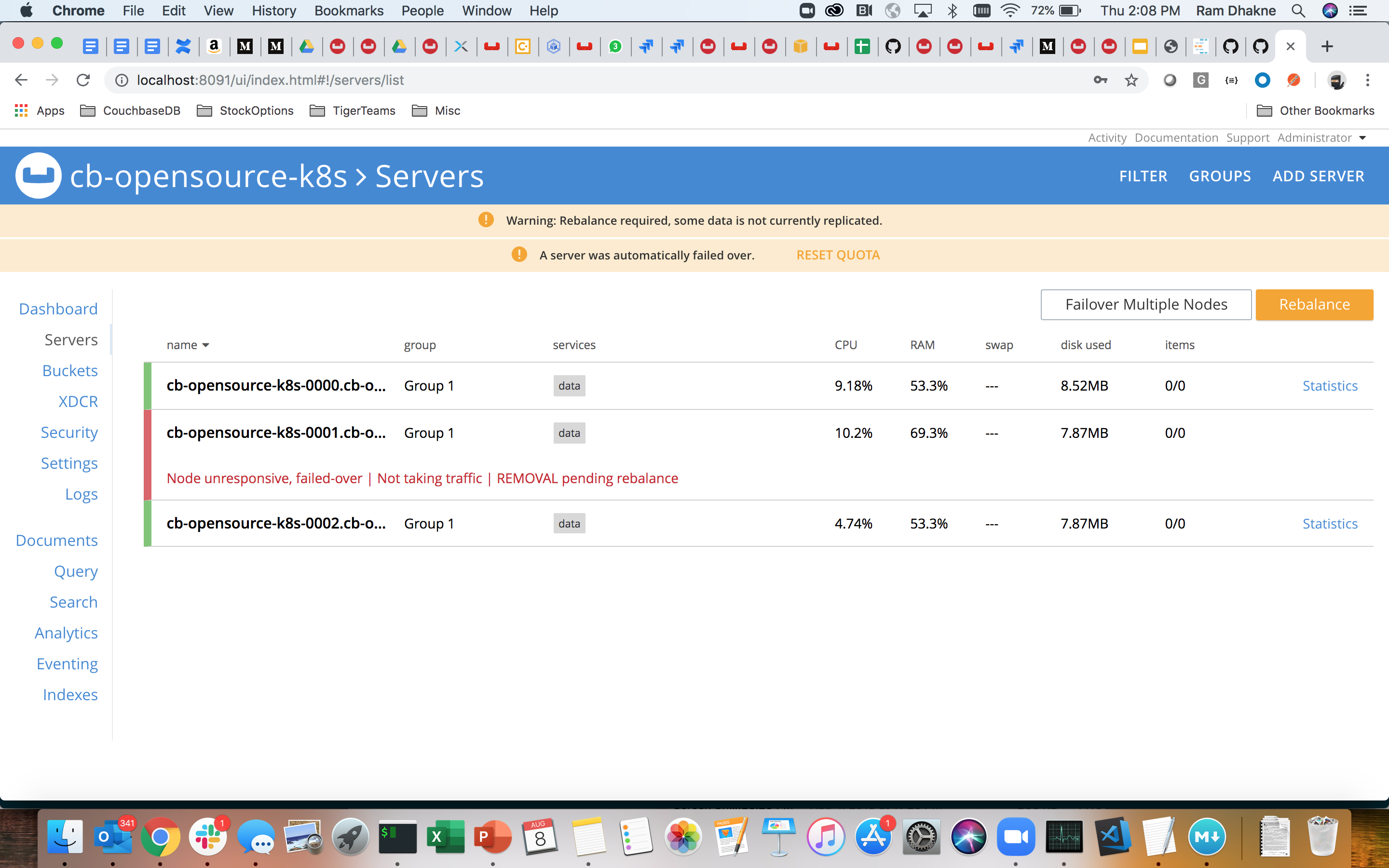

Delete a pod at random; let's delete pod 0001

    $ sudo kubectl delete pod cb-opensource-k8s-0001 --namespace cbdb
    pod "cb-opensource-k8s-0001" deleted

The server fails over automatically, depending on the autoFailoverTimeout setting

A lost Couchbase node is auto-recovered by the Couchbase Operator, as it is constantly watching the cluster definition

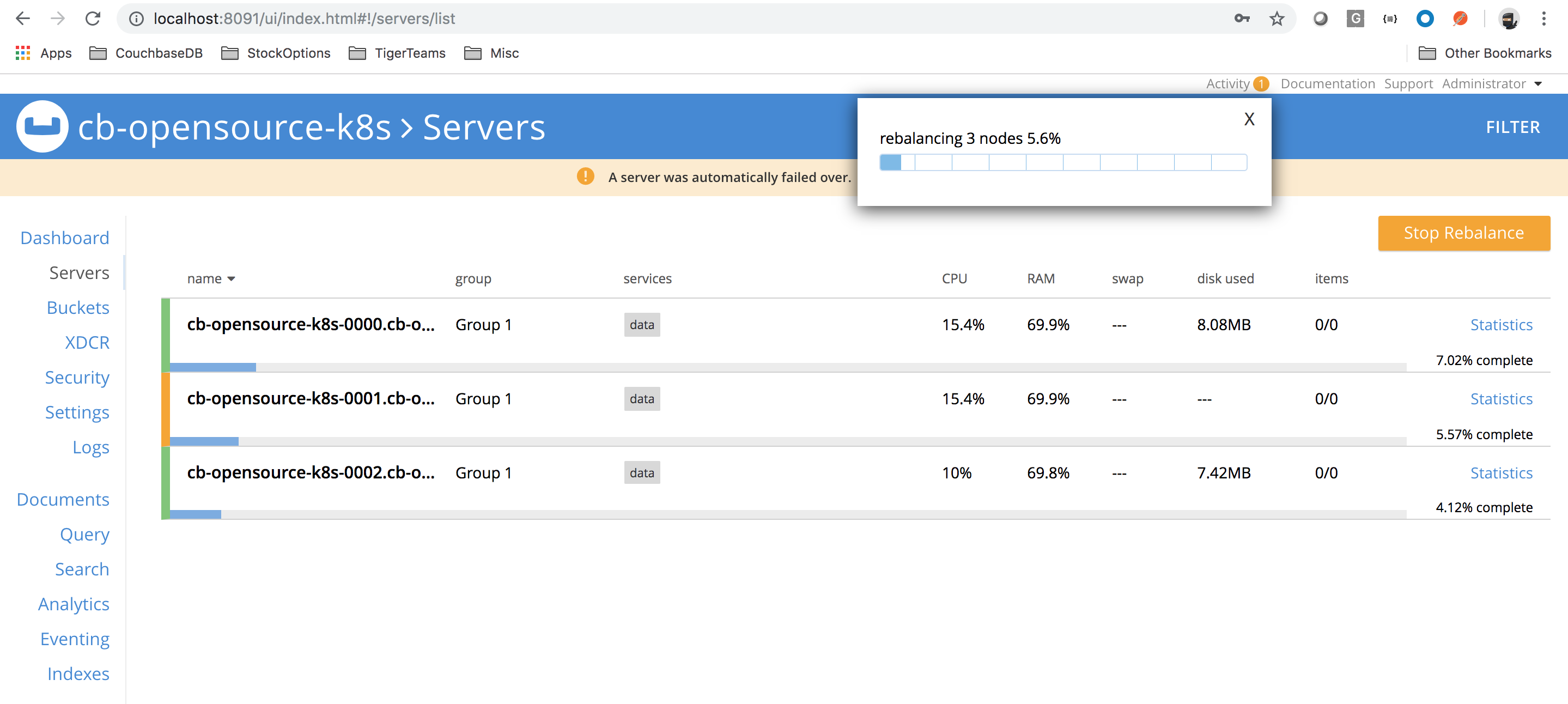

Scaling up/down

It's a single change to scale up or scale down.

Scaling up

Change size to 4 from 3

    --- a/opensrc-k8s/cmd-line/files/couchbase-persistent-cluster-tls-k8s-minikube.yaml
         enableIndexReplica: false
         compressionMode: passive
       servers:
    -  - size: 3
    +  - size: 4
         name: data
         services:
         - data

Run the command below

    sudo kubectl apply -f couchbase-persistent-cluster-tls-k8s-minikube.yaml --namespace cbdb

Boom!

Cluster scales up.

Caution: The K8s cluster needs to have enough resources to scale up.

Scaling down

It's the exact opposite of scaling up: reduce the cluster size to any number, but not less than 3.

Couchbase MVP is 3 nodes.
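The size change and the 3-node floor can be captured in a small helper. This is a minimal sketch, not part of the Operator: `set_server_size` and `MIN_NODES` are hypothetical names, the dict mirrors only the `servers` fragment shown in the diff above, and it assumes a single server group so that the group size equals the cluster size.

```python
import copy

MIN_NODES = 3  # the minimum cluster size stated in this tutorial


def set_server_size(cluster, group_name, new_size):
    """Return a copy of the cluster definition with one group's size changed."""
    if new_size < MIN_NODES:
        raise ValueError("size must be at least %d" % MIN_NODES)
    updated = copy.deepcopy(cluster)  # leave the caller's definition untouched
    for group in updated["spec"]["servers"]:
        if group["name"] == group_name:
            group["size"] = new_size
            return updated
    raise KeyError("no server group named %r" % group_name)


# Fragment of the cluster definition, as a plain dict (assumed layout).
cluster = {"spec": {"servers": [{"size": 3, "name": "data", "services": ["data"]}]}}

scaled = set_server_size(cluster, "data", 4)
print(scaled["spec"]["servers"][0]["size"])
```

The updated definition would still need to be written back to the YAML file and applied with `kubectl apply`, as in the scaling-up step.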

Backup and Restore Couchbase server

Back up the CB cluster via cbbackupmgr

Create a backup repo on the given backup mount/volume

    $ cbbackupmgr config --archive /tmp/data/ --repo myBackupRepo
    Backup repository `myBackupRepo` created successfully in archive `/tmp/data/`

Backup

    $ cbbackupmgr backup -c couchbase://127.0.0.1 -u Administrator -p password -a /tmp/data/ -r myBackupRepo

Restore

    # use --force-updates to use all updates from backup repo rather than current state of cluster
    $ cbbackupmgr restore -c couchbase://127.0.0.1 -u Administrator -p password -a /tmp/data/ -r myBackupRepo --force-updates
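If backups are driven from a script, it can help to build the `cbbackupmgr` argument lists in one place. The sketch below only assembles the commands shown above, using the same flags; the helper names `backup_command` and `restore_command` are hypothetical, and nothing is executed here.

```python
def backup_command(cluster, user, password, archive, repo):
    """Build the argument list for 'cbbackupmgr backup' as used above."""
    return ["cbbackupmgr", "backup", "-c", cluster, "-u", user,
            "-p", password, "-a", archive, "-r", repo]


def restore_command(cluster, user, password, archive, repo, force_updates=False):
    """Build the argument list for 'cbbackupmgr restore' as used above."""
    cmd = ["cbbackupmgr", "restore", "-c", cluster, "-u", user,
           "-p", password, "-a", archive, "-r", repo]
    if force_updates:
        # Prefer the updates in the backup repo over the cluster's current state.
        cmd.append("--force-updates")
    return cmd


# Same values as in the commands above.
args = ("couchbase://127.0.0.1", "Administrator", "password",
        "/tmp/data/", "myBackupRepo")
print(" ".join(backup_command(*args)))
print(" ".join(restore_command(*args, force_updates=True)))
```

Either list could then be passed to `subprocess.run(cmd, check=True)` on a host where `cbbackupmgr` is installed. Keeping the arguments as a list avoids shell quoting problems with passwords.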

Run sample Python application from a different namespace

Create namespace for app tier

    $ sudo kubectl create namespace apps
    namespace/apps created

Deploy the app pod

    $ sudo kubectl create -f app_pod.yaml --namespace apps
    pod/app01 created

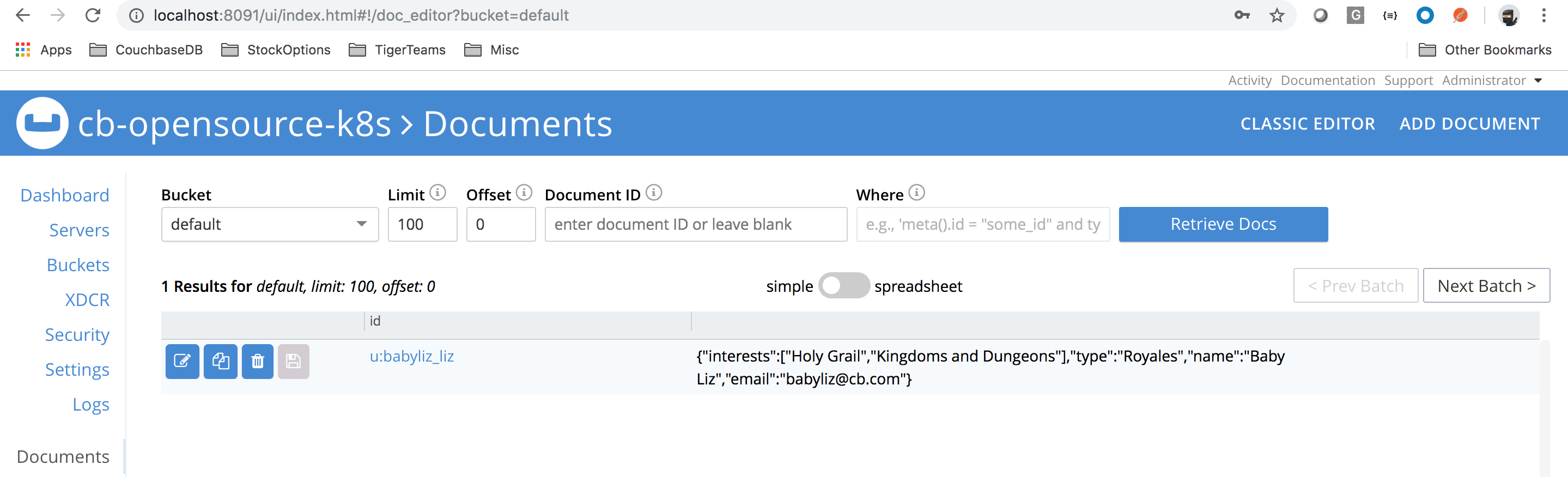

Run the sample Python program to upsert a document into the Couchbase cluster

Log in to the pod's shell by exec'ing into the app pod

    $ sudo kubectl exec -ti app01 bash --namespace apps

Prep the pod for installing the Python SDK

Edit the program with the FQDN of the Couchbase pod

Exec into the Couchbase pod with the command below

    $ sudo kubectl exec -ti cb-opensource-k8s-0000 bash --namespace cbdb

Get the FQDN of the Couchbase pod

    root@cb-opensource-k8s-0000:/# hostname -f
    cb-opensource-k8s-0000.cb-opensource-k8s.cbdb.svc.cluster.local

Edit the program with the correct connection string

The connection string for me looks like this:

    cluster = Cluster('couchbase://cb-opensource-k8s-0000.cb-opensource-k8s.cbdb.svc.cluster.local')

This works across namespaces because both namespaces in minikube share the same kube-dns
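The FQDN follows a regular pattern, so the connection string can be assembled from its parts instead of being copied from `hostname -f`. This is a small sketch under assumptions: the helper name `couchbase_connection_string` is hypothetical, and it assumes the default `svc.cluster.local` suffix seen in the `hostname -f` output above.

```python
def couchbase_connection_string(pod, cluster, namespace):
    """Build a couchbase:// URL from the pod, cluster, and namespace names.

    Assumes the default Kubernetes DNS suffix 'svc.cluster.local'.
    """
    fqdn = "{}.{}.{}.svc.cluster.local".format(pod, cluster, namespace)
    return "couchbase://" + fqdn


# Names taken from this tutorial's deployment.
print(couchbase_connection_string("cb-opensource-k8s-0000", "cb-opensource-k8s", "cbdb"))
```

The result is the string that goes into the `Cluster(...)` call in the sample program.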

Run the program

    root@app01:/# python python_sdk_example.py
    CB Server connection PASSED
    Open the bucket...
    Done...
    Upserting a document...
    Done...
    Getting non-existent key. Should fail..
    Got exception for missing doc
    Inserting a doc...
    Done...
    Getting an existent key. Should pass...
    Value for key 'babyliz_liz'
    Value for key 'babyliz_liz'
    {u'interests': [u'Holy Grail', u'Kingdoms and Dungeons'], u'type': u'Royales', u'name': u'Baby Liz', u'email': u'babyliz@cb.com'}
    Delete a doc with key 'u:baby_arthur'...
    Done...
    Value for key [u:baby_arthur]
    Got exception for missing doc for key [u:baby_arthur] with error
    Closing connection to the bucket...
    root@app01:/#

The upserted document should look like this
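Going by the program output above, the document stored under the key `babyliz_liz` carries the four fields below. The snippet simply rebuilds that value as a Python dict and prints it as JSON; it is a sketch based on the printed output, not a copy of the sample program.

```python
import json

# Field values copied from the program output for key 'babyliz_liz'.
document = {
    "name": "Baby Liz",
    "email": "babyliz@cb.com",
    "type": "Royales",
    "interests": ["Holy Grail", "Kingdoms and Dungeons"],
}

# Pretty-print the document roughly as a JSON viewer would show it.
print(json.dumps(document, indent=2, sort_keys=True))
```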

Conclusion

We deployed Couchbase Autonomous Operator 1.2 on minikube v1.2.0. The Couchbase cluster requires the admission controller and RBAC with a role limited to the namespace (more secure). The CRD deployed has cluster-wide scope, but that is by design. The Couchbase cluster deployed had PV support and custom x509 certs.

We saw how the Couchbase cluster self-heals and brings itself back up to a healthy state without any user intervention.

Backup and restore are critical for Couchbase Server. cbbackupmgr is our recommended utility for performing backups and restores. We also saw how to install the Couchbase Python SDK in an application pod deployed in its own namespace, and how that application can talk to Couchbase Server and perform CRUD operations.

Cleanup (Optional)

Perform the steps below to remove all the k8s assets created.

    sudo kubectl delete -f secret.yaml --namespace cbdb
    sudo kubectl delete -f couchbase-persistent-cluster-tls-k8s-minikube.yaml --namespace cbdb
    sudo kubectl delete rolebinding couchbase-operator --namespace cbdb
    sudo kubectl delete serviceaccount couchbase-operator --namespace cbdb
    sudo kubectl delete -f operator-deployment.yaml --namespace cbdb
    sudo kubectl get deployments --namespace cbdb
    sudo kubectl delete -f admission.yaml --namespace cbdb
    sudo kubectl delete pod app01 --namespace apps

Hi Ram,

Thanks, this is a good, comprehensive document. A few points I was looking for:

Can I edit the cluster config to 0 for all services to make all nodes disappear in the web console?

Also, what is the method or process to stop the running pods and processes if I want to restart the entire cluster in the autonomous framework? Is there any way to do that without losing data?

Sometimes operations like rebalancing go crazy and never stop (I see a lot of open tickets on CB with no resolution), so in this scenario I would like to restart the cluster if possible.