Introduction

Today, server infrastructure runs in on-premise data centers, private data centers, or public cloud data centers. The machines are physical bare-metal hosts, virtual machines (VMs) running on hypervisors, or small containers, such as Docker containers, on top of physical or virtual machines. In the on-premise case, the machines might physically sit in a local lab. In a private data center, hosts you have procured yourself are placed in shared physical space at a third-party data center and accessed remotely. In public data centers such as AWS, GCP, Azure, and OCI, machines are reserved or created on demand to meet highly scalable needs and are also accessed remotely. Each option has its own advantages in terms of scalability, security, reliability, management, and cost.

Product development teams may need many servers during the SDLC process. Suppose a team has chosen a private data center with its own physical machines running Xen Server. The challenge is then how to manage the VM lifecycle, from provisioning to termination, in a way similar to cloud environments, with lean and agile processes.

This document aims to provide the basic infrastructure model, the architecture, a minimal set of APIs, and sample code snippets so that you can easily build dynamic infrastructure environments.

Benefits

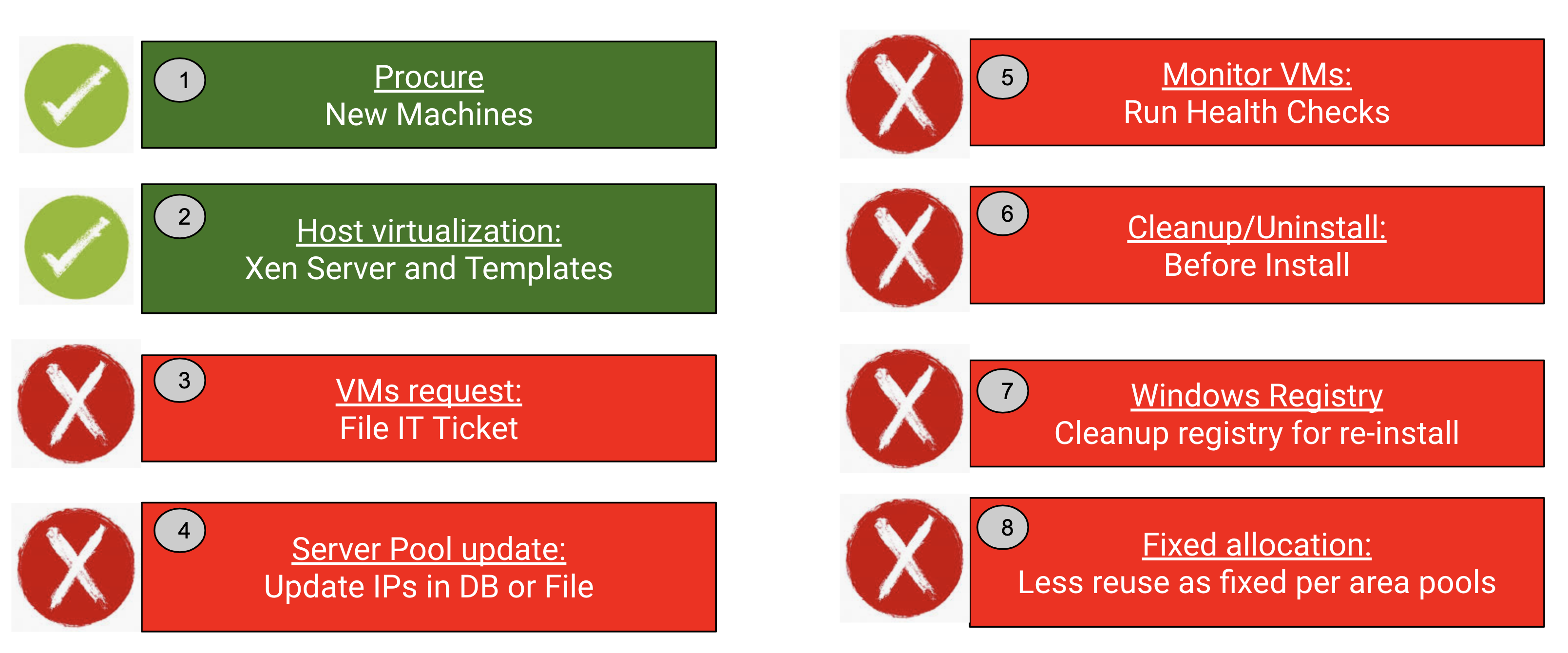

First, let us look at the typical sequence of steps followed in this server infrastructure process. You may recognize it:

- Procurement of new machines by IT

- Host virtualization: IT installs Xen Server and creates the VM templates

- Dev and test teams request static VMs from IT through tickets (say, JIRA)

- Maintain the received VM IPs in a database or a static file, or hardcode them in configuration files or CI tools, such as the Jenkins config.xml

- Monitor the VMs with health checks to make sure they are healthy before using them to install the products

- Clean up or uninstall before or after server installations

- Windows might need some registry cleanup before installing the product

- Fixed allocation of VMs to an area or a team, or dedicated to an engineer, might have been done

Now, how can you make this process leaner and more agile? Can you eliminate most of the above steps with simple automation?

Yes. In our environment, we had more than 1,000 VMs, and this is mainly what we set out to achieve:

"Disposable VMs on demand, as required during test execution. Solve Windows cleanup issues with regular test cycles."

As shown below, with the dynamic VMs server manager API service, 6 of the 8 steps can be eliminated, and the entire product team gets a view of seemingly unlimited infrastructure. Only the first two steps, procurement and host virtualization, are still needed. In effect, this saves both time and cost.

Typical flow to get infrastructure

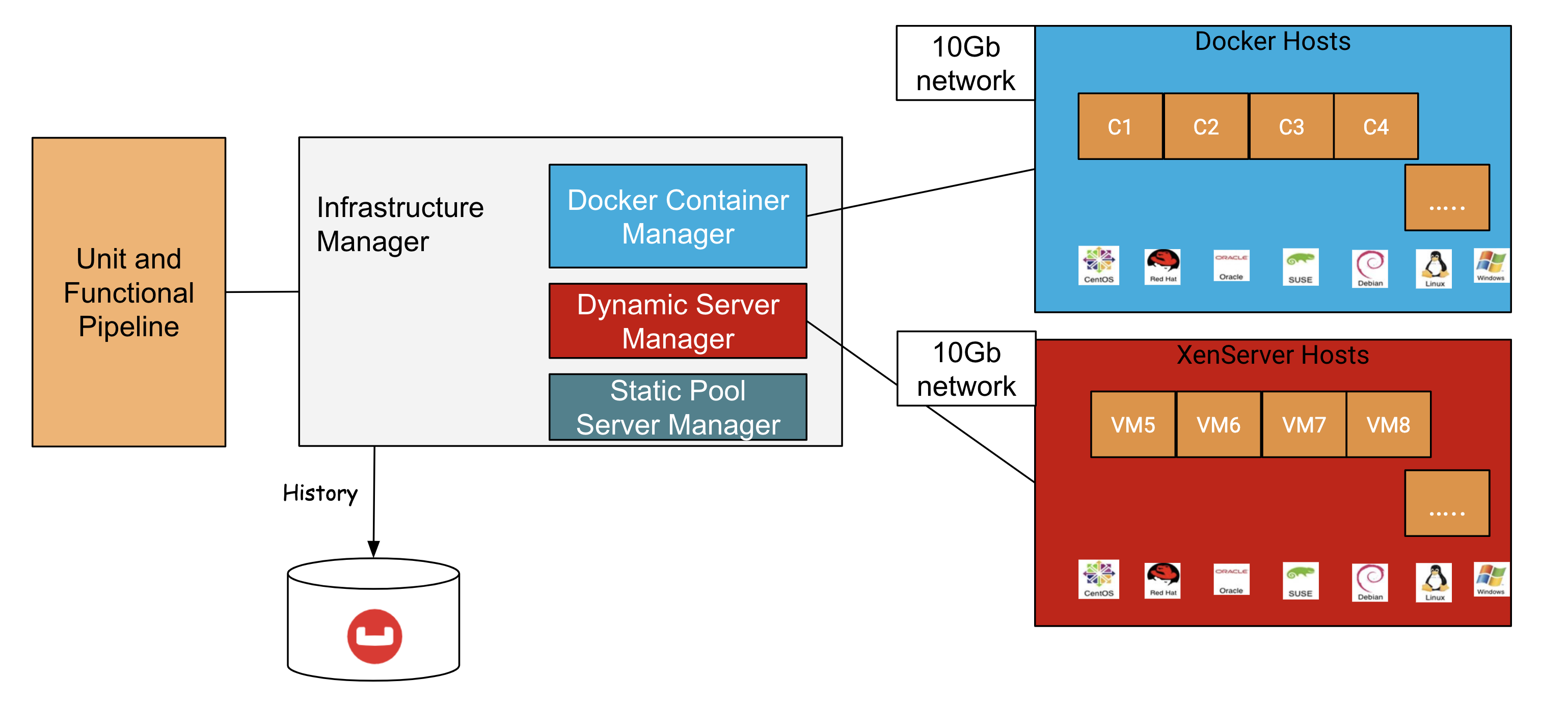

Dynamic infrastructure model

The picture below shows our proposed infrastructure for a typical server product environment: 80% Docker containers, 15% dynamic VMs, and 5% static pooled VMs for special cases. This distribution can be adjusted based on what works best in your environment.

Infrastructure model

From here on, we will focus on the dynamic VM server manager part.

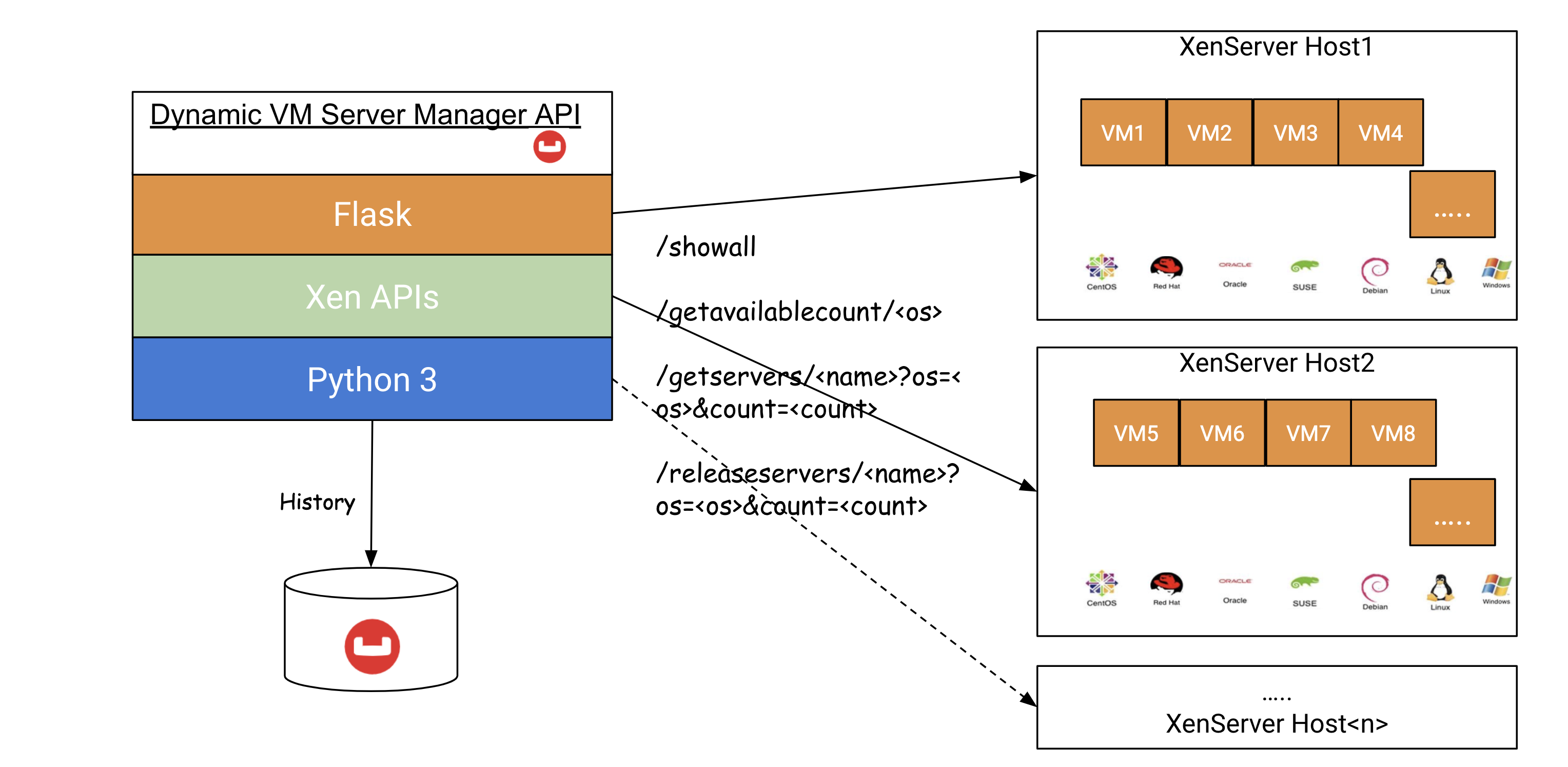

Dynamic Server Manager architecture

The dynamic VMs server manager is a simple API service that exposes the REST APIs below, which can be used anywhere in an automated process. As the tech stack shows, Python 3 and the Python-based Xen APIs are used for the actual creation of VMs on the XenServer host. Flask is used to build the REST service layer. The OS can be any platform your product supports, such as windows2016, centos7, centos8, debian10, ubuntu18, oel8, or suse15.

Dynamic VMs server manager architecture

Save the VM history to track usage and the time taken to provision or terminate, so that it can be analyzed later. To store the JSON documents, Couchbase Enterprise Server, a NoSQL document database, can be used.

Simple REST APIs

| Method | URI(s) | Purpose |
|---|---|---|
| GET | `/showall` | Lists all VMs in JSON format |
| GET | `/getavailablecount/<os>` | Gets the count of available VMs for the given `<os>` |
| GET | `/getservers/<username>?os=<os>`, `/getservers/<username>?os=<os>&count=<count>`, `/getservers/<username>?os=<os>&count=<count>&cpus=<cpus>&mem=<mem>`, `/getservers/<username>?os=<os>&expiresin=<minutes>` | Provisions the given `<count>` VMs of `<os>`. The CPU count and memory size can also be specified. The `expiresin` parameter, in minutes, sets the expiry (auto termination) of the VMs. |
| GET | `/releaseservers/<username>?os=<os>`, `/releaseservers/<username>?os=<os>&count=<count>` | Terminates the given `<count>` VMs of `<os>` |
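To make the URI shapes concrete, here is a minimal Python sketch that builds the request URLs from their parts. The helper names (`build_getservers_url`, `build_releaseservers_url`) are ours and not part of the service; the path and query parameter names follow the curl examples later in this article.

```python
from urllib.parse import quote, urlencode


def build_getservers_url(base_url, username, os_name, count=1,
                         cpus=None, mem=None, expiresin=None):
    """Build a /getservers URL; optional parameters are added only when given."""
    params = {"os": os_name, "count": count}
    optional = {"cpus": cpus, "mem": mem, "expiresin": expiresin}
    params.update({k: v for k, v in optional.items() if v is not None})
    return "{}/getservers/{}?{}".format(
        base_url.rstrip("/"), quote(username, safe=""), urlencode(params))


def build_releaseservers_url(base_url, username, os_name, count=1):
    """Build the matching /releaseservers URL for the same name prefix."""
    return "{}/releaseservers/{}?{}".format(
        base_url.rstrip("/"), quote(username, safe=""),
        urlencode({"os": os_name, "count": count}))


print(build_getservers_url("http://127.0.0.1:5000", "demoserver", "centos", count=3))
```

Keeping URL construction in one place makes it easier to reuse the same name prefix for the release call, which is how the service finds the VMs to terminate.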

Prerequisites for Xen hosts targeted for dynamic VMs

- Identify the Xen hosts targeted for dynamic VMs

- Copy or create the VM templates

- Move these Xen hosts to a separate VLAN/subnet (work with IT) so that IPs are recycled

Implementation

At a high level:

- Create functions for each REST API

- Call a common service to perform the different REST actions

- Understand creating the Xen session, getting the records, cloning a VM from a template, attaching the right disk, and waiting for VM creation and for the IP to be received, as well as deleting VMs and deleting disks

- Start a thread for auto expiry of VMs

- Read the common configuration, for example in .ini format

- Understand working with the Couchbase database and saving documents

- Test all APIs with the required OSes and parameters

- Fix issues, if any

- Run a POC with a few Xen hosts
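One of the steps above is starting a thread for VM auto expiry. A minimal sketch of that idea, using only the standard library, might look like the following. The function name `schedule_expiry` and the `release_fn` callback (which would terminate the VM by name) are assumptions for illustration and are not taken from the service code.

```python
import threading


def schedule_expiry(vm_name, expiry_minutes, release_fn):
    """Start a daemon timer that calls release_fn(vm_name) after expiry_minutes."""
    timer = threading.Timer(expiry_minutes * 60, release_fn, args=(vm_name,))
    timer.daemon = True  # a pending expiry should not block service shutdown
    timer.start()
    return timer  # keep the handle so the expiry can be cancelled on early release


if __name__ == "__main__":
    released = []
    t = schedule_expiry("demoserver1", 0.001, released.append)  # about 60 ms
    t.join(2)
    print(released)
```

If a VM is released before it expires, calling `cancel()` on the returned timer avoids a second, redundant delete.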

The code snippets below can help you understand this even better.

APIs creation

```python
# Assumed setup (not shown in the original snippet)
import json
import logging

from flask import Flask, request

app = Flask(__name__)
log = logging.getLogger(__name__)


@app.route('/showall/<os>')
@app.route("/showall")
def showall_service(os=None):
    count, _ = get_all_xen_hosts_count(os)
    log.info("--> count: {}".format(count))
    all_vms = {}
    for xen_host_ref in range(1, count + 1):
        log.info("Getting xen_host_ref=" + str(xen_host_ref))
        all_vms[xen_host_ref] = perform_service(xen_host_ref, service_name='listvms', os=os)
    return json.dumps(all_vms, indent=2, sort_keys=True)


@app.route('/getavailablecount/<os>')
@app.route('/getavailablecount')
def getavailable_count_service(os='centos'):
    """
    Calculate the available count:
        Get total CPUs, total memory
        Get free CPUs, free memory
        Get all the VMs - CPUs and memory allocated
        Get each OS template - CPUs and memory
        Available count1 = (Free CPUs - VMs CPUs)/OS_Template_CPUs
        Available count2 = (Free Memory - VMs Memory)/OS_Template_Memory
        Return min(count1,count2)
    """
    count, available_counts, xen_hosts = get_all_available_count(os)
    log.info("{},{},{},{}".format(count, available_counts, xen_hosts, reserved_count))
    if count > reserved_count:
        count -= reserved_count
        log.info("Less reserved count: {},{},{},{}".format(count, available_counts, xen_hosts,
                                                           reserved_count))
    return str(count)


# /getservers/username?count=number&os=centos&ver=6&expiresin=30
@app.route('/getservers/<username>')
def getservers_service(username):
    global reserved_count
    if request.args.get('count'):
        vm_count = int(request.args.get('count'))
    else:
        vm_count = 1
    os_name = request.args.get('os')
    if request.args.get('cpus'):
        cpus_count = request.args.get('cpus')
    else:
        cpus_count = "default"
    if request.args.get('mem'):
        mem = request.args.get('mem')
    else:
        mem = "default"
    if request.args.get('expiresin'):
        exp = int(request.args.get('expiresin'))
    else:
        exp = MAX_EXPIRY_MINUTES
    if request.args.get('format'):
        output_format = request.args.get('format')
    else:
        output_format = "servermanager"
    xhostref = None
    if request.args.get('xhostref'):
        xhostref = request.args.get('xhostref')
    # Count the VMs being created as reserved so parallel requests see less capacity
    reserved_count += vm_count
    if xhostref:
        log.info("--> VMs on given xenhost" + xhostref)
        vms_ips_list = perform_service(xhostref, 'createvm', os_name, username, vm_count,
                                       cpus=cpus_count, maxmemory=mem, expiry_minutes=exp,
                                       output_format=output_format)
        return json.dumps(vms_ips_list)
    ...


# /releaseservers/{username}
@app.route('/releaseservers//')  # path variables of this route are missing in the source
@app.route('/releaseservers/<username>')
def releaseservers_service(username):
    if request.args.get('count'):
        vm_count = int(request.args.get('count'))
    else:
        vm_count = 1
    os_name = request.args.get('os')
    delete_vms_res = []
    for vm_index in range(vm_count):
        # With count > 1, the VMs are named <username>1, <username>2, ...
        if vm_count > 1:
            vm_name = username + str(vm_index + 1)
        else:
            vm_name = username
        xen_host_ref = get_vm_existed_xenhost_ref(vm_name, 1, None)
        log.info("VM to be deleted from xhost_ref=" + str(xen_host_ref))
        if xen_host_ref != 0:
            delete_per_xen_res = perform_service(xen_host_ref, 'deletevm', os_name, vm_name, 1)
            for deleted_vm_res in delete_per_xen_res:
                delete_vms_res.append(deleted_vm_res)
    if len(delete_vms_res) < 1:
        return "Error: VM " + username + " doesn't exist"
    else:
        return json.dumps(delete_vms_res, indent=2, sort_keys=True)


def perform_service(xen_host_ref=1, service_name='list_vms', os="centos", vm_prefix_names="",
                    number_of_vms=1, cpus="default", maxmemory="default",
                    expiry_minutes=MAX_EXPIRY_MINUTES, output_format="servermanager",
                    start_suffix=0):
    xen_host = get_xen_host(xen_host_ref, os)
    ...


def main():
    # options = parse_arguments()
    set_log_level()
    app.run(host='0.0.0.0', debug=True)
```
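The docstring of `getavailable_count_service` describes the capacity arithmetic only in words. As a rough sketch, under the assumption that CPUs are plain counts and memory is in bytes, it could be computed as below. The function name and parameters are hypothetical; the real service gathers these numbers from the Xen hosts.

```python
def available_vm_count(free_cpus, vms_cpus, free_memory, vms_memory,
                       template_cpus, template_memory, reserved_count=0):
    """Return how many more VMs of one OS template fit on a host."""
    by_cpu = (free_cpus - vms_cpus) // template_cpus
    by_memory = (free_memory - vms_memory) // template_memory
    # The scarcer resource decides; never report a negative capacity
    count = max(0, min(by_cpu, by_memory))
    # Same rule as the service: subtract in-progress (reserved) VMs when possible
    if count > reserved_count:
        count -= reserved_count
    return count


GIB = 1024 ** 3
print(available_vm_count(free_cpus=64, vms_cpus=16,
                         free_memory=128 * GIB, vms_memory=32 * GIB,
                         template_cpus=6, template_memory=6 * GIB,
                         reserved_count=3))
```

In this example CPUs allow 8 more VMs and memory allows 16, so the CPU bound wins and, after subtracting 3 reserved VMs, the result is 5.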

Creating the Xen session

```python
import XenAPI


def get_xen_session(xen_host_ref=1, os="centos"):
    xen_host = get_xen_host(xen_host_ref, os)
    if not xen_host:
        return None
    url = "http://" + xen_host['host.name']
    log.info("\nXen Server host: " + xen_host['host.name'] + "\n")
    try:
        session = XenAPI.Session(url)
        session.xenapi.login_with_password(xen_host['host.user'], xen_host['host.password'])
    except XenAPI.Failure as f:
        error = "Failed to acquire a session: {}".format(f.details)
        log.error(error)
        return error
    return session
```

List VMs

```python
def list_vms(session):
    vm_count = 0
    vms = session.xenapi.VM.get_all()
    log.info("Server has {} VM objects (this includes templates):".format(len(vms)))
    log.info("-----------------------------------------------------------")
    log.info("S.No.,VMname,PowerState,Vcpus,MaxMemory,Networkinfo,Description")
    log.info("-----------------------------------------------------------")
    vm_details = []
    for vm in vms:
        network_info = 'N/A'
        record = session.xenapi.VM.get_record(vm)
        # Skip templates and the control domain (dom0)
        if not (record["is_a_template"]) and not (record["is_control_domain"]):
            log.debug(record)
            vm_count = vm_count + 1
            name = record["name_label"]
            name_description = record["name_description"]
            power_state = record["power_state"]
            vcpus = record["VCPUs_max"]
            memory_static_max = record["memory_static_max"]
            if record["power_state"] != "Halted":
                ip_ref = session.xenapi.VM_guest_metrics.get_record(record['guest_metrics'])
                network_info = ','.join([str(elem) for elem in ip_ref["networks"].values()])
            else:
                continue  # Listing only running VMs
            vm_info = {'name': name, 'power_state': power_state, 'vcpus': vcpus,
                       'memory_static_max': memory_static_max, 'networkinfo': network_info,
                       'name_description': name_description}
            vm_details.append(vm_info)
            log.info(vm_info)
    log.info("Server has {} VM objects and {} templates.".format(vm_count, len(vms) - vm_count))
    log.debug(vm_details)
    return vm_details
```

Create VM

```python
import datetime
import sys
import time


def create_vm(session, os_name, template, new_vm_name, cpus="default", maxmemory="default",
              expiry_minutes=MAX_EXPIRY_MINUTES):
    error = ''
    vm_os_name = ''
    vm_ip_addr = ''
    prov_start_time = time.time()
    try:
        log.info("\n--- Creating VM: " + new_vm_name + " using " + template)
        # Pick the physical interface (PIF) with the lowest device name
        pifs = session.xenapi.PIF.get_all_records()
        lowest = None
        for pifRef in pifs.keys():
            if (lowest is None) or (pifs[pifRef]['device'] < pifs[lowest]['device']):
                lowest = pifRef
        log.debug("Choosing PIF with device: {}".format(pifs[lowest]['device']))
        ref = lowest
        mac = pifs[ref]['MAC']
        device = pifs[ref]['device']
        mode = pifs[ref]['ip_configuration_mode']
        ip_addr = pifs[ref]['IP']
        net_mask = pifs[ref]['netmask']  # the original read the 'IP' field here by mistake
        gateway = pifs[ref]['gateway']
        dns_server = pifs[ref]['DNS']
        log.debug("{},{},{},{},{},{},{}".format(mac, device, mode, ip_addr, net_mask, gateway,
                                                dns_server))

        # List all the VM objects
        vms = session.xenapi.VM.get_all_records()
        log.debug("Server has {} VM objects (this includes templates)".format(len(vms)))
        templates = []
        all_templates = []
        for vm in vms:
            record = vms[vm]
            res_type = "VM"
            if record["is_a_template"]:
                res_type = "Template"
                all_templates.append(vm)
                # Look for the given template
                if record["name_label"].startswith(template):
                    templates.append(vm)
                    log.debug(" Found %8s with name_label = %s" % (res_type,
                                                                   record["name_label"]))
        log.debug("Server has {} templates and {} VM objects.".format(
            len(all_templates), len(vms) - len(all_templates)))
        log.debug("Choosing a {} template to clone".format(template))
        if not templates:
            log.error("Could not find any {} templates. Exiting.".format(template))
            sys.exit(1)
        template_ref = templates[0]
        log.debug(" Selected template: {}".format(
            session.xenapi.VM.get_name_label(template_ref)))

        # Retry when a 169.x address is received
        ipaddr_max_retries = 3
        retry_count = 1
        is_local_ip = True
        vm_ip_addr = ""
        while is_local_ip and retry_count != ipaddr_max_retries:
            log.info("Installing new VM from the template - attempt #{}".format(retry_count))
            vm = session.xenapi.VM.clone(template_ref, new_vm_name)
            network = session.xenapi.PIF.get_network(lowest)
            log.debug("Chosen PIF is connected to network: {}".format(
                session.xenapi.network.get_name_label(network)))
            # Move the cloned VM's virtual interfaces to the chosen network
            vifs = session.xenapi.VIF.get_all()
            log.debug(("Number of VIFs=" + str(len(vifs))))
            for i in range(len(vifs)):
                vmref = session.xenapi.VIF.get_VM(vifs[i])
                a_vm_name = session.xenapi.VM.get_name_label(vmref)
                log.debug(str(i) + "." + session.xenapi.network.get_name_label(
                    session.xenapi.VIF.get_network(vifs[i])) + " " + a_vm_name)
                if a_vm_name == new_vm_name:
                    session.xenapi.VIF.move(vifs[i], network)

            log.debug("Adding non-interactive to the kernel command line")
            session.xenapi.VM.set_PV_args(vm, "non-interactive")
            log.debug("Choosing an SR to instantiate the VM's disks")
            pool = session.xenapi.pool.get_all()[0]
            default_sr = session.xenapi.pool.get_default_SR(pool)
            default_sr = session.xenapi.SR.get_record(default_sr)
            log.debug("Choosing SR: {} (uuid {})".format(default_sr['name_label'],
                                                         default_sr['uuid']))
            log.debug("Asking server to provision storage from the template specification")
            description = new_vm_name + " from " + template + " on " + str(
                datetime.datetime.utcnow())
            session.xenapi.VM.set_name_description(vm, description)
            if cpus != "default":
                log.info("Setting cpus to " + cpus)
                session.xenapi.VM.set_VCPUs_max(vm, int(cpus))
                session.xenapi.VM.set_VCPUs_at_startup(vm, int(cpus))
            if maxmemory != "default":
                log.info("Setting memory to " + maxmemory)
                # 8GB="8589934592" or 4GB="4294967296"
                session.xenapi.VM.set_memory(vm, maxmemory)
            session.xenapi.VM.provision(vm)
            log.info("Starting VM")
            session.xenapi.VM.start(vm, False, True)
            log.debug(" VM is booting")

            log.debug("Waiting for the installation to complete")

            # Get the OS name and IPs
            log.info("Getting the OS name and IP...")
            config = read_config()
            vm_network_timeout_secs = int(config.get("common", "vm.network.timeout.secs"))
            # Use a local name so the module-level TIMEOUT_SECS default is not shadowed
            timeout_secs = TIMEOUT_SECS
            if vm_network_timeout_secs > 0:
                timeout_secs = vm_network_timeout_secs

            log.info("The max wait time in secs for VM OS address is {0}".format(
                str(timeout_secs)))
            if "win" not in template:
                maxtime = time.time() + timeout_secs
                while read_os_name(session, vm) is None and time.time() < maxtime:
                    time.sleep(1)
                vm_os_name = read_os_name(session, vm)
                log.info("VM OS name: {}".format(vm_os_name))
            else:
                # TBD: wait for the network to refresh on the Windows VM
                time.sleep(60)

            log.info("The max wait time in secs for IP address is " + str(timeout_secs))
            maxtime = time.time() + timeout_secs
            # Wait until the IP is neither None nor 169.xx (the default when no IPs are
            # available) and the timeout has not been reached.
            while (read_ip_address(session, vm) is None
                   or read_ip_address(session, vm).startswith('169')) \
                    and time.time() < maxtime:
                time.sleep(1)
            vm_ip_addr = read_ip_address(session, vm)
            log.info("VM IP: {}".format(vm_ip_addr))

            if vm_ip_addr.startswith('169'):
                log.info("No network IP available. Deleting this VM ... ")
                record = session.xenapi.VM.get_record(vm)
                power_state = record["power_state"]
                if power_state != "Halted":
                    session.xenapi.VM.hard_shutdown(vm)
                delete_all_disks(session, vm)
                session.xenapi.VM.destroy(vm)
                time.sleep(5)
                is_local_ip = True
                retry_count += 1
            else:
                is_local_ip = False

        log.info("Final VM IP: {}".format(vm_ip_addr))
    except Exception as e:
        # Assumed handler: the original snippet is cut off before its except clause
        error = str(e)
        log.error(error)
```

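The create flow above decides whether an address is usable with a string prefix check on `169`. As an alternative sketch, the standard `ipaddress` module can test for the link-local range (169.254.0.0/16) precisely, and the wait loop can be factored into a helper. Both function names, and the `read_ip` callable standing in for `read_ip_address(session, vm)`, are assumptions for illustration.

```python
import ipaddress
import time


def is_usable_ip(ip):
    """True for a parseable address that is not link-local, loopback or unspecified."""
    if not ip:
        return False
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return False
    return not (addr.is_link_local or addr.is_loopback or addr.is_unspecified)


def wait_for_ip(read_ip, timeout_secs, poll_secs=1.0):
    """Poll read_ip() until it returns a usable address or the timeout is reached."""
    deadline = time.monotonic() + timeout_secs
    while True:
        ip = read_ip()
        if is_usable_ip(ip):
            return ip
        if time.monotonic() >= deadline:
            return None  # the caller decides whether to delete the VM and retry
        time.sleep(poll_secs)


if __name__ == "__main__":
    answers = iter([None, "169.254.10.20", "172.23.137.73"])
    print(wait_for_ip(lambda: next(answers), timeout_secs=5, poll_secs=0.01))
```

Returning `None` on timeout, instead of the last address seen, keeps the "no IP yet" and "link-local IP" cases on the same path for the caller.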
Delete VM

```python
def delete_vm(session, vm_name):
    log.info("Deleting VM: " + vm_name)
    delete_start_time = time.time()
    vm = session.xenapi.VM.get_by_name_label(vm_name)
    log.info("Number of VMs found with name - " + vm_name + " : " + str(len(vm)))
    for j in range(len(vm)):
        record = session.xenapi.VM.get_record(vm[j])
        power_state = record["power_state"]
        if power_state != "Halted":
            # session.xenapi.VM.shutdown(vm[j])
            session.xenapi.VM.hard_shutdown(vm[j])
        # print_all_disks(session, vm[j])
        delete_all_disks(session, vm[j])
        session.xenapi.VM.destroy(vm[j])
        delete_end_time = time.time()
        delete_duration = round(delete_end_time - delete_start_time)
        # Mark the history document in Couchbase (CB) as deleted
        uuid = record["uuid"]
        doc_key = uuid
        cbdoc = CBDoc()
        doc_result = cbdoc.get_doc(doc_key)
        if doc_result:
            doc_value = doc_result.value
            doc_value["state"] = 'deleted'
            current_time = time.time()
            doc_value["deleted_time"] = current_time
            if doc_value["created_time"]:
                doc_value["live_duration_secs"] = round(current_time - doc_value["created_time"])
            doc_value["delete_duration_secs"] = delete_duration
            cbdoc.save_dynvm_doc(doc_key, doc_value)
```

VMs usage history

It is better to maintain the history of all the VMs created and terminated, along with other useful data. Below is an example of a JSON document stored in Couchbase, a free NoSQL database server. Insert a new document, keyed by the UUID of the Xen opaque reference, whenever a new VM is provisioned, and update the same document whenever the VM is terminated. This tracks the live usage time of each VM and how provisioning and termination were done by each user.

```python
record = session.xenapi.VM.get_record(vm)
uuid = record["uuid"]

# Save as a document in CB
state = "available"
username = new_vm_name
pool = "dynamicpool"
doc_value = {"ipaddr": vm_ip_addr, "origin": xen_host_description, "os": os_name,
             "state": state, "poolId": pool, "prevUser": "", "username": username,
             "ver": "12", "memory": memory_static_max, "os_version": vm_os_name,
             "name": new_vm_name, "created_time": prov_end_time,
             "create_duration_secs": create_duration, "cpu": vcpus, "disk": disks_info}
# doc_value["mac_address"] = mac_address
doc_key = uuid
cb_doc = CBDoc()
cb_doc.save_dynvm_doc(doc_key, doc_value)
```

```python
# Assumed imports for the Couchbase Python SDK 2.x style API used below
from couchbase.cluster import Cluster, PasswordAuthenticator


class CBDoc:
    def __init__(self):
        config = read_config()
        self.cb_server = config.get("couchbase", "couchbase.server")
        self.cb_bucket = config.get("couchbase", "couchbase.bucket")
        self.cb_username = config.get("couchbase", "couchbase.username")
        self.cb_userpassword = config.get("couchbase", "couchbase.userpassword")
        try:
            self.cb_cluster = Cluster('couchbase://' + self.cb_server)
            self.cb_auth = PasswordAuthenticator(self.cb_username, self.cb_userpassword)
            self.cb_cluster.authenticate(self.cb_auth)
            self.cb = self.cb_cluster.open_bucket(self.cb_bucket)
        except Exception as e:
            log.error('Connection failed: %s ' % self.cb_server)
            log.error(e)

    def get_doc(self, doc_key):
        try:
            return self.cb.get(doc_key)
        except Exception as e:
            log.error('Error while getting doc %s !' % doc_key)
            log.error(e)

    def save_dynvm_doc(self, doc_key, doc_value):
        try:
            log.info(doc_value)
            self.cb.upsert(doc_key, doc_value)
            log.info("%s added/updated successfully" % doc_key)
        except Exception as e:
            log.error('Document with key: %s saving error' % doc_key)
            log.error(e)
```

```
"dynserver-pool": {
  "cpu": "6",
  "create_duration_secs": 65,
  "created_time": 1583518463.8943903,
  "delete_duration_secs": 5,
  "deleted_time": 1583520211.8498628,
  "disk": "75161927680",
  "ipaddr": "x.x.x.x",
  "live_duration_secs": 1748,
  "memory": "6442450944",
  "name": "Win2019-Server-1node-DynVM",
  "origin": "s827",
  "os": "win16",
  "os_version": "",
  "poolId": "dynamicpool",
  "prevUser": "",
  "state": "deleted",
  "username": "Win2019-Server-1node-DynVM",
  "ver": "12"
}
```

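Once such documents accumulate, they can be analyzed offline. The following is a small sketch, assuming the documents have already been fetched into a list of dicts (how they are queried from Couchbase is left out). It relies only on the `os`, `create_duration_secs`, and `live_duration_secs` fields shown above; the function name is ours.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_os(docs):
    """Group history documents by OS and average the provisioning and live times."""
    grouped = defaultdict(list)
    for doc in docs:
        grouped[doc["os"]].append(doc)
    summary = {}
    for os_name, items in grouped.items():
        # VMs that are still running have no live_duration_secs yet
        live = [d["live_duration_secs"] for d in items if "live_duration_secs" in d]
        summary[os_name] = {
            "vms": len(items),
            "avg_create_secs": mean(d["create_duration_secs"] for d in items),
            "avg_live_secs": mean(live) if live else None,
        }
    return summary


if __name__ == "__main__":
    history = [
        {"os": "win16", "create_duration_secs": 65, "live_duration_secs": 1748},
        {"os": "win16", "create_duration_secs": 75},
    ]
    print(summarize_by_os(history))
```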
Configuration

The configuration of the Dynamic VM server manager service, such as the Couchbase server, the Xen host servers, template details, the default expiry, and network timeout values, can be maintained in a simple .ini format. When a new Xen host arrives, just add it as a separate section. The configuration is loaded dynamically, without restarting the Dynamic VM SM service.

Sample config file: .dynvmservice.ini

```ini
[couchbase]
couchbase.server=<couchbase-hostIp>
couchbase.bucket=<bucket-name>
couchbase.username=<username>
couchbase.userpassword=<password>

[common]
vm.expiry.minutes=720
vm.network.timeout.secs=400

[xenhost1]
host.name=<xenhostip1>
host.user=root
host.password=xxxx
host.storage.name=Local Storage 01
centos.template=tmpl-cnt7.7
centos7.template=tmpl-cnt7.7
windows.template=tmpl-win16dc - PATCHED (1)
centos8.template=tmpl-cnt8.0
oel8.template=tmpl-oel8
deb10.template=tmpl-deb10-too
debian10.template=tmpl-deb10-too
ubuntu18.template=tmpl-ubu18-4cgb
suse15.template=tmpl-suse15

[xenhost2]
host.name=<xenhostip2>
host.user=root
host.password=xxxx
host.storage.name=ssd
centos.template=tmpl-cnt7.7
windows.template=tmpl-win16dc - PATCHED (1)
centos8.template=tmpl-cnt8.0
oel8.template=tmpl-oel8
deb10.template=tmpl-deb10-too
debian10.template=tmpl-deb10-too
ubuntu18.template=tmpl-ubu18-4cgb
suse15.template=tmpl-suse15

[xenhost3]
...

[xenhost4]
...
```
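A configuration in this shape can be read with Python's standard `configparser`. The sketch below parses from a string so that it is self-contained; the sample values, the section name `common`, and the function name `load_config` are illustrative assumptions. Re-parsing the file on each request is one simple way to pick up newly added Xen host sections without restarting the service.

```python
import configparser

SAMPLE_CONFIG = """
[common]
vm.network.timeout.secs=400

[xenhost1]
host.name=10.0.0.1
host.user=root

[xenhost2]
host.name=10.0.0.2
host.user=root
"""


def load_config(config_text):
    """Parse the .ini text and collect every [xenhostN] section as a dict."""
    config = configparser.ConfigParser()
    config.read_string(config_text)
    hosts = {name: dict(config[name])
             for name in config.sections() if name.startswith("xenhost")}
    return config, hosts


if __name__ == "__main__":
    config, hosts = load_config(SAMPLE_CONFIG)
    print(config.getint("common", "vm.network.timeout.secs"))
    print(sorted(hosts))
```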

Examples

Sample REST API calls using curl

```
$ curl 'http://127.0.0.1:5000/showall'
{
  "1": [
    {
      "memory_static_max": "6442450944",
      "name": "tunable-rebalance-out-Apr-29-13:43:59-7.0.0-19021",
      "name_description": "tunable-rebalance-out-Apr-29-13:43:59-7.0.0-19021 from tmpl-win16dc - PATCHED (1) on 2020-04-29 20:43:33.785778",
      "networkinfo": "172.23.137.20,172.23.137.20,fe80:0000:0000:0000:0585:c6e8:52f9:91d1",
      "power_state": "Running",
      "vcpus": "6"
    },
    {
      "memory_static_max": "6442450944",
      "name": "nserv-nserv-rebalanceinout_P0_Set1_compression-Apr-29-06:36:12-7.0.0-19022",
      "name_description": "nserv-nserv-rebalanceinout_P0_Set1_compression-Apr-29-06:36:12-7.0.0-19022 from tmpl-win16dc - PATCHED (1) on 2020-04-29 13:36:53.717776",
      "networkinfo": "172.23.136.142,172.23.136.142,fe80:0000:0000:0000:744d:fd63:1a88:2fa8",
      "power_state": "Running",
      "vcpus": "6"
    }
  ],
  "2": [
    ..
  ]
  "3": [
    ..
  ]
  "4": [
    ..
  ]
  "5": [
    ..
  ]
  "6": [
    ..
  ]
}
$ curl 'http://127.0.0.1:5000/getavailablecount/windows'
2
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
10
$ curl 'http://127.0.0.1:5000/getservers/demoserver?os=centos&count=3'
["172.23.137.73", "172.23.137.74", "172.23.137.75"]
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
7
$ curl 'http://127.0.0.1:5000/releaseservers/demoserver?os=centos&count=3'
[
  "demoserver1",
  "demoserver2",
  "demoserver3"
]
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
10
$
```
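A caller that consumes the `/getservers` response from Python can validate it before using the addresses. This is only a sketch and assumes the default output format shown above, a JSON list of IPv4 strings; the function name `parse_vm_ips` is hypothetical.

```python
import ipaddress
import json


def parse_vm_ips(response_text):
    """Parse a /getservers response body and check that every entry is an IPv4 address."""
    ips = json.loads(response_text)
    if not isinstance(ips, list):
        raise ValueError("expected a JSON list of IP addresses")
    for ip in ips:
        if not isinstance(ip, str):
            raise ValueError("expected a string, got {!r}".format(ip))
        ipaddress.IPv4Address(ip)  # raises a ValueError subclass for malformed addresses
    return ips


if __name__ == "__main__":
    print(parse_vm_ips('["172.23.137.73", "172.23.137.74", "172.23.137.75"]'))
```

An error string returned by the service fails the JSON or type checks, so it is rejected instead of being mistaken for an address.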

Jenkins jobs with a single VM

```bash
curl -s -o ${BUILD_TAG}_vm.json "http://<host:port>/getservers/${BUILD_TAG}?os=windows&format=detailed"
VM_IP_ADDRESS="`cat ${BUILD_TAG}_vm.json |egrep ${BUILD_TAG}|cut -f2 -d':'|xargs`"
if [[ $VM_IP_ADDRESS =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
  echo Valid IP received
else
  echo NOT a valid IP received
  exit 1
fi
```

Jenkins jobs that need multiple VMs

```bash
curl -s -o ${BUILD_TAG}.json "${SERVER_API_URL}/getservers/${BUILD_TAG}?os=${OS}&count=${COUNT}&format=detailed"
cat ${BUILD_TAG}.json
VM_IP_ADDRESS="`cat ${BUILD_TAG}.json |egrep ${BUILD_TAG}|cut -f2 -d':'|xargs|sed 's/,//g'`"
echo $VM_IP_ADDRESS
ADDR=()
INDEX=1
for IP in `echo $VM_IP_ADDRESS`
do
  if [[ $IP =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
    echo Valid IP=$IP received
    ADDR[$INDEX]=${IP}
    INDEX=`expr ${INDEX} + 1`
  else
    echo NOT a valid IP=$IP received
    exit 1
  fi
done

echo ${ADDR[1]}
echo ${ADDR[2]}
echo ${ADDR[3]}
echo ${ADDR[4]}
```

Key considerations

Here are a few of my observations from the process. It is better to handle them to make the service more reliable.

- Handle different storage names/IDs across different Xen hosts

- Keep track of the VM storage device name in the service's input config file

- Handle partial templates, available only on some Xen hosts, during provisioning

- When network IPs are not available, the Xen APIs return the default 169.254.xx.yy address on Windows. Wait until a non-169 address is obtained or the timeout is reached

- Release servers should ignore the OS template, since some of the templates might not be present on all Xen hosts

- Support provisioning on a specific Xen host, given its reference

- Handle the cases where no IPs are available or network IPs cannot be obtained for some of the created VMs

- Plan a different subnet for the Xen hosts targeted for dynamic VMs. The DHCP IP lease expiry of the default network might be in days (say, 7 days), in which case no new IPs are handed out

- In the capacity check, count the VMs that are still being created as reserved, so that the reported count is lower than the full capacity at that moment. Otherwise, in-progress and incoming requests can run into problems. One or two VMs' worth of resources (CPUs, memory, disk size) can be kept as a buffer while parallel requests are being created and checked
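The last point, counting in-progress requests as reserved, is easy to get wrong when requests arrive in parallel. One possible sketch uses a lock around the reserved counter. The class and method names are hypothetical and not taken from the service, which keeps a plain global `reserved_count`.

```python
import threading


class CapacityTracker:
    """Tracks VMs that are being provisioned so parallel requests see reduced capacity."""

    def __init__(self):
        self._lock = threading.Lock()
        self._reserved = 0

    @property
    def reserved(self):
        with self._lock:
            return self._reserved

    def try_reserve(self, requested, available):
        """Reserve `requested` VMs if they fit into the capacity that is not yet reserved."""
        with self._lock:
            if requested > available - self._reserved:
                return False
            self._reserved += requested
            return True

    def release(self, count):
        """Drop a reservation once the VMs are created (or creation failed)."""
        with self._lock:
            self._reserved = max(0, self._reserved - count)


if __name__ == "__main__":
    tracker = CapacityTracker()
    print(tracker.try_reserve(3, available=10))  # fits
    print(tracker.try_reserve(8, available=10))  # only 7 left
    tracker.release(3)
    print(tracker.reserved)
```

Checking and incrementing under the same lock is the important part: doing the two steps separately lets two parallel requests both pass the check.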

References

Some of the key references that help when building the dynamic VM server manager service.

- https://www.couchbase.com/downloads

- https://wiki.xenproject.org/wiki/XAPI_Command_Line_Interface

- https://xapi-project.github.io/xen-api/

- https://docs.citrix.com/en-us/citrix-hypervisor/command-line-interface.html

- https://github.com/xapi-project/xen-api-sdk/tree/master/python/samples

- https://www.citrix.com/community/citrix-developer/citrix-hypervisor-developer/citrix-hypervisor-developing-products/citrix-hypervisor-staticip.html

- https://docs.ansible.com/ansible/latest/modules/xenserver_guest_module.html

- https://github.com/terra-farm/terraform-provider-xenserver

- https://github.com/xapi-project/xen-api/blob/master/scripts/examples/python/renameif.py

- https://xen-orchestra.com/forum/topic/191/single-device-not-reporting-ip-on-dashboard/14

- https://xen-orchestra.com/blog/xen-orchestra-from-the-cli/

- https://support.citrix.com/article/CTX235403

Hope you had a good reading time!

Disclaimer: Please view this as a reference if you are dealing with Xen hosts. Feel free to share if you learned something new that can help us. Your positive feedback is appreciated!

Thanks to Raju Suravarjjala, Ritam Sharma, Wayne Siu, Tom Thrush, and James Lee for their help during the process.