Introduction

Today, server infrastructure runs in on-premise data centers, private data centers, or public cloud data centers. The machines are physical bare-metal hosts, virtual machines (VMs) running on hypervisors, or small containers such as Docker containers on top of physical or virtual machines. On-premise machines might physically sit in a local lab. In a private data center, the hosts you have procured are placed in physical space shared within a third-party data center and accessed remotely. In public clouds such as AWS, GCP, Azure, and OCI, machines are either reserved or created on demand for highly scalable needs and are also accessed remotely. Each option has its own advantages with respect to scalability, security, reliability, management, and cost.

Product development teams might need many servers during the SDLC. Suppose a team has chosen a private data center with its own physical machines running XenServer. The challenge is then how to manage the VM lifecycle, that is, provisioning and termination, with the lean and agile processes that cloud environments offer.

This document provides the basic infrastructure model, architecture, a minimal set of APIs, and sample code snippets so that you can build dynamic infrastructure environments easily.

Benefits

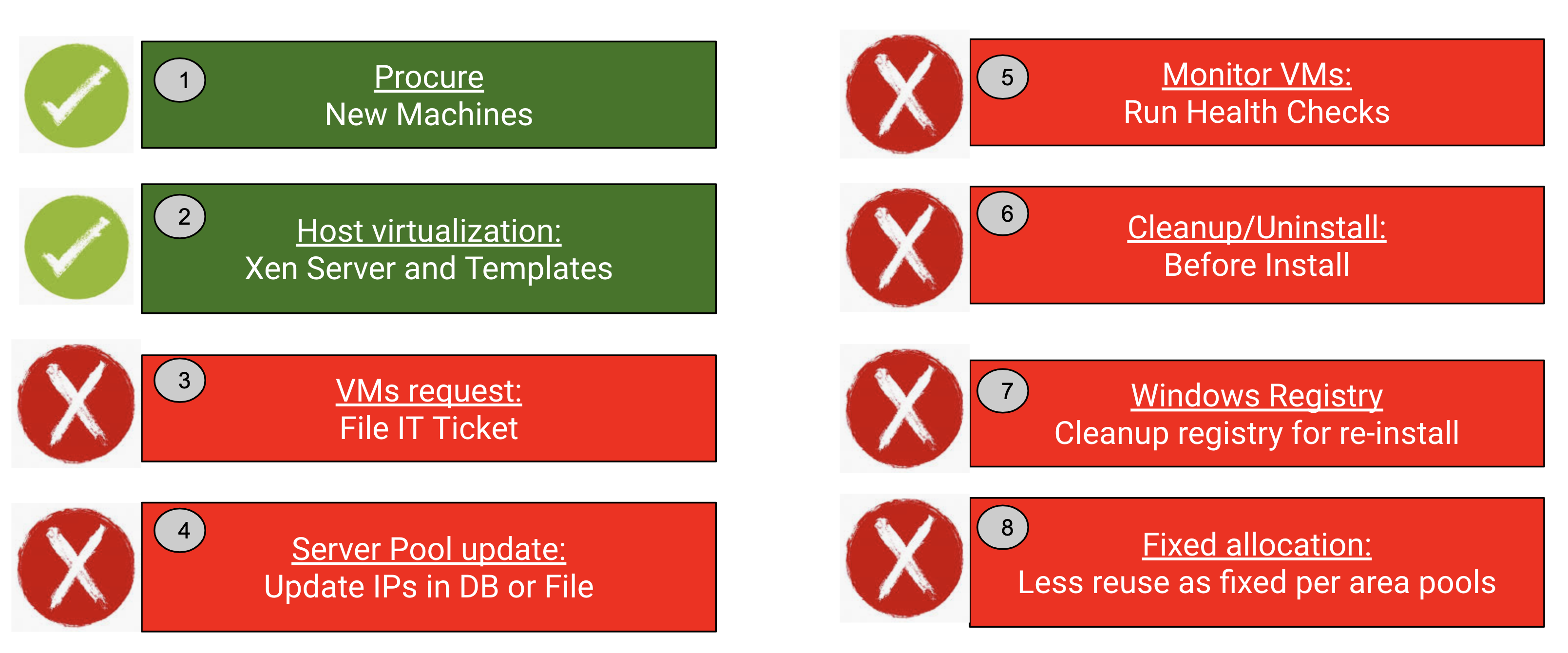

First, let us understand the typical sequence of steps followed in this server infrastructure process. You may recognize it as follows.


- Procurement of the new machines by IT

- Host virtualization – Install Xen Server and Create VM Templates by IT

- Static VM requests from dev and test teams to IT via tickets (say, JIRA)

- Maintain the received VM IPs in a database, a static file, hardcoded configuration files, or CI tools such as Jenkins config.xml

- Monitor the VMs with health checks to make sure they are healthy before installing the products on them

- Clean up or uninstall before or after server installations

- Windows might need some registry cleanup before installing the product

- VMs might have been allocated in a fixed way to an area, a team, or a dedicated engineer

Now, how can you make this process more lean and agile? Can you eliminate most of the above steps with simple automation?

Yes. In our environment, we had more than 1000 VMs, and this is mainly what we tried to achieve:

“Disposable VMs on-demand as required during tests execution. Solve Windows cleanup issues with regular test cycles.”

As shown below, with the dynamic VM server manager API service, 6 of the 8 steps can be eliminated, and the entire product team gets a view of seemingly unlimited infrastructure. Only the first 2 steps, procurement and host virtualization, are still needed. In effect, this saves both time and cost!

Typical flow to get infrastructure

Dynamic Infrastructure model

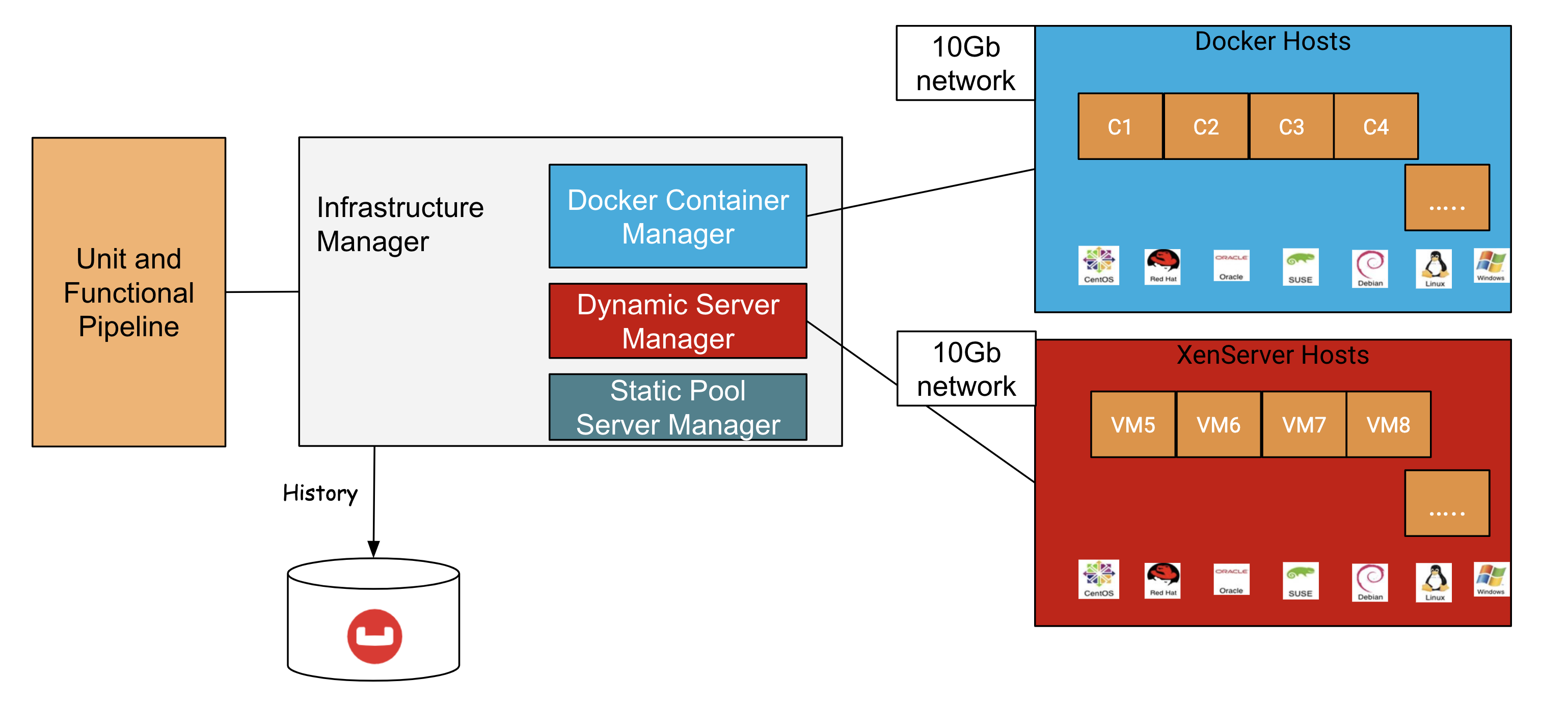

The picture below shows our proposed infrastructure for a typical server product environment: 80% Docker containers, 15% dynamic VMs, and 5% static pooled VMs for special cases. Adjust this distribution based on what works best for your environment.

Infrastructure model

From here on, we will discuss the dynamic VM server manager in more detail.

Dynamic Server Manager architecture

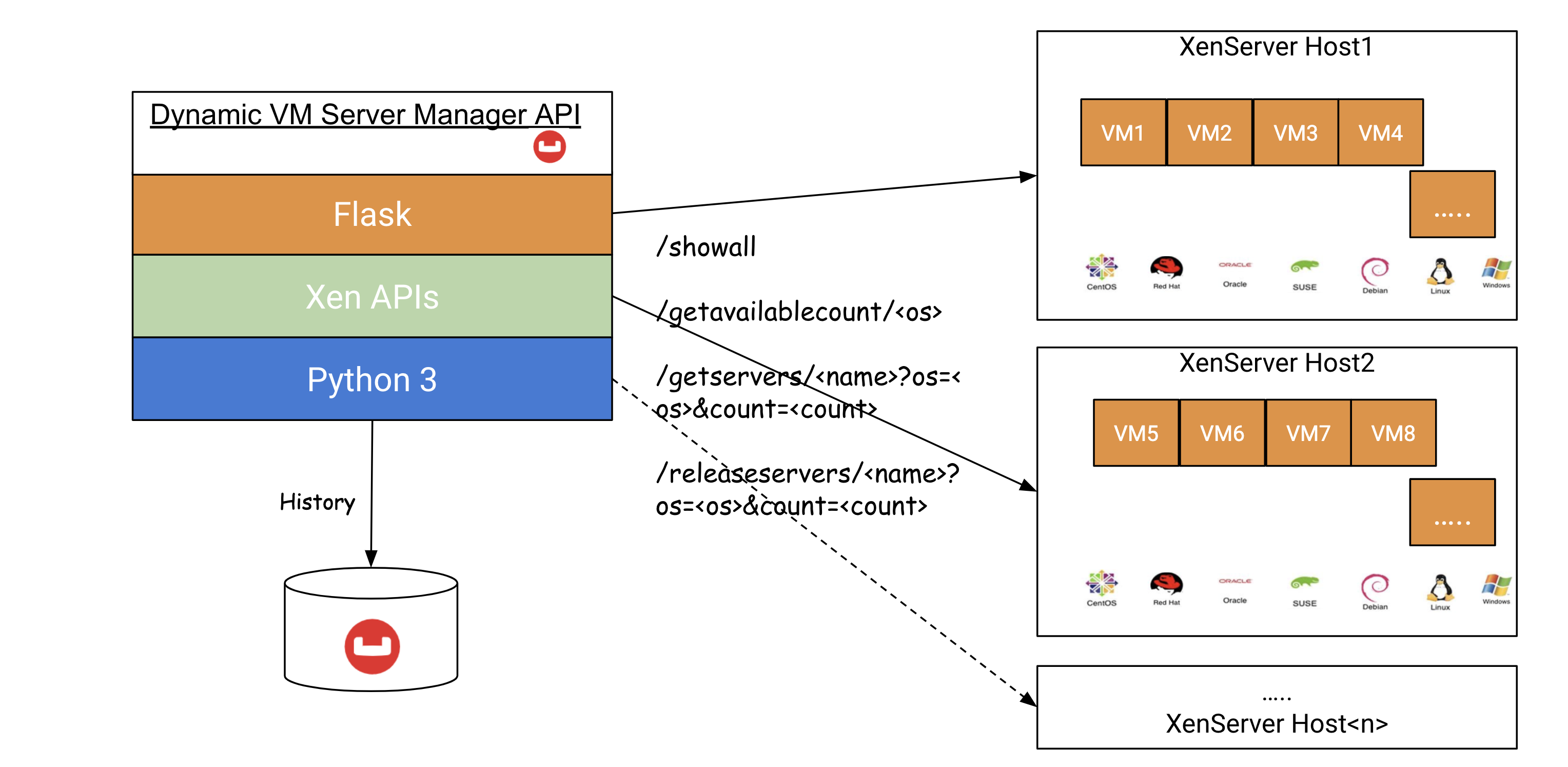

The dynamic VM server manager is a simple API service that exposes the REST APIs below, which can be called from anywhere in an automated process. As the tech stack shows, Python 3 and the Python-based Xen APIs are used to create the VMs on the XenServer host, and Flask provides the REST service layer. The OS can be any platform your product supports, such as windows2016, centos7, centos8, debian10, ubuntu18, oel8, or suse15.

Dynamic VMs server manager architecture

Save the history of the VMs so that usage and the time taken to provision or terminate can be tracked and analyzed further. Couchbase Enterprise Server, a NoSQL document database, can be used to store the JSON documents.

Simple REST APIs

| Method | URI(s) | Purpose |
| --- | --- | --- |
| GET | `/showall` | Lists all VMs in JSON format |
| GET | `/getavailablecount/<os>` | Gets the count of available VMs for the given `<os>` |
| GET | `/getservers/<name>?os=<os>`<br>`/getservers/<name>?os=<os>&count=<count>`<br>`/getservers/<name>?os=<os>&count=<count>&cpus=<cpus>&mem=<memsize>`<br>`/getservers/<name>?os=<os>&expiresin=<minutes>` | Provisions the given `<count>` VMs of `<os>`. The cpus count and mem size can also be specified. The expiresin parameter, in minutes, sets the expiry (auto-termination) of the VMs. |
| GET | `/releaseservers/<name>?os=<os>`<br>`/releaseservers/<name>?os=<os>&count=<count>` | Terminates the given `<count>` VMs of `<os>` |
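To make the query parameters in the table concrete, here is a minimal sketch of a client-side helper that assembles these URLs. The helper name `build_url` and the base URL are assumptions for illustration only; they are not part of the service itself.

```python
from urllib.parse import quote, urlencode


def build_url(base_url, action, name, os_name, **params):
    """Build a /getservers or /releaseservers URL (hypothetical helper).

    Optional parameters (count, cpus, mem, expiresin) are passed as keyword
    arguments; parameters whose value is None are left out of the query.
    """
    query = {"os": os_name}
    query.update({key: value for key, value in params.items() if value is not None})
    return "{}/{}/{}?{}".format(
        base_url.rstrip("/"), action, quote(name, safe=""), urlencode(query))


# Request 3 centos VMs that expire after 30 minutes (assumed local service URL)
print(build_url("http://127.0.0.1:5000", "getservers", "demoserver", "centos",
                count=3, expiresin=30))
```

The resulting URL can then be fetched with any HTTP client, for example curl or `urllib.request.urlopen`.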

Pre-requirements for dynamic VM targeted Xen Hosts

- Identify targeted dynamic VM Xen Hosts

- Copy/create the VM templates

- Move these Xen Hosts to a separate VLAN/subnet (work with IT) so that IPs can be recycled

Implementation

At a high level –

- Create a function for each REST API

- Call a common service to perform different REST actions.

- Understand the Xen Session creation, getting the records, cloning VM from template, attaching the right disk, waiting for the VM creation and IP received; deletion of VMs, deletion of disks

- Start a thread for expiry of VMs automatically

- Read the common configuration, for example from a .ini file

- Understand working with Couchbase database and save documents

- Test all APIs with required OSes and parameters

- Fix issues if any

- Perform a POC with a few Xen Hosts
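One of the steps above, starting a thread that expires VMs automatically, could be sketched roughly as follows. This is only an illustration using the standard library `threading.Timer`; the function name `schedule_expiry` and the `delete_func` callback are assumptions, and the real service may implement expiry differently.

```python
import threading


def schedule_expiry(vm_name, expiry_minutes, delete_func):
    """Start a background timer that calls delete_func(vm_name) on expiry.

    delete_func is a placeholder for whatever routine terminates the VM.
    The returned timer can be cancelled if the VM is released earlier.
    """
    timer = threading.Timer(expiry_minutes * 60, delete_func, args=(vm_name,))
    timer.daemon = True  # pending expiries should not block service shutdown
    timer.start()
    return timer


# Demo with a very short expiry and a stand-in delete function
expired = []
demo_timer = schedule_expiry("demoserver1", 0.001, expired.append)
demo_timer.join()
print(expired)
```

Keeping the returned timer allows a release request to call `cancel()` so that an already terminated VM is not deleted a second time.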

The code snippets below should help you understand this better.

APIs creation

```python
# Excerpt: assumes `app` (the Flask application), `log`, `reserved_count` and
# MAX_EXPIRY_MINUTES are defined at module level.
import json

from flask import request


@app.route('/showall/<string:os>')
@app.route("/showall")
def showall_service(os=None):
    count, _ = get_all_xen_hosts_count(os)
    log.info("--> count: {}".format(count))
    all_vms = {}
    for xen_host_ref in range(1, count + 1):
        log.info("Getting xen_host_ref=" + str(xen_host_ref))
        all_vms[xen_host_ref] = perform_service(xen_host_ref, service_name='listvms', os=os)
    return json.dumps(all_vms, indent=2, sort_keys=True)


@app.route('/getavailablecount/<string:os>')
@app.route('/getavailablecount')
def getavailable_count_service(os='centos'):
    """
    Calculate the available count:
        Get Total CPUs, Total Memory
        Get Free CPUs, Free Memory
        Get all the VMs - CPUs and Memory allocated
        Get each OS template - CPUs and Memory
        Available count1 = (Free CPUs - VMs CPUs)/OS_Template_CPUs
        Available count2 = (Free Memory - VMs Memory)/OS_Template_Memory
        Return min(count1,count2)
    """
    count, available_counts, xen_hosts = get_all_available_count(os)
    log.info("{},{},{},{}".format(count, available_counts, xen_hosts, reserved_count))
    if count > reserved_count:
        count -= reserved_count
        log.info("Less reserved count: {},{},{},{}".format(count, available_counts, xen_hosts,
                                                           reserved_count))
    return str(count)


# /getservers/username?count=number&os=centos&ver=6&expiresin=30
@app.route('/getservers/<string:username>')
def getservers_service(username):
    global reserved_count
    if request.args.get('count'):
        vm_count = int(request.args.get('count'))
    else:
        vm_count = 1

    os_name = request.args.get('os')
    if request.args.get('cpus'):
        cpus_count = request.args.get('cpus')
    else:
        cpus_count = "default"
    if request.args.get('mem'):
        mem = request.args.get('mem')
    else:
        mem = "default"
    if request.args.get('expiresin'):
        exp = int(request.args.get('expiresin'))
    else:
        exp = MAX_EXPIRY_MINUTES
    if request.args.get('format'):
        output_format = request.args.get('format')
    else:
        output_format = "servermanager"
    xhostref = None
    if request.args.get('xhostref'):
        xhostref = request.args.get('xhostref')
    # Count the requested VMs as reserved while they are being provisioned
    reserved_count += vm_count
    if xhostref:
        log.info("--> VMs on given xenhost" + xhostref)
        vms_ips_list = perform_service(xhostref, 'createvm', os_name, username, vm_count,
                                       cpus=cpus_count, maxmemory=mem, expiry_minutes=exp,
                                       output_format=output_format)
        return json.dumps(vms_ips_list)
    ...


# /releaseservers/{username}
# `available` defaults to None so that both routes can call this function
# (the original signature accepted only `username`).
@app.route('/releaseservers/<string:username>/<string:available>')
@app.route('/releaseservers/<string:username>')
def releaseservers_service(username, available=None):
    if request.args.get('count'):
        vm_count = int(request.args.get('count'))
    else:
        vm_count = 1
    os_name = request.args.get('os')
    delete_vms_res = []
    for vm_index in range(vm_count):
        # Multiple VMs are named <username>1, <username>2, ...
        if vm_count > 1:
            vm_name = username + str(vm_index + 1)
        else:
            vm_name = username
        xen_host_ref = get_vm_existed_xenhost_ref(vm_name, 1, None)
        log.info("VM to be deleted from xhost_ref=" + str(xen_host_ref))
        if xen_host_ref != 0:
            delete_per_xen_res = perform_service(xen_host_ref, 'deletevm', os_name, vm_name, 1)
            for deleted_vm_res in delete_per_xen_res:
                delete_vms_res.append(deleted_vm_res)
    if len(delete_vms_res) < 1:
        return "Error: VM " + username + " doesn't exist"
    else:
        return json.dumps(delete_vms_res, indent=2, sort_keys=True)


def perform_service(xen_host_ref=1, service_name='list_vms', os="centos", vm_prefix_names="",
                    number_of_vms=1, cpus="default", maxmemory="default",
                    expiry_minutes=MAX_EXPIRY_MINUTES, output_format="servermanager",
                    start_suffix=0):
    xen_host = get_xen_host(xen_host_ref, os)
    ...


def main():
    # options = parse_arguments()
    set_log_level()
    app.run(host='0.0.0.0', debug=True)
```
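The capacity formula described in the `getavailable_count_service` docstring can be worked through with a small, self-contained sketch. The function name, its parameters, and the sample numbers are assumptions chosen for illustration. Unlike the service code above, which leaves the count unchanged when it is not larger than the reserved count, this sketch clamps the result at zero.

```python
GIB = 1024 ** 3


def available_vm_count(free_cpus, vms_cpus, free_memory, vms_memory,
                       template_cpus, template_memory, reserved_count=0):
    """Return how many more VMs of one template fit on a host (illustrative).

    Follows the docstring formula: the count is limited by whichever of CPU
    or memory runs out first, minus VMs that are already being provisioned.
    """
    count_by_cpu = (free_cpus - vms_cpus) // template_cpus
    count_by_memory = (free_memory - vms_memory) // template_memory
    return max(min(count_by_cpu, count_by_memory) - reserved_count, 0)


# Made-up host: CPU allows (48 - 24) // 6 = 4 VMs, memory allows
# (256 - 96) // 6 = 26 VMs, and one VM is in progress, so 3 remain.
print(available_vm_count(free_cpus=48, vms_cpus=24,
                         free_memory=256 * GIB, vms_memory=96 * GIB,
                         template_cpus=6, template_memory=6 * GIB,
                         reserved_count=1))
```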

Creating a Xen session

```python
def get_xen_session(xen_host_ref=1, os="centos"):
    xen_host = get_xen_host(xen_host_ref, os)
    if not xen_host:
        return None
    url = "http://" + xen_host['host.name']
    log.info("\nXen Server host: " + xen_host['host.name'] + "\n")
    try:
        session = XenAPI.Session(url)
        session.xenapi.login_with_password(xen_host['host.user'], xen_host['host.password'])
    except XenAPI.Failure as f:
        error = "Failed to acquire a session: {}".format(f.details)
        log.error(error)
        return error
    return session
```

List VMs

```python
def list_vms(session):
    vm_count = 0
    vms = session.xenapi.VM.get_all()
    log.info("Server has {} VM objects (this includes templates):".format(len(vms)))
    log.info("-----------------------------------------------------------")
    log.info("S.No.,VMname,PowerState,Vcpus,MaxMemory,Networkinfo,Description")
    log.info("-----------------------------------------------------------")

    vm_details = []
    for vm in vms:
        network_info = 'N/A'
        record = session.xenapi.VM.get_record(vm)
        if not (record["is_a_template"]) and not (record["is_control_domain"]):
            log.debug(record)
            vm_count = vm_count + 1
            name = record["name_label"]
            name_description = record["name_description"]
            power_state = record["power_state"]
            vcpus = record["VCPUs_max"]
            memory_static_max = record["memory_static_max"]
            if record["power_state"] != 'Halted':
                ip_ref = session.xenapi.VM_guest_metrics.get_record(record['guest_metrics'])
                network_info = ','.join([str(elem) for elem in ip_ref['networks'].values()])
            else:
                continue  # Listing only Running VMs

            vm_info = {'name': name, 'power_state': power_state, 'vcpus': vcpus,
                       'memory_static_max': memory_static_max, 'networkinfo': network_info,
                       'name_description': name_description}
            vm_details.append(vm_info)
            log.info(vm_info)

    log.info("Server has {} VM objects and {} templates.".format(vm_count, len(vms) - vm_count))
    log.debug(vm_details)
    return vm_details
```

Create VM

```python
def create_vm(session, os_name, template, new_vm_name, cpus="default", maxmemory="default",
              expiry_minutes=MAX_EXPIRY_MINUTES):
    error = ''
    vm_os_name = ''
    vm_ip_addr = ''
    prov_start_time = time.time()
    try:
        log.info("\n--- Creating VM: " + new_vm_name + " using " + template)
        # Pick the physical interface (PIF) with the lowest device name
        pifs = session.xenapi.PIF.get_all_records()
        lowest = None
        for pifRef in pifs.keys():
            if (lowest is None) or (pifs[pifRef]['device'] < pifs[lowest]['device']):
                lowest = pifRef
        log.debug("Choosing PIF with device: {}".format(pifs[lowest]['device']))
        ref = lowest
        mac = pifs[ref]['MAC']
        device = pifs[ref]['device']
        mode = pifs[ref]['ip_configuration_mode']
        ip_addr = pifs[ref]['IP']
        net_mask = pifs[ref]['netmask']  # the original listing read 'IP' here
        gateway = pifs[ref]['gateway']
        dns_server = pifs[ref]['DNS']
        log.debug("{},{},{},{},{},{},{}".format(mac, device, mode, ip_addr, net_mask, gateway,
                                                dns_server))
        # List all the VM objects
        vms = session.xenapi.VM.get_all_records()
        log.debug("Server has {} VM objects (this includes templates)".format(len(vms)))

        templates = []
        all_templates = []
        for vm in vms:
            record = vms[vm]
            res_type = "VM"
            if record["is_a_template"]:
                res_type = "Template"
                all_templates.append(vm)
                # Look for a given template
                if record["name_label"].startswith(template):
                    templates.append(vm)
                    log.debug(" Found %8s with name_label = %s" % (res_type,
                                                                   record["name_label"]))

        log.debug("Server has {} Templates and {} VM objects.".format(
            len(all_templates), len(vms) - len(all_templates)))

        log.debug("Choosing a {} template to clone".format(template))
        if not templates:
            log.error("Could not find any {} templates. Exiting.".format(template))
            sys.exit(1)

        template_ref = templates[0]
        log.debug(" Selected template: {}".format(
            session.xenapi.VM.get_name_label(template_ref)))

        # Retries when 169.x address received
        ipaddr_max_retries = 3
        retry_count = 1
        is_local_ip = True
        vm_ip_addr = ""
        while is_local_ip and retry_count != ipaddr_max_retries:
            log.info("Installing new VM from the template - attempt #{}".format(retry_count))
            vm = session.xenapi.VM.clone(template_ref, new_vm_name)

            network = session.xenapi.PIF.get_network(lowest)
            log.debug("Chosen PIF is connected to network: {}".format(
                session.xenapi.network.get_name_label(network)))
            # Move the cloned VM's virtual interface (VIF) to the chosen network
            vifs = session.xenapi.VIF.get_all()
            log.debug(("Number of VIFs=" + str(len(vifs))))
            for i in range(len(vifs)):
                vmref = session.xenapi.VIF.get_VM(vifs[i])
                a_vm_name = session.xenapi.VM.get_name_label(vmref)
                log.debug(str(i) + "." + session.xenapi.network.get_name_label(
                    session.xenapi.VIF.get_network(vifs[i])) + " " + a_vm_name)
                if a_vm_name == new_vm_name:
                    session.xenapi.VIF.move(vifs[i], network)

            log.debug("Adding non-interactive to the kernel commandline")
            session.xenapi.VM.set_PV_args(vm, "non-interactive")
            log.debug("Choosing an SR to instantiate the VM's disks")
            pool = session.xenapi.pool.get_all()[0]
            default_sr = session.xenapi.pool.get_default_SR(pool)
            default_sr = session.xenapi.SR.get_record(default_sr)
            log.debug("Choosing SR: {} (uuid {})".format(default_sr['name_label'],
                                                         default_sr['uuid']))
            log.debug("Asking server to provision storage from the template specification")
            description = new_vm_name + " from " + template + " on " + str(
                datetime.datetime.utcnow())
            session.xenapi.VM.set_name_description(vm, description)
            if cpus != "default":
                log.info("Setting cpus to " + cpus)
                session.xenapi.VM.set_VCPUs_max(vm, int(cpus))
                session.xenapi.VM.set_VCPUs_at_startup(vm, int(cpus))
            if maxmemory != "default":
                log.info("Setting memory to " + maxmemory)
                session.xenapi.VM.set_memory(vm, maxmemory)  # 8GB="8589934592" or 4GB="4294967296"
            session.xenapi.VM.provision(vm)
            log.info("Starting VM")
            session.xenapi.VM.start(vm, False, True)
            log.debug(" VM is booting")

            log.debug("Waiting for the installation to complete")

            # Get the OS Name and IPs
            log.info("Getting the OS Name and IP...")
            config = read_config()
            vm_network_timeout_secs = int(config.get("common", "vm.network.timeout.secs"))
            # Use a local name so that the module-level TIMEOUT_SECS default is still
            # readable when the configured value is not positive.
            timeout_secs = vm_network_timeout_secs if vm_network_timeout_secs > 0 else TIMEOUT_SECS

            log.info("Max wait time in secs for VM OS address is {0}".format(str(timeout_secs)))
            if "win" not in template:
                maxtime = time.time() + timeout_secs
                while read_os_name(session, vm) is None and time.time() < maxtime:
                    time.sleep(1)
                vm_os_name = read_os_name(session, vm)
                log.info("VM OS name: {}".format(vm_os_name))
            else:
                # TBD: Wait for network to refresh on Windows VM
                time.sleep(60)

            log.info("Max wait time in secs for IP address is " + str(timeout_secs))
            maxtime = time.time() + timeout_secs
            # Wait until IP is not None or 169.xx (when no IPs available, this is default)
            # and timeout is not reached.
            while (read_ip_address(session, vm) is None
                   or read_ip_address(session, vm).startswith('169')) \
                    and time.time() < maxtime:
                time.sleep(1)
            vm_ip_addr = read_ip_address(session, vm)
            log.info("VM IP: {}".format(vm_ip_addr))

            # Also treat a missing address (None after the timeout) as "no IP"
            if not vm_ip_addr or vm_ip_addr.startswith('169'):
                log.info("No Network IP available. Deleting this VM ... ")
                record = session.xenapi.VM.get_record(vm)
                power_state = record["power_state"]
                if power_state != 'Halted':
                    session.xenapi.VM.hard_shutdown(vm)
                delete_all_disks(session, vm)
                session.xenapi.VM.destroy(vm)
                time.sleep(5)
                is_local_ip = True
                retry_count += 1
            else:
                is_local_ip = False

        log.info("Final VM IP: {}".format(vm_ip_addr))
        # (The excerpt ends here; the rest of the function, including the handler
        # that closes this try block, is not shown.)
```

Delete VM

```python
def delete_vm(session, vm_name):
    log.info("Deleting VM: " + vm_name)
    delete_start_time = time.time()
    vm = session.xenapi.VM.get_by_name_label(vm_name)
    log.info("Number of VMs found with name - " + vm_name + " : " + str(len(vm)))
    for j in range(len(vm)):
        record = session.xenapi.VM.get_record(vm[j])
        power_state = record["power_state"]
        if power_state != 'Halted':
            # session.xenapi.VM.shutdown(vm[j])
            session.xenapi.VM.hard_shutdown(vm[j])

        # print_all_disks(session, vm[j])
        delete_all_disks(session, vm[j])

        session.xenapi.VM.destroy(vm[j])

        delete_end_time = time.time()
        delete_duration = round(delete_end_time - delete_start_time)

        # delete from CB: mark this VM's history document as deleted.
        # Kept inside the loop so that `record` always refers to the VM just removed.
        uuid = record["uuid"]
        doc_key = uuid
        cbdoc = CBDoc()
        doc_result = cbdoc.get_doc(doc_key)
        if doc_result:
            doc_value = doc_result.value
            doc_value["state"] = 'deleted'
            current_time = time.time()
            doc_value["deleted_time"] = current_time
            if doc_value["created_time"]:
                doc_value["live_duration_secs"] = round(current_time - doc_value["created_time"])
            doc_value["delete_duration_secs"] = delete_duration
            cbdoc.save_dynvm_doc(doc_key, doc_value)
```

Historic Usage of VMs

It is better to maintain the history of all the VMs created and terminated, along with other useful data. Here is an example of the JSON document stored in Couchbase, a free NoSQL database server. Insert a new document, keyed by the Xen VM UUID (`record["uuid"]` in the code), whenever a new VM is provisioned, and update the same document whenever the VM is terminated. This tracks the live usage time of the VM and how provisioning and termination are done by each user.

```python
record = session.xenapi.VM.get_record(vm)
uuid = record["uuid"]

# Save as doc in CB
state = "available"
username = new_vm_name
pool = "dynamicpool"
doc_value = {"ipaddr": vm_ip_addr, "origin": xen_host_description, "os": os_name,
             "state": state, "poolId": pool, "prevUser": "", "username": username,
             "ver": "12", "memory": memory_static_max, "os_version": vm_os_name,
             "name": new_vm_name, "created_time": prov_end_time,
             "create_duration_secs": create_duration, "cpu": vcpus, "disk": disks_info}
# doc_value["mac_address"] = mac_address
doc_key = uuid
cb_doc = CBDoc()
cb_doc.save_dynvm_doc(doc_key, doc_value)
```

```python
# The calls below follow the Couchbase Python SDK 2.x style API
from couchbase.cluster import Cluster, PasswordAuthenticator


class CBDoc:
    def __init__(self):
        config = read_config()
        self.cb_server = config.get("couchbase", "couchbase.server")
        self.cb_bucket = config.get("couchbase", "couchbase.bucket")
        self.cb_username = config.get("couchbase", "couchbase.username")
        self.cb_userpassword = config.get("couchbase", "couchbase.userpassword")
        try:
            self.cb_cluster = Cluster('couchbase://' + self.cb_server)
            self.cb_auth = PasswordAuthenticator(self.cb_username, self.cb_userpassword)
            self.cb_cluster.authenticate(self.cb_auth)
            self.cb = self.cb_cluster.open_bucket(self.cb_bucket)
        except Exception as e:
            log.error('Connection Failed: %s ' % self.cb_server)
            log.error(e)

    def get_doc(self, doc_key):
        try:
            return self.cb.get(doc_key)
        except Exception as e:
            log.error('Error while getting doc %s !' % doc_key)
            log.error(e)

    def save_dynvm_doc(self, doc_key, doc_value):
        try:
            log.info(doc_value)
            self.cb.upsert(doc_key, doc_value)
            log.info("%s added/updated successfully" % doc_key)
        except Exception as e:
            log.error('Document with key: %s saving error' % doc_key)
            log.error(e)
```
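Once history documents with these fields accumulate, usage can be summarized with a few lines of Python. The sketch below is an assumption about how such an analysis might look: it works on plain dictionaries instead of querying Couchbase, the function name `summarize_history` is hypothetical, and the sample values are made up.

```python
from collections import defaultdict


def summarize_history(docs):
    """Aggregate VM history documents per OS (illustrative only).

    Uses the "os", "create_duration_secs" and "live_duration_secs" fields
    saved by the service; VMs that are still running have no live duration
    yet and contribute 0 to the total.
    """
    totals = defaultdict(lambda: {"vms": 0, "create_secs": 0, "live_secs": 0})
    for doc in docs:
        entry = totals[doc["os"]]
        entry["vms"] += 1
        entry["create_secs"] += doc.get("create_duration_secs", 0)
        entry["live_secs"] += doc.get("live_duration_secs", 0)
    return {
        os_name: {
            "vms": entry["vms"],
            "avg_create_secs": entry["create_secs"] / entry["vms"],
            "total_live_secs": entry["live_secs"],
        }
        for os_name, entry in totals.items()
    }


# Made-up sample documents
sample_docs = [
    {"os": "win16", "create_duration_secs": 65, "live_duration_secs": 1748},
    {"os": "win16", "create_duration_secs": 75, "live_duration_secs": 252},
    {"os": "centos", "create_duration_secs": 40},  # still running
]
print(summarize_history(sample_docs))
```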

```json
"dynserver-pool": {
  "cpu": "6",
  "create_duration_secs": 65,
  "created_time": 1583518463.8943903,
  "delete_duration_secs": 5,
  "deleted_time": 1583520211.8498628,
  "disk": "75161927680",
  "ipaddr": "x.x.x.x",
  "live_duration_secs": 1748,
  "memory": "6442450944",
  "name": "Win2019-Server-1node-DynVM",
  "origin": "s827",
  "os": "win16",
  "os_version": "",
  "poolId": "dynamicpool",
  "prevUser": "",
  "state": "deleted",
  "username": "Win2019-Server-1node-DynVM",
  "ver": "12"
}
```

Configuration

The dynamic VM server manager service configuration, such as the Couchbase server, Xen Host servers, template details, default expiry, and network timeout values, can be maintained in a simple .ini file. When a new Xen Host is received, just add it as a separate section. The config is loaded dynamically, without restarting the dynamic VM server manager service.

Sample config file: .dynvmservice.ini

```ini
[couchbase]
couchbase.server=<couchbase-hostIp>
couchbase.bucket=<bucket-name>
couchbase.username=<username>
couchbase.userpassword=<password>

[common]
vm.expiry.minutes=720
vm.network.timeout.secs=400

[xenhost1]
host.name=<xenhostip1>
host.user=root
host.password=xxxx
host.storage.name=Local Storage 01
centos.template=tmpl-cnt7.7
centos7.template=tmpl-cnt7.7
windows.template=tmpl-win16dc - PATCHED (1)
centos8.template=tmpl-cnt8.0
oel8.template=tmpl-oel8
deb10.template=tmpl-deb10-too
debian10.template=tmpl-deb10-too
ubuntu18.template=tmpl-ubu18-4cgb
suse15.template=tmpl-suse15

[xenhost2]
host.name=<xenhostip2>
host.user=root
host.password=xxxx
host.storage.name=ssd
centos.template=tmpl-cnt7.7
windows.template=tmpl-win16dc - PATCHED (1)
centos8.template=tmpl-cnt8.0
oel8.template=tmpl-oel8
deb10.template=tmpl-deb10-too
debian10.template=tmpl-deb10-too
ubuntu18.template=tmpl-ubu18-4cgb
suse15.template=tmpl-suse15

[xenhost3]
...

[xenhost4]
...
```
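A configuration in this format can be read with Python's standard `configparser`. The sketch below is an assumption about how the service might do it, not its actual `read_config()`: it parses an inline sample (with placeholder host names) and finds the Xen Hosts that carry a template for a given OS, which also covers the case where a template exists only on some hosts.

```python
import configparser

# Inline sample in the same format; host names are placeholders
SAMPLE_CONFIG = """
[common]
vm.expiry.minutes=720
vm.network.timeout.secs=400

[xenhost1]
host.name=xenhost1.example
centos.template=tmpl-cnt7.7

[xenhost2]
host.name=xenhost2.example
centos.template=tmpl-cnt7.7
windows.template=tmpl-win16dc - PATCHED (1)
"""


def load_config(text):
    """Parse .ini text into a ConfigParser object."""
    config = configparser.ConfigParser()
    config.read_string(text)
    return config


def hosts_with_template(config, os_name):
    """Map each xenhost section that has '<os_name>.template' to the template name."""
    key = os_name + ".template"
    return {section: config.get(section, key)
            for section in config.sections()
            if section.startswith("xenhost") and config.has_option(section, key)}


config = load_config(SAMPLE_CONFIG)
print(config.getint("common", "vm.expiry.minutes"))
print(hosts_with_template(config, "windows"))
```

In a running service, calling `config.read(path)` on a fresh parser for each request is one simple way to pick up newly added Xen Host sections without a restart.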

Examples

Sample REST API calls using curl

```shell
$ curl 'http://127.0.0.1:5000/showall'
{
  "1": [
    {
      "memory_static_max": "6442450944",
      "name": "tunable-rebalance-out-Apr-29-13:43:59-7.0.0-19021",
      "name_description": "tunable-rebalance-out-Apr-29-13:43:59-7.0.0-19021 from tmpl-win16dc - PATCHED (1) on 2020-04-29 20:43:33.785778",
      "networkinfo": "172.23.137.20,172.23.137.20,fe80:0000:0000:0000:0585:c6e8:52f9:91d1",
      "power_state": "Running",
      "vcpus": "6"
    },
    {
      "memory_static_max": "6442450944",
      "name": "nserv-nserv-rebalanceinout_P0_Set1_compression-Apr-29-06:36:12-7.0.0-19022",
      "name_description": "nserv-nserv-rebalanceinout_P0_Set1_compression-Apr-29-06:36:12-7.0.0-19022 from tmpl-win16dc - PATCHED (1) on 2020-04-29 13:36:53.717776",
      "networkinfo": "172.23.136.142,172.23.136.142,fe80:0000:0000:0000:744d:fd63:1a88:2fa8",
      "power_state": "Running",
      "vcpus": "6"
    }
  ],
  "2": [
    ..
  ]
  "3": [
    ..
  ]
  "4": [
    ..
  ]
  "5": [
    ..
  ]
  "6": [
    ..
  ]
}

$ curl 'http://127.0.0.1:5000/getavailablecount/windows'
2
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
10
$ curl 'http://127.0.0.1:5000/getservers/demoserver?os=centos&count=3'
["172.23.137.73", "172.23.137.74", "172.23.137.75"]
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
7
$ curl 'http://127.0.0.1:5000/releaseservers/demoserver?os=centos&count=3'
[
  "demoserver1",
  "demoserver2",
  "demoserver3"
]
$ curl 'http://127.0.0.1:5000/getavailablecount/centos'
10
$
```

Jenkins jobs with single VM

```shell
curl -s -o ${BUILD_TAG}_vm.json "http://<host:port>/getservers/${BUILD_TAG}?os=windows&format=detailed"
VM_IP_ADDRESS="`cat ${BUILD_TAG}_vm.json |egrep ${BUILD_TAG}|cut -f2 -d':'|xargs`"
if [[ $VM_IP_ADDRESS =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
    echo Valid IP received
else
    echo NOT a valid IP received
    exit 1
fi
```

Jenkins jobs with multiple VMs needed

```shell
curl -s -o ${BUILD_TAG}.json "${SERVER_API_URL}/getservers/${BUILD_TAG}?os=${OS}&count=${COUNT}&format=detailed"
cat ${BUILD_TAG}.json
VM_IP_ADDRESS="`cat ${BUILD_TAG}.json |egrep ${BUILD_TAG}|cut -f2 -d':'|xargs|sed 's/,//g'`"
echo $VM_IP_ADDRESS
ADDR=()
INDEX=1
for IP in `echo $VM_IP_ADDRESS`
do
    if [[ $IP =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
        echo Valid IP=$IP received
        ADDR[$INDEX]=${IP}
        INDEX=`expr ${INDEX} + 1`
    else
        echo NOT a valid IP=$IP received
        exit 1
    fi
done

echo ${ADDR[1]}
echo ${ADDR[2]}
echo ${ADDR[3]}
echo ${ADDR[4]}
```

Key considerations

Here are a few observations I noted during the process; handling them makes the service more reliable.

- Handle storage names/IDs that differ among Xen Hosts.

- Keep track of the VM storage device name in the service's input config file.

- Handle templates that are available only on some Xen Hosts while provisioning.

- When no network IPs are available, the Xen APIs return the default 169.254.xx.yy address on Windows. Wait until a non-169 address is received or the timeout is reached.

- Release servers should ignore the OS template, as some templates might not exist on every Xen Host.

- Support provisioning on a specific, given Xen Host reference.

- Handle the case where no IPs are available or some of the created VMs do not get network IPs.

- Plan for a separate subnet for the Xen Hosts targeted for dynamic VMs. The default DHCP lease expiry might be in days (say, 7 days), so IPs held by terminated VMs are not recycled quickly and no new IPs are provided.

- Make the capacity check count in-progress requests as reserved, so that it reports a lower count than the full capacity at that moment. Otherwise, both in-progress and incoming requests might run into issues. Keep a buffer of one or two VMs (CPUs/memory/disk sizes) while creating VMs and checking for parallel requests.
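For the 169.254.xx.yy consideration above, the standard library `ipaddress` module offers a stricter check than testing whether the address string starts with "169", which would also reject routable addresses such as 169.1.2.3. The helper below is a sketch with an assumed name, `is_usable_ip`; it is not taken from the service code.

```python
import ipaddress


def is_usable_ip(addr):
    """Return True if addr is a parseable, non link-local IP address.

    Link-local addresses (169.254.0.0/16 for IPv4, fe80::/10 for IPv6) are
    what a VM typically reports when it did not receive a DHCP lease.
    """
    if not addr:
        return False
    try:
        ip = ipaddress.ip_address(addr)
    except ValueError:
        return False
    return not (ip.is_link_local or ip.is_loopback or ip.is_unspecified)


for candidate in ["172.23.137.73", "169.254.10.20", "169.1.2.3", None]:
    print(candidate, is_usable_ip(candidate))
```

A wait loop can then poll until `is_usable_ip` returns True or the timeout is reached.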

References

Some of the key references that help while creating the dynamic VM server manager service.

- https://www.couchbase.com/downloads

- https://wiki.xenproject.org/wiki/XAPI_Command_Line_Interface

- https://xapi-project.github.io/xen-api/

- https://docs.citrix.com/en-us/citrix-hypervisor/command-line-interface.html

- https://github.com/xapi-project/xen-api-sdk/tree/master/python/samples

- https://www.citrix.com/community/citrix-developer/citrix-hypervisor-developer/citrix-hypervisor-developing-products/citrix-hypervisor-staticip.html

- https://docs.ansible.com/ansible/latest/modules/xenserver_guest_module.html

- https://github.com/terra-farm/terraform-provider-xenserver

- https://github.com/xapi-project/xen-api/blob/master/scripts/examples/python/renameif.py

- https://xen-orchestra.com/forum/topic/191/single-device-not-reporting-ip-on-dashboard/14

- https://xen-orchestra.com/blog/xen-orchestra-from-the-cli/

- https://support.citrix.com/article/CTX235403

Hope you had a good reading time!

Disclaimer: Please view this as a reference if you are dealing with Xen Hosts. Feel free to share if you learned something new that can help us. Your positive feedback is appreciated!

Thanks to Raju Suravarjjala, Ritam Sharma, Wayne Siu, Tom Thrush, James Lee for their help during the process.