What is federated learning?

Federated learning is a machine learning (ML) approach that enables multiple devices or systems to train a shared model collaboratively without exchanging raw data. Instead of sending data to a central server, each participant, such as a mobile device, edge server, or organization, trains the model locally on its data and sends only the model updates (e.g., gradients or weights) to a central coordinator. These updates are then aggregated to improve the global model, preserving data privacy and reducing bandwidth usage.

This decentralized approach is ideal for scenarios where data privacy, security, or data locality are concerns (e.g., healthcare, finance, or personalized mobile services). By keeping data on-device or on-premise, federated learning helps organizations comply with regulations while still benefiting from collective learning across distributed datasets.

How does federated learning work?

The federated learning process typically unfolds in these steps:

- Model initialization: A global model is initialized on a central server or coordinator. This can be a pre-trained model or a randomly initialized one.

- Local training: A subset of clients (e.g., smartphones, edge nodes, or hospitals) receives the global model, and each selected client trains it locally on its own data, typically for one or more epochs, so the model improves based on that client's unique dataset.

- Model update sharing: Instead of uploading their raw data, clients send the updated model parameters back to the central server. These updates are often encrypted or obfuscated to enhance privacy.

- Aggregation: The central server aggregates the received updates using a technique such as Federated Averaging (FedAvg), which computes a weighted average of the client models, to produce a new version of the global model.

- Model distribution: The updated global model is sent back to the clients, and the cycle repeats over multiple rounds until the model converges or reaches a desired level of accuracy.

This iterative loop enables learning from decentralized data while maintaining data locality. To further enhance privacy, federated learning is often combined with techniques like differential privacy and secure multi-party computation. The result is a framework for collaborative AI development in which each participant keeps ownership and control of its raw data.
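The five steps can be simulated end to end in a few lines. The sketch below is a toy, single-process Python illustration under stated assumptions, not a production implementation: the model is a two-parameter linear regression (y = w * x + b), the client datasets are synthetic and noiseless, and the helper names (`local_train`, `fed_avg`, `run_federated_training`, `make_client`) are hypothetical. Aggregation uses FedAvg-style weighting by each client's example count.

```python
import random


def local_train(weights, data, epochs=5, lr=0.1):
    """Local training: full-batch gradient descent on one client's private data.

    `weights` is [w, b] for the toy model y = w * x + b. Only the updated
    weights leave this function; the (x, y) pairs never do.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def fed_avg(updates):
    """Aggregation: average client weights, weighted by each client's example count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(weights[i] * n for weights, n in updates) / total for i in range(dim)]


def run_federated_training(clients, rounds=50, clients_per_round=3, seed=0):
    rng = random.Random(seed)
    global_weights = [0.0, 0.0]  # 1. model initialization (here: zeros)
    for _ in range(rounds):
        selected = rng.sample(clients, clients_per_round)
        # 2-3. each selected client trains locally and shares only (weights, example count)
        updates = [(local_train(global_weights, data), len(data)) for data in selected]
        # 4. the server aggregates; 5. the new global model is used in the next round
        global_weights = fed_avg(updates)
    return global_weights


def make_client(rng, n, true_w=3.0, true_b=1.0):
    """Hypothetical synthetic dataset: n noiseless points on y = 3x + 1."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, true_w * x + true_b) for x in xs]


if __name__ == "__main__":
    rng = random.Random(42)
    clients = [make_client(rng, n) for n in (20, 50, 80, 30)]  # unequal client sizes
    w, b = run_federated_training(clients)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

Because every client's data follows the same line, the global weights should approach w = 3 and b = 1 even though each round uses only three of the four clients. Real clients would run on separate machines and exchange updates over a network, typically through a framework rather than direct function calls.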

Types of federated learning

Federated learning comes in several forms, each designed to suit different data distribution scenarios and organizational setups. Understanding these types will help you determine which approach aligns best with your privacy, infrastructure, and collaboration goals.

- Horizontal federated learning: This type is used when participants have datasets with the same feature space but different user samples. For example, two hospitals may collect the same types of patient data (age, symptoms, diagnosis) but serve different patient populations. Horizontal federated learning enables them to train a model collaboratively without sharing individual records.

- Vertical federated learning: In this case, participants have data on the same users but with different sets of features. For instance, a bank and an e-commerce platform might serve the same customers, but one has financial data while the other has purchase history. Vertical federated learning allows joint model training by securely aligning data across shared users.

- Federated transfer learning: When datasets differ in samples and features, federated transfer learning bridges the gap using transfer learning techniques. This is useful when participants have limited overlapping data but still want to benefit collaboratively from each other’s knowledge, which is common in cross-industry collaborations.

- Cross-device vs. cross-silo: Federated learning can also be classified by scale and trust. Cross-device federated learning involves millions of edge devices like smartphones and wearables, each contributing small amounts of data. Conversely, cross-silo federated learning involves fewer, more stable participants, such as enterprises, hospitals, or banks, with larger datasets and consistent infrastructure.
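A toy way to picture the difference between horizontal and vertical federated learning is to look at which rows (people) and columns (features) each party holds. The records, IDs, and helper functions below are hypothetical. The plain set intersection in `aligned_ids` is for illustration only; vertical federated learning systems typically use private set intersection so that neither party reveals the IDs the other does not share.

```python
# Horizontal FL: same features, different people (e.g., two hospitals).
hospital_a = {"p1": {"age": 54, "symptom_score": 7}, "p2": {"age": 61, "symptom_score": 3}}
hospital_b = {"p7": {"age": 47, "symptom_score": 5}, "p8": {"age": 39, "symptom_score": 2}}

# Vertical FL: overlapping people, different features (e.g., a bank and a retailer).
bank = {"c1": {"income": 52000}, "c2": {"income": 71000}, "c3": {"income": 38000}}
retailer = {"c2": {"purchases": 14}, "c3": {"purchases": 2}, "c4": {"purchases": 9}}


def feature_space(party):
    """Set of feature names a party holds across all of its records."""
    return set().union(*(row.keys() for row in party.values()))


def aligned_ids(party_a, party_b):
    """IDs held by both parties, i.e. the rows a vertical FL model can train on.

    Illustration only: a real system would compute this with private set
    intersection instead of exchanging ID lists in the clear.
    """
    return sorted(party_a.keys() & party_b.keys())


if __name__ == "__main__":
    print("horizontal, same features?", feature_space(hospital_a) == feature_space(hospital_b))
    print("horizontal, shared patients:", aligned_ids(hospital_a, hospital_b))
    print("vertical, bank features:", feature_space(bank), "retailer features:", feature_space(retailer))
    print("vertical, aligned customers:", aligned_ids(bank, retailer))
```

In the horizontal case the parties share a feature space but no patients; in the vertical case they share customers c2 and c3 but no features, so only those two customers can contribute to a jointly trained model.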

Federated learning algorithms

Federated learning algorithms govern how local model updates are combined and optimized across distributed clients. They must account for data heterogeneity, limited communication bandwidth, and potentially unreliable clients. Here are some commonly used approaches:

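- FedAvg: The most widely used algorithm, FedAvg lets clients train the model locally for multiple epochs and then send only the updated weights to the server. The server averages these weights, weighting each client by its number of training examples, to refine the global model. Because clients do more local work between rounds, FedAvg needs fewer communication rounds than exchanging a gradient after every step, which is how it balances performance and communication efficiency.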

- FedProx: An extension of FedAvg, FedProx adds a proximal term to each client's local objective that penalizes how far the local model moves from the current global model. This limits client drift caused by data heterogeneity and improves convergence when client datasets vary significantly.

- Secure aggregation: This is not a training algorithm per se, but a cryptographic technique often paired with others. Secure aggregation allows the server to compute the average of local updates without learning any individual participant’s update, adding an extra layer of privacy.

- Adaptive federated optimization: Methods such as FedAdagrad, FedAdam, and FedYogi bring adaptive optimizers (Adagrad, Adam, and Yogi) into the federated setting by applying them on the server, treating the averaged client update as a pseudo-gradient. This can improve convergence and help handle non-IID (non-independent and identically distributed) data across clients.
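For readers who want the underlying update rules, the following is a simplified summary of FedAvg aggregation, the FedProx local objective, and a server-side Adam-style update as used in FedAdam. Here $w^t$ is the global model at round $t$, $S_t$ is the set of clients selected in that round, $n_k$ is the number of training examples on client $k$, $w_k^{t+1}$ is client $k$'s locally trained model, $F_k$ is client $k$'s local loss, $\mu \ge 0$ controls the strength of the FedProx proximal term, and $\eta, \beta_1, \beta_2, \tau$ are server-side optimizer hyperparameters. The squaring, square root, and division in the last block are applied element by element.

FedAvg aggregation:

$$
w^{t+1} = \sum_{k \in S_t} \frac{n_k}{n}\, w_k^{t+1}, \qquad n = \sum_{k \in S_t} n_k
$$

FedProx local objective on client $k$:

$$
\min_{w}\; h_k(w; w^t) = F_k(w) + \frac{\mu}{2} \lVert w - w^t \rVert^2
$$

FedAdam server update:

$$
\begin{aligned}
\Delta^t &= \frac{1}{|S_t|} \sum_{k \in S_t} \left( w_k^{t+1} - w^t \right) \\
m^t &= \beta_1 m^{t-1} + (1 - \beta_1)\, \Delta^t \\
v^t &= \beta_2 v^{t-1} + (1 - \beta_2)\, (\Delta^t)^2 \\
w^{t+1} &= w^t + \eta \, \frac{m^t}{\sqrt{v^t} + \tau}
\end{aligned}
$$

Setting $\mu = 0$ recovers the plain local objective $F_k(w)$ used by FedAvg, which is why FedProx is described as an extension of FedAvg.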

Choosing the right algorithm depends on your use case, the nature of your data, and the trade-offs you’re willing to make between speed, accuracy, and privacy.
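Secure aggregation can be illustrated with the pairwise-masking idea behind common protocols. Each pair of clients shares a random mask that one adds and the other subtracts, so individual masked updates look random but the masks cancel in the sum. The sketch below is a hypothetical, single-process Python illustration. It encodes floats as fixed-point integers modulo 2^32, uses one seeded generator in place of the pairwise key agreement a real protocol would use, and does not handle clients that drop out mid-round, which production protocols address with secret sharing.

```python
import random

MODULUS = 2**32  # all masked arithmetic happens modulo this ring size
SCALE = 10**4    # fixed-point scale: floats are encoded with 4 decimal places


def encode(values):
    """Encode floats as fixed-point integers in [0, MODULUS)."""
    return [round(v * SCALE) % MODULUS for v in values]


def decode(values):
    """Map integers back to signed fixed-point values, then to floats."""
    return [(v - MODULUS if v >= MODULUS // 2 else v) / SCALE for v in values]


def pairwise_masks(client_ids, dim, seed=0):
    """Hypothetical stand-in for key agreement: one random mask per client pair.

    In a real protocol each pair derives its mask privately; a single seeded
    generator is used here only so the example is reproducible.
    """
    rng = random.Random(seed)
    masks = {}
    for idx, i in enumerate(client_ids):
        for j in client_ids[idx + 1:]:
            masks[(i, j)] = [rng.randrange(MODULUS) for _ in range(dim)]
    return masks


def mask_update(client_id, update, masks):
    """Client side: add a pair's mask if listed first in the pair, subtract it if listed second."""
    masked = encode(update)
    for (i, j), mask in masks.items():
        if client_id == i:
            masked = [(m + r) % MODULUS for m, r in zip(masked, mask)]
        elif client_id == j:
            masked = [(m - r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates):
    """Server side: sum masked vectors; the pair masks cancel, leaving only the total."""
    dim = len(masked_updates[0])
    total = [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
    return decode(total)


if __name__ == "__main__":
    updates = {"a": [0.5, -1.25], "b": [0.25, 2.0], "c": [-0.1, 0.3]}
    ids = sorted(updates)
    masks = pairwise_masks(ids, dim=2)
    masked = [mask_update(cid, updates[cid], masks) for cid in ids]
    total = aggregate(masked)
    print("sum of updates:", total)
    print("average update:", [t / len(ids) for t in total])
```

The server only ever handles the masked vectors and their total, from which it can compute the average update without seeing any single client's contribution in the clear.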

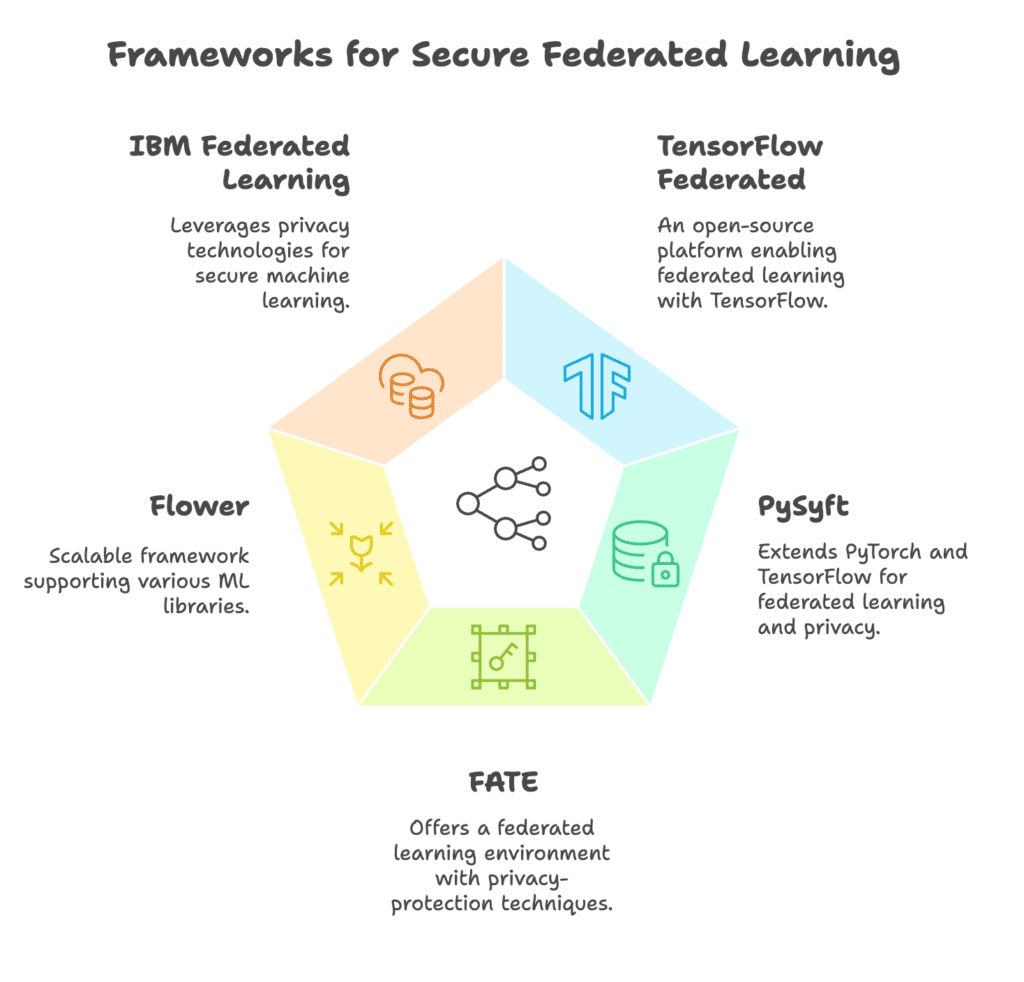

Federated learning frameworks

Several organizations and developers have created federated learning frameworks that handle challenges like client-server coordination, secure model aggregation, and scalable deployment, so teams can focus on model design and experimentation rather than infrastructure. Here are some of the most widely used federated learning frameworks:

- IBM Federated Learning: IBM’s solution enables secure, decentralized model training across multiple data sources by coordinating model updates without sharing sensitive data between participants.

- TensorFlow Federated (TFF): Developed by Google, TFF is a flexible framework for experimenting with federated learning algorithms using TensorFlow. It supports simulation of federated environments and provides building blocks for implementing custom aggregation and training strategies.

- PySyft: Created by OpenMined, PySyft is a Python library focused on privacy-preserving machine learning. It supports federated learning, differential privacy, and encrypted computation. PySyft integrates with PyTorch and is designed for building decentralized AI systems with strong privacy guarantees.

- FATE (Federated AI Technology Enabler): Created by WeBank, FATE offers a federated learning environment with privacy-protection techniques like homomorphic encryption and secure multi-party computation.

- Flower (flwr): Flower is a highly customizable, lightweight, federated learning framework that works with any ML library (like PyTorch, TensorFlow, or scikit-learn). Its flexible architecture makes it easy to prototype and scale federated learning systems in academic and industrial settings.

Federated learning applications across industries

As regulatory requirements become stricter and decentralized data grows in volume, federated learning provides a viable, scalable approach to AI innovation that respects privacy, strengthens compliance, and encourages collaboration across organizations. By enabling collaborative model training without exposing raw data, federated learning supports numerous use cases across industries.

Healthcare

Hospitals and research institutions use federated learning to build predictive models from distributed patient data without compromising privacy. Applications include early disease detection, personalized treatment recommendations, and medical imaging diagnostics, all trained on data that never leaves the institution.

Finance

Banks and insurance providers use federated learning to detect fraud, assess credit risk, and improve personalization while keeping customer data siloed and compliant with regulations like GDPR and HIPAA. It allows multiple institutions to collaborate without exposing sensitive financial records.

Telecommunications

Mobile network operators use federated learning to improve user experience through on-device models that adapt to usage patterns, optimize network performance, and enable predictive maintenance without transmitting customer data to the cloud.

Retail and e-commerce

Federated learning supports collaborative personalization and recommendation engines across retailers or platforms, allowing them to improve customer insights while keeping individual browsing and purchase behavior confidential.

Manufacturing and IoT

In industrial environments, federated learning allows edge devices, like sensors and smart machines, to train models for anomaly detection, predictive maintenance, and quality control using localized data, reducing latency and bandwidth while protecting intellectual property (IP) and operational data.

Autonomous vehicles

Auto manufacturers use federated learning to train models for navigation, object recognition, and driver behavior based on data collected by distributed fleets. This supports continuous learning across vehicles while keeping location and usage data secure.

Benefits of federated learning

Federated learning offers an alternative to traditional, centralized machine learning by enabling model training across distributed data sources while keeping the data local. This approach has benefits like:

Data privacy and security

One of the core advantages of federated learning is that raw data never leaves the source device or system. This minimizes exposure to breaches, supports compliance with privacy regulations like GDPR and HIPAA, and reduces the risk associated with centralized data storage.

Compliance with data residency regulations

Federated learning allows organizations to train models across regions or jurisdictions without transferring data across borders. This is especially important in industries like finance and healthcare, where data residency rules restrict how and where sensitive data can be processed.

Reduced data transfer and bandwidth costs

By transmitting only model updates rather than entire datasets, federated learning can substantially reduce the amount of data sent over networks, particularly when local datasets are much larger than the model. This makes it attractive for environments with limited bandwidth or high data volumes, such as edge computing or IoT deployments.

Improved personalization

Because federated models can learn directly from user behavior on devices, they can deliver highly personalized experiences, such as next-word prediction or content recommendations, without compromising user privacy.

Scalability across edge devices

Federated learning is designed to work across various devices, from smartphones to sensors to enterprise servers. This capability makes it well-suited for edge computing scenarios where distributed training at scale is essential.

Collaboration without data sharing

Organizations unable or unwilling to share data, such as hospitals, banks, or competing businesses, can still collaborate on joint machine learning initiatives. Federated learning allows them to build more accurate models collectively while preserving data sovereignty.

By addressing privacy, bandwidth, and data access challenges, federated learning opens up new opportunities for innovation in sectors where data is distributed, sensitive, or tightly regulated.

Challenges of federated learning

While federated learning introduces a transformative approach to privacy-preserving AI, it also brings a unique set of technical and operational challenges that can complicate large-scale implementation. Here are some of the issues you might run into:

Data heterogeneity

In federated learning, data remains decentralized and is often collected across diverse devices, environments, or organizations. This frequently results in non-IID data (for example, one client may see mostly a few classes while another sees a very different mix), which can degrade model performance or lead to bias if not properly addressed.
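When experimenting with federated algorithms, non-IID data is often simulated by giving each client only a few classes. The sketch below shows one common approach: sort examples by label, cut them into equal shards, and deal a few shards to each client. The function name and parameters are hypothetical, and the labels are a made-up toy dataset.

```python
import random
from collections import Counter


def label_skew_partition(labels, num_clients, shards_per_client=2, seed=0):
    """Simulate non-IID clients: sort examples by label, cut into shards, deal shards out.

    Because each shard holds (mostly) a single label, each client ends up
    seeing only a few classes. Leftover examples that don't fill a whole
    shard are dropped in this sketch.
    """
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(order) // num_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    random.Random(seed).shuffle(shards)
    return [
        [i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client] for i in shard]
        for c in range(num_clients)
    ]


if __name__ == "__main__":
    labels = [i % 10 for i in range(1000)]  # toy dataset: 10 balanced classes
    clients = label_skew_partition(labels, num_clients=10)
    for c, idx in enumerate(clients[:3]):
        print(f"client {c}: {dict(Counter(labels[i] for i in idx))}")
```

With 10 balanced classes and two shards per client, each client ends up with examples from at most two classes, the kind of skew that can slow convergence of algorithms like FedAvg.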

System and device variability

Client devices vary widely when it comes to computing power, connectivity, and availability. This makes it difficult to coordinate training rounds consistently, especially in cross-device scenarios where some clients may drop out or be unavailable intermittently.
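A common way to cope with unreliable clients is to sample more clients than strictly needed and aggregate only the updates that arrive, skipping the round if too few report back. The sketch below simulates that pattern with random dropouts; the function name, dropout probability, and thresholds are hypothetical. Frameworks expose similar controls; for example, Flower's FedAvg strategy accepts a `min_fit_clients` setting.

```python
import random


def select_and_collect(client_ids, clients_per_round, dropout_prob, min_reports, rng):
    """One coordination round with unreliable clients (all names are hypothetical).

    The server samples clients, keeps only those that report back, and
    returns None (skip the round) if fewer than `min_reports` succeeded.
    """
    selected = rng.sample(client_ids, min(clients_per_round, len(client_ids)))
    # Each selected client independently drops out (offline, low battery, etc.) with dropout_prob.
    reported = [cid for cid in selected if rng.random() >= dropout_prob]
    return reported if len(reported) >= min_reports else None


if __name__ == "__main__":
    rng = random.Random(7)
    clients = [f"device-{i}" for i in range(1000)]
    results = [select_and_collect(clients, 20, dropout_prob=0.3, min_reports=12, rng=rng)
               for _ in range(100)]
    skipped = sum(r is None for r in results)
    print(f"rounds skipped for too few reports: {skipped} of 100")
```

Raising `clients_per_round` relative to `min_reports` makes skipped rounds rarer at the cost of more work per round.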

Communication overhead

While federated learning reduces the need to share raw data, it introduces frequent transmission of model updates between clients and a central server. This can create bandwidth bottlenecks, particularly when dealing with large models or resource-constrained devices.
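A back-of-the-envelope calculation shows how quickly update traffic adds up. The sketch assumes each selected client downloads the full model and uploads a full-size update every round, with 4 bytes per parameter (float32); the model size, client count, and number of rounds in the example are hypothetical.

```python
def round_traffic_bytes(num_params, clients_per_round, bytes_per_param=4):
    """Rough traffic for one round in which every selected client downloads
    the full global model and uploads a full-size update.

    Ignores compression, encryption, and protocol overhead, so treat the
    result as an order-of-magnitude estimate.
    """
    per_transfer = num_params * bytes_per_param
    download = per_transfer * clients_per_round
    upload = per_transfer * clients_per_round
    return download, upload


if __name__ == "__main__":
    # Hypothetical setup: 10 million float32 parameters, 100 clients per round, 500 rounds.
    down, up = round_traffic_bytes(10_000_000, 100)
    print(f"per round: {down / 1e9:.1f} GB down, {up / 1e9:.1f} GB up")
    print(f"over 500 rounds: {(down + up) * 500 / 1e12:.1f} TB total")
```

For that setup, each client transfers 40 MB in each direction per round, and the server handles 4 GB down and 4 GB up per round, or 4 TB over 500 rounds, before any compression.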

Privacy and security risks

Although data isn’t directly shared, model updates can leak sensitive information through inference or reconstruction attacks. Implementing robust defenses like differential privacy, secure aggregation, or homomorphic encryption adds complexity and computational cost.
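One widely used defense combines clipping with calibrated noise. Each client update's norm is clipped to bound its influence, and Gaussian noise is added to the sum before averaging, in the style of differentially private FedAvg. The sketch below shows only those mechanics; the function names and parameter values are hypothetical. Turning the noise level into a formal (ε, δ) privacy guarantee requires a privacy accountant, which is not included.

```python
import math
import random


def l2_norm(vec):
    return math.sqrt(sum(v * v for v in vec))


def clip_update(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    if norm <= clip_norm:
        return list(update)
    return [v * clip_norm / norm for v in update]


def dp_average(updates, clip_norm, noise_multiplier, rng):
    """Clip each client update, sum, add Gaussian noise, then average.

    Noise standard deviation is noise_multiplier * clip_norm (the Gaussian
    mechanism). No privacy accounting is done here.
    """
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    noisy_sum = [sum(u[k] for u in clipped) + rng.gauss(0.0, noise_multiplier * clip_norm)
                 for k in range(dim)]
    return [s / len(updates) for s in noisy_sum]


if __name__ == "__main__":
    rng = random.Random(0)
    updates = [[3.0, 4.0], [0.3, 0.4], [-0.2, 0.1]]
    print(dp_average(updates, clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Larger `noise_multiplier` values give stronger privacy at the cost of noisier, slower-converging training, which is one concrete form of the cost that privacy defenses add.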

Model convergence and optimization

Training models across heterogeneous, distributed environments can make convergence slower and less stable. Ensuring consistent performance requires specialized optimization techniques and thoughtful aggregation strategies.

Debugging and observability

With no centralized dataset to inspect, identifying the root cause of poor performance, anomalies, or failures becomes more difficult. Developers should build tooling to monitor client behavior, data drift, and update quality in real time.
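As a starting point for such tooling, the sketch below flags clients whose update norm is unusually far from the rest of the round. It uses a robust modified z-score based on the median absolute deviation (MAD). The function names, threshold, and sample updates are hypothetical; in practice you would log these statistics per round alongside metrics such as participation and validation loss.

```python
import math
import statistics


def update_norm(update):
    """L2 norm of one client's model update vector."""
    return math.sqrt(sum(v * v for v in update))


def flag_outlier_clients(updates, threshold=3.5):
    """Return IDs of clients whose update norm is unusually far from the median.

    Uses the modified z-score 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation; 3.5 is a commonly used cutoff. A flag is a
    prompt to investigate (bug, corrupted data, or a malicious client), not proof.
    """
    norms = {cid: update_norm(u) for cid, u in updates.items()}
    median = statistics.median(norms.values())
    mad = statistics.median(abs(n - median) for n in norms.values())
    if mad == 0:
        return []  # most norms equal the median; this sketch reports nothing in that case
    return sorted(cid for cid, n in norms.items() if 0.6745 * abs(n - median) / mad > threshold)


if __name__ == "__main__":
    round_updates = {
        "c1": [1.0, 0.0], "c2": [0.0, 1.1], "c3": [0.9, 0.0],
        "c4": [1.05, 0.0], "c5": [9.0, 0.0],
    }
    print("flagged:", flag_outlier_clients(round_updates))
```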

Despite these challenges, ongoing research and advances in federated learning frameworks make it increasingly viable for production use. Careful planning, privacy safeguards, and thoughtful model architecture can help mitigate many of these issues.

How to implement federated learning

Implementing federated learning involves more than just training a model; it’s also about setting up secure, decentralized learning across multiple clients. Whether you’re working across mobile devices, edge sensors, or organizational silos, the process requires careful design and the right tooling. Here are the main steps involved:

- Define the use case and participants: Start by identifying the problem you’re trying to solve and the entities that will participate in training. These could be user devices (cross-device learning) or multiple organizations (cross-silo learning). Understanding your participants’ infrastructure, data types, and constraints will inform architecture and strategy.

- Prepare a base model: Develop or choose a machine learning model architecture that fits your problem domain. The initial model can be pre-trained on public or synthetic data or randomly initialized, giving clients a starting point for federated updates.

- Set up the environment: Implement or adopt a federated learning framework (e.g., TensorFlow Federated, PySyft, or Flower) to manage client-server communication, model synchronization, and update aggregation. Set up the orchestration server to handle model distribution and collect updates.

- Distribute the model to clients: Send the base model to participating clients. Each client will train the model locally using its private data for a predefined number of epochs or steps.

- Perform local training: Run local model training on each client while keeping the raw data on-device or in-place. After training, only the model updates (e.g., gradients or weights) will be sent back to the central server.

- Aggregate model updates: Apply an aggregation algorithm (commonly FedAvg) on the server to combine client updates. This step may include filtering, weighting, or applying privacy-preserving techniques like secure aggregation or differential privacy.

- Iterate and repeat: Repeat the training process across multiple rounds. The model will be updated and redistributed with each iteration to clients for further refinement, gradually improving performance.

- Monitor, evaluate, and deploy: Regularly assess the performance of the global model using a validation dataset. Monitor key metrics such as model accuracy, client participation rates, and data drift. When the model reaches the target performance level, deploy it to production.

- Secure and maintain: Throughout implementation, enforce strong security and privacy practices. Use encryption, authentication, and audit mechanisms to protect the integrity of the training process and ensure trust among participants.
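The iterate-and-evaluate steps above depend on a few run-level settings and a stopping rule. The sketch below captures them in a hypothetical configuration class and a `should_stop` helper that ends training when a target validation score is reached, the round budget runs out, or the score stops improving. All names, defaults, and scores are illustrative assumptions, not any framework's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FederatedRunConfig:
    """Hypothetical knobs a federated training run typically needs to pin down."""
    num_rounds: int = 100         # upper bound on training rounds
    clients_per_round: int = 10   # clients sampled for each round
    min_reports: int = 8          # minimum successful reports needed to aggregate a round
    local_epochs: int = 1         # local passes over each client's data
    target_metric: float = 0.90   # validation score at which to stop and deploy
    patience: int = 5             # rounds without improvement before stopping

    def __post_init__(self):
        if not 0 < self.min_reports <= self.clients_per_round:
            raise ValueError("min_reports must be between 1 and clients_per_round")


def should_stop(history, config):
    """Stop on target reached, round budget exhausted, or no improvement for `patience` rounds.

    `history` holds one validation score per completed round (higher is better).
    """
    if not history:
        return False
    if history[-1] >= config.target_metric or len(history) >= config.num_rounds:
        return True
    best_round = max(range(len(history)), key=history.__getitem__)  # earliest best on ties
    return len(history) - 1 - best_round >= config.patience


if __name__ == "__main__":
    config = FederatedRunConfig(target_metric=0.9, patience=3)
    history = []
    for score in [0.62, 0.74, 0.81, 0.85, 0.84, 0.86, 0.91]:  # illustrative scores
        history.append(score)
        if should_stop(history, config):
            print(f"stopping after round {len(history)} with score {score}")
            break
```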

10 key takeaways and resources

To help solidify your understanding of federated learning, here are 10 key takeaways regarding its core concepts, benefits, and challenges:

- Federated learning enables model training without sharing raw data, preserving privacy and reducing data transfer by keeping data local.

- It operates through a cyclical process involving initializing a global model, local client training, sharing updates, server aggregation, and model redistribution.

- There are multiple types of federated learning, including horizontal, vertical, and federated transfer learning, each suited to different data distribution scenarios.

- Federated learning algorithms like FedAvg and FedProx govern how updates are aggregated and optimized, balancing model performance, communication efficiency, and robustness to data and system heterogeneity.

- Frameworks such as IBM Federated Learning, TensorFlow Federated, and Flower simplify deployment by managing orchestration, security, and scalability.

- Federated learning is used across industries, including healthcare, finance, telecom, retail, and autonomous vehicles, supporting privacy-sensitive innovation.

- The approach offers major benefits, such as data privacy, reduced bandwidth costs, compliance with data residency laws, and enhanced personalization.

- Challenges include data heterogeneity, client variability, communication overhead, and potential privacy risks from shared model updates.

- Implementing federated learning requires a structured approach, including defining participants, setting up infrastructure, selecting aggregation strategies, and securing the process.

- With the right tools and safeguards, federated learning can be deployed in production as a scalable, privacy-conscious alternative to centralized machine learning.

To continue your AI journey, review these resources from Couchbase and the federated learning frameworks listed in this blog post:

Couchbase resources

Federated learning framework resources

FAQs

What is a federated learning model? A federated learning model is an ML model trained on decentralized data across multiple clients. It evolves by aggregating updates from local models trained independently on each client’s data.

What is an example of federated learning? A common example of federated learning is predictive text on smartphones, where the keyboard model learns from user typing behavior on-device and sends only updates (not the typed text) to improve the global model shared across users.

What is the difference between federated learning and machine learning? Federated learning is itself a form of machine learning; what differs is where the data lives during training. Traditional machine learning centralizes data for training, whereas federated learning keeps data decentralized and trains models across distributed devices or systems. This makes federated learning better suited for scenarios involving sensitive or siloed data.

What is the difference between federated learning and meta learning? Federated learning focuses on training a shared model from decentralized data, while meta learning aims to train models that can quickly adapt to new tasks with minimal data. They serve different goals; federated learning emphasizes privacy and collaboration, and meta learning emphasizes adaptability and generalization.