In a previous blog post, I discussed the storage strategies to consider when choosing storage that meets your business requirements. This choice matters more as datasets continue to grow and the business mandates consistent performance.

Now, with the introduction of Magma, Couchbase’s new storage engine, you have even more options to help you meet your business goals. Getting storage right matters even more with Magma, because it is designed for large datasets that don’t fit in memory and therefore rely primarily on the disk subsystem.

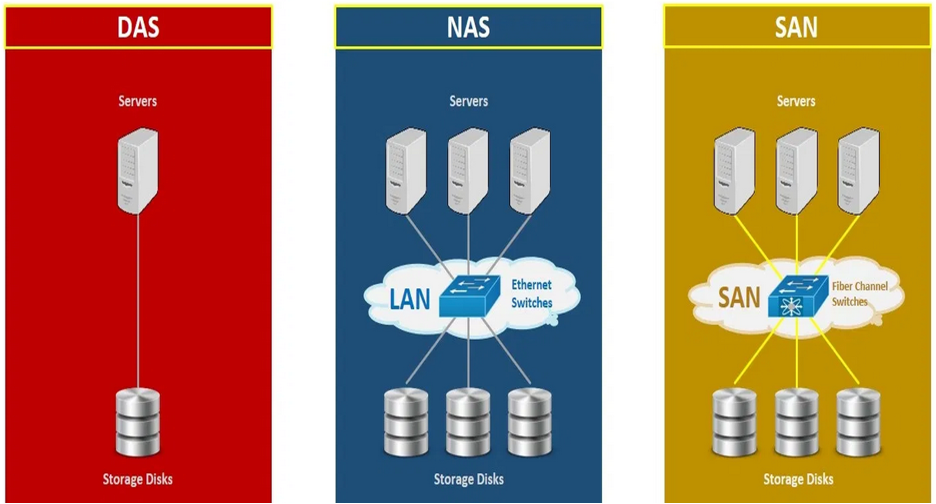

When assessing how to use Couchbase as a persistent system of record, the focus needs to move away from the virtual storage layer (also known as software-defined storage) and toward the underlying physical storage layer. Each storage option has different implications to consider when choosing the underlying storage subsystem.

First, let’s look at the options, then investigate further:

Storage area network (SAN) using SCSI with arrays & traditional HDDs (spinning disk)

- e.g. EMC, HP, IBM…

SAN using NVMe with arrays & flash/SSDs

- e.g. Pure Storage, Violin, EMC…

Direct-attached storage (DAS) using NVMe with arrays & flash/SSDs

Network storage using NFS/TCP

- e.g. NetApp

Considerations when selecting the storage subsystem

This is not an exhaustive list, and there are other items to consider, but it gives you a good idea of where to start:

Performance

- IOPS

- Latency

Resilience

- RAID – at the physical layer

- Mirroring – at the physical layer

Management

- Who will manage the storage hardware?

- Who will configure/implement the storage?

Status Quo

- Are the required storage and infrastructure already deployed?

- Are there requirements for a new storage solution?

- Is there experience to support a new solution if one is chosen?

Agility

- Future proofing: the ability to move to new technology when required to meet changing demands.

Database Profile

- Read intensive

- Write intensive

- These can influence the hardware requirements, as some storage is better suited to write-intensive workloads and some to read-intensive workloads.

Cost

When looking at cost, take a more holistic view than the underlying physical storage and storage networking layers alone. Also take into consideration management, implementation, existing infrastructure, and cooling. Once built into the total cost of ownership (TCO), these can cost more than the underlying hardware, so it’s imperative that they are considered.
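To make the holistic view concrete, here is a minimal sketch of a TCO calculation. The cost categories come from the paragraph above; the `StorageCosts` class, its field names, and every figure are hypothetical placeholders, not real pricing.

```python
from dataclasses import dataclass


@dataclass
class StorageCosts:
    """Cost inputs for one storage option (all figures hypothetical)."""
    hardware: float             # one-off purchase of arrays, disks, networking
    implementation: float       # one-off configuration and rollout effort
    management_per_year: float  # staff time to run the storage
    cooling_per_year: float     # power and cooling

    def tco(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        one_off = self.hardware + self.implementation
        recurring = (self.management_per_year + self.cooling_per_year) * years
        return one_off + recurring


# Hypothetical example: hardware is only part of the five-year total.
option = StorageCosts(hardware=100_000, implementation=20_000,
                      management_per_year=30_000, cooling_per_year=5_000)
five_year = option.tco(years=5)
hardware_share = option.hardware / five_year
print(f"5-year TCO: {five_year:,.0f}; hardware share: {hardware_share:.0%}")
```

Existing infrastructure could be modeled by lowering the `hardware` or `implementation` inputs for options that reuse what is already deployed.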

Comparison of physical storage options

The following table compares the various approaches:

| Considerations | SAN SCSI HDD | SAN NVMe | DAS SSD NVMe | NFS |
| --- | --- | --- | --- | --- |
| Performance | High | Very High | Ultra High | Medium |
| Resilience | RAID; multiple arrays; multiple paths | RAID; multiple arrays; multiple paths | RAID; single array | RAID; multiple arrays; multiple paths |
| Management | Complex; multiple teams (storage & network) | Complex; multiple teams (storage & network) | Complex; multiple teams (storage & network) | Complex; multiple teams (storage & network) |
| Agility | Array mobility; multiple-server access; ease of adding more storage | Array mobility; multiple-server access; ease of adding more storage | Infrastructure & network change; configuration changes to add more storage | Array mobility; multiple-server access; potential storage islands |
| Cost | $$$ | $$$$ | $$$$ | $$ |
| Infrastructure Requirements | SAN; array | SAN; array | Fiber; array | TCP/IP; array / filer |
| Shared Workload Impact | Possible noisy neighbor syndrome | Possible noisy neighbor syndrome | Guaranteed QoS; no noisy neighbor | Possible noisy neighbor syndrome |
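One way to work with this comparison is to encode it as data and filter it by requirements. The sketch below copies three rows from the table; the `OPTIONS` structure, the numeric performance ranks, and the `shortlist` helper are illustrative assumptions, not part of any Couchbase tooling.

```python
# Assumed encoding of the table's ratings: Medium < High < Very High < Ultra High.
PERFORMANCE_RANK = {"Medium": 1, "High": 2, "Very High": 3, "Ultra High": 4}

# Rows copied from the comparison table. "cost" is the number of "$" signs.
OPTIONS = {
    "SAN SCSI HDD": {"performance": "High", "cost": 3, "noisy_neighbor": True},
    "SAN NVMe": {"performance": "Very High", "cost": 4, "noisy_neighbor": True},
    "DAS SSD NVMe": {"performance": "Ultra High", "cost": 4, "noisy_neighbor": False},
    "NFS": {"performance": "Medium", "cost": 2, "noisy_neighbor": True},
}


def shortlist(min_performance, max_cost, allow_noisy_neighbor=True):
    """Return the option names that satisfy all the given requirements."""
    floor = PERFORMANCE_RANK[min_performance]
    return [
        name
        for name, row in OPTIONS.items()
        if PERFORMANCE_RANK[row["performance"]] >= floor
        and row["cost"] <= max_cost
        and (allow_noisy_neighbor or not row["noisy_neighbor"])
    ]


print(shortlist("High", max_cost=3))
print(shortlist("Very High", max_cost=4, allow_noisy_neighbor=False))
```

A filter like this only narrows the field. The qualitative rows (management, agility, infrastructure) still need a conversation with the teams involved.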

As previously discussed, choosing the correct storage solution can have a big impact on your applications! Proper planning and engagement with other stakeholders are key to success when selecting and implementing the storage subsystem.

Further points to consider when choosing a storage solution:

- Understand your workloads: whether they are primarily reads or writes, and the number of I/O operations your database is required to deliver.

- Choose a solution that meets those performance and latency requirements.

- Meet the business requirements now, with foresight for future forecasted workloads.

- Remain agile so you can adopt new technologies as they are released.

- Balance the business’s budgetary requirements with appropriate storage solutions.
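Understanding a workload can start with classifying it from its operation counts. This is a minimal sketch: the `classify_workload` function and its 70% threshold are assumptions chosen for illustration, and the counts would come from whatever monitoring you already have.

```python
def classify_workload(reads_per_sec: float, writes_per_sec: float,
                      threshold: float = 0.7) -> str:
    """Label a workload by the share of reads in its total operations.

    The threshold is an arbitrary illustrative cut-off, not a Couchbase value.
    """
    total = reads_per_sec + writes_per_sec
    if total == 0:
        return "idle"
    read_share = reads_per_sec / total
    if read_share >= threshold:
        return "read-intensive"
    if read_share <= 1 - threshold:
        return "write-intensive"
    return "mixed"


print(classify_workload(9_000, 1_000))  # read share 0.9
print(classify_workload(2_000, 8_000))  # read share 0.2
print(classify_workload(5_000, 5_000))  # read share 0.5
```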

Finally, as workloads grow, RAM is struggling to scale to hold the entire working dataset. As discussed earlier, Magma, the new Couchbase storage engine, is designed to accommodate this, with part of the dataset residing on disk. Now would be a good time to investigate how you can take advantage of Magma’s features.
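To see how much of a dataset would live on disk, it helps to estimate the share that fits in memory. The sketch below is plain arithmetic: the `resident_ratio` function and the example sizes are hypothetical, and it ignores metadata and other memory overheads.

```python
def resident_ratio(ram_quota_gb: float, dataset_gb: float) -> float:
    """Fraction of the dataset that can be held in memory (capped at 1.0)."""
    if dataset_gb <= 0:
        raise ValueError("dataset_gb must be positive")
    return min(1.0, ram_quota_gb / dataset_gb)


# Hypothetical cluster: 512 GB of memory against a 4 TB dataset.
ratio = resident_ratio(ram_quota_gb=512, dataset_gb=4096)
print(f"{ratio:.1%} in memory, {1 - ratio:.1%} served from disk")
```

The lower this ratio, the more reads reach the disk subsystem, and the more the storage choices above affect latency.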

In a nutshell, disk access will only perform as well as the underlying disk subsystem, and NVMe SSDs will come closest to the performance of a dataset held entirely in memory.