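We are excited to announce the release of Couchbase Lite 3.2 with support for vector search. This release follows vector search support in Capella and Couchbase Server 7.6. With vector search now available in Couchbase Lite, we offer cloud-to-edge support for vector search, powering AI applications in the cloud and at the edge.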

In this blog post, we discuss the key benefits of supporting vector search at the edge and take a brief look at use cases relevant to Couchbase Lite applications.

What is vector search?

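Vector search is a technique for retrieving semantically similar items based on their vector embeddings, which are representations of the items in a multidimensional space. A distance metric determines how similar two items are. Vector search is a core component of generative AI and predictive AI applications.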
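
To make "distance metric" concrete, here is a minimal plain-Swift sketch of two common metrics, squared Euclidean distance and cosine distance, computed over small hand-written vectors. It is for illustration only: in Couchbase Lite the metric is chosen in the vector index configuration and computed by the library, not by app code like this.

```swift
// Illustrative distance metrics over plain [Float] vectors (not Couchbase Lite code).
// For both metrics, a smaller value means the two items are more similar.

/// Squared Euclidean distance: the sum of squared per-dimension differences.
func euclideanSquared(_ a: [Float], _ b: [Float]) -> Float {
    precondition(a.count == b.count, "vectors must have the same dimension")
    var sum: Float = 0
    for (x, y) in zip(a, b) {
        sum += (x - y) * (x - y)
    }
    return sum
}

/// Cosine distance: 1 minus the cosine of the angle between the vectors.
/// 0 means same direction, 1 means orthogonal, 2 means opposite.
/// (Undefined for zero-length vectors, which yield NaN here.)
func cosineDistance(_ a: [Float], _ b: [Float]) -> Float {
    precondition(a.count == b.count, "vectors must have the same dimension")
    var dot: Float = 0, normA: Float = 0, normB: Float = 0
    for (x, y) in zip(a, b) {
        dot += x * y
        normA += x * x
        normB += y * y
    }
    return 1 - dot / (normA.squareRoot() * normB.squareRoot())
}

let query: [Float] = [1, 0]
print(euclideanSquared(query, [0, 1]))  // 2.0
print(cosineDistance(query, [0, 1]))    // 1.0 (orthogonal)
print(cosineDistance(query, [2, 0]))    // 0.0 (same direction, different length)
```

Cosine distance ignores vector length and compares only direction, which is why `[1, 0]` and `[2, 0]` come out identical under it but not under Euclidean distance.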

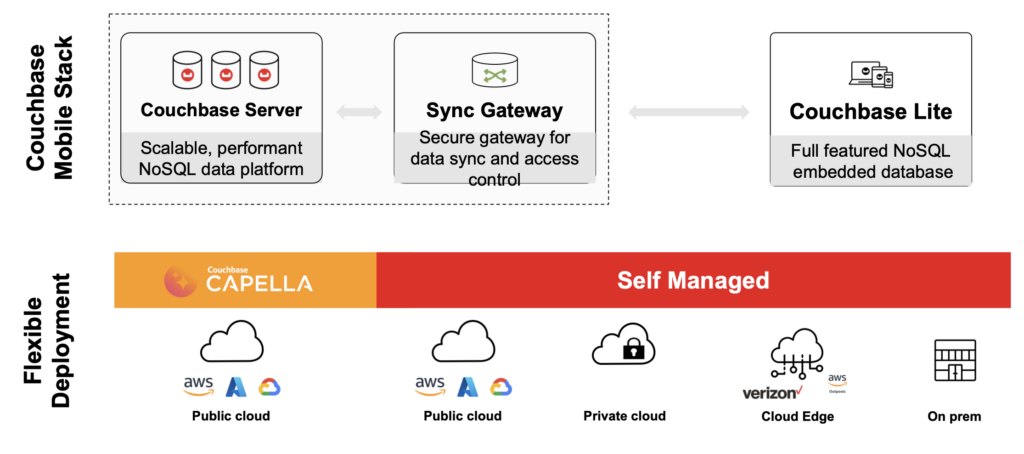

The Couchbase Mobile stack

For those new to Couchbase, here is a quick primer on Couchbase Mobile.

Couchbase Mobile is an offline-first, cloud-to-edge database platform. It consists of:

Cloud database: available as a fully managed and hosted Database-as-a-Service with Couchbase Capella, or deploy and host Couchbase Server yourself.

Embedded database: Couchbase Lite is a full-featured NoSQL embedded database for mobile, desktop, and IoT applications.

Data sync: a secure gateway for syncing data over the web, plus peer-to-peer sync between devices. Available as fully hosted and managed sync with Capella App Services, or install and manage Couchbase Sync Gateway yourself.

Learn more in our documentation.

Vector search use cases and benefits

The benefits of vector search are fairly well understood, but why would you want vector search at the edge?

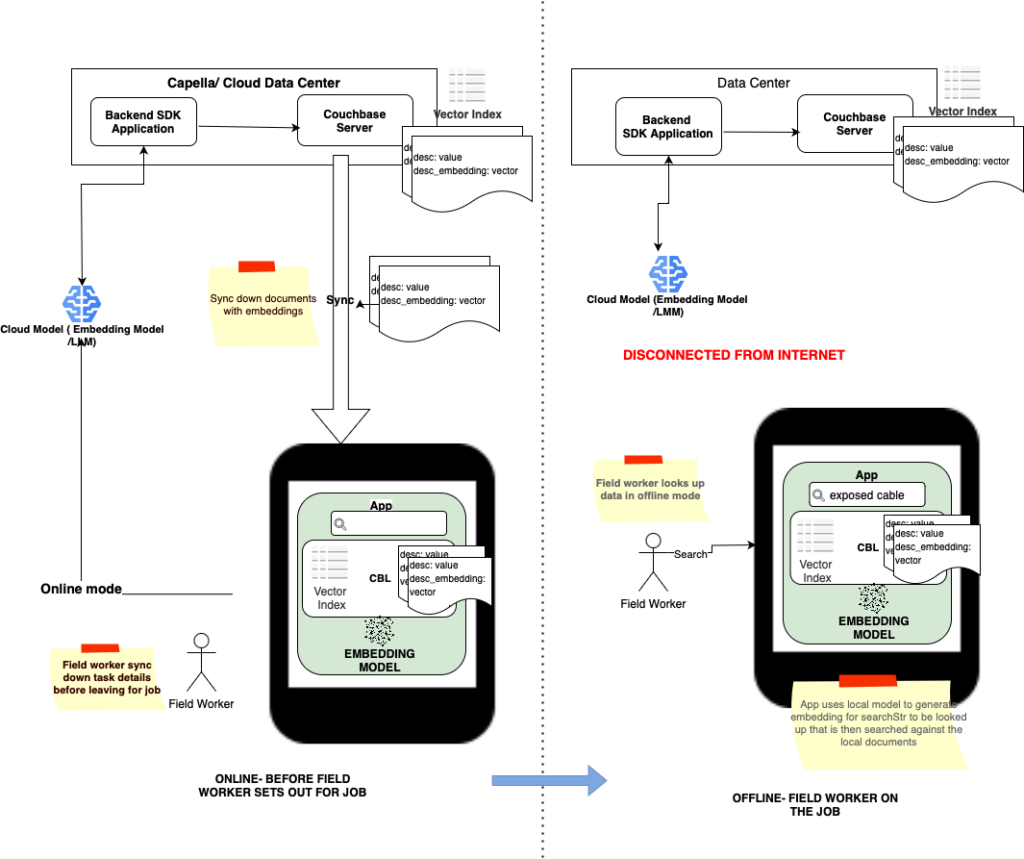

Semantic search in offline-first mode

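Applications for which simple text-based search is not enough can now run semantic search over local data, retrieving contextually relevant results even while the device is offline. Search results are therefore always available.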

Example

Consider a typical field application: utility workers at repair sites and in disaster zones often work in areas with spotty or no internet connectivity.

- At a utility company, the words "line", "cable", and "wire" are synonyms. When a utility worker in the field searches for "line", documents containing "cable" and "wire" should also be returned.

- With full-text search (FTS), the application would have to maintain a synonym list, which is hard to create, manage, and keep up to date.

- Relevance matters too. A query for "safety procedures for downed power lines" should favor manuals about downed power lines, electrical cables, high-voltage lines, and so on.
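
The gap between keyword search and semantic search can be sketched in a few lines of plain Swift. The manual titles and the tiny 3-dimensional embeddings below are made-up values (real embeddings come from an embedding model and have far more dimensions). A keyword match on "line" finds one manual, while ranking by vector distance also surfaces the "cable" and "wire" manuals without any synonym list.

```swift
import Foundation

// Made-up manuals with tiny 3-D embeddings (real embeddings come from a model
// and have hundreds of dimensions).
struct Manual {
    let title: String
    let embedding: [Float]
}

let manuals = [
    Manual(title: "Handling a downed line", embedding: [0.90, 0.10, 0.00]),
    Manual(title: "Damaged cable repair", embedding: [0.85, 0.20, 0.05]),
    Manual(title: "Live wire safety checklist", embedding: [0.80, 0.15, 0.10]),
    Manual(title: "Vehicle maintenance schedule", embedding: [0.05, 0.10, 0.95]),
]

/// Keyword search: returns titles that literally contain the term.
func keywordSearch(_ term: String, in docs: [Manual]) -> [String] {
    docs.filter { $0.title.lowercased().contains(term.lowercased()) }.map(\.title)
}

/// Squared Euclidean distance between two equal-length vectors.
func squaredDistance(_ a: [Float], _ b: [Float]) -> Float {
    var sum: Float = 0
    for (x, y) in zip(a, b) { sum += (x - y) * (x - y) }
    return sum
}

/// Semantic search: ranks every manual by distance to the query embedding and keeps the k closest.
func semanticSearch(_ queryEmbedding: [Float], in docs: [Manual], k: Int) -> [String] {
    docs.sorted { squaredDistance($0.embedding, queryEmbedding) < squaredDistance($1.embedding, queryEmbedding) }
        .prefix(k)
        .map(\.title)
}

// Assumed embedding of the query "line"; it lands near the power-line manuals.
let lineEmbedding: [Float] = [0.88, 0.12, 0.02]
print(keywordSearch("line", in: manuals))
// ["Handling a downed line"]
print(semanticSearch(lineEmbedding, in: manuals, k: 3))
// ["Handling a downed line", "Damaged cable repair", "Live wire safety checklist"]
```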

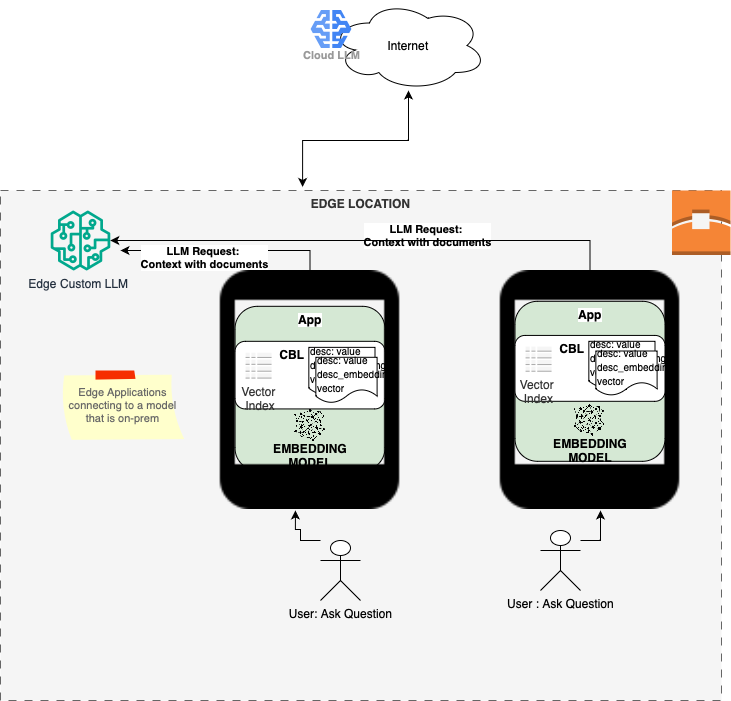

Mitigating data privacy concerns

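One of the key use cases of a vector search database is fetching contextually relevant data. The search results are then included as context in the query sent to a large language model (LLM) to tailor its response; this is the cornerstone of Retrieval-Augmented Generation (RAG). Running such searches over private or sensitive data can raise privacy concerns. When the search is performed on the local device, it can be restricted to users who are authenticated and authorized to access the private data on that device. Any personally identifiable information (PII) in the vector search results can then be redacted before the results are used in the RAG query to the LLM.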
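
A minimal sketch of the redaction step, assuming search results arrive as plain strings: two illustrative regular expressions mask email addresses and North American style phone numbers before the results are placed into a RAG prompt. The patterns, the placeholder tokens, and the prompt layout are all assumptions for illustration; a production app would use redaction rules suited to its own data.

```swift
import Foundation

// Hypothetical redaction rules (illustrative only): email addresses and
// 10-digit phone numbers such as 555-123-4567.
let piiPatterns: [(pattern: String, replacement: String)] = [
    (#"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"#, "[EMAIL]"),
    (#"\b\d{3}[-. ]?\d{3}[-. ]?\d{4}\b"#, "[PHONE]"),
]

/// Replaces every match of each PII pattern in `text` with its placeholder.
func redactPII(_ text: String) -> String {
    var result = text
    for (pattern, replacement) in piiPatterns {
        // The patterns are constants, so a compile failure here is a programmer error.
        let regex = try! NSRegularExpression(pattern: pattern)
        let range = NSRange(result.startIndex..., in: result)
        result = regex.stringByReplacingMatches(in: result, options: [], range: range, withTemplate: replacement)
    }
    return result
}

/// Builds a RAG prompt in which redacted search results serve as context for the question.
func buildRAGPrompt(question: String, searchResults: [String]) -> String {
    let context = searchResults.map { "- " + redactPII($0) }.joined(separator: "\n")
    return "Context:\n\(context)\n\nQuestion: \(question)"
}

let prompt = buildRAGPrompt(
    question: "What follow-up is recommended?",
    searchResults: ["Patient reachable at 555-123-4567 or jane@example.com; prefers morning visits."]
)
print(prompt)
```

Redacting before the prompt is assembled means the raw identifiers never leave the device, whichever LLM the prompt is sent to.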

Furthermore, deploying a custom LLM at edge locations such as hospitals or retail stores further reduces concerns about sending contextual search results over the internet to a remote cloud service.

Example

Consider the following healthcare application:

- A hospital doctor is looking for treatment options for a patient recovering from surgery.

- Relevant patient context is retrieved from the patient's medical history and preferences. Access to this data is authenticated and authorized.

- The patient context is sent along with the query to an edge LLM hosted at the hospital, which generates a personalized recovery plan.
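
The retrieval step in this workflow can be sketched as a hypothetical in-app check: only the selected patient's records that the signed-in clinician is authorized to read become context for the edge LLM request. The `PatientRecord` and `RecoveryPlanRequest` types and the per-record clinician list are illustrative assumptions, not a Couchbase API, and actually sending the request to the hospital's model is left out.

```swift
// Hypothetical record: one piece of patient context plus the clinicians allowed to read it.
struct PatientRecord {
    let patientID: String
    let text: String
    let authorizedClinicians: Set<String>
}

// Hypothetical payload for the hospital-hosted edge LLM.
struct RecoveryPlanRequest {
    let question: String
    let context: [String]
}

/// Keeps only the selected patient's records that this clinician may read,
/// then packages them with the question for the edge LLM.
func makeRecoveryPlanRequest(question: String,
                             patientID: String,
                             clinician: String,
                             records: [PatientRecord]) -> RecoveryPlanRequest {
    let context = records
        .filter { $0.patientID == patientID && $0.authorizedClinicians.contains(clinician) }
        .map(\.text)
    return RecoveryPlanRequest(question: question, context: context)
}

let records = [
    PatientRecord(patientID: "p1", text: "Knee replacement on day 0; no complications.",
                  authorizedClinicians: ["dr.lee"]),
    PatientRecord(patientID: "p1", text: "Allergic to penicillin.",
                  authorizedClinicians: ["dr.lee", "dr.kim"]),
    PatientRecord(patientID: "p1", text: "Psychiatric notes.",
                  authorizedClinicians: ["dr.park"]),
    PatientRecord(patientID: "p2", text: "Another patient's history.",
                  authorizedClinicians: ["dr.lee"]),
]

let request = makeRecoveryPlanRequest(question: "Suggest a recovery plan.",
                                      patientID: "p1", clinician: "dr.lee", records: records)
print(request.context)
// ["Knee replacement on day 0; no complications.", "Allergic to penicillin."]
```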

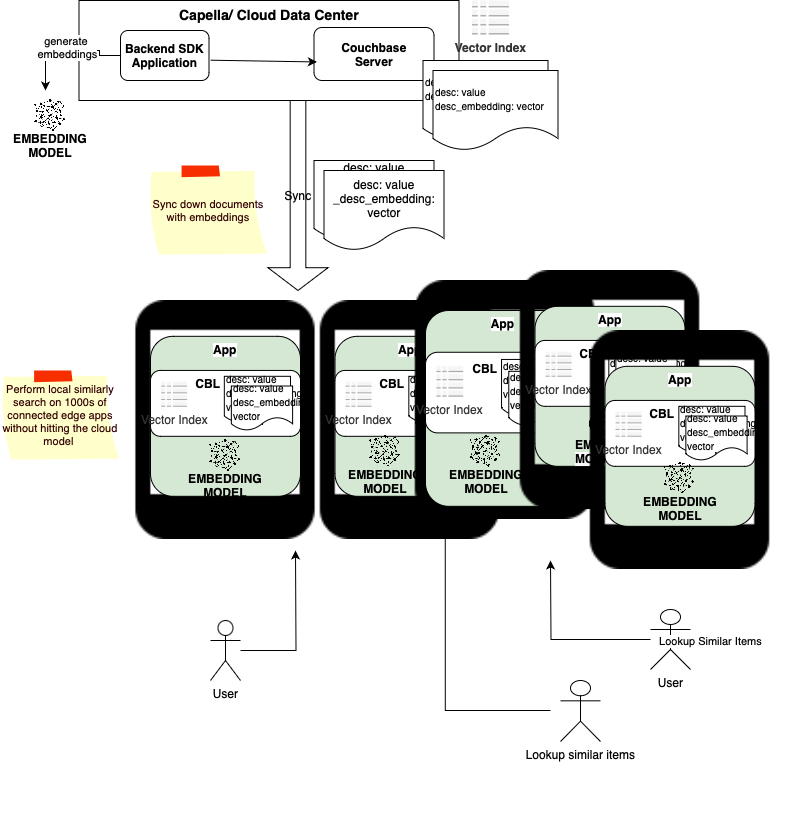

Reduced cost per query

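When 100 to 1,000 connected clients query a cloud-based LLM, the load on the cloud model and the operational cost of running it can become significant. Running queries locally on the device saves data transfer and cloud egress costs and spreads the operational cost across devices.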

Example

Consider the following digital customer-service assistant application:

- A retail store syncs its product catalog, store-specific pricing, and promotion data to the customer-service kiosks (edge devices) in the store.

- At a kiosk, a shopper uses the camera to search for a hat that matches the jacket she is wearing. She is also interested in hats that are on sale.

- Instead of sending the search query to a remote server, the kiosk runs a similarity search locally against the catalog to find similar items that are on sale.

- The captured image can also be discarded immediately at the kiosk, easing privacy concerns.
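
What the kiosk computes can be approximated in plain Swift: keep only catalog items that are on sale, then rank them by cosine similarity between each item's image embedding and the embedding of the shopper's photo. The `CatalogItem` type, the item names, and the 3-dimensional embeddings are made up for illustration; this is an in-memory sketch of the idea, not the Couchbase Lite query API, and producing the photo embedding is assumed to happen elsewhere.

```swift
// Hypothetical catalog entry with an image embedding synced from the cloud.
struct CatalogItem {
    let name: String
    let onSale: Bool
    let imageEmbedding: [Float]
}

/// Cosine similarity: 1 means same direction; larger means more similar.
func cosineSimilarity(_ a: [Float], _ b: [Float]) -> Float {
    var dot: Float = 0, normA: Float = 0, normB: Float = 0
    for (x, y) in zip(a, b) {
        dot += x * y
        normA += x * x
        normB += y * y
    }
    return dot / (normA.squareRoot() * normB.squareRoot())
}

/// Filters to on-sale items, then ranks them by similarity to the shopper's photo.
func similarItemsOnSale(to photoEmbedding: [Float], in catalog: [CatalogItem], limit: Int) -> [String] {
    catalog
        .filter(\.onSale)
        .sorted { cosineSimilarity($0.imageEmbedding, photoEmbedding) > cosineSimilarity($1.imageEmbedding, photoEmbedding) }
        .prefix(limit)
        .map(\.name)
}

let catalog = [
    CatalogItem(name: "Navy wool beanie", onSale: true, imageEmbedding: [0.9, 0.1, 0.1]),
    CatalogItem(name: "Navy baseball cap", onSale: false, imageEmbedding: [0.95, 0.05, 0.1]),
    CatalogItem(name: "Grey knit hat", onSale: true, imageEmbedding: [0.6, 0.4, 0.2]),
    CatalogItem(name: "Red sun hat", onSale: true, imageEmbedding: [0.1, 0.9, 0.3]),
]
// Assumed embedding of the photo of the shopper's jacket.
let photoEmbedding: [Float] = [1.0, 0.0, 0.1]
print(similarItemsOnSale(to: photoEmbedding, in: catalog, limit: 2))
// ["Navy wool beanie", "Grey knit hat"]
```

The baseball cap is the closest match overall but is excluded because it is not on sale, which is the "similar and on sale" behavior the example describes.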

Low-latency search

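Searches that run locally against a local dataset, using a locally embedded model, are not subject to network variability and are consistently fast. Even when the model is not embedded on the device but deployed at an edge location, the round-trip time (RTT) of each query can be significantly lower than when searching over the internet.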

Example

Revisiting the retail store application:

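- The product catalog, store-specific pricing, and promotion documents synced to the customer-service kiosk include vector embeddings. The embeddings are generated in the cloud by an LLM embedding model.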

- The synced documents are indexed locally on the kiosk.

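- A customer looking for a specific item at the store kiosk may run a regular search for "Adidas women's tennis shoes size 9". A "find related items" feature can then run a similarity search that compares the product returned by the regular search against the rest of the product documents. The search runs locally and is fast.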

- Here the vector embeddings are generated in the cloud, but the similarity search is performed locally. In fact, in this particular application the kiosk app does not even need an embedding model.
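
The kiosk needs no embedding model because the selected product's own synced embedding can serve as the query vector. A plain-Swift sketch of that idea follows; the product IDs and the toy 3-dimensional embeddings are hypothetical stand-ins for the cloud-generated ones, and this is not the Couchbase Lite API.

```swift
// Hypothetical product document: the embedding was generated in the cloud and synced.
struct Product {
    let id: String
    let embedding: [Float]
}

/// Squared Euclidean distance between two equal-length vectors.
func squaredDistance(_ a: [Float], _ b: [Float]) -> Float {
    var sum: Float = 0
    for (x, y) in zip(a, b) { sum += (x - y) * (x - y) }
    return sum
}

/// "Find related items": use the selected product's stored embedding as the query vector,
/// so the kiosk never has to run an embedding model.
func relatedProducts(to selectedID: String, in products: [Product], limit: Int) -> [String] {
    guard let selected = products.first(where: { $0.id == selectedID }) else { return [] }
    return products
        .filter { $0.id != selectedID }  // don't recommend the product itself
        .sorted { squaredDistance($0.embedding, selected.embedding) < squaredDistance($1.embedding, selected.embedding) }
        .prefix(limit)
        .map(\.id)
}

let products = [
    Product(id: "womens-tennis-shoe-9", embedding: [0.80, 0.60, 0.10]),
    Product(id: "womens-tennis-shoe-8", embedding: [0.79, 0.61, 0.10]),
    Product(id: "womens-court-shoe-9", embedding: [0.75, 0.65, 0.15]),
    Product(id: "mens-hiking-boot-11", embedding: [0.20, 0.30, 0.90]),
]
print(relatedProducts(to: "womens-tennis-shoe-9", in: products, limit: 2))
// ["womens-tennis-shoe-8", "womens-court-shoe-9"]
```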

Unified cloud-to-edge support for vector similarity search

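Some queries are best suited to the cloud, while others, for the reasons described above, are better suited to the edge. The flexibility to run queries in the cloud, at the edge, or both lets developers build applications that get the best of both environments.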

Example

- Consider a mobile banking app in which each user's transactions from the last six months are synced and stored locally on the device.

- A user is looking for transactions related to a purchase made a few months ago. The search runs locally, so it is fast and also available offline.

- Transactions for all users are stored on the cloud server, where a fraud detection application uses semantic search to detect patterns of fraudulent activity.
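
One way to express this split is a small routing function. In the hypothetical sketch below, searches over the user's own transactions that start within the six-month window synced to the device run locally, while older date ranges and cross-user analysis such as fraud detection go to the cloud. The `SearchTarget` and `SearchScope` names and the routing rule itself are assumptions for illustration.

```swift
import Foundation

// Hypothetical routing targets and search scopes for the banking example.
enum SearchTarget { case local, cloud }
enum SearchScope { case ownTransactions, allUsers }

/// Decides where a transaction search should run.
/// Assumes the device holds the user's own transactions for the last six months.
func route(scope: SearchScope, searchFrom start: Date, now: Date = Date()) -> SearchTarget {
    // Cross-user analysis (e.g. fraud detection) needs data that only the cloud holds.
    guard scope == .ownTransactions else { return .cloud }
    // Start of the locally synced window: six calendar months before `now`.
    guard let windowStart = Calendar.current.date(byAdding: .month, value: -6, to: now) else {
        return .cloud
    }
    return start >= windowStart ? .local : .cloud
}

let now = Date()
let threeMonthsAgo = Calendar.current.date(byAdding: .month, value: -3, to: now)!
let oneYearAgo = Calendar.current.date(byAdding: .year, value: -1, to: now)!
print(route(scope: .ownTransactions, searchFrom: threeMonthsAgo, now: now))  // local
print(route(scope: .ownTransactions, searchFrom: oneYearAgo, now: now))      // cloud
print(route(scope: .allUsers, searchFrom: threeMonthsAgo, now: now))         // cloud
```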

Show me the code!

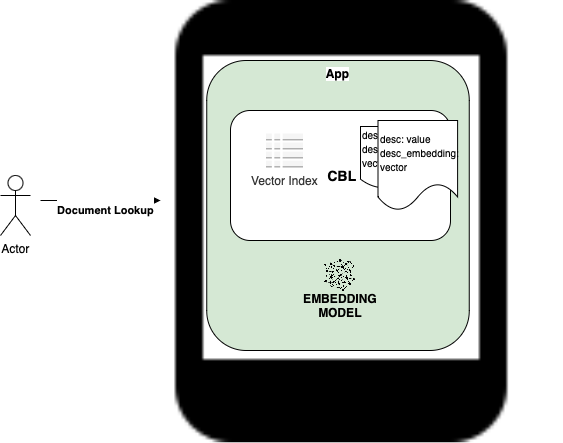

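Now that you have seen the benefits of vector search in edge applications, let's look at what it takes to implement it. It's straightforward: a few lines of code bring semantic search to your edge application. The examples below are in Swift; code snippets in other languages are available through the Resources section below.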

Create a vector index

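In this example, we create a vector index with default settings. Applications can further customize the vector index configuration with a different distance metric, index encoding type, and centroid training parameters: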

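```swift
import CouchbaseLiteSwift

// Create a vector index configuration. The indexed expression can be any SQL++
// expression; here it is the "vector" property, assumed to hold the embedding
// generated from each document's "description".
let config = VectorIndexConfiguration(expression: "vector", dimensions: 158, centroids: 20)

// Attempt to create the vector index with this configuration on an open collection.
try collection.createIndex(withName: "myIndex", config: config)
```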
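
The centroid settings in the vector index configuration point to a clustering-based approximate index. A common design for this is an inverted file (IVF): vectors are grouped around centroids, and a query compares itself only against vectors in the clusters nearest to it. The toy sketch below illustrates that general technique in plain Swift; it is not Couchbase Lite's implementation, the centroids are fixed by hand rather than trained, and `probes` is a hypothetical name for how many clusters a query scans.

```swift
// Toy inverted-file (IVF) index: each vector is stored in the bucket of its nearest
// centroid, and a query scans only the buckets of its `probes` nearest centroids.
struct Entry {
    let id: String
    let vector: [Float]
}

struct ToyIVFIndex {
    let centroids: [[Float]]
    private(set) var buckets: [[Entry]]

    init(centroids: [[Float]]) {
        precondition(!centroids.isEmpty, "need at least one centroid")
        self.centroids = centroids
        self.buckets = Array(repeating: [], count: centroids.count)
    }

    /// Squared Euclidean distance.
    static func distance(_ a: [Float], _ b: [Float]) -> Float {
        var sum: Float = 0
        for (x, y) in zip(a, b) { sum += (x - y) * (x - y) }
        return sum
    }

    /// Centroid indices ordered from nearest to farthest from `v`.
    func centroidsByDistance(to v: [Float]) -> [Int] {
        centroids.indices.sorted { Self.distance(centroids[$0], v) < Self.distance(centroids[$1], v) }
    }

    mutating func add(id: String, vector: [Float]) {
        let nearest = centroidsByDistance(to: vector)[0]
        buckets[nearest].append(Entry(id: id, vector: vector))
    }

    /// Approximate k-nearest-neighbor search over the `probes` closest buckets only.
    func search(_ query: [Float], k: Int, probes: Int) -> [String] {
        centroidsByDistance(to: query)
            .prefix(probes)
            .flatMap { buckets[$0] }
            .sorted { Self.distance($0.vector, query) < Self.distance($1.vector, query) }
            .prefix(k)
            .map(\.id)
    }
}

var index = ToyIVFIndex(centroids: [[0, 0], [10, 0]])
index.add(id: "a", vector: [1, 0])    // bucket of centroid [0, 0]
index.add(id: "b", vector: [3, 0])    // bucket of centroid [0, 0]
index.add(id: "c", vector: [5.5, 0])  // bucket of centroid [10, 0]
index.add(id: "d", vector: [9, 0])    // bucket of centroid [10, 0]

print(index.search([4.8, 0], k: 1, probes: 1))  // ["b"]  (misses the true nearest, "c")
print(index.search([4.8, 0], k: 1, probes: 2))  // ["c"]
```

With one probe, the query at 4.8 scans only the cluster around 0 and returns "b", even though "c" is closer; scanning both clusters finds "c". This speed versus recall trade-off is what "approximate" refers to in approximate nearest-neighbor search.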

Perform a similarity search

In this example, we run a SQL++ query that returns the top 10 documents whose descriptions are most similar to the target embedding of `searchPhrase`:

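```swift
import CouchbaseLiteSwift

// App-defined error thrown when no embedding can be produced for the search phrase.
enum SearchError: Error {
    case embeddingNotFound
}

func searchSimilar(to searchPhrase: String, in db: Database) throws -> ResultSet {
    // Get the vector embedding for searchPhrase from the embedding model.
    // `modelRef.getEmbedding(for:)` stands for the app's own model wrapper.
    guard let searchEmbedding = modelRef.getEmbedding(for: searchPhrase) else {
        throw SearchError.embeddingNotFound
    }

    // SQL++ query returning the 10 documents whose content is most similar to the search phrase
    let sql = "SELECT meta().id, description FROM _ ORDER BY APPROX_VECTOR_DISTANCE(vector, $searchParam) LIMIT 10"

    // Create the query
    let query = try db.createQuery(sql)

    // Bind the search embedding to the $searchParam query parameter
    let parameters = Parameters()
    parameters.setValue(searchEmbedding, forName: "searchParam")
    query.parameters = parameters

    // Run the vector search query
    return try query.execute()
}
```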

Resources

Here are direct links to some helpful resources:

- Step-by-step installation guide

- Download Couchbase Lite 3.2

- Download the Couchbase Lite Vector Search extension library

- Vector search requires a separate extension library, which must be linked into your application in addition to the base Couchbase Lite SDK.

- Couchbase Lite vector search explainer video

- Sample app for Couchbase Lite vector search

Stay tuned for our next blog post on reference architectures for vector search.