Category: Generative AI (GenAI)

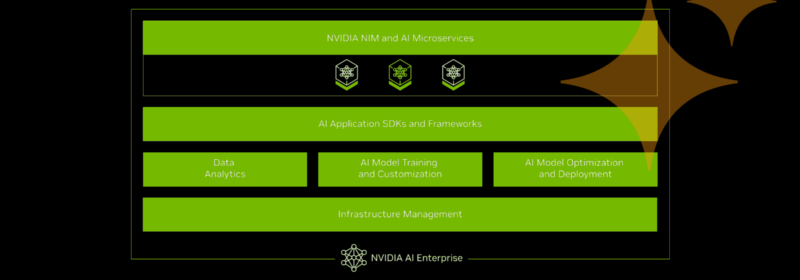

Couchbase and NVIDIA Team Up to Help Accelerate Agentic Application Development

Couchbase and NVIDIA team up to make agentic applications easier and faster to build, supply with data, and run.

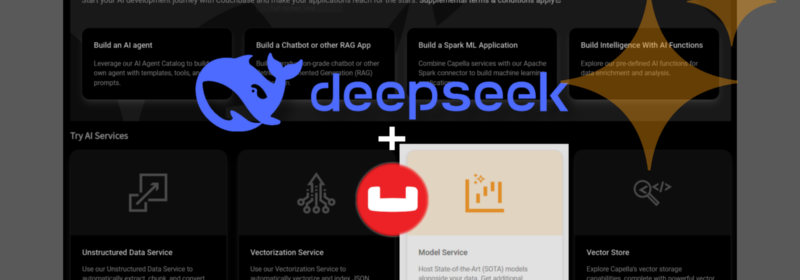

DeepSeek Models Now Available in Capella AI Services

DeepSeek-R1 is now available in Capella AI Services, bringing advanced reasoning to enterprise AI at a lower total cost of ownership (TCO). 🚀 Sign up for early access!

A Tool to Ease Your Transition From Oracle PL/SQL to Couchbase JavaScript UDF

Convert Oracle PL/SQL to Couchbase JavaScript UDFs with an AI-powered tool that combines ANTLR and LLMs to automate the migration with high accuracy.

Integrate Groq’s Fast LLM Inferencing With Couchbase Vector Search

Integrate Groq’s fast LLM inference with Couchbase Vector Search for efficient RAG apps. Compare its speed with OpenAI, Gemini, and Ollama.

2025 Enterprise AI Predictions: Four Prominent Shifts Reshaping Infrastructure and Strategy

Discover four key AI predictions for 2025 that will shape enterprise strategy, including the rise of hybrid AI models and evolving data architectures.

Introducing Couchbase as a Vector Store in MindsDB

Combine Couchbase and MindsDB to unlock AI-driven applications with high-performance vector storage and seamless integration.

A Guide to Data Chunking

Data chunking is the process of breaking a dataset into smaller chunks. Learn how it improves processing speed and memory management.

Couchbase + Dify: High-Power Vector Capabilities for AI Workflows

Couchbase meets Dify.ai, enabling high-performance vector storage for streamlined AI application development.

Cracking the Code on Quality Control with Vector Search

Use Couchbase vector search to evaluate blog comment quality with AI. Learn how to set it up and explore its practical applications.

Top Posts

- Couchbase Introduces Capella AI Services to Expedite Agent Develo...

- New Enterprise Analytics Brings Next Generation JSON Analytics to...

- Data Analysis Methods: Qualitative vs. Quantitative Techniques

- Integrate Groq’s Fast LLM Inferencing With Couchbase Vector...

- MongoDB Ends Mobile Support Today: Migrate to Couchbase

- Data Modeling Explained: Conceptual, Physical, Logical

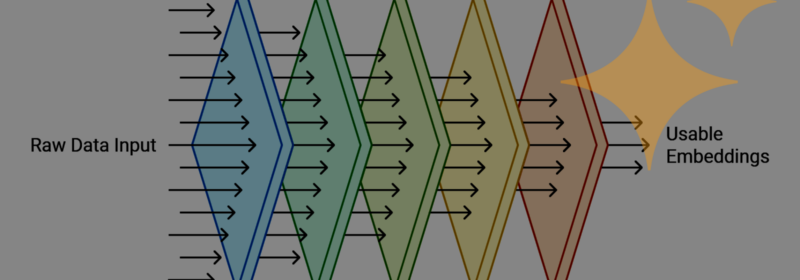

- What are Embedding Models? An Overview

- Optimizing AI Workflows with a Human in the Middle

- Reinventing the Future of Media and Entertainment with AI and Cou...