Over the previous 3 years, the AI landscape has gone through a massive transformation. We’ve gone from basic language models to full-fledged AI Agents that can take action on our behalf in just a few short years. AI is the new buzz word everywhere. We all joke about it, but in reality, it has had an incredible boom and is extremely powerful. And, as you can see, AI is not new. It has been around for a while, but since the introduction of LLMs and Generative AI in 2023, there has been a spike in its use.

While the potential for productivity using AI is huge, there are security concerns that should be addressed when working with autonomous systems like AI Agents. Misconfigured access policies to data could lead to AI retrieving sensitive internal documents or exposing confidential data. Therefore, in this blog we explore how traditional access control approaches fall short when AI systems need contextual, document-level permissions at scale and speed. And we cover how Fine-Grained Authorization (FGA) provides robust security for Retrieval-Augmented Generation (RAG) and agentic AI systems. Thus, learn how to implement permission models that protect sensitive information while enabling AI to access only authorized data.

The changing AI landscape – and the security gaps

AI agents perform tasks for the human by calling APIs, learning from errors, and sometimes working with no human supervision. But, of course, there are risks associated with this fast growth and one of those big risks is security. We’ve seen several tweets as well as heard many folks within the industry discuss the importance of security and authentication when leveraging AI and AI agents. Currently, there’s no universal blueprint for building AI securely into applications.

OWASP started defining the Top 10 for LLM Applications in 2023 as a community-driven effort to highlight and address security issues specific to AI applications, and there are the top 10 points for 2025. One of them is Sensitive information disclosure. AI agents can be autonomous, so without the proper handling they might reveal sensitive information or confidential enterprise data, and this can happen as a result of a deliberate attack or accidentally.

AI must consider user permissions when accessing data. How do we enforce that an Agent cannot modify existent records or access documents restricted to other employees at runtime?

The answer is with authorization. We need to make sure our AI systems only show the right information to the right user.

Why traditional authorization falls short

Role Based Access Control: RBAC is the most common way people implement authorization in their applications and websites. When we use RBAC, we are checking for roles. Whether the user has a certain role assigned to them or not before making access decisions. If they have the role they get access, if they don’t they get a 403 Forbidden error. The main downside to RBAC is mainly scalability. It doesn’t scale well when there are multiple roles.

Attribute based access control (ABAC): ABAC is a step up from RBAC for fine grained access, allowing us to grant some users access to individual documents, and others access to others.

However, it still falls short when the document is in nested folders, you would need to retrieve all the folders recursively up the chain. When the user is in nested groups, you need to do the same thing. And you need to do all this in order to authorize the request.

So let’s see what is an even better way of doing authorization. This is where ReBAC (Relationship-Based Access Control) comes in. ReBAC allows expressing authorization rules based on relations that users and objects in a system have amongst each other. ReBAC services use their knowledge of the relationships between the different entities in the system in order to reach an authorization decision. The good thing about RebAC is that it can do both RBAC and ABAC depending on how you define those relationships.

Fine-grained authorization – the missing layer

Fine Grained Authorization (FGA) dynamically enforces access rules at the resource level. Instead of granting blanket permissions, FGA determines at query time exactly which documents a user is allowed to see.

FGA is all about controlling who can do what with what kind of resources, down to an individual level. In a typical scenario showing a role-based system, one might say, “Admins can see everything, but Regular Users can see only some subset.” But in a real-world app, especially one that deals with many documents, this might not be flexible enough. This is where OpenFGA comes in.

OpenFGA is a CNCF-hosted, open-source project maintained by Okta. It was inspired by Google’s Zanzibar system which describes how authorization for all of Google’s services was built. OpenFGA addresses the above by letting you define authorization relationships. The relationships defined in the authorization model can be either direct or indirect. Simply put, direct relationships are directly assigned between a user and object and stored in a database. Indirect relationships are the relationships we can infer based on the data and the authorization model.

Setting up OpenFGA ReBAC

There are 4 main concepts about OpenFGA and how it works:

-

- Store: A store is an OpenFGA entity used to organize authorization models and tuples. Literally where you store your data

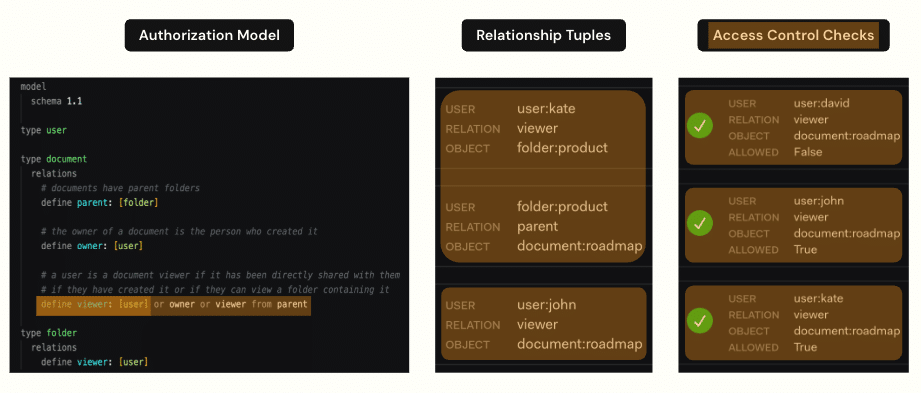

- Authorization Model: An authorization model is where you define who can do what and under which conditions. These are going to be your authorization policies expressed in a model. In the model, we have to define the entities which are going to be relevant when making authorization decisions.

- Relationship Tuples: A relationship tuple is a base tuple or triplet consisting of a user, relation, and object. You can think of tuples as the “facts” of your authorization system. We have a form of user-to-object relationship. The data that is present on the relationship tuples essentially defines the state of your system, and you modify the tuples as the state of your system evolves

- Queries: Last of all, to use this to check authorization we have to be able to query the system. And what the OpenFGA system does to answer this question is to traverse the graph. So the FGA system starts at the resource (the expense report) and – from the top down – it asks

In summary, the data in the relationship tuples define the graph. The authorization model defines rules for traversing the graph. And when you query the system, the query traverses the graph according to the rules and returns either “Yes, you’re authorized” or “No you’re not” depending upon the result.

OpenFGA relationship-based access control

|

1 2 3 4 5 6 7 8 9 10 11 12 |

# Model definition type user type team relations define member: [user] type document relations define viewer: [team#member] # Relationship tuples team:finance#member@user:kate document:forecast.pdf#viewer@team:finance |

Meaning: Kate can view forecast.pdf because she’s a member of the Finance team, which has viewer rights on that document.

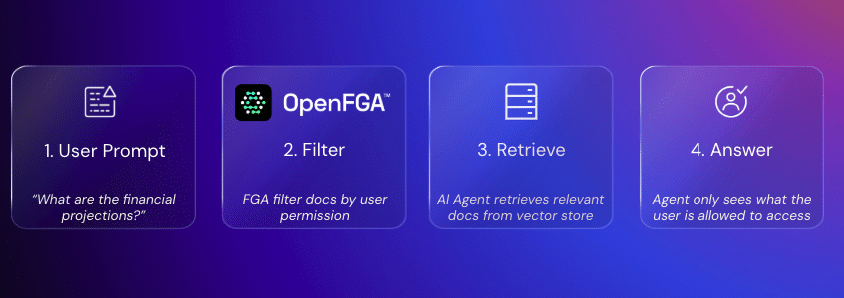

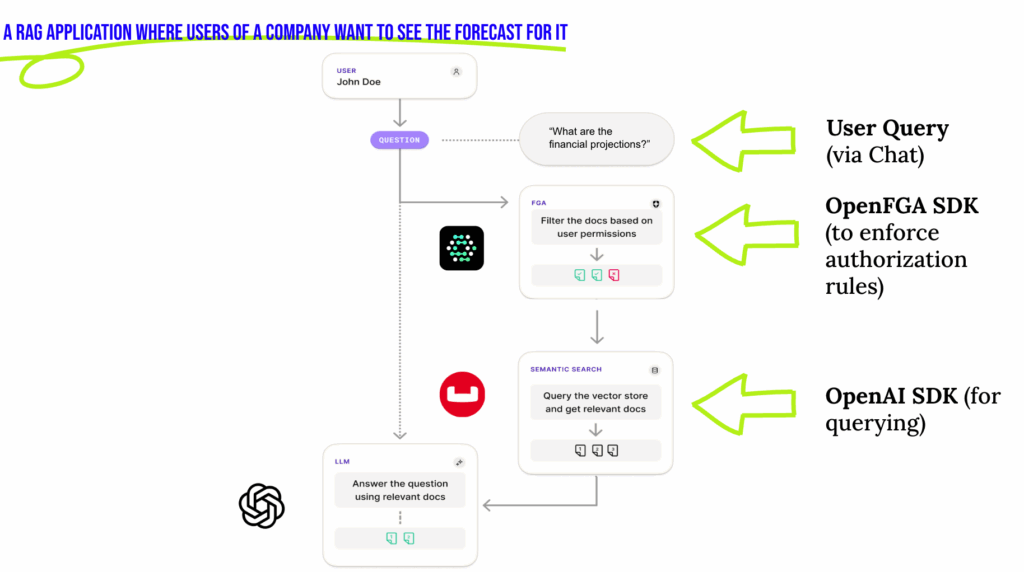

Implementing FGA in a RAG AI pipeline

RAG is a framework designed to overcome the limitations of LLMs and provide more accurate and detailed responses. Although LLMs are trained on vast data sets, they often struggle with specialized knowledge, up-to-date information and generating factually incorrect outputs, also known as “hallucinations.” RAG mitigates these problems by dynamically retrieving relevant data from external sources in real time.

Instead of relying purely on pre-trained knowledge, a RAG system retrieves domain-specific data. This is great when the data is public or freely shareable. But what to do if some of that data is restricted or confidential? This raises a significant challenge: ensuring that each user only accesses the information they are authorized to see. A secure RAG system needs to enforce fine-grained access control without sacrificing speed or scalability. Roles might change, projects can be reassigned, and permissions could evolve over time. Handling all this efficiently is key to building a truly secure and robust RAG application.

And this is exactly where OpenFGA comes in. By integrating OpenFGA with a RAG pipeline, we can decouple access control logic from the core RAG application. We can enforce authorization models in real time, and ensure that retrieved context is always filtered according to user permissions before being sent to the LLM for generating a response.

When integrating with a vector database like Couchbase, there are two main strategies to implement OpenFGA for RAG:

1. Post-filtering

-

- Retrieve documents from Couchbase Vector Search

- Pass results to OpenFGA to remove unauthorized docs

- Send filtered results to the AI model

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

def search_authorized_documents(self, query: str, user_id: str, top_k: int = 5) -> List[Dict[str, Any]]: """Search for documents using the pre-query filtering pattern""" try: # Step 1: Get authorized document IDs from OpenFGA authorized_docs = self.get_authorized_documents(user_id) if not authorized_docs: print(f"No authorized documents found for user: {user_id}") return [] # Step 2: Generate embedding for search query query_embedding = self.generate_embeddings(query, "text-embedding-ada-002") # Step 3: Perform vector search with metadata filter for authorized documents search_req = SearchRequest.create(MatchNoneQuery()).with_vector_search( VectorSearch.from_vector_query( VectorQuery("embedding", query_embedding, num_candidates=top_k * 2) ) ) # Execute search result = self.scope.search(self.search_index_name, search_req) rows = list(result.rows()) # Step 4: Filter results to only include authorized documents authorized_results = [] for row in rows: try: # Get the full document doc = self.collection.get(row.id) if doc and doc.value: doc_content = doc.value doc_source = doc_content.get("source", "") # Check if this document is in the authorized list if doc_source in authorized_docs: authorized_results.append({ "id": row.id, "text": doc_content.get("text", ""), "source": doc_source, "score": row.score, "metadata": doc_content.get("metadata", {}) }) # Stop if we have enough results if len(authorized_results) >= top_k: break except Exception as doc_error: print(f"Could not fetch document {row.id}: {doc_error}") return authorized_results |

2. Pre-filtering

-

- Call OpenFGA to remove the unauthorized docs

- Add a pre filter for the vector search query to limit the search scope

- Only retrieve embeddings for documents the user can access

Example of FGA with RAG

Let’s say you as a developer want to use an AI-assistant to get the forecast of the company. The system must ensure you only see the public forecast data and not any private financial reports that are restricted to the Finance team. Without the right safeguards, this becomes a Sensitive Information Disclosure risk, exactly the kind of issue highlighted by the OWASP Top 10 for LLM applications.

Here’s how Fine-Grained Authorization (FGA) solves it:

Step 1 – Permissions Check: OpenFGA checks the access rights. If the access doesn’t belong to the Finance team, private financial documents are excluded.

Step 2 – Filtering: OpenFGA (via its SDK) filters out any results the user shouldn’t see.

Step 3 – Document Retrieval: Perform vector search with the applied filter to only retrieve documents permissible to be seen by the user.

Step 4 – Answer Generation: LLM generates a response only from the authorized subset of documents.

Real world applications

There are a lot of benefits to applying Fine-Grained Authorization in AI applications. Let’s explore some of the popular use cases:

-

- Multi-Tenant SaaS: One tenant’s AI queries never retrieve another tenant’s data

- Healthcare: Patient record retrieval that is restricted to only authorized practitioners

- Finance: Sensitive forecasts and regulatory data accessible only to relevant teams

- Legal: Case documents restricted based on client-attorney assignments

Final thoughts: security without sacrificing speed

Without the right security, you risk adding a whole new attack surface to your application with agentic AI. AI applications now handle sensitive user data and are not just processing the information; they are interacting with APIs, automating decisions, and acting on users’ behalf

The agents need to have least privileged access to user data, non-static access credentials, and fine grained access control. OpenFGA provides a way to secure AI in apps while also enabling the applications to scale hundreds of millions active users seamlessly as the agent ecosystem grows.

Thus, Fine-Grained Authorization, powered by OpenFGA and integrated with Couchbase Vector Search, ensures AI systems are both powerful and safe, thus delivering AI innovation without compromising security.