Tag: LLMs

Unlocking the Power of AWS Bedrock with Couchbase

The explosion of generative AI has made vector databases a crucial part of modern applications. As businesses seek scalable and efficient solutions for AI-powered search, recommendation, and knowledge retrieval, AWS Bedrock and Couchbase emerge as a compelling combination. AWS Bedrock...

Introducing Model Context Protocol (MCP) Server for Couchbase

The cornerstone of autonomous AI Agentic systems and GenAI applications is an “Augmented LLM”, which is defined as an LLM enhanced with augmentations from various data sources and knowledge bases. Since its introduction, there has been a lot of buzz...

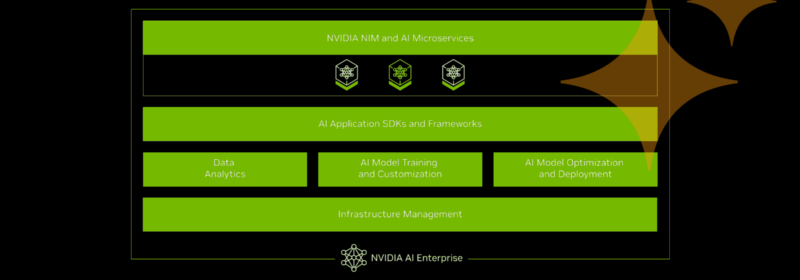

Couchbase and NVIDIA Team Up to Help Accelerate Agentic Application Development

Capella AI Services alongside NVIDIA AI Enterprise Couchbase is working with NVIDIA to help enterprises accelerate the development of agentic AI applications by adding support for NVIDIA AI Enterprise including its development tools, Neural Models framework (NeMo) and NVIDIA Inference...

DeepSeek Models Now Available in Capella AI Services

Unlock advanced reasoning at a lower TCO for Enterprise AI Today, we’re excited to share that DeepSeek-R1 is now integrated into Capella AI Services, available in preview! This powerful distilled model, based on Llama 8B, enhances your ability to build...

A Tool to Ease Your Transition From Oracle PL/SQL to Couchbase JavaScript UDF

What is PL/SQL? PL/SQL is a procedural language designed specifically to embrace SQL statements within its syntax. It includes procedural language elements such as conditions and loops, and can handle exceptions (run-time errors). PL/SQL is native to Oracle databases, and...

Integrate Groq’s Fast LLM Inferencing With Couchbase Vector Search

With so many LLMs coming out, a lot of companies are focusing on enhancing the inference speeds for large language models with specialized hardware and optimizations to be able to scale the inference capabilities of these models. One such company...

Capella Model Service: Secure, Scalable, and OpenAI-Compatible

Couchbase Capella has launched a Private Preview for AI services! Check out this blog for an overview of how these services simplify the process of building cloud-native, scalable AI applications and AI agents. In this blog, we’ll explore the Model...

Couchbase + Dify: High-Power Vector Capabilities for AI Workflows

We’re excited to announce the new integration between Couchbase and Dify.ai, bringing Couchbase’s robust vector database capabilities into Dify’s streamlined LLMops ecosystem. Dify empowers teams with a no-code solution to build, manage, and deploy AI-driven workflows efficiently. Now, with Couchbase...

Couchbase Introduces Capella AI Services to Expedite Agent Development

TLDR: Couchbase announces new AI Services in the Capella developer data platform to efficiently and effectively create and operate GenAI-powered agents. These services include the Model Service for private and secure hosting of open source LLMs, the Unstructured Data Service...