Check out the blogs that fall under the Vector Search category. Learn more about vector search best practices, interacting with LLMs, and more.

Category: Vector Search

Enhancing Performance Using XATTRs for Vector Storage and Search

Couchbase XATTRs store vector data efficiently, improving performance by keeping bulky content out of query paths. Here's how XATTRs work with search.

AI in Action: Enhancing and Not Replacing Jobs

Build a Ruby on Rails app integrating Vonage, Couchbase, and OpenAI for customer support, improving agent workflows with vector search and WhatsApp.

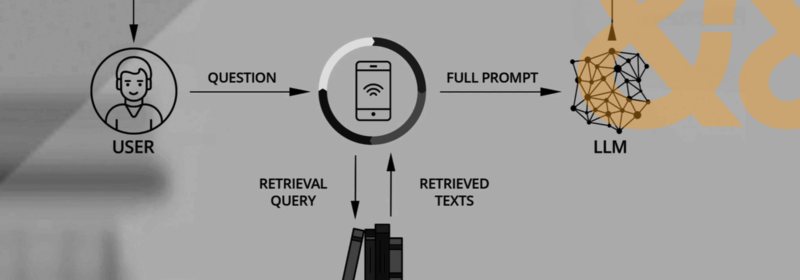

From Concept to Code: LLM + RAG with Couchbase

Learn how to build a generative AI recommendation engine using an LLM, RAG, and Couchbase integration. A step-by-step guide for developers.

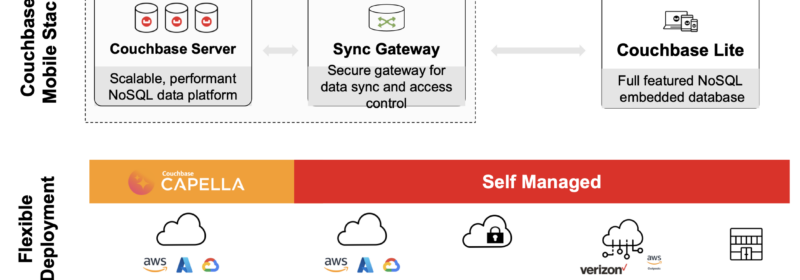

Building a Path to Edge AI for Vector Search, Image, and Data Focused Applications

Couchbase integrates AI, vector search, and edge computing to enhance customer experiences with fast, reliable, and real-time data processing at the edge.

Your Alternative To MongoDB Atlas Device Sync & Atlas Device SDKs (formerly Realm): Couchbase Mobile

While MongoDB ends mobile support, Couchbase Mobile offers a reliable, scalable solution for offline-first, AI-powered apps.

Couchbase Shell (cbsh) Reaches v1.0: Unlocking the Power of Vector Search & Beyond

Couchbase releases Couchbase Shell (cbsh) v1.0 with advanced vector search for GenAI and improved database interactions.

New Couchbase Capella Advancements Fuel Development

Fuel AI-driven development with Capella’s latest updates: real-time analytics, vector search at the edge, and a free tier to start quickly.

Vector Search at the Edge with Couchbase Mobile

Couchbase Lite is the first database platform with cloud-to-edge support for vector search, powering AI apps in the cloud and at the edge. Learn more here.

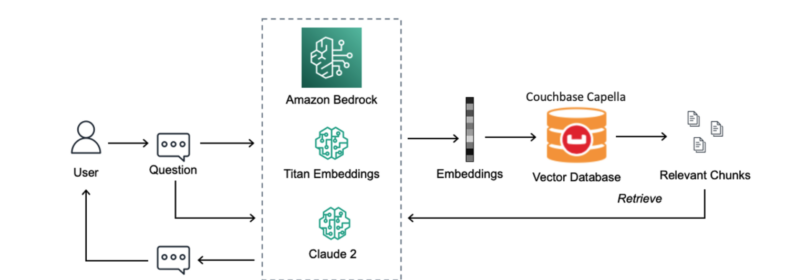

Build Performant RAG Applications Using Couchbase Vector Search and Amazon Bedrock

Enhance generative AI with Retrieval-Augmented Generation using Couchbase Capella and Amazon Bedrock for scalable, accurate results.

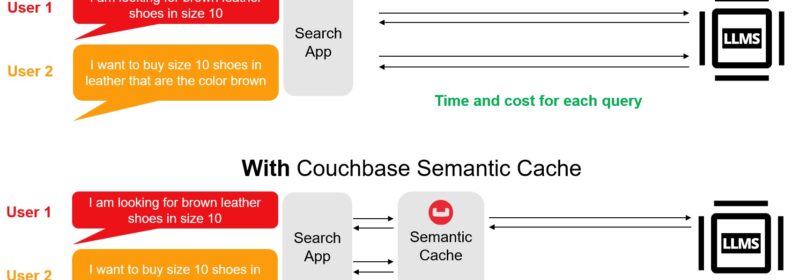

Build Faster and Cheaper LLM Apps With Couchbase and LangChain

The LangChain-Couchbase package integrates Couchbase's vector search, semantic cache, and conversational cache for generative AI workflows.

Get Started With Couchbase Vector Search In 5 Minutes

Vector search and full-text search are both methods used for searching through collections of data, but they operate in different ways and are suited to different types of data and use cases.
