Couchbase Capella’s Cluster On/Off feature allows users to pause and resume their clusters seamlessly, without permanently deleting the data, helping optimise cloud expenses and improve operational efficiency.

In this blog, we’ll explore how this feature works and how you can automate it using Terraform.

Users have two options for managing cluster availability:

- On-Demand On/Off – Manually pause and resume clusters as needed.

- Scheduled On/Off – Automate pausing and resuming clusters on specific days and times in a chosen time zone.

Turning off (pausing) a cluster

- Stops all cluster services.

- Persists data, configuration, and metadata.

- Frees compute resources to reduce costs while keeping storage intact.

Turning on (resuming) a cluster

- Restores the cluster from a paused state.

- Restarts services and makes data accessible again.

- Allows clients to reconnect and resume operations seamlessly.

Why use the cluster on/off feature?

- Saves compute costs and aligns spend with usage:

  - When a cluster is turned off, compute resources (e.g., CPU, RAM) are not billed, reducing infrastructure costs significantly.

  - While a cluster is off, you pay only the off rate (mostly storage and management costs).

  - Costs track actual usage instead of paying for idle resources.

- Reduces operational overhead:

  - Eliminates the need for manual cluster provisioning and teardown.

  - Users can easily resume a paused cluster without reconfiguring or redeploying resources.

- Persistence of data and cost-efficient development/testing:

  - Data, schema (buckets, scopes, collections), indexes, users, allow lists, and backups remain intact when a cluster is paused.

  - No configuration is lost when operations resume.

  - Developers can pause non-production environments outside of working hours. This prevents unnecessary costs in CI/CD pipelines, staging, or UAT environments.

Cost optimization

One of the simplest and most effective ways to reduce operational costs is by turning off clusters when they are not in use. We will consider the use case of an AWS cluster with 3 nodes (Data, Index and Query) using a Developer Pro Plan in Couchbase Capella, with the configuration of 2 vCPUs, 16 GB RAM, disk size of 50GB, disk type GP3, and IOPS 3000.

When this cluster is kept online 24/7, it incurs a monthly compute cost of $1,497.60 under the pay-as-you-go model. However, many development clusters are only needed during working hours. By simply shutting down the cluster for 12 hours each day, such as overnight, teams can save $705.60 per month, amounting to a 47% reduction in compute costs. This makes a strong case for automating cluster downtime to optimise cloud spending without impacting developer productivity.
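The arithmetic behind these figures can be checked with a few Terraform expressions. The sketch below assumes a 30-day month (720 hours), which the article does not state, and the local names are purely illustrative.

```hcl
# Cost arithmetic for the example cluster, evaluated with Terraform expressions.
# Assumption (not stated in the article): a 30-day month, i.e. 720 hours.
locals {
  always_on_monthly_cost = 1497.60 # USD, from the article
  monthly_savings        = 705.60  # USD, from the article
  hours_per_month        = 24 * 30 # assumed 30-day month
  off_hours_per_month    = 12 * 30 # cluster off 12 hours per day

  # Hourly compute rate implied by the always-on figure.
  on_hourly_rate = local.always_on_monthly_cost / local.hours_per_month

  # Net saving for each hour the cluster is off.
  saving_per_off_hour = local.monthly_savings / local.off_hours_per_month

  # Share of the always-on bill that is saved, rounded down to a whole percent.
  savings_percent = floor(local.monthly_savings / local.always_on_monthly_cost * 100)
}

output "cost_summary" {
  value = {
    on_hourly_rate      = local.on_hourly_rate
    saving_per_off_hour = local.saving_per_off_hour
    savings_percent     = local.savings_percent
  }
}
```

Under that assumption, the figures imply roughly $2.08 per hour while the cluster is on and a net saving of roughly $1.96 for each hour it is off, which suggests the paused cluster still costs about $0.12 per hour. Treat these as back-of-the-envelope values, not published rates.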

The savings add up in organizations that run multiple clusters or development environments that do not need round-the-clock availability. By scheduling downtime for non-essential clusters, teams can reallocate their budgets more effectively and invest in areas that directly impact business outcomes.

Getting started: deploy an on/off schedule using the Terraform provider

The Capella Terraform Provider allows users to manage Capella deployments programmatically, automating resource orchestration. Let’s go through a simple tutorial to deploy an On/Off schedule in Capella through the Terraform Provider.

To learn how to turn a cluster off or on on demand using Terraform, refer to the examples and README in the Capella Terraform provider repository, which give a step-by-step overview of switching a cluster on or off on demand.
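For orientation, an on-demand pause might look like the sketch below. The resource type `couchbase-capella_cluster_onoff_ondemand` and its `state` attribute are assumptions based on the provider's on-demand example, not something this article confirms, so check the provider documentation before using them. The variables and provider configuration are the ones defined in Step 1 of this tutorial.

```hcl
# Hypothetical sketch: pause an existing cluster on demand.
# ASSUMPTION: the resource type and the `state` attribute follow the provider's
# on-demand example; verify both against the provider documentation.
resource "couchbase-capella_cluster_onoff_ondemand" "pause_cluster" {
  organization_id = var.organization_id
  project_id      = var.project_id
  cluster_id      = var.cluster_id
  state           = "off" # assumed values: "on" or "off"
}
```

If the resource behaves as assumed, changing `state` to "on" and applying again would resume the cluster.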

In addition to the cluster on/off feature, an App Service can also be turned on/off on demand. Please refer to the following documentation and the Terraform provider examples for more information.

What you’ll learn

- How to configure the files and which commands are required to run the Terraform scripts.

- A step-by-step tutorial on deploying a Cluster On/Off schedule in Capella using the provider.

Prerequisites

Before you begin, ensure you have:

- Terraform version >= 1.5.2

- Go version >= 1.20

- Capella paid account

- Capella cluster – You can create a Couchbase cluster using the provider, the Capella UI, or the Capella Management API, and then use the corresponding organization_id, project_id, and cluster_id to create the on/off schedule.

- Authentication API key – You can create the API key using the Capella UI or the Capella Management API. The key must have the right set of roles associated with it, depending on the scope of the resources managed by the provider.

For information on setting the authorization and authentication, please refer to the Terraform repository and this blog post.

Step 1: Configuration

Create the variables

- Create a file named variables.tf and add the following variable definitions. We will use these variables within our config file.

```hcl
variable "organization_id" {
  description = "Capella Organization ID"
}

variable "project_id" {
  description = "Capella Project ID"
}

variable "cluster_id" {
  description = "Capella Cluster ID"
}

variable "auth_token" {
  description = "Authentication API Key"
  sensitive   = true # keeps the key out of plan and apply output
}
```

- Create a file named terraform.template.tfvars and add the following lines. Here, we specify the values of key variables associated with the deployment.

```hcl
auth_token      = "<replace-with-v4-api-key-secret>"
organization_id = "<replace-with-the-oid-of-your-tenant>"
project_id      = "<replace-with-the-projectid-of-your-project>"
cluster_id      = "<replace-with-the-clusterid-of-your-cluster>"
```

- Create a file named capella.tf and add the following configuration to create the On/Off schedule for an existing Capella cluster in a project:

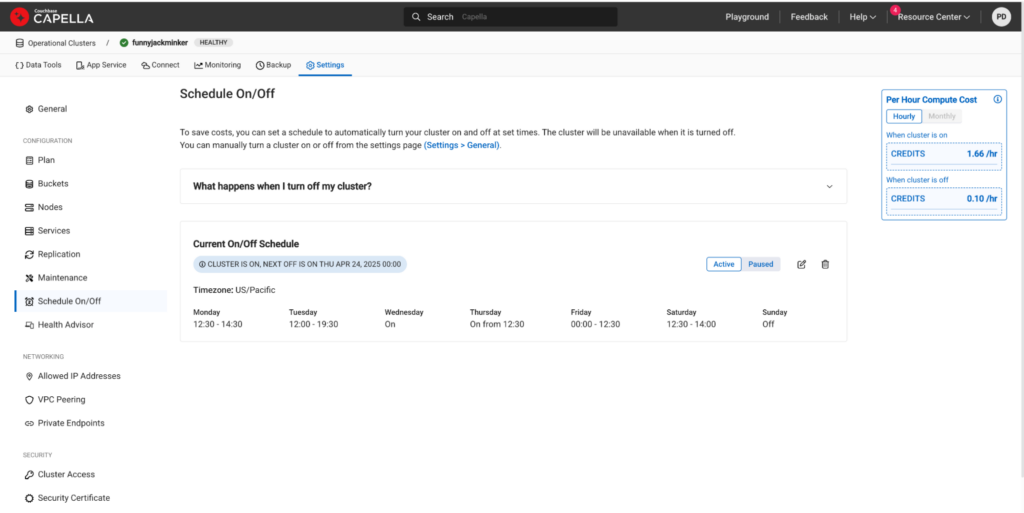

```hcl
terraform {
  required_providers {
    couchbase-capella = {
      source = "registry.terraform.io/couchbasecloud/couchbase-capella"
    }
  }
}

# Configure the Couchbase Capella Provider using predefined variables
provider "couchbase-capella" {
  authentication_token = var.auth_token
}

# Create an on off schedule for the cluster
resource "couchbase-capella_cluster_onoff_schedule" "new_cluster_onoff_schedule" {
  organization_id = var.organization_id
  project_id      = var.project_id
  cluster_id      = var.cluster_id
  timezone        = "US/Pacific"
  days = [
    {
      day   = "monday"
      state = "custom"
      from = {
        hour   = 12
        minute = 30
      }
      to = {
        hour   = 14
        minute = 30
      }
    },
    {
      day   = "tuesday"
      state = "custom"
      from = {
        hour = 12 # minute is omitted; the plan output shows it as 0
      }
      to = {
        hour   = 19
        minute = 30
      }
    },
    {
      day   = "wednesday"
      state = "on"
    },
    {
      day   = "thursday"
      state = "custom"
      from = {
        hour   = 12
        minute = 30
      }
    },
    {
      day   = "friday"
      state = "custom"
      from  = {} # empty block; the plan output shows hour 0, minute 0
      to = {
        hour   = 12
        minute = 30
      }
    },
    {
      day   = "saturday"
      state = "custom"
      from = {
        hour   = 12
        minute = 30
      }
      to = {
        hour = 14
      }
    },
    {
      day   = "sunday"
      state = "off"
    }
  ]
}

# Stores the cluster onoff schedule details in an output variable.
# Can be viewed using `terraform output cluster_onoff_schedule` command
output "cluster_onoff_schedule" {
  value = couchbase-capella_cluster_onoff_schedule.new_cluster_onoff_schedule
}
```
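The schedule above exercises every variation of the day entries. For the working-hours scenario from the cost section, a more compact variant could generate the entries with `for` expressions. This is only a sketch: it assumes that a "custom" day keeps the cluster on between `from` and `to` (the article does not spell this out), the resource label `working_hours` and the local names are hypothetical, and it is meant to replace the schedule resource above, not to sit alongside it.

```hcl
# Sketch: cluster on 08:00-20:00 on weekdays, off at weekends.
# ASSUMPTION: state = "custom" means "on between from and to".
locals {
  weekdays = ["monday", "tuesday", "wednesday", "thursday", "friday"]
  weekend  = ["saturday", "sunday"]
}

resource "couchbase-capella_cluster_onoff_schedule" "working_hours" {
  organization_id = var.organization_id
  project_id      = var.project_id
  cluster_id      = var.cluster_id
  timezone        = "US/Pacific"

  # Build the seven entries in Monday-to-Sunday order.
  days = concat(
    [for d in local.weekdays : {
      day   = d
      state = "custom"
      from  = { hour = 8, minute = 0 }
      to    = { hour = 20, minute = 0 }
    }],
    [for d in local.weekend : {
      day   = d
      state = "off"
    }]
  )
}
```

Generating the list keeps the on and off hours in one place, so changing the working window is a two-line edit instead of five.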

Step 2: Deploy and Manage the Schedule

Initialize the Terraform provider

Terraform must be initialized the very first time you use the provider:

```shell
terraform init
```

Review the Terraform plan

Use the following command to review the resources that will be deployed:

```shell
terraform plan -var-file terraform.template.tfvars
```

You should see output similar to the following while creating an on/off schedule for an existing cluster:

```
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # couchbase-capella_cluster_onoff_schedule.new_cluster_onoff_schedule will be created
  + resource "couchbase-capella_cluster_onoff_schedule" "new_cluster_onoff_schedule" {
      + cluster_id      = "1cd2a882-ddc2-497a-a9f9-60bd8da5488f"
      + days            = [
          + {
              + day   = "monday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
              + to    = {
                  + hour   = 14
                  + minute = 30
                }
            },
          + {
              + day   = "tuesday"
              + from  = {
                  + hour   = 12
                  + minute = 0
                }
              + state = "custom"
              + to    = {
                  + hour   = 19
                  + minute = 30
                }
            },
          + {
              + day   = "wednesday"
              + state = "on"
            },
          + {
              + day   = "thursday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
            },
          + {
              + day   = "friday"
              + from  = {
                  + hour   = 0
                  + minute = 0
                }
              + state = "custom"
              + to    = {
                  + hour   = 12
                  + minute = 30
                }
            },
          + {
              + day   = "saturday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
              + to    = {
                  + hour   = 14
                  + minute = 0
                }
            },
          + {
              + day   = "sunday"
              + state = "off"
            },
        ]
      + organization_id = "7a99d00c-f55b-4b39-bc72-1b4cc68ba894"
      + project_id      = "9f837bbd-d1f3-476d-ac62-ba65a6548215"
      + timezone        = "US/Pacific"
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + cluster_onoff_schedule = {
      + cluster_id      = "1cd2a882-ddc2-497a-a9f9-60bd8da5488f"
      + days            = [
          + {
              + day   = "monday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
              + to    = {
                  + hour   = 14
                  + minute = 30
                }
            },
          + {
              + day   = "tuesday"
              + from  = {
                  + hour   = 12
                  + minute = 0
                }
              + state = "custom"
              + to    = {
                  + hour   = 19
                  + minute = 30
                }
            },
          + {
              + day   = "wednesday"
              + from  = null
              + state = "on"
              + to    = null
            },
          + {
              + day   = "thursday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
              + to    = null
            },
          + {
              + day   = "friday"
              + from  = {
                  + hour   = 0
                  + minute = 0
                }
              + state = "custom"
              + to    = {
                  + hour   = 12
                  + minute = 30
                }
            },
          + {
              + day   = "saturday"
              + from  = {
                  + hour   = 12
                  + minute = 30
                }
              + state = "custom"
              + to    = {
                  + hour   = 14
                  + minute = 0
                }
            },
          + {
              + day   = "sunday"
              + from  = null
              + state = "off"
              + to    = null
            },
        ]
      + organization_id = "7a99d00c-f55b-4b39-bc72-1b4cc68ba894"
      + project_id      = "9f837bbd-d1f3-476d-ac62-ba65a6548215"
      + timezone        = "US/Pacific"
    }
```

Execute the Terraform plan

Deploy the Couchbase Capella resources using the following command:

```shell
terraform apply -var-file terraform.template.tfvars
```

Type “yes” if the plan looks good.

Note: It will take a few minutes to deploy the resources if you are creating a project, deploying a cluster and creating an on/off schedule for it.

```
Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

couchbase-capella_cluster_onoff_schedule.new_cluster_onoff_schedule: Creating...
couchbase-capella_cluster_onoff_schedule.new_cluster_onoff_schedule: Creation complete after 0s

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

cluster_onoff_schedule = {
  "cluster_id" = "1cd2a882-ddc2-497a-a9f9-60bd8da5488f"
  "days" = tolist([
    {
      "day" = "monday"
      "from" = {
        "hour" = 12
        "minute" = 30
      }
      "state" = "custom"
      "to" = {
        "hour" = 14
        "minute" = 30
      }
    },
    {
      "day" = "tuesday"
      "from" = {
        "hour" = 12
        "minute" = 0
      }
      "state" = "custom"
      "to" = {
        "hour" = 19
        "minute" = 30
      }
    },
    {
      "day" = "wednesday"
      "from" = null /* object */
      "state" = "on"
      "to" = null /* object */
    },
    {
      "day" = "thursday"
      "from" = {
        "hour" = 12
        "minute" = 30
      }
      "state" = "custom"
      "to" = null /* object */
    },
    {
      "day" = "friday"
      "from" = {
        "hour" = 0
        "minute" = 0
      }
      "state" = "custom"
      "to" = {
        "hour" = 12
        "minute" = 30
      }
    },
    {
      "day" = "saturday"
      "from" = {
        "hour" = 12
        "minute" = 30
      }
      "state" = "custom"
      "to" = {
        "hour" = 14
        "minute" = 0
      }
    },
    {
      "day" = "sunday"
      "from" = null /* object */
      "state" = "off"
      "to" = null /* object */
    },
  ])
  "organization_id" = "7a99d00c-f55b-4b39-bc72-1b4cc68ba894"
  "project_id" = "9f837bbd-d1f3-476d-ac62-ba65a6548215"
  "timezone" = "US/Pacific"
}
```

Once applied, the schedule is also visible in the Capella UI.

Destroy the schedule

Remove the on/off schedule when it is no longer needed:

```shell
terraform destroy -var-file terraform.template.tfvars
```

References

- Check out Cluster On/Off Schedule using Terraform Provider.

- Check out the On-demand Cluster On/Off using Terraform Provider.

- Check out the On-demand App Service On/Off using Terraform Provider.

- Couchbase official docs for On-demand Cluster On/Off

- Couchbase official docs for Cluster On/Off Schedule

- Couchbase official docs for App Service On/Off Schedule