The explosion of generative AI has made vector databases a crucial part of modern applications. As businesses seek scalable and efficient solutions for AI-powered search, recommendation, and knowledge retrieval, AWS Bedrock and Couchbase emerge as a compelling combination. AWS Bedrock simplifies access to powerful foundation models, while Couchbase’s vector store capabilities provide the storage and retrieval efficiency needed to build high-performance AI applications.

In this blog, we explore how Couchbase’s vector store, when integrated with AWS Bedrock, creates a powerful, scalable, and cost-effective AI solution. We’ll also showcase some key implementation details to help you get started.

Why AWS Bedrock + Couchbase?

Let’s dive deeper into the unique value each component provides and how they work together to power modern AI applications.

Seamless access to foundation models

AWS Bedrock offers access to multiple foundation models through a single API, without the need to manage the underlying infrastructure. This makes it easy for businesses to experiment with, deploy, and scale AI-powered applications without worrying about the complexity of model hosting and fine-tuning.
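
As a rough sketch of what this looks like in code, the function below requests an embedding through the Bedrock Runtime `invoke_model` call. It assumes the Amazon Titan Text Embeddings V2 model (`amazon.titan-embed-text-v2:0`) and its `inputText`/`embedding` JSON fields; the demo application may use a different model. The client is passed in, so in production it would be `boto3.client("bedrock-runtime")` and in tests it can be a stub.

```python
import json
from typing import Any, List

# Assumption: Titan Text Embeddings V2; swap in whichever embedding model you enable in Bedrock.
EMBEDDING_MODEL_ID = "amazon.titan-embed-text-v2:0"


def embed_text(client: Any, text: str, model_id: str = EMBEDDING_MODEL_ID) -> List[float]:
    """Return the embedding vector for `text` using a Bedrock Runtime client.

    `client` is expected to be boto3.client("bedrock-runtime") in production.
    """
    # Titan embedding models take a JSON body with an "inputText" field.
    body = json.dumps({"inputText": text})
    response = client.invoke_model(
        modelId=model_id,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream: read it, parse the JSON, and return the "embedding" list.
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

Usage, assuming AWS credentials and model access are configured: `vector = embed_text(boto3.client("bedrock-runtime", region_name="us-east-1"), "What is Couchbase Eventing?")`.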

Scalable and cost-effective vector storage

Couchbase’s vector store capabilities let you store embeddings alongside your application data and search them by similarity. With built-in vector indexing, Couchbase helps AI-driven applications run similarity search over large collections without scanning every stored vector.
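
To make "search embeddings" concrete: a vector query ranks stored vectors by how similar they are to a query vector. The brute-force sketch below computes cosine similarity and returns the top-k document IDs. It only illustrates what is being computed; a vector index exists precisely so the database does not have to scan every vector like this. The document IDs and toy 3-dimensional vectors are made up.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], store: Dict[str, Sequence[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best (brute force)."""
    scored = ((doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical toy store: document ID -> embedding.
store = {
    "chunk::1": [1.0, 0.0, 0.0],
    "chunk::2": [0.7, 0.7, 0.0],
    "chunk::3": [0.0, 0.0, 1.0],
}
print(top_k([0.9, 0.1, 0.0], store, k=2))
```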

Bridging the gap between LLMs and enterprise data

By combining AWS Bedrock’s model inference capabilities with Couchbase’s vector database, businesses can build applications that answer user queries using their own data rather than only what the model learned during training. Whether it’s chatbot applications, enterprise search, or personalized recommendations, this integration lets AI work with real-world business data.

Seamless integration with the AWS ecosystem

Bedrock works alongside other AWS services such as Lambda, S3, and API Gateway, which makes it straightforward to build low-latency, serverless AI workflows.

The need for serverless

Serverless architectures offer several benefits for AI-powered applications, including:

- Zero infrastructure management – No need to provision, scale, or maintain servers, reducing operational complexity and accelerating AI deployment.

- Auto-scaling & cost efficiency – Capacity scales automatically with demand, and pay-as-you-go pricing means you pay only for what you use.

- Seamless integration & low latency – Easily connects with AWS services (Lambda, API Gateway) and provides real-time AI inference with minimal latency.

Now that we’ve covered the benefits, let’s look at a practical example that brings it all together.

A RAG PDF chat application example

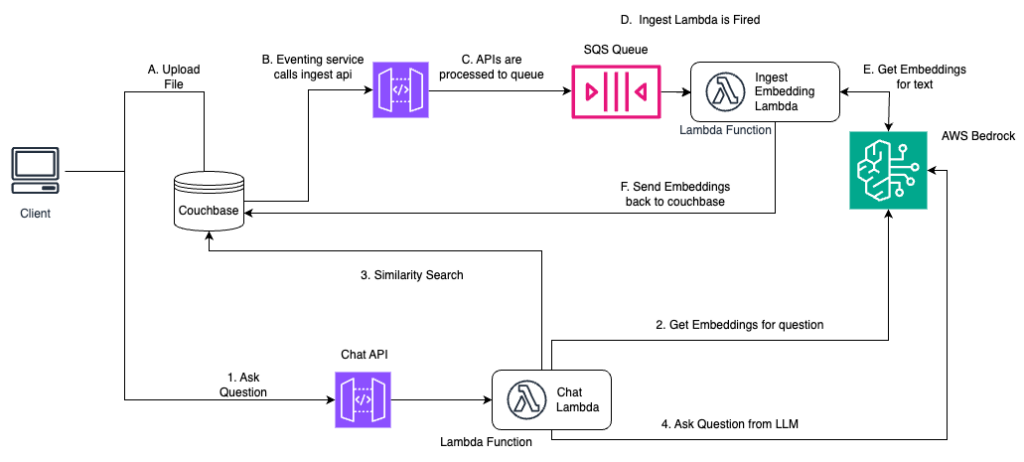

Here we demonstrate how to integrate AWS Bedrock with Couchbase in an LLM chat application. The app lets you upload your own PDFs, and a RAG pipeline built on Couchbase retrieves relevant passages from them so the LLM can answer your questions.
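
At answer time, a RAG pipeline boils down to: retrieve the chunks most similar to the question, place them in the prompt, and ask the model to answer from that context. A minimal sketch using the Bedrock Runtime `converse` API follows. The prompt wording, the `build_rag_prompt` helper, and the model ID are assumptions for illustration, not the demo's actual code.

```python
from typing import Any, List

# Assumption: any Bedrock chat model that supports the Converse API.
CHAT_MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_rag_prompt(question: str, chunks: List[str]) -> str:
    """Number the retrieved chunks and place them ahead of the user's question."""
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(client: Any, question: str, chunks: List[str], model_id: str = CHAT_MODEL_ID) -> str:
    """Send the grounded prompt to Bedrock and return the model's text reply."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": build_rag_prompt(question, chunks)}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.0},
    )
    # Converse returns the assistant message under output.message.content.
    return response["output"]["message"]["content"][0]["text"]
```

Setting `temperature` to 0 is a common choice for question answering over documents, since it keeps replies close to the supplied context; tune it for your use case.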

- Find the code in this GitHub repository.

A detailed discussion of the diagram can be found in this PDF chat using AWS Bedrock serverless tutorial. In short, there are two flows: one ingests PDF data, and the other handles the user’s interaction with the chat application. Along the way, we use a couple of key Couchbase services that are critical to the application: Eventing and Vector Search.
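
On the ingestion side, extracted PDF text is usually split into overlapping chunks before each chunk is embedded and stored. This keeps each retrieved passage small enough to fit in a prompt and avoids losing context at chunk boundaries. The sketch below uses a simple character-based splitter; the chunk size and overlap are illustrative defaults, and the demo repository may split text differently.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 150) -> List[str]:
    """Split text into windows of `chunk_size` characters that share `overlap` characters with the previous window."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunk = text[start:start + chunk_size]
        if chunk.strip():  # skip windows that are only whitespace
            chunks.append(chunk)
        # Stop once this window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=1)` yields `["abcd", "defg", "ghij"]`.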

Couchbase Eventing

The Eventing Service reacts to data changes as applications write to Couchbase, and it has several features that suit AI applications:

- Real-time data processing – Couchbase Eventing allows you to execute business logic in response to data mutations (inserts, updates, deletes) within a Couchbase bucket.

- Asynchronous & scalable – The Eventing Service runs asynchronously and scales independently of other services, so it can keep up with high-throughput workloads.

- Integration with external systems – Eventing functions can trigger external APIs, call microservices, or interact with message queues.

- Low latency & high performance – Designed for minimal overhead with direct memory access to data.
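
Couchbase Eventing handlers are written in JavaScript, with an `OnUpdate(doc, meta)` entry point that runs on each mutation. To keep this post's examples in one language, the Python sketch below models only the decision such a handler might make: forward a newly written chunk for embedding, but skip documents that already carry an embedding, so the write-back from the embedding step does not re-trigger the pipeline in a loop. The `text` and `embedding` field names and the message shape are hypothetical.

```python
import json
from typing import Any, Dict, Optional


def message_for_mutation(doc: Dict[str, Any], meta: Dict[str, Any]) -> Optional[str]:
    """Return a JSON message to enqueue for embedding, or None to ignore the mutation.

    Mirrors the kind of check an Eventing OnUpdate(doc, meta) handler could run;
    `doc` is the mutated document and `meta["id"]` is its key.
    """
    # Hypothetical schema: chunks carry raw "text"; processed chunks also carry "embedding".
    if "text" not in doc:
        return None  # not a chunk document
    if doc.get("embedding"):
        return None  # already embedded: this is the write-back, so don't loop
    # Forward only the key and the text to embed.
    return json.dumps({"id": meta["id"], "text": doc["text"]})
```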

Couchbase Vector Search

Vector Search builds on Couchbase Capella’s Search Service to provide vector index support. You can use these new Vector Search indexes for Retrieval Augmented Generation (RAG) with an existing Large Language Model (LLM), with these architectural benefits:

- Hybrid search – Combines semantic search with traditional keyword and metadata filtering for more relevant results.

- Low latency & high performance – Optimized for real-time AI applications, ensuring fast retrieval of similar items.

- Seamless integration with Couchbase – Runs natively within Couchbase, allowing efficient vector storage, indexing, and retrieval alongside structured and unstructured data.
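
For a sense of what a vector query looks like, the sketch below builds a Search service request body with a `knn` clause, optionally combined with a keyword `match` query for hybrid search. It assumes a Couchbase version with vector search support (7.6 or later, or Capella); the field names `embedding` and `text` are hypothetical and must match your own vector index definition. The resulting body would be sent to your index's Search query endpoint, or the equivalent query built through an SDK's vector search API.

```python
import json
from typing import Any, Dict, Optional, Sequence


def vector_search_request(
    query_vector: Sequence[float],
    k: int = 5,
    vector_field: str = "embedding",
    keyword: Optional[str] = None,
    text_field: str = "text",
) -> Dict[str, Any]:
    """Build a Search request body with a k-nearest-neighbour clause.

    With `keyword` set, a full-text match query runs alongside the vector clause
    (hybrid search); without it, match_none keeps only the kNN hits.
    """
    request: Dict[str, Any] = {
        "fields": [text_field],
        "knn": [{"field": vector_field, "vector": list(query_vector), "k": k}],
        "size": k,
    }
    if keyword:
        request["query"] = {"match": keyword, "field": text_field}
    else:
        request["query"] = {"match_none": {}}
    return request


print(json.dumps(vector_search_request([0.12, -0.4, 0.33], k=3), indent=2))
```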

The application also relies on several other AWS services:

AWS Lambda

- Zero infrastructure management – just upload code and it runs

- Pay-per-use pricing

- Auto-scaling to handle traffic spikes
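
In a pipeline like this one, Lambda can consume the SQS queue: the service invokes the function with a batch of messages under `event["Records"]`. The sketch below processes each record and reports failures through a `batchItemFailures` response, which Lambda honors only when `ReportBatchItemFailures` is enabled on the event source mapping, so successful messages in a batch are not retried. `process_message` is a hypothetical placeholder for the real work of embedding the text with Bedrock and writing the vector to Couchbase.

```python
import json
from typing import Any, Dict, List


def process_message(message: Dict[str, Any]) -> None:
    """Hypothetical placeholder: embed message["text"] and store the vector in Couchbase."""
    if "id" not in message or "text" not in message:
        raise ValueError("message must contain 'id' and 'text'")


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    """Entry point for an SQS-triggered Lambda function."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_message(json.loads(record["body"]))
        except Exception:
            # Report only this message as failed; SQS makes it visible again for a retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Because standard SQS queues deliver messages at least once, the real processing step should be idempotent, for example by upserting each chunk under its document key so a redelivered message simply overwrites the same document.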

Amazon SQS

- High data throughput for handling large volumes of traffic

- High reliability – messages are stored redundantly and kept until a consumer processes and deletes them or the retention period expires

Amazon ECR

- Secure, fully managed registry for container images

- Integrates with other AWS services; for example, Lambda can run functions packaged as container images stored in ECR

- Ensures consistent behavior between development and production environments

For further reading

- Explore AWS Bedrock

- Learn more about AWS Lambda, Amazon SQS, Amazon ECR

- Check out Couchbase Capella, a managed database, and start using it today for free!