Welcome to the season of AI, and more specifically, generative AI. We know generative AI is going to be both a huge challenge and a huge opportunity for nearly every data-enabled enterprise application. In this blog, we'll dive into what generative AI is, how it works, and where Couchbase fits in the world of generative AI.

What is Generative AI?

Generative AI (artificial intelligence) is the umbrella term for content-creation algorithms that use large models, such as Large Language Models (LLMs), to construct new content based on the similarity-based knowledge the model has accumulated. The generated content can be text, code, graphics, pictures, audio, speech, music, or video. A user or a program interrogates the LLM with prompts that describe the topic and format of the desired output. The model then generates and refines the resulting content, hence the term “Generative AI.”

How does Generative AI work?

Generative AI was born from advancements in natural language processing (NLP) and image processing, which helped identify the intended meaning of a phrase and recognize objects such as trees and rocks within an image. Both are built using algorithms that detect similarities. For example, an algorithm could identify that tigers and tabbies are both types of cats. In many cases, these similarities are expressed along a large number of dimensions, and the algorithm creates numerical representations, called vectors, whose closeness to one another describes how similar one item or phrase is to another. See our blog on vector search.
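To make the idea of vector similarity concrete, here is a minimal Python sketch using cosine similarity, one common way to compare vectors. The three-dimensional vectors for "tiger", "tabby", and "rock" are hypothetical values made up for illustration; real embedding models learn vectors with hundreds or thousands of dimensions that carry no human-readable labels.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-picked embeddings for illustration only.
# A real model would produce these vectors itself, with many more dimensions.
embeddings = {
    "tiger": [0.9, 0.8, 0.0],
    "tabby": [0.9, 0.1, 0.0],
    "rock": [0.0, 0.3, 0.9],
}

print("tiger vs tabby:", cosine_similarity(embeddings["tiger"], embeddings["tabby"]))
print("tiger vs rock: ", cosine_similarity(embeddings["tiger"], embeddings["rock"]))
```

With these made-up values, the tiger/tabby pair scores noticeably higher than the tiger/rock pair, which is the kind of signal a vector search ranks results on.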

The challenge with these algorithms is that they must be trained to recognize when an item is similar to another, already recognized, item. This training requires feeding the models vast amounts of data, allowing them to compound and expand their field of recognition. Some LLMs have been trained on a large share of the publicly available internet to build their knowledge base and understanding.

Examples of Generative AI Tools

Key players in building and providing access to generative AI models include AWS, OpenAI, Microsoft, Google, Meta, and Anthropic. Each has released its own models, in both free and enterprise versions. We believe these vendors will create a centralizing gravitational force with their LLMs, similar to what they have done with their cloud and social services, because managing LLMs is incredibly resource-intensive.

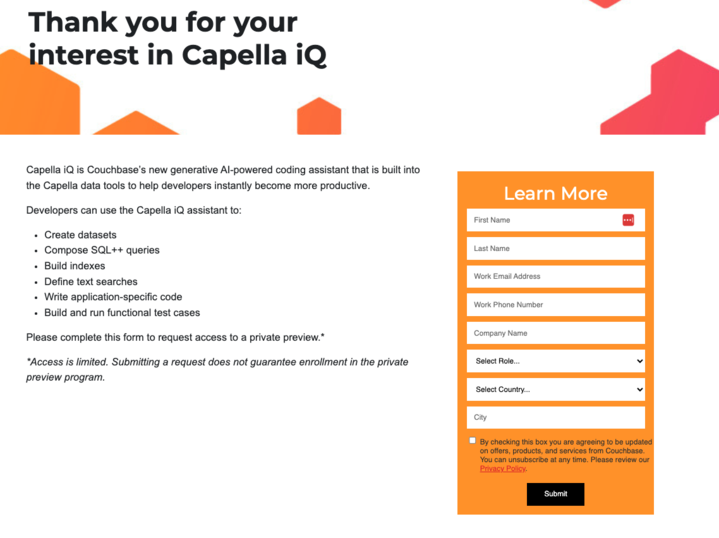

We are also seeing thousands of generative AI browser plug-ins emerge, as this ZDNet article illustrates. These plug-ins help end users create content of all kinds. At Couchbase, we have announced the private preview of our own generative AI-powered coding assistant, Capella iQ.

Why Couchbase is a player in Generative AI-powered Applications

Couchbase is already being used as a data platform for AI-powered applications. There are a number of reasons for this, including the value and flexibility of the JSON data format, the versatility of data access patterns afforded by Couchbase, and the introduction of our newest capability, Couchbase Capella iQ.

JSON is an ideal data format for AI-oriented data

Prompt engineering is the process of building generative AI prompts that contain the right amount of contextual data to elicit a relevant and accurate response from an LLM. Prompt engineering is a fast-growing field of expertise, and JSON is an ideal format for storing prompt data. Consider that:

- A JSON document can contain both data and metadata about that data within a single document in order to feed prompts.

- JSON is incredibly popular for storing account profile information, including attributes intended for personalization and user experience.

- JSON can also contain full prompt strings, which can help maintain conversational context from session to session. This is important because most LLMs are stateless and do not retain the context of a conversation between requests.
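As a minimal sketch of these points, the Python below keeps profile attributes, metadata, and prior conversation turns in one JSON document and replays them into each prompt, since the model itself keeps no state. The document shape and the `build_prompt` and `record_turn` helpers are hypothetical illustrations, not a Couchbase or LLM-vendor API, and no model is actually called.

```python
import json

# Hypothetical user-profile document; field names are illustrative only.
profile_doc = json.loads("""
{
  "type": "user_profile",
  "name": "Ada",
  "preferences": {"tone": "concise", "language": "en"},
  "meta": {"source": "crm", "updated": "2023-08-01"},
  "conversation": [
    {"role": "user", "content": "Suggest a weekend hike."},
    {"role": "assistant", "content": "Try the ridge trail; it is about 8 km."}
  ]
}
""")


def build_prompt(doc: dict, new_question: str, max_turns: int = 4) -> str:
    """Assemble a prompt from profile data plus recent conversation turns.

    The LLM is assumed to be stateless, so prior turns stored in the JSON
    document are replayed in every prompt. Only the last `max_turns` turns
    are kept to limit prompt size.
    """
    prefs = doc["preferences"]
    lines = [
        f"User: {doc['name']} (preferred tone: {prefs['tone']}, language: {prefs['language']})",
        f"Profile source: {doc['meta']['source']}, last updated {doc['meta']['updated']}",
        "Conversation so far:",
    ]
    for turn in doc["conversation"][-max_turns:]:
        lines.append(f"{turn['role']}: {turn['content']}")
    lines.append(f"user: {new_question}")
    return "\n".join(lines)


def record_turn(doc: dict, role: str, content: str) -> None:
    """Append a turn so a later session can restore context from the document."""
    doc["conversation"].append({"role": role, "content": content})


prompt = build_prompt(profile_doc, "Is it dog-friendly?")
print(prompt)
record_turn(profile_doc, "user", "Is it dog-friendly?")
```

Because data, metadata, and conversation history live in a single document, one read is enough to rebuild the full context for the next request.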

All of these observations point to why JSON is an ideal data format for generative AI.

Data complexity is the enemy of Generative AI

We recognize that generative AI deals in similarities, not specifics. Accordingly, information derived from generative AI conversations may contain hallucinations: statements that look like real facts but cannot be traced back to any. This highlights the imprecise nature of generative AI. To minimize hallucinations and improve AI's accuracy, we believe data architectures must be simplified. Yet many applications are powered by multiple databases, each performing its own discrete operation, such as caching sessions, updating user profiles in JSON, managing relational transactions, searching text and locations, triggering events, scanning time-series logs, or searching vector embeddings for AI.

However, the complexity of using a separate database for each of these access patterns can confuse LLMs when prompts are derived from multiple sources. We expect data architects to realize that their generative AI prompts need a clean, simple data architecture and a single pool of prompt data to improve the accuracy of LLM results.

This is why a Couchbase-powered application, which supports all of the access patterns mentioned above in one platform, will not only help reduce architectural cost but also create cleaner, more accurate data to inform AI models.

Generative AI LLMs are centralized, but AI utilization is decentralized at the Edge

Generative AI interactions with large language models are a centralizing gravitational force for the major cloud service providers (CSPs) because of their extremely high processing and infrastructure requirements. But the data that feeds AI is created, and LLM results are consumed, at the edge and on mobile devices. This is especially true when an application is end-user facing and provides hyper-personalized content. Mobile applications are distributed and frequently disconnected. We explain this phenomenon in our recent blog on The New Stack.

Couchbase can bridge the Generative AI accuracy gap

Generative AI, while powerful, lacks precision in many of its answers. This creates a new challenge for developers: how to bridge the gap between the precision of traditional DBMS features, such as transactions and SQL queries, and the imprecision of AI-created results.

Using Couchbase Capella iQ, developers can lean on the precision of SQL++ and multi-document, distributed ACID transactions to help improve the accuracy of AI-powered applications built with Couchbase Capella.

Couchbase is the data platform for AI-powered applications

Couchbase customers are already building AI-powered applications because those applications need the fundamental capabilities of Couchbase, including:

Application performance

- High-speed in-memory caching for responsiveness

- Low latency, even at the edge where AI-targeted data is created and consumed

- Performance tuning to isolate and optimize workloads on the cluster

Versatility

- JSON for storing operational data, its metadata, its search arrays, and prompt sequences

- Multimodel access services for key-value operations, text and geospatial search, document change capture, time-series data, event capture and streaming, recursive (graph traversal) queries, and operational analytics

- Predictive queries in Couchbase Lite.

- The precision and ease of the SQL++ query language.

- Edge processing, hierarchical data synchronization, and peer-to-peer sync among locally-embedded mobile database instances (Couchbase Lite).

Enterprise scalability

- Distributed, geographic clustering

- Support for multi-cloud, DBaaS, Kubernetes, and self-managed deployments

- Reliable, patented, distributed multi-document ACID transactions when needed.

- Enterprise-grade security and certifications for PCI, HIPAA, and SOC 2 Type II.

Will Couchbase use AI in managing and operating the Capella Database as a Service?

Yes, Couchbase is exploring ways to use AI to support operational activities like cluster sizing, scaling, rebalancing, edge automation and more.

Conclusion: Couchbase is ready for Generative AI

Couchbase will continue to incorporate AI capabilities across its product line by specifically focusing on:

- Improving developer productivity by introducing capabilities like Capella iQ

- Optimizing AI processing within the Couchbase platform

- Enabling AI-driven applications anywhere, including the Edge

- Building a vibrant AI partner ecosystem

How do I try Capella iQ?

Couchbase is offering private technology previews to the Couchbase community.

Simply sign up to be added to our waitlist, and we will prepare a preview session for you.