Tag: langchain

Integrate Groq’s Fast LLM Inferencing With Couchbase Vector Search

Integrate Groq’s fast LLM inference with Couchbase Vector Search for efficient RAG apps. Compare its speed with OpenAI, Gemini, and Ollama.

From Concept to Code: LLM + RAG with Couchbase

Learn how to build a generative AI recommendation engine using an LLM, RAG, and Couchbase. A step-by-step guide for developers.

New Couchbase Capella Advancements Fuel Development

Fuel AI-driven development with Capella’s latest updates: real-time analytics, vector search at the edge, and a free tier to start quickly.

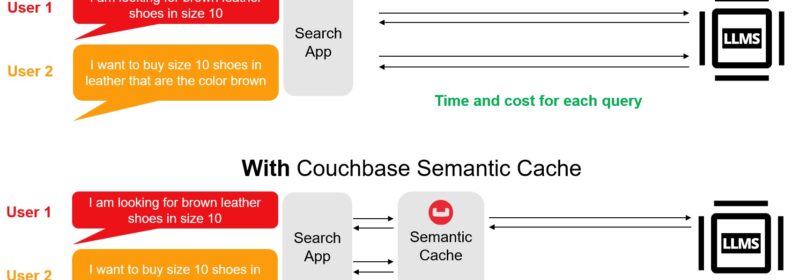

Build Faster and Cheaper LLM Apps With Couchbase and LangChain

The LangChain-Couchbase package integrates Couchbase's vector search, semantic cache, and conversational cache for generative AI workflows.

Get Started With Couchbase Vector Search In 5 Minutes

Vector search and full-text search are both methods for searching collections of data, but they work in different ways and suit different types of data and use cases.

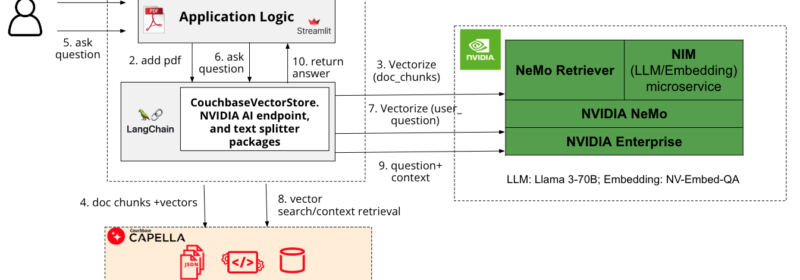

Accelerate Couchbase-Powered RAG AI Application With NVIDIA NIM/NeMo and LangChain

Develop an interactive GenAI application with grounded and relevant responses using Couchbase Capella-based RAG, and accelerate it using NVIDIA NIM/NeMo.

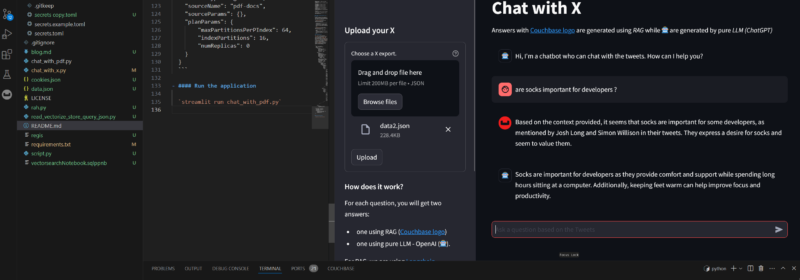

Twitter Thread tl;dr With AI? Part 2

Build a Streamlit app that uses LangChain and vector search over JSON data taken from Twitter and indexed in a Couchbase NoSQL database for interactive chat.
