Scalable AI search

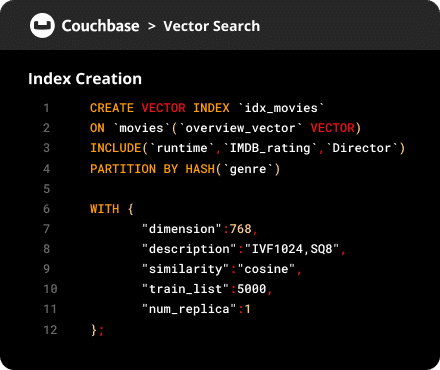

Hyperscale vector index: built for billion-scale data and ideal for RAG, agent, and recommendation applications.

Couchbase 8.0 helps developers move AI applications from concept to production faster. They can build fast AI-powered applications over very large datasets at a low total cost of ownership (TCO), get started quickly with natural language queries, and keep queries fast with new troubleshooting tools.

Use natural language to query with SQL++ extensions.

Define your own synonyms to make search results more relevant.

Built-in workload repository and performance insights.

Compatible with popular AI frameworks.

Improve operational excellence with smarter cluster management, advanced security capabilities, dynamic rebalancing, and faster failover for continuous service availability.

Out-of-the-box native encryption at rest makes data safer and life simpler.

Dynamically adjust non-KV (non-Key-Value) services without adding or removing nodes, avoiding rebalance delays.

Aggregate telemetry from your SDK clients for end-to-end monitoring and faster troubleshooting.

Automatically fail over non-responsive data nodes to improve application uptime, and serve requests while caches warm up.

Get quick answers to the most common questions about our latest server version.

The Hyperscale vector index supports billions of vectors with millisecond retrieval latency, using a DiskANN-based design.

Encryption at rest, KMIP key management, and event monitoring ensure data integrity and compliance.

Hybrid search is supported: use the Search vector index to combine vector and lexical queries.

Automatic failover, rebalancing, and faster startup ensure continuous operations.

To learn more, read the Couchbase 8.0 announcement blog.

Hyperscale vector indexes suit RAG use cases where you cannot anticipate what a prompt will ask the LLM, while Composite vector indexes use prefiltering parameters to narrow down which vectors are considered for inclusion in a prompt. Both deliver millisecond response times, so RAG workflows are not slowed down.
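To make the prefiltering idea concrete, here is a minimal, generic sketch in Python. It uses exact brute-force search over a toy in-memory list and is not Couchbase's index implementation or API; the sample documents, the `lang` field, and the `knn` and `knn_postfilter` helpers are all hypothetical. It shows why filtering before ranking matters: if you rank first and filter afterwards, the top-k slots can be taken by vectors that are later discarded.

```python
import math
from typing import Callable, Optional

# Hypothetical toy corpus: each document has an embedding and one metadata field.
DOCS = [
    {"id": "a", "vec": [1.0, 0.0], "lang": "en"},
    {"id": "b", "vec": [0.9, 0.1], "lang": "fr"},
    {"id": "c", "vec": [0.0, 1.0], "lang": "en"},
]
QUERY = [1.0, 0.0]


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def knn(docs: list, query: list, k: int,
        prefilter: Optional[Callable[[dict], bool]] = None) -> list:
    """Exact top-k by cosine similarity, with optional prefiltering.

    Documents that fail `prefilter` are dropped *before* ranking, so only
    matching vectors compete for the k result slots.
    """
    candidates = [d for d in docs if prefilter is None or prefilter(d)]
    ranked = sorted(candidates, key=lambda d: cosine(d["vec"], query), reverse=True)
    return [d["id"] for d in ranked[:k]]


def knn_postfilter(docs: list, query: list, k: int,
                   keep: Callable[[dict], bool]) -> list:
    """For contrast: rank everything, take top-k, then filter (may return < k)."""
    ranked = sorted(docs, key=lambda d: cosine(d["vec"], query), reverse=True)
    return [d["id"] for d in ranked[:k] if keep(d)]


if __name__ == "__main__":
    is_en = lambda d: d["lang"] == "en"
    print(knn(DOCS, QUERY, 2))                    # unfiltered: ['a', 'b']
    print(knn(DOCS, QUERY, 2, prefilter=is_en))   # prefiltered: ['a', 'c']
    print(knn_postfilter(DOCS, QUERY, 2, is_en))  # post-filtered: ['a']
```

In this toy run the post-filtered search returns only one result because the French document `b` occupied one of the two slots before being filtered out, while the prefiltered search still fills both slots with matching documents.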

Achieve high performance and accuracy at billion-vector scale for AI agents, RAG workflows, contextual memory, and recommendation systems, on premises or in Capella.