Couchbase Announces Vector Search

Couchbase announces vector search across its entire product line, including Capella, Enterprise Server, and Mobile, to enable AI-powered adaptive applications that run anywhere. Happy Leap Day! And congratulations to the entire Couchbase team for delivering these incredible products.

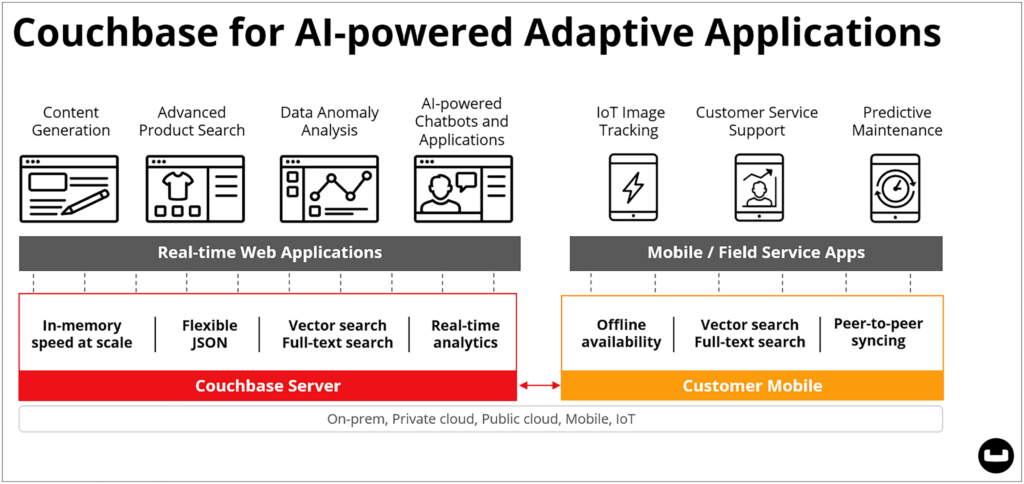

Yes, Couchbase is adding vector search to all its products and supports retrieval-augmented generation (RAG) with large language models (LLMs) through LangChain and LlamaIndex. This enables applications that are both hyper-personalized and contextualized in real time. Your applications will be able to adapt immediately to real-time conditions and situations, even in your mobile apps. We call these adaptive applications.

TLDR: Adding Vector Search to Edge, Cloud, and Server Applications

- Couchbase adds vector search to our built-in Search service in Couchbase Enterprise Edition Server 7.6, in Capella, our fully managed database, and in Couchbase Lite, our embeddable mobile database. We’re taking vector search to the edge and onto devices, which is very exciting.

- Couchbase announces LangChain and LlamaIndex support across our language-specific SDKs, boosting developer productivity and letting developers use whichever GenAI models they choose in their applications.

- Using RAG techniques with vector search grounds prompts in retrieved data, which improves GenAI response accuracy and reduces worries that models will hallucinate and return untrustworthy responses to an AI-powered application.

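- Vector search also helps ease enterprise concerns about oversharing sensitive or proprietary data with LLMs, because relevant data is retrieved and supplied as context at query time instead of being handed over as training data.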

- Developers will have full vector search support in the REST API, the SDKs, and SQL++. They can build on their longstanding familiarity with SQL to develop GenAI applications quickly and effectively.

- This also addresses the common need for “hybrid search”: combining similarity and semantic search with range, spatial, and text searches in a single request.

- We are the first vendor to put vector search into a mobile database.
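To make hybrid search concrete, here is a brute-force sketch in plain Python. It is not the Couchbase Search API: the `Doc` type, the tiny 3-dimensional embeddings, and the `hybrid_search` helper are hypothetical, and a real deployment would query a vector index instead of scanning every document. It shows exact predicates (a price range and a keyword) combined with vector similarity ranking.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    id: str
    text: str
    price: float
    embedding: list[float]  # hypothetical precomputed vector


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_search(docs, query_vec, keyword, max_price, k=2):
    """Filter by a range predicate and a keyword, then rank survivors by vector similarity."""
    candidates = [
        d for d in docs
        if d.price <= max_price and keyword.lower() in d.text.lower()
    ]
    ranked = sorted(candidates, key=lambda d: cosine(query_vec, d.embedding), reverse=True)
    return [d.id for d in ranked[:k]]


docs = [
    Doc("hotel-1", "Beachfront hotel with pool", 180.0, [0.9, 0.1, 0.0]),
    Doc("hotel-2", "Downtown hotel near museums", 120.0, [0.2, 0.8, 0.1]),
    Doc("hotel-3", "Budget hotel by the beach", 90.0, [0.8, 0.2, 0.1]),
    Doc("hostel-1", "Beach hostel", 40.0, [0.85, 0.15, 0.0]),
]

# Query vector pointing toward "beach", price capped at 150, text must mention "hotel".
print(hybrid_search(docs, [1.0, 0.0, 0.0], "hotel", 150.0))  # ['hotel-3', 'hotel-2']
```

Filtering first keeps the similarity computation limited to documents that already satisfy the exact predicates; in a hybrid query, both steps are expressed together in one request.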

We’ve also added many other new capabilities to Capella and Enterprise Server 7.6 (now available for download and as a free Capella trial), including:

- Support for graph-like relationship traversals using recursive common table expressions (CTEs) in SQL++, so you can now navigate org charts and other hierarchies stored in collections and documents.

- File-based index rebalancing. Rebuilding indexes during a data rebalance was no fun, so we’ve made index rebalancing 80% faster and easier.

- One-step upgrade from Couchstore to Magma without downtime, so you can enjoy the capacity, speed, and compression benefits of the Magma storage engine.

- Faster failover to improve high availability, with lower minimum reaction times: a 200 ms heartbeat interval and a one-second auto-failover timeout.

- Simpler query development: queries no longer require an index to exist before they can run.
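The recursive CTE idea can be illustrated without a cluster. The plain-Python sketch below emulates what a recursive CTE computes over a hypothetical `employees` collection in which each document stores its manager’s id: start from an anchor row, then repeatedly join the previous level’s rows to their direct reports until no new rows appear. It shows the semantics only; it is not SQL++ syntax, and the document shape and `reports_under` helper are assumptions.

```python
# Hypothetical employee documents; "manager" holds another document's id.
employees = [
    {"id": "e1", "name": "Ava", "manager": None},
    {"id": "e2", "name": "Ben", "manager": "e1"},
    {"id": "e3", "name": "Cai", "manager": "e1"},
    {"id": "e4", "name": "Dee", "manager": "e2"},
    {"id": "e5", "name": "Eli", "manager": "e4"},
]


def reports_under(employees, root_id):
    """Emulate a recursive CTE: an anchor row, then iterate the recursive step to a fixpoint."""
    anchor = {"id": root_id, "level": 0}
    result = [anchor]
    frontier = [anchor]
    seen = {root_id}  # cycle guard, so bad data cannot loop forever
    while frontier:
        level_of = {row["id"]: row["level"] for row in frontier}
        # Recursive step: direct reports of the rows produced in the previous iteration.
        next_rows = [
            {"id": e["id"], "level": level_of[e["manager"]] + 1}
            for e in employees
            if e["manager"] in level_of and e["id"] not in seen
        ]
        seen.update(row["id"] for row in next_rows)
        result.extend(next_rows)
        frontier = next_rows
    return result


for row in reports_under(employees, "e1"):
    print(row["id"], row["level"])
```

The `seen` set plays the role of a cycle guard: if two documents named each other as manager, the loop would still terminate.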

Check out the announcement video:

What Does Announcing Vector Search Mean to Couchbase Customers?

It’s been quite a year on Couchbase’s journey to add support for Generative Artificial Intelligence (GenAI) across our products. Like the rest of the world, we could see from the initial release of ChatGPT that GenAI was going to transform application development. We projected that almost every existing and future application would be examined to see whether GenAI could be applied to particular features. We even speculated that the scope and scale of this re-examination was akin to the Y2K problem twenty-five years ago (without the looming deadline).

If vector search is a new concept to you, watch our introduction video to get up to speed quickly. Continue reading below as we dive into the details of why we see vector search as a core application requirement.

We placed our bets on AI-powered adaptive applications

Last year, we called our shots on the following key principles for building AI-powered applications. Lara Greden from IDC captured it best.

JSON is the Data Format for AI

This principle rested on our understanding that AI-powered applications were going to deliver hyper-personalized and contextual experiences. We reasoned that JSON was the perfect format for dynamically updating the account profiles that drive personalization, without requiring major schema changes. In November, OpenAI announced JSON mode, which ensures a model responds with valid JSON.

It was also convenient for us that we are a high-performance, distributed JSON database whose customers happen to manage their dynamic account profiles in our system.

We also argued that JSON is a great format for persisting and serving the data variables that are programmatically assembled into prompts for AI interactions. We started by introducing Capella iQ, our GenAI-enabled coding assistant that helps developers build Couchbase-powered applications.
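A minimal sketch of assembling prompt variables from a JSON profile document, using only Python’s standard `json` module. The profile fields, variable names, and the `build_prompt` helper are hypothetical. Each prompt variable comes from a known path in the document, and recording that path makes it easier to trace a poor answer back to the variable that caused it.

```python
import json

# Hypothetical account profile, as it might be stored in a JSON document database.
profile_json = """
{
  "name": "Sam",
  "tier": "gold",
  "preferences": {"seat": "aisle", "meal": "vegetarian"}
}
"""


def build_prompt(profile: dict, question: str) -> tuple[str, dict]:
    """Assemble a prompt from profile fields and record where each variable came from."""
    variables = {
        "customer_name": ("name", profile["name"]),
        "loyalty_tier": ("tier", profile["tier"]),
        "seat_preference": ("preferences.seat", profile["preferences"]["seat"]),
    }
    context = "\n".join(f"{var}: {value}" for var, (_, value) in variables.items())
    prompt = f"Customer context:\n{context}\n\nQuestion: {question}"
    provenance = {var: f"profile.{path}" for var, (path, _) in variables.items()}
    return prompt, provenance


prompt, provenance = build_prompt(json.loads(profile_json), "Which seat should I book?")
print(prompt)
print(provenance["seat_preference"])  # profile.preferences.seat
```

Because the profile is schema-flexible JSON, adding a new personalization field means adding one entry to `variables`, with no schema migration.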

Our Challenge: Address Fears of GenAI in the Enterprise

When we started talking to analysts, prospects, and customers about their fears regarding GenAI, two concerns (aptly illustrated by Deloitte) jumped out at us immediately:

Oversharing data with language models

The first was the fear of getting in trouble for giving sensitive or proprietary data to a model that then trains itself on that knowledge. Many customers are worried about losing their competitive advantage if an LLM learns and remembers their secret-sauce data. We characterized this as “Oversharing your data with GenAI.” That’s worrisome, and it could have legal implications, too. Vector search helps solve this problem.
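A toy sketch of the retrieval step in RAG, in plain Python. The bag-of-words `toy_embed` function stands in for a real embedding model and, like `retrieve` and `rag_prompt`, is hypothetical. It illustrates the mechanism: private documents are searched at query time and only the most relevant passage is placed in the prompt, so the data is supplied as context for one request rather than handed over as training data.

```python
import math
import re

# Hypothetical vocabulary for a toy embedding; a real system would call an embedding model.
VOCAB = ["refund", "shipping", "delivery", "password", "reset"]


def toy_embed(text: str) -> list[float]:
    """Stand-in embedding: count of each vocabulary word in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(docs: list[str], query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = toy_embed(query)
    return sorted(docs, key=lambda d: cosine(q, toy_embed(d)), reverse=True)[:k]


def rag_prompt(docs: list[str], query: str, k: int = 1) -> str:
    """Ground the prompt in retrieved passages instead of relying on what the model memorized."""
    context = "\n".join(f"- {d}" for d in retrieve(docs, query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


private_docs = [
    "Refund requests are accepted within 30 days of delivery.",
    "Shipping takes 3 to 5 business days.",
    "To reset a password, open the account settings page.",
]

print(rag_prompt(private_docs, "How do I reset my password?"))
```

Only the passage about password resets reaches the prompt; the refund and shipping documents never leave the application for this request.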

AI models might be wrong or crazy

The second fear was that GenAI would hallucinate and return inaccurate or untruthful information. Databases prefer data to be valid, true, accurate, trustworthy, transactional, and so on. Databases have an unavoidable sense of right and wrong: down to the penny, or down to the bit. This realization opened a Pandora’s box of potential issues, including:

- Do you trust the data you feed to GenAI?

- What if you build GenAI prompts that use multiple data sources for different variables in the prompt? Do you trust that?

- What if the language model hallucinates, and you can’t figure out which prompt variable created the confusion?

- What if you intend to use your data warehouse, your operational systems, your real-time feeds, metadata catalogs, etc., as feeder systems for AI prompts and models?

AI Hates Data Complexity

We realized that considering the appropriate uses of GenAI would also force a re-examination of the complexity of your overall data architecture. If you’ve followed the data-design principles of the last decade, you know that you have a lot of databases, data movement, and APIs to examine.

Combining many purpose-built databases will become an obsolete practice, because the risk of feeding AI models inconsistent or incorrect data will be too high. Consolidation of data access patterns is inevitable. For the same reason, adding a purpose-built vector database is shortsighted.

This is why we declared that AI hates complexity.

Data Architecture Complexity Must Be Eliminated

It’s a good thing that Couchbase is an exceptional multipurpose database that can help you clean up your architecture, speed up your applications, and provide a path to adding AI to them. Even when your applications are mobile!

As of this release, Couchbase supports the following data access patterns: key/value, JSON, SQL, search (text, geo, vector), time series, graph, analytics, and event streaming, all in the same distributed platform. Each access service scales independently, so you can tune your application’s performance and use infrastructure resources efficiently to keep costs down. You can deploy the system as a fully managed service (Couchbase Capella), self-managed in the cloud, on-premises, at the edge, and on-device.

Complexity will shift to which and how many AI models to use

Complexity is going to shift from the data layer to the AI model layer as specialized models proliferate. It’s already happening. Tomorrow there will be thousands of purpose-built AI models available, and we will start using them in combination, just as we have combined databases in our data architectures.

AI-Powered Adaptive Applications Will Need Real-Time Analytics

In the fall, we announced Capella Columnar for real-time, large-scale aggregations, whose results you can write back into your application and even use in AI prompts. This resolves the age-old problem of writing an analytic insight back into the application without human intervention. It will matter when you build your AI-powered application, because you’re going to want real-time context such as “How much inventory is there right now?”
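As a plain-Python illustration of the write-back pattern (not Capella Columnar’s API), the sketch below aggregates hypothetical inventory events, writes the total into an operational product document, and then uses that field as real-time prompt context. The event shape, the `aggregate_inventory` helper, and the field names are assumptions.

```python
from collections import defaultdict

# Hypothetical inventory events; negative deltas represent units sold.
events = [
    {"sku": "A1", "store": "north", "delta": 40},
    {"sku": "A1", "store": "south", "delta": 25},
    {"sku": "A1", "store": "north", "delta": -10},
    {"sku": "B2", "store": "south", "delta": 5},
]


def aggregate_inventory(events: list[dict]) -> dict[str, int]:
    """Analytic step: total units on hand per SKU across all stores."""
    totals: dict[str, int] = defaultdict(int)
    for e in events:
        totals[e["sku"]] += e["delta"]
    return dict(totals)


# Write-back step: store the insight on the operational document the app already reads.
product_doc = {"sku": "A1", "name": "Trail shoe"}
product_doc["on_hand"] = aggregate_inventory(events)[product_doc["sku"]]

# The application (or a prompt builder) can now use the fresh value directly.
prompt_context = f"{product_doc['name']} units on hand right now: {product_doc['on_hand']}"
print(prompt_context)  # Trail shoe units on hand right now: 55
```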

Adaptive Applications Will Be Mobile

So, if you are going to build a GenAI-powered application, you’re going to want to offer it where your users happen to be looking: on their mobile devices. Data, including vectors, can be stored and queried locally on devices, and vector search runs there too. This is where your users live, where their technology interactions happen, and where your real-time context originates, including context like location, time of day, weather, news, and proximity to friends. Vectors will be stored, secured, and synchronized to devices so you can use similarity and hybrid search at the edge.

To our knowledge, vector search in an embeddable mobile database is a first-of-its-kind feature. The benefits will be huge: direct conversations with LLMs from the device without talking to the main server, better privacy, lower latency, offline availability, and happier users. All of these will be necessary in your future AI-powered adaptive application.

Customers who want to try the beta of vector search in Couchbase Lite can sign up here.

Your Data Architecture Must Be Fast, Available, and Everywhere

When you think about building your first adaptive application, remember that the old application rules still apply. Applications must be fast, run anywhere, be reliable, be scalable, and be available whenever the user wants them. There’s no compromising on your foundational requirements.

Summary

Couchbase announces vector search and opens a new era in application development. Today we announced that we support vector search across our entire product line, including vector similarity search on mobile devices. But it is much more than that. We have updated Couchbase Server to version 7.6, which supports graph relationship traversals, one-step upgrades from Couchstore to Magma, faster index rebalances, easier query execution, and faster failover in HA environments.

Couchbase is ready to help you build your adaptive applications. Are you ready to begin?

Enjoy the following resources

- Vector Search Press Release

- Download Couchbase Server 7.6

- Free Capella trial sign up

- What’s new in Capella and Server 7.6 or watch New Release video

- Read the Release Notes when available

- Learn more about Couchbase Vector Search

- Sign up: Couchbase Mobile Vector Search Beta

- More background on Vector Search, Vector Embeddings, and RAG

- Watch our new videos on Vector Search and Couchbase Lite Vector beta

- Register for: Couchbase Webcast on AI Services