Introduction

Couchbase Server 8.0 introduces a new Eventing function handler called OnDeploy that allows customers to run business logic during Eventing function deployment or resumption without requiring any external mutation to trigger it.

Previously, customers whose use cases required running logic before an Eventing function deployed or resumed had only a few options, such as:

- Manually perform the required setup on their own.

- Automate the setup via an external script before triggering the Eventing function deployment or resumption.

Both these methods are cumbersome and depend on external or manual intervention.

The “deployment” and “resumption” events in the Eventing function lifecycle mark the points at which the function is about to start processing mutations. This makes the OnDeploy handler well suited to logic that needs to perform any of the following activities:

- Perform pre-flight checks to ensure that the environment is configured correctly.

- Set up caches (e.g., look-up tables) to improve efficiency.

- Send, gather, and process data from different Couchbase and external services.

- “Self-trigger” the Eventing function after it deploys/resumes by modifying at least one document in its source keyspace.

  - This mutation will trigger its OnUpdate and/or OnDelete handler.

  - This is an advanced use case of OnDeploy because, traditionally, an Eventing function could be triggered only by changes made to its source keyspace by entities other than the Eventing function itself, or by timer expiry.

Rate Limiter

In this post, we will build a robust rate limiter using the token-bucket algorithm and Couchbase’s Eventing service. Along the way, you’ll get hands-on experience with the new OnDeploy handler and discover how Eventing simplifies integration with other Couchbase services.
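For readers new to the algorithm, here is a minimal in-memory JavaScript sketch of a token bucket. It is illustrative only and not part of the Eventing function; the class and parameter names are our own. The bucket holds at most `capacity` tokens, and each admitted request consumes one. Every elapsed `refillIntervalMs` adds `refillTokens` tokens. If `refillTokens` equals `capacity` and the interval is one hour, the whole bucket refills once per hour. The Eventing function below approximates that behavior by resetting per-user counters every hour.

```javascript
// Minimal in-memory token bucket (illustrative sketch, not Couchbase code).
// Time is passed in explicitly as milliseconds so the behavior is deterministic.
class TokenBucket {
  constructor(capacity, refillTokens, refillIntervalMs, nowMs = 0) {
    this.capacity = capacity;                 // maximum number of tokens
    this.tokens = capacity;                   // start with a full bucket
    this.refillTokens = refillTokens;         // tokens added per whole interval
    this.refillIntervalMs = refillIntervalMs;
    this.lastRefillMs = nowMs;                // start of the current interval
  }

  // Add tokens for every whole interval that has elapsed, capped at capacity.
  refill(nowMs) {
    const intervals = Math.floor((nowMs - this.lastRefillMs) / this.refillIntervalMs);
    if (intervals > 0) {
      this.tokens = Math.min(this.capacity, this.tokens + intervals * this.refillTokens);
      this.lastRefillMs += intervals * this.refillIntervalMs;
    }
  }

  // Returns true (and consumes a token) if the request is admitted.
  tryConsume(nowMs) {
    this.refill(nowMs);
    if (this.tokens > 0) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Example: 2 requests per hour, refilled in full every hour.
const HOUR_MS = 60 * 60 * 1000;
const bucket = new TokenBucket(2, 2, HOUR_MS, 0);
console.log(bucket.tryConsume(0), bucket.tryConsume(10), bucket.tryConsume(20)); // true true false
console.log(bucket.tryConsume(HOUR_MS)); // true
```

Continuous (per-millisecond) refill is the other common variant. The whole-interval refill shown here is closer to the hourly reset used in this post.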

High-Level Design

Multi-Dimensional Scaling

The 6-node cluster must have the following service-to-node mapping:


| S.No. | Node Number | Service(s) |
|---|---|---|
| 1. | 0 | Data |
| 2. | 1 | Data |
| 3. | 2 | Data |
| 4. | 3 | Eventing, Query |
| 5. | 4 | Eventing, Query |
| 6. | 5 | Indexing |

A few points to note about the cluster setup:

- We use 3 Data service nodes to ensure redundancy via data replication.

- We run the Eventing service on 2 nodes to increase the Eventing function parallelism.

  - This is in addition to having multiple workers for our Eventing function.

- CPU-intensive services like Data and Eventing must be kept on separate cluster nodes.

- We need the Query service because certain operations, such as deleting all documents in a keyspace, can be conveniently performed via the Query service.

- We need the Indexing service to create primary indices for the Ephemeral bucket.

Keyspaces

Our cluster must have the following keyspaces:


| S.No. | Bucket Name | Bucket Type | Scope | Collection | Description |
|---|---|---|---|---|---|
| 1 | default | Couchbase | _default | _default | – |
| | | | _system | _mobile | – |
| | | | _system | _query | – |
| 2 | rate-limiter | Ephemeral | _default | _default | – |
| | | | _system | _mobile | – |
| | | | _system | _query | – |
| | | | my-llm | limits | Store the document containing the tier-to-rate-limit mapping. |
| | | | my-llm | tracker | Store counter documents to keep track of individual users’ usage. |
| 3 | my-llm | Couchbase | _default | _default | – |
| | | | _system | _mobile | – |
| | | | _system | _query | – |
| | | | users | accounts | Store the user account details, including their “tier”. |
| | | | users | events | Store the user events that must be rate-limited based on the user’s “tier”. |

Note:

- The rate-limiter bucket is Ephemeral because we don’t need to persist its data. We use the data in that bucket to track per-user rate-limit usage and to cache the tier-to-rate-limit mapping.

External REST API Endpoints

The Eventing function interacts with external API endpoints that provide the following functionalities:

- Provide the latest tier-to-rate-limit mapping.

- Accept modifications to the tier-to-rate-limit mapping.

- Accept incoming requests that our Eventing function has determined to be within the user’s rate limit.

  - In this project, our endpoint maintains a count of these incoming requests. This count helps us verify whether our rate limiter application works as expected.

- Provide the number of incoming requests that our Eventing function has deemed to be within the user’s rate limit.

The OpenAPI specification of the above API endpoints can be found here.

Note: The Go program that hosts these REST API endpoints is linked in the Appendix.

Low-Level Design

Eventing Function Setup

Below is a list of all the changes we need to make to the Eventing function’s default settings.

Keyspaces


| S.No. | Field | Value |
|---|---|---|
| 1. | Function scope | default._default |
| 2. | Source Keyspace | my-llm.users.events |
| 3. | Eventing Storage Keyspace | default._default._default |

Settings


| S.No. | Field | Value |
|---|---|---|
| 1. | Name | my-llm-rate-limiter |
| 2. | Deployment Feed Boundary | From now |
| 3. | Description | This Eventing function acts as a rate limiter. |
| 4. | Workers | 10 |

Bucket Bindings


| S.No. | Bucket Alias | Bucket | Scope | Collection | Access |
|---|---|---|---|---|---|
| 1. | userAccounts | my-llm | users | accounts | Read-only |
| 2. | rateLimiter | rate-limiter | my-llm | tracker | Read & Write |
| 3. | tierLimits | rate-limiter | my-llm | limits | Read & Write |

URL Bindings


| S.No. | URL Alias | URL | Auth | Username | Password |
|---|---|---|---|---|---|
| 1. | llmEndpoint | http://localhost:3054/my-llm | Basic | Eventos | Eventing123 |
| 2. | tiersEndpoint | http://localhost:3054/tiers | | | |

Note: Both the “allow cookies” and “validate SSL certificate” options are disabled.

Complete Application Flow Diagram

This diagram shows how the Eventing function handlers, the external REST API endpoints, and the keyspaces interact to implement a rate limiter based on the token-bucket algorithm.

In the following sections, we will implement the rate limiter step by step.

OnDeploy Setup

Get and Store the Tiers From the External REST API Endpoint

When the OnDeploy handler starts executing, it must first get the tier-to-rate-limit mapping from the external REST API endpoint represented by the tiersEndpoint URL binding.

The response from the /tiers external REST API is a JSON object that maps each tier name (of type string) to its per-hour rate limit. That limit is the total number of requests allowed per hour (total_request_count, of type number).

We store the tier-to-rate-limit mapping in the document with ID limits in the rate-limiter.my-llm.limits keyspace.

```javascript
function OnDeploy(action) {
    // ...

    // GET the tiers from the `tiersEndpoint`
    const response = curl('GET', tiersEndpoint);
    if (response.status != 200) {
        throw new Error("Error(Cannot get tiers): " + JSON.stringify(response));
    }
    const tiers = response.body;
    log("Successfully retrieved the tiers: " + JSON.stringify(tiers));

    // Write the tiers to the `tierLimits` keyspace, in the document with ID `limits`
    tierLimits["limits"] = tiers;

    // ...

    // Create a timer to run every 24 hours to refresh the tiers
    let timeAfter24hours = new Date();
    timeAfter24hours.setDate(timeAfter24hours.getDate() + 1);
    log("Time after 24 hours is: " + timeAfter24hours);
    createTimer(updateTierCallback, timeAfter24hours, "tier-updater", {});

    // ...
}

// Function to update the user tiers every 24 hours
function updateTierCallback(context) {
    log('From updateTierCallback: timer fired');

    // GET the tiers from the `tiersEndpoint`
    const response = curl('GET', tiersEndpoint);
    if (response.status != 200) {
        log("Error(Cannot get tiers): " + JSON.stringify(response));
    } else {
        const tiers = response.body;
        log("Successfully retrieved the tiers: " + JSON.stringify(tiers));

        // Write the tiers to the `tierLimits` keyspace, in the document with ID `limits`
        tierLimits["limits"] = tiers;
    }

    // Create a timer to run every 24 hours to refresh the tiers
    let timeAfter24hours = new Date();
    timeAfter24hours.setDate(timeAfter24hours.getDate() + 1);
    log("Time after 24 hours is: " + timeAfter24hours);
    createTimer(updateTierCallback, timeAfter24hours, "tier-updater", {});
}
```
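Both the handler and the timer callback above store response.body as-is. You may want a defensive check before overwriting the limits document. One option is a small validation helper like the hypothetical `isValidTierMapping` below. It is plain JavaScript with no Eventing dependencies. It assumes, as described above, that the body maps tier names to numeric per-hour limits. Treating 0 as a valid limit (a tier that may make no requests) is our own design choice.

```javascript
// Hypothetical helper: returns true if `body` looks like a tier-to-rate-limit
// mapping, i.e. a non-empty plain object whose values are non-negative integers.
function isValidTierMapping(body) {
  if (body === null || typeof body !== "object" || Array.isArray(body)) {
    return false;
  }
  const limits = Object.values(body);
  return limits.length > 0 &&
    limits.every((limit) => Number.isInteger(limit) && limit >= 0);
}

// Example usage with made-up tier names:
console.log(isValidTierMapping({ free: 10, pro: 100 })); // true
console.log(isValidTierMapping({ free: "10" }));         // false
console.log(isValidTierMapping([]));                     // false
```

Inside updateTierCallback, such a check could guard the `tierLimits["limits"] = tiers;` assignment. A malformed response would then leave the previous mapping in place rather than replacing it.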

Reset All Rate Limit Trackers When Deploying


In our application, we model Eventing function undeployment as a hard shutdown. Hence, during deployment, we delete all documents that track users’ rate-limit usage. We model pausing as a temporary suspension of rate-limiting activities. Hence, we do not clear those documents when the Eventing function resumes.

Notice how we treat deployment and resumption differently? OnDeploy makes such use cases possible because Eventing passes an action object to the OnDeploy handler. Its reason field specifies whether the Eventing function is deploying or resuming.

```javascript
function OnDeploy(action) {
    // ...

    // If we are deploying, delete all the existing documents in the `rateLimiter` keyspace
    if (action.reason === "deploy") {
        let results = N1QL("DELETE FROM `rate-limiter`.`my-llm`.tracker");
        results.close();
        log("Deleted all the documents in the `rate-limiter`.`my-llm`.tracker keyspace as we are deploying!");
    }

    // ...
}
```
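The branch on action.reason can be modeled outside Eventing to make the intended behavior explicit. This is a hypothetical Node.js sketch. A Map stands in for the `rate-limiter`.`my-llm`.tracker keyspace, and `onDeployTrackerStep` is our own name. The real handler issues the SQL++ DELETE shown above instead of calling `clear()`. We also assume "resume" is the reason value passed on resumption.

```javascript
// Hypothetical model of the deploy/resume branch in OnDeploy.
// `trackerStore` is a Map standing in for the tracker keyspace.
function onDeployTrackerStep(action, trackerStore) {
  if (action.reason === "deploy") {
    // Fresh deployment: treat it as a hard restart and drop all usage counters.
    trackerStore.clear();
    return "cleared";
  }
  // Any other reason (assumed "resume" after a pause): keep the existing counters.
  return "kept";
}

const tracker = new Map([["user-1", { count: 3 }]]);
console.log(onDeployTrackerStep({ reason: "resume" }, tracker), tracker.size); // kept 1
console.log(onDeployTrackerStep({ reason: "deploy" }, tracker), tracker.size); // cleared 0
```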

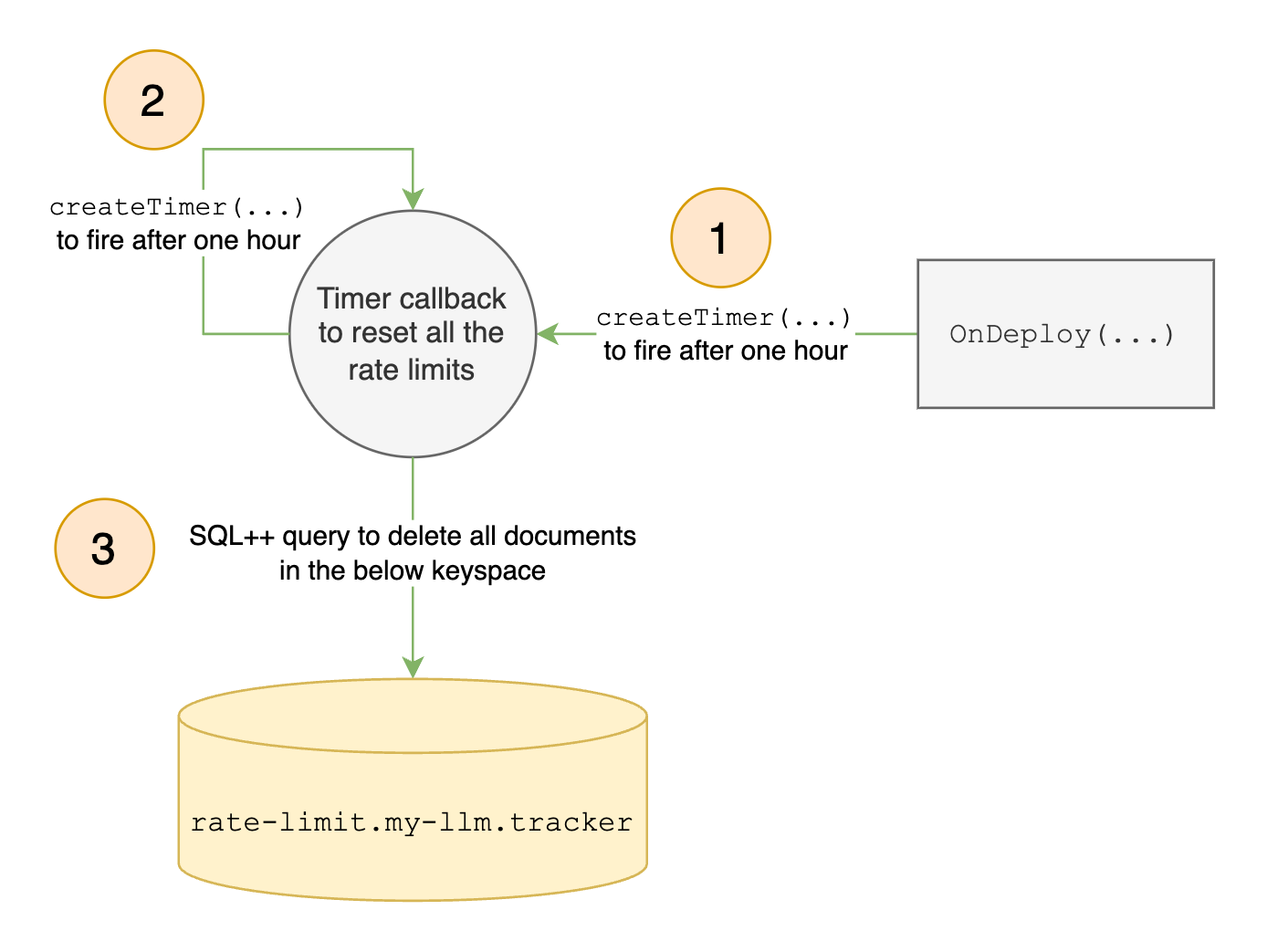

Reset the Users’ Rate Limits Every Hour

Given that we are implementing a token-bucket algorithm, we reset the users’ rate limits every hour using timers, an Eventing feature that is critical for our use case. We create the first timer in the OnDeploy handler to fire after one hour. When that timer fires, its callback creates a new timer to fire one hour later, and so on. The result is a self-recurring timer that fires every hour as long as the Eventing function is deployed.

Observe that this timer did not require any external mutation to trigger the Eventing function to create it. This was all done during deployment/resumption in the OnDeploy handler.

```javascript
function OnDeploy(action) {
    // ...

    // Create a timer to run every 1 hour to reset user rate limits
    let timeAfter1Hour = new Date();
    timeAfter1Hour.setHours(timeAfter1Hour.getHours() + 1);
    log("Time after 1 hour is: " + timeAfter1Hour);
    createTimer(resetRateLimiter, timeAfter1Hour, "rate-limit-resetter", {});

    // ...
}

// Function to reset the rate limits for all users every 1 hour
function resetRateLimiter(context) {
    log('From resetRateLimiter: timer fired');
    let results = N1QL("DELETE FROM `rate-limiter`.`my-llm`.tracker");
    results.close();

    // Create a timer to run every 1 hour to reset user rate limits
    let timeAfter1Hour = new Date();
    timeAfter1Hour.setHours(timeAfter1Hour.getHours() + 1);
    log("Time after 1 hour is: " + timeAfter1Hour);
    createTimer(resetRateLimiter, timeAfter1Hour, "rate-limit-resetter", {});
}
```
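To see how the self-recurring chain unfolds, here is a hypothetical, Node-runnable simulation. `makeFakeTimerService` is an in-memory stand-in invented for this sketch, not the Eventing timer API. Its `createTimer` just records when a callback should fire, and `runUntil` fires due callbacks in time order. As in the handler above, each firing of `resetRateLimiter` schedules the next one an hour later. There is one simplification: the sketch computes the next time from the scheduled time, whereas the real callback uses `new Date()` when it runs. Real timers may fire somewhat after their scheduled time, so the real chain can drift slightly later.

```javascript
// Hypothetical in-memory stand-in for a timer service, used only to illustrate
// the self-recurring timer pattern. It is NOT the Eventing createTimer API.
const HOUR_MS = 60 * 60 * 1000;

function makeFakeTimerService() {
  const pending = []; // entries: { fireAtMs, callback, context }
  return {
    createTimer(callback, fireAtMs, context) {
      pending.push({ fireAtMs, callback, context });
      pending.sort((a, b) => a.fireAtMs - b.fireAtMs);
    },
    // Fire every timer due at or before `untilMs`, earliest first. Callbacks
    // may schedule new timers, which are fired too if they are also due.
    runUntil(untilMs) {
      while (pending.length > 0 && pending[0].fireAtMs <= untilMs) {
        const timer = pending.shift();
        timer.callback(timer.context, timer.fireAtMs);
      }
    },
  };
}

// Simulates deploying at `deployMs` and returns the times at which the
// hourly reset would have fired up to `untilMs`.
function simulateHourlyResets(deployMs, untilMs) {
  const timers = makeFakeTimerService();
  const firedAt = [];

  function resetRateLimiter(context, nowMs) {
    firedAt.push(nowMs); // the real callback runs the SQL++ DELETE here
    timers.createTimer(resetRateLimiter, nowMs + HOUR_MS, context);
  }

  // What OnDeploy does: schedule the first reset one hour after deployment.
  timers.createTimer(resetRateLimiter, deployMs + HOUR_MS, {});
  timers.runUntil(untilMs);
  return firedAt;
}

console.log(simulateHourlyResets(0, 3 * HOUR_MS)); // [ 3600000, 7200000, 10800000 ]
```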

Refresh Tier Rate Limits Daily

We model our application to allow rate limit changes every 24 hours; hence, our Eventing function must pull the latest tier-to-rate-limit mapping from the external REST API endpoint every 24 hours to ensure the correct rate limits are applied to our users.

Again, we use self-recurring timers to get the latest tier-to-rate-limit mapping every 24 hours.

```javascript
function OnDeploy(action) {
    // ...

    // Create a timer to run every 24 hours to refresh the tiers
    let timeAfter24hours = new Date();
    timeAfter24hours.setDate(timeAfter24hours.getDate() + 1);
    log("Time after 24 hours is: " + timeAfter24hours);
    createTimer(updateTierCallback, timeAfter24hours, "tier-updater", {});

    // ...
}

// Function to update the user tiers every 24 hours
function updateTierCallback(context) {
    log('From updateTierCallback: timer fired');

    // GET the tiers from the `tiersEndpoint`
    const response = curl('GET', tiersEndpoint);
    if (response.status != 200) {
        log("Error(Cannot get tiers): " + JSON.stringify(response));
    } else {
        const tiers = response.body;
        log("Successfully retrieved the tiers: " + JSON.stringify(tiers));

        // Write the tiers to the `tierLimits` keyspace, in the document with ID `limits`
        tierLimits["limits"] = tiers;
    }

    // Create a timer to run every 24 hours to refresh the tiers
    let timeAfter24hours = new Date();
    timeAfter24hours.setDate(timeAfter24hours.getDate() + 1);
    log("Time after 24 hours is: " + timeAfter24hours);
    createTimer(updateTierCallback, timeAfter24hours, "tier-updater", {});
}
```
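One subtlety in the timer code above: `setDate(getDate() + 1)` advances the date by one calendar day in the JavaScript runtime's local time zone. Across a daylight-saving change, that is 23 or 25 hours rather than 24. If an exact interval matters, one alternative is to add milliseconds to the timestamp. The sketch below is plain JavaScript; `addMs`, `addOneLocalDay`, and the constants are our own names.

```javascript
const HOUR_MS = 60 * 60 * 1000;
const DAY_MS = 24 * HOUR_MS;

// Returns a new Date exactly `ms` milliseconds after `date`. Because it works
// on the epoch timestamp, the result does not depend on time zone or DST.
function addMs(date, ms) {
  return new Date(date.getTime() + ms);
}

// Mirrors the approach in the timer code above: same wall-clock time on the
// next calendar day, in the runtime's local time zone.
function addOneLocalDay(date) {
  const next = new Date(date.getTime());
  next.setDate(next.getDate() + 1);
  return next;
}

const start = new Date(Date.UTC(2024, 0, 15, 12, 0, 0));
console.log(addMs(start, HOUR_MS).toISOString()); // 2024-01-15T13:00:00.000Z
console.log(addMs(start, DAY_MS).toISOString());  // 2024-01-16T12:00:00.000Z
```

If a helper like `addMs` were defined inside the Eventing function, `createTimer(updateTierCallback, addMs(new Date(), DAY_MS), "tier-updater", {})` would schedule the refresh exactly 24 hours ahead.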

OnUpdate Setup

Handling User Events

Our application will listen to incoming request documents from the my-llm.users.events keyspace. These documents have a unique ID and contain data in the format:

```
{
    "user_id": String,
    "respond_to": String,
    "payload": String,
    "header": String
}
```
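Concretely, an event document might look like the hypothetical example below (all values are made up). The OnUpdate handler later removes user_id with `delete doc.user_id` before POSTing the rest. A non-mutating alternative uses object rest destructuring to build the forwarded payload without modifying the original object. It is sketched here in plain JavaScript, and the function name is our own.

```javascript
// Hypothetical event document from my-llm.users.events (values are made up).
const sampleEvent = {
  user_id: "user-42",
  respond_to: "https://example.com/callback",
  payload: "Summarize this paragraph.",
  header: "demo-header",
};

// Returns a shallow copy of the event without `user_id`, i.e. the fields that
// are forwarded to the protected endpoint. The input object is left unchanged.
function toForwardedPayload(eventDoc) {
  const { user_id, ...forwarded } = eventDoc;
  return forwarded;
}

console.log(JSON.stringify(toForwardedPayload(sampleEvent)));
// {"respond_to":"https://example.com/callback","payload":"Summarize this paragraph.","header":"demo-header"}
console.log(sampleEvent.user_id); // user-42
```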

If the user’s request is within their rate limit, all the data in the document except the user_id will be sent to the endpoint protected by our rate limiter.

Reading the User’s Tier

When the OnUpdate handler is triggered by an incoming user event document from the previous step, we extract the user_id field from it.

Using the user_id field, we retrieve the user’s account details document from the my-llm.users.accounts keyspace and extract the value of its tier field.

```javascript
function OnUpdate(doc, meta, xattrs) {
    // ...

    const user_id = doc.user_id;
    let done = false;
    while (!done) {
        // Get the tier of the `user_id`
        let userAccountsMeta = { "id": user_id };
        let userAccountsResult = couchbase.get(userAccounts, userAccountsMeta, { "cache": true });
        if (!userAccountsResult.success) {
            throw new Error("Error(Unable to get the user's details): " + JSON.stringify(userAccountsResult));
        }
        const tier = userAccountsResult.doc.tier;

        // ...
    }

    // ...
}
```

Reading the Tier’s Rate Limits

We get the rate limit for the user’s tier from the document containing the tier-to-rate-limit mapping, located in the rate-limiter.my-llm.limits keyspace.

```javascript
function OnUpdate(doc, meta, xattrs) {
    // ...
    while (!done) {
        // ...

        // Get the rate limit for the tier
        let tierLimitsMeta = { "id": "limits" };
        let tierLimitsResult = couchbase.get(tierLimits, tierLimitsMeta, { "cache": true });
        if (!tierLimitsResult.success) {
            throw new Error("Error(Unable to get the tier limits): " + JSON.stringify(tierLimitsResult));
        }
        const rateLimit = tierLimitsResult.doc[tier];

        // ...
    }
    // ...
}
```
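One edge case is worth guarding against: a user's tier may be missing from the limits document. In that case, tierLimitsResult.doc[tier] is undefined. In JavaScript, a comparison such as `0 >= undefined` is false, so the limit check in the next step would never block that user. The following hypothetical, Node-runnable sketch combines the two lookups above with an explicit check. A Map and a plain object stand in for the userAccounts and tierLimits bindings, and `lookupRateLimit` is our own name.

```javascript
// Hypothetical stand-in for the two lookups: `accounts` plays the role of the
// userAccounts binding and `limitsDoc` the role of the `limits` document.
function lookupRateLimit(accounts, limitsDoc, userId) {
  const account = accounts.get(userId);
  if (account === undefined) {
    throw new Error("Unable to get the user's details: " + userId);
  }
  const rateLimit = limitsDoc[account.tier];
  // Reject unknown tiers explicitly: `count >= undefined` is always false,
  // so an undefined limit would silently disable rate limiting for this user.
  if (typeof rateLimit !== "number") {
    throw new Error("No rate limit configured for tier: " + account.tier);
  }
  return rateLimit;
}

const accounts = new Map([
  ["user-1", { tier: "free" }],
  ["user-2", { tier: "gold" }], // "gold" is not in the limits document below
]);
const limitsDoc = { free: 10, pro: 100 };

console.log(lookupRateLimit(accounts, limitsDoc, "user-1")); // 10
console.log(0 >= undefined); // false
```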

Decide Whether to Rate Limit the Request & Update the User’s Rate Limit Usage

Given the user’s rate limit, we now check their current usage to decide whether they can make a request. The rate limiter tracks each user’s usage with a counter document in the rate-limiter.my-llm.tracker keyspace. We create this counter document on demand for each user_id. It stores that user’s request count for the current window, until the hourly reset refills the token bucket. If a user’s usage meets or exceeds their tier’s limit, we block the request. Otherwise, we increment the count in the user’s counter document and then forward the request to the protected endpoint.

```javascript
function OnUpdate(doc, meta, xattrs) {
    // ...
    while (!done) {
        // ...

        // Try to get the rate limit count for the `user_id`
        const userIDMeta = { "id": user_id };
        // `let`, not `const`: `result` is reassigned below if the counter document is missing
        let result = couchbase.get(rateLimiter, userIDMeta);

        // If the rate limit count for the `user_id` does not exist, try to create it.
        while (!result.success) {
            couchbase.insert(rateLimiter, userIDMeta, { "count": 0 });
            result = couchbase.get(rateLimiter, userIDMeta);
        }

        // Assign the counter document's `count` and `meta` to local variables for convenience
        const counterDocCount = result.doc.count;
        const counterDocMeta = result.meta;

        // Check if the counter has hit the rate limit.
        // We use >= instead of == to handle the edge case where the tier limits have been reduced
        // but the tier tracker documents have not yet been deleted.
        if (counterDocCount >= rateLimit) {
            log("User with ID '" + user_id + "' hit their rate limit of " + rateLimit + "!");
            done = true;
            continue;
        }

        // Update the count in the document
        let res = couchbase.mutateIn(rateLimiter, counterDocMeta, [
            couchbase.MutateInSpec.replace("count", counterDocCount + 1),
        ]);

        // ...
    }
    // ...
}
```
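The loop above follows an optimistic-concurrency pattern. If result.meta carries the counter document's CAS value, as returned by couchbase.get, the mutateIn call succeeds only when no other worker changed the counter in between. Otherwise, the surrounding `while (!done)` loop retries. Below is a hypothetical in-memory model of that pattern in plain JavaScript. `FakeTracker` and `tryAdmit` are our own names, and the integer `cas` field only imitates Couchbase's CAS; this is not the Couchbase SDK or Eventing API.

```javascript
// Hypothetical in-memory model of the tracker keyspace. Each entry carries an
// integer `cas` that changes on every write, imitating Couchbase's CAS check.
class FakeTracker {
  constructor() {
    this.docs = new Map(); // userId -> { count, cas }
  }

  get(id) {
    const entry = this.docs.get(id);
    return entry
      ? { success: true, doc: { count: entry.count }, cas: entry.cas }
      : { success: false };
  }

  // Fails if the document already exists (e.g. another worker created it).
  insert(id, doc) {
    if (this.docs.has(id)) return { success: false };
    this.docs.set(id, { count: doc.count, cas: 1 });
    return { success: true };
  }

  // Replaces `count` only if the caller's CAS still matches the stored one.
  replaceCount(id, cas, newCount) {
    const entry = this.docs.get(id);
    if (!entry || entry.cas !== cas) return { success: false };
    this.docs.set(id, { count: newCount, cas: cas + 1 });
    return { success: true };
  }
}

// Returns true if the request is admitted (and counted), false if the user
// is already at or over `rateLimit`. Retries when the CAS check fails.
function tryAdmit(tracker, userId, rateLimit) {
  for (;;) {
    let result = tracker.get(userId);
    while (!result.success) {
      tracker.insert(userId, { count: 0 });
      result = tracker.get(userId);
    }
    if (result.doc.count >= rateLimit) return false;
    const res = tracker.replaceCount(userId, result.cas, result.doc.count + 1);
    if (res.success) return true;
    // CAS mismatch: someone else updated the counter first, so re-read and retry.
  }
}

const tracker = new FakeTracker();
console.log(tryAdmit(tracker, "user-1", 2), tryAdmit(tracker, "user-1", 2), tryAdmit(tracker, "user-1", 2));
// true true false
```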

Send the “within the limit” Request to the Desired Endpoint

User requests that are within their tier’s rate limit are sent to the REST API endpoint protected by our rate limiter.

```javascript
function OnUpdate(doc, meta, xattrs) {
    // ...
    while (!done) {
        // ...

        done = res.success;
        if (done) {
            // POST the request to the `llmEndpoint`, forwarding every field except `user_id`.
            // The Eventing curl() request object carries the payload in its `body` field.
            delete doc.user_id;
            const response = curl('POST', llmEndpoint, { body: doc });
            if (response.status != 200) {
                throw new Error("Error(MyLLM endpoint is not working): " + response.status);
            }
        }
    }
    // ...
}
```

Testing Our Application

Now that we have implemented our rate limiter, we can create the environment to run and test it:

- Run the Go program to load a sample set of 100 users.

- Run the Go program to start the HTTP server that provides the external REST APIs that our Eventing function interacts with.

- Deploy the Eventing function.

- To trigger the Eventing function, run the Go program to load user event documents into its source keyspace, i.e., my-llm.users.events.

- To get the number of user requests that reached the external REST API endpoint protected by our rate limiter, send a GET request to the /my-llm endpoint.
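To judge whether the count returned by GET /my-llm is plausible, it helps to compute what it should be. The hypothetical helper below is plain JavaScript with our own name and made-up data. It assumes that all test events arrive within a single hourly window that starts with an empty tracker, and that every user's tier exists in the limits document. It also assumes that every forwarded request succeeds. Under those assumptions, each user contributes at most their tier's limit.

```javascript
// Hypothetical helper: expected number of requests that reach the protected
// endpoint, assuming a single hourly window starting from empty counters.
// eventsPerUser: { userId: number of events }
// userTiers:     { userId: tier name }
// tierLimits:    { tier name: per-hour request limit }
function expectedForwardedCount(eventsPerUser, userTiers, tierLimits) {
  let total = 0;
  for (const [userId, events] of Object.entries(eventsPerUser)) {
    const limit = tierLimits[userTiers[userId]];
    total += Math.min(events, limit);
  }
  return total;
}

// Made-up example: "alice" sends 3 events on a 2-per-hour tier,
// "bob" sends 4 events on a 5-per-hour tier.
console.log(expectedForwardedCount(
  { alice: 3, bob: 4 },
  { alice: "free", bob: "pro" },
  { free: 2, pro: 5 },
)); // 6
```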

Conclusion

This post showed how to use the new Couchbase Eventing handler, OnDeploy, to build a token-bucket rate limiter – highlighting the power and flexibility of Couchbase Eventing for developing integrated, standalone solutions.

More broadly, it demonstrates a shift in application development: building applications from the database itself. This enables tailored solutions to diverse requirements – all within the Couchbase platform.

Appendix

Complete Eventing code: Click Here

Server Go Code: Click Here

Client Go Code: Click Here

User Loader Go Code: Click Here