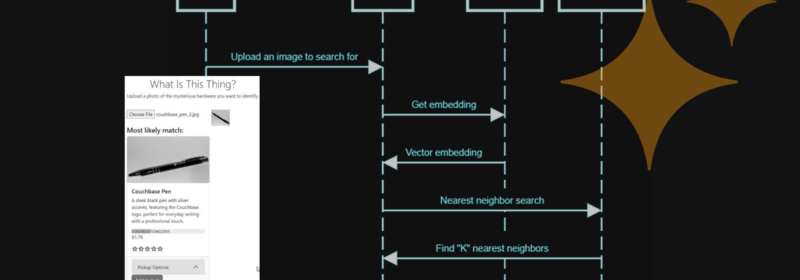

Check out the blogs that fall under the category Vector Search. Learn more about vector search best practices, interacting with LLMs, and more.

Category: Vector Search

Extending RAG capabilities to Excel with Couchbase, LlamaIndex, and Amazon Bedrock

Extend Retrieval Augmented Generation (RAG) capabilities to Excel using Couchbase, LlamaIndex, and Amazon Bedrock. Make spreadsheets searchable.

Chat With Your Git History, Thanks to RAG and Couchbase Shell

Turn your Git history into a chat-ready knowledge base using RAG and Couchbase Shell.

DeepSeek Models Now Available in Capella AI Services

DeepSeek-R1 is now in Capella AI Services! Unlock advanced reasoning for enterprise AI at lower TCO. 🚀 Sign up for early access!

Integrate Groq’s Fast LLM Inferencing With Couchbase Vector Search

Integrate Groq’s fast LLM inference with Couchbase Vector Search for efficient RAG apps. Compare its speed with OpenAI, Gemini, and Ollama.

2025 Enterprise AI Predictions: Four Prominent Shifts Reshaping Infrastructure and Strategy

Discover four key AI predictions for 2025 that will shape enterprise strategy, including the rise of hybrid AI models and evolving data architectures. Read more!

What is Semantic Search? The Definitive Guide

Learn how semantic search delivers relevant results by understanding user intent, context, and relationships between words in this comprehensive guide.

Introducing Couchbase as a Vector Store in MindsDB

Combine Couchbase and MindsDB to unlock AI-driven applications with high-performance vector storage and seamless integration.

Top Posts

- Data Modeling Explained: Conceptual, Physical, Logical

- Six Types of Data Models (With Examples)

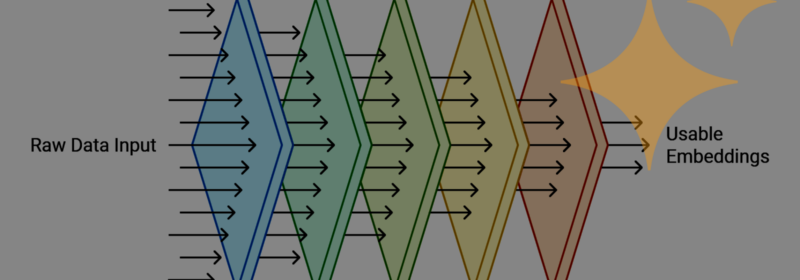

- What are Embedding Models? An Overview

- Application Development Life Cycle (Phases and Management Models)

- What Is Data Analysis? Types, Methods, and Tools for Research

- Capella AI Services: Build Enterprise-Grade Agents

- Data Analysis Methods: Qualitative vs. Quantitative Techniques

- 5 Reasons SQLite Is the WRONG Database for Edge AI

- Build a Rate Limiter With Couchbase Eventing