LangChain overview

LangChain is an open-source framework that gives developers the building blocks for applications driven by large language models (LLMs). It handles much of the plumbing involved in working with LLMs, such as formatting prompts, calling models, and retrieving data. This makes it easier to build applications like chatbots, content generators, and automated text processors.

LangChain offers a robust and flexible framework that makes integrating and deploying large language models significantly easier for application developers. It is especially useful for developers seeking to harness LLM capabilities without grappling with the intricacies of model management and data processing.

This guide will cover LangChain’s workings, key components and features, real-world use cases, and its benefits. We’ll also provide instructions on getting started with LangChain, discuss its integration with Couchbase, and summarize key takeaways with additional resources. Finally, we’ll address frequently asked questions to give you a comprehensive understanding of LangChain.

- How does LangChain work?

- Components of LangChain

- LangChain features

- LangChain use cases

- LangChain benefits

- How to get started with LangChain

- Couchbase LangChain integration

- Key takeaways and additional resources

- FAQ

How does LangChain work?

LangChain simplifies the process of working with large language models by providing a user-friendly framework for developers. For instance, a developer building an e-commerce recommendation system can use LangChain to integrate various large language models like OpenAI’s GPT-4 and Anthropic’s Claude.

Using LangChain’s connectors, the recommendation system can seamlessly access data from multiple sources, such as:

- Document databases: Connect to Couchbase or MongoDB to fetch product catalog and user activity data.

- Relational databases: Integrate with SQL databases like MySQL or PostgreSQL for order history and transaction records.

- APIs: Pull data from e-commerce platforms like Shopify or WooCommerce for real-time inventory and sales data.

- Cloud storage: Access user-generated content stored in cloud services like AWS S3 or Google Cloud Storage for personalized recommendations.

- Hybrid search: Utilize Elasticsearch or Solr for a hybrid search approach that combines keyword search with vector-based semantic search to enhance the accuracy and relevance of search results.
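Vector stores and search engines that support hybrid search usually merge keyword and vector results on the server side, and the exact method varies by product. One common approach is reciprocal rank fusion (RRF). LangChain's EnsembleRetriever, for instance, combines retrievers with a weighted variant of it. The following pure-Python sketch shows the idea. The product IDs and the constant k = 60 are illustrative assumptions, not values taken from any particular integration.

```python
def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked result lists into one using reciprocal rank fusion.

    Each document scores 1 / (k + rank) for every list it appears in (ranks
    start at 1), so documents that rank well in several lists rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores, key=scores.__getitem__, reverse=True)


# Hypothetical results for a query such as "waterproof trail shoes"
keyword_hits = ["sku-42", "sku-7", "sku-13"]  # e.g., from a keyword (BM25) search
vector_hits = ["sku-7", "sku-99", "sku-42"]   # e.g., from an embedding similarity search

print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['sku-7', 'sku-42', 'sku-99', 'sku-13']
```

Here sku-7 comes out first because it ranks near the top of both lists, even though the keyword search alone put sku-42 first.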

With these connectors in place, the recommendation system can pull relevant data from each source and use it to produce personalized, accurate product suggestions.

Moreover, LangChain exposes models from different providers through a common interface. This lets the developer use GPT-4 for generating natural language recommendations and Claude for analyzing user behavior patterns. By combining the strengths of each model with hybrid search, the developer can make the system’s product suggestions more relevant and timely, improving the shopping experience and potentially boosting sales.

Components of LangChain

LangChain’s architecture comprises several essential components that facilitate the development of LLM applications. By leveraging these components, LangChain simplifies the process of building and deploying advanced LLM-based applications:

- Data connectors: These enable integration with various data sources, ensuring smooth data ingestion and processing from databases, APIs, and cloud storage.

- Model integration: LangChain supports many LLMs, including proprietary models such as GPT-4 and Claude and open-source models such as those hosted on Hugging Face, allowing developers to choose the best fit for their needs.

- Processing pipelines: These tools help create and manage workflows for tasks such as loading, cleaning, splitting, and transforming data. They also chain prompts, models, and output parsers into multi-step processes.

- Deployment modules: LangChain offers tools that simplify moving applications into production, which makes scaling and maintenance easier. LangServe, for example, can expose a chain as a REST API.

- Monitoring and logging: LangChain’s callback system reports each step of a run as it happens. LangSmith, the LangChain team’s companion platform, records traces that give insight into application performance and help keep operations smooth and efficient.

LangChain features

LangChain is equipped with various features designed to make the development and deployment of LLM applications seamless and efficient. It supports multiple language models, providing the flexibility to choose the most suitable one for your project. The platform’s flexible data integration capabilities enable easy connection to diverse data sources, ensuring smooth data flow within your applications.

LangChain’s robust pipeline management tools facilitate the creation and management of complex data processing workflows, ensuring efficient handling of tasks like data cleaning and transformation.

LangChain Expression Language (LCEL) makes it easy to compose different components and chains together. As the LangChain documentation puts it: “LCEL was designed from day 1 to support putting prototypes in production, with no code changes, from the simplest ‘prompt + LLM’ chain to the most complex chains (we’ve seen folks successfully run LCEL chains with 100s of steps in production).”

Automated deployment features simplify bringing applications to production, making scaling and maintenance easier. The platform is also designed for scalability, allowing applications to handle increasing volumes of data and user interactions. Real-time monitoring tools offer valuable insights into application performance, helping optimize and maintain efficiency.

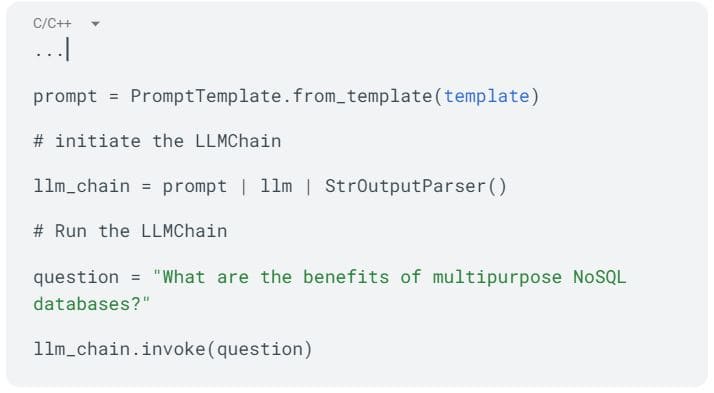

LangChain code example

To illustrate the simplicity of the framework, here’s a short code snippet that shows how a pipeline in LangChain chains different stages together:

LangChain use cases

LangChain’s versatility makes it applicable to a variety of scenarios. It is particularly effective for creating chatbots and conversational agents capable of understanding and responding to user queries in natural language, thus enhancing customer interaction and support.

Other notable use cases include:

- Content generation: Automate the creation of high-quality content for blogs, articles, and marketing materials, significantly reducing the time and effort required for content production.

- Sentiment analysis: Analyze text data to gauge customer sentiment and inform business decisions.

- Document summarization: Quickly extract key information from large documents, enabling efficient information retrieval.

- Language translation: Develop applications that translate text between different languages in real time, ideal for creating multilingual support systems.

- Customer support automation: Create systems that handle customer queries and support tickets automatically, improving response times and customer satisfaction.

LangChain benefits

Adopting LangChain offers numerous benefits that make it an attractive choice for developers and organizations. One of the primary advantages is simplified development; LangChain abstracts the complexities of working with large language models, making development faster and easier. This leads to cost efficiency, as it reduces the time and resources needed to build and maintain LLM applications.

LangChain also offers enhanced flexibility, supporting a wide range of models and data sources, which allows for the creation of tailored solutions to meet specific needs. The platform’s scalability enables applications to handle increasing volumes of data and user interactions, ensuring they can grow with your business requirements. Built-in tools for monitoring and optimization ensure that your applications run efficiently, leading to improved performance.

Additionally, LangChain’s streamlined development and deployment processes facilitate quicker delivery of applications, providing a competitive edge.

How to get started with LangChain

To get started, install LangChain (for example, with pip install langchain) along with the integration package for your model provider, such as langchain-openai, so you are ready to write some code. Then refer to the LangChain documentation for detailed guides and examples. It covers topics including setting up language models, connecting to data sources, and constructing pipelines.

Start by exploring the basic examples to understand how to create a simple pipeline. The docs also provide API references and advanced usage scenarios, helping you leverage the full power of LangChain for your specific needs. With the SDK and documentation, you can quickly build and deploy scalable AI applications.

Couchbase LangChain integration

LangChain integrates with Couchbase, a high-performance NoSQL database, through the langchain-couchbase package, which lets Couchbase serve as a vector store for LangChain applications. This integration allows developers to use Couchbase’s data management capabilities to store and manage data efficiently, which LangChain applications can then access and process.

Combining LangChain with Couchbase is especially beneficial for applications requiring fast data retrieval and real-time processing, such as chatbots and recommendation systems. Couchbase’s scalable data management allows for efficient handling of large datasets, while real-time data access ensures that data can be retrieved and processed quickly for responsive applications. This integration also contributes to enhanced performance, with Couchbase’s high throughput and low latency improving the overall efficiency of your applications.

Key takeaways and additional resources

In summary, LangChain is a versatile and powerful platform that simplifies the development of applications using large language models. Its integrated environment and robust features make it an ideal choice for developers seeking to build scalable and efficient solutions. LangChain’s tools for data connectivity, model integration, and deployment streamline the development process, allowing for quick and easy application creation.

To get started with LangChain, install the library and explore the official documentation and tutorials. For additional resources, consider the following links:

These resources provide valuable information and support as you begin working with LangChain. To learn more about concepts related to LLMs and AI, you can visit our blog and concepts hub.

FAQ

What is the purpose of LangChain?

LangChain provides a cohesive and flexible framework to simplify the development of applications that use large language models.

What is LangChain used for?

LangChain is used to develop applications such as chatbots, content generation tools, sentiment analysis systems, and more, all leveraging the power of large language models.

What is a LangChain agent?

A LangChain agent uses a language model to decide which actions to take, and in what order, to achieve a given goal. These actions include querying data, processing text, or interacting with other services through tools.

What is the difference between LangChain and LlamaIndex?

LangChain focuses on providing a comprehensive platform for integrating and deploying large language models, whereas LlamaIndex is more focused on indexing and searching large-scale text data.