This is the second post in a multi-part series exploring composite vector indexing in Couchbase. Check out the first post here.

The series will cover:

- Why composite vector indexes matter, including concepts, terminology, and developer motivation. A Smart Grocery Recommendation System will be used as a running example.

- How composite vector indexes are implemented inside the Couchbase Indexing Service.

- How ORDER BY pushdown works for composite vector queries.

- Real-world performance behavior and benchmarking results.

Implementation of Composite Vector Indexes

GSI uses the FAISS index factory under the hood. An index string like the one shown below will be constructed from the description field given in the DDL.

```
IVF{nlist}_HNSW,{PQ{M}x{N}|SQ{n}}
```

Example: with a description string like “IVF10000,PQ32x8”, a FAISS index factory string like “IVF10000_HNSW,PQ32x8” is constructed.

However, this factory string is used only as a building block during index training and configuration. GSI does not rely on FAISS as a monolithic in-memory index over the full dataset. Instead, FAISS is applied selectively on sampled data to learn centroids and quantization codebooks, while the full index layout, storage, mutation handling, and query execution are managed by Couchbase. This allows Couchbase to scale vector search efficiently, integrate scalar filtering and query semantics, and support continuous updates - capabilities that extend well beyond invoking a standalone FAISS index.
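The mapping from the DDL description to the factory string can be illustrated with a small sketch. The helper name `build_factory_string` is hypothetical and is not part of Couchbase or FAISS. It only handles descriptions with an explicit nlist, since how GSI chooses nlist when it is omitted (as in "IVF,SQ8") is not covered here.

```python
import re


def build_factory_string(description: str) -> str:
    """Turn a description such as "IVF10000,PQ32x8" into a FAISS-style
    factory string such as "IVF10000_HNSW,PQ32x8".

    Hypothetical helper for illustration; only an explicit nlist is handled.
    """
    match = re.fullmatch(r"IVF(\d+),(PQ\d+x\d+|SQ\d+)", description.strip())
    if match is None:
        raise ValueError(f"unsupported description: {description!r}")
    nlist, quantizer = match.groups()
    # "_HNSW" requests an HNSW graph over the centroids as the coarse quantizer.
    return f"IVF{nlist}_HNSW,{quantizer}"


print(build_factory_string("IVF10000,PQ32x8"))  # IVF10000_HNSW,PQ32x8
```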

Embeddings

The embedding layer takes the relevant text fields, sends them through a transformer model, and produces semantic vectors that represent each product. These vectors power the ANN search. The application must store these embeddings alongside the product data so the vector index can use them, and it must ensure that the stored embeddings match the vector index definition (for example, fixed dimension and numeric type) expected by Couchbase.

```json
{
  "product_name": "almond butter",
  "sugars_100g": 15,
  "proteins_100g": 20,
  "description": "almond butter with chocolate chips",
  "text_vector": [0.12, -0.04, 0.33, 0.25, ...]
  ...
}
```
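A minimal sketch of the application-side check described above, run before a document is written. The function name `validate_embedding` and its defaults are assumptions for illustration, with the dimension of 384 taken from the index definition used in this post.

```python
from numbers import Real


def validate_embedding(doc: dict, field: str = "text_vector", dimension: int = 384) -> list:
    """Return the embedding as a list of floats, or raise ValueError.

    Hypothetical pre-write check: fixed dimension and numeric elements only.
    """
    vector = doc.get(field)
    if not isinstance(vector, list):
        raise ValueError(f"{field} must be a list of numbers")
    if len(vector) != dimension:
        raise ValueError(f"{field} has {len(vector)} dimensions, expected {dimension}")
    for position, value in enumerate(vector):
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, Real):
            raise ValueError(f"{field}[{position}] is not numeric: {value!r}")
    return [float(value) for value in vector]


doc = {"product_name": "almond butter", "text_vector": [0.12, -0.04, 0.33, 0.25]}
print(validate_embedding(doc, dimension=4))  # [0.12, -0.04, 0.33, 0.25]
```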

Index Creation and Build

```sql
CREATE INDEX idx_vec_food
ON food (
  text_vector VECTOR,
  sugars_100g,
  proteins_100g,
  product_name
)
WITH {
  "dimension": 384,
  "similarity": "L2",
  "description": "IVF,SQ8"
};
```

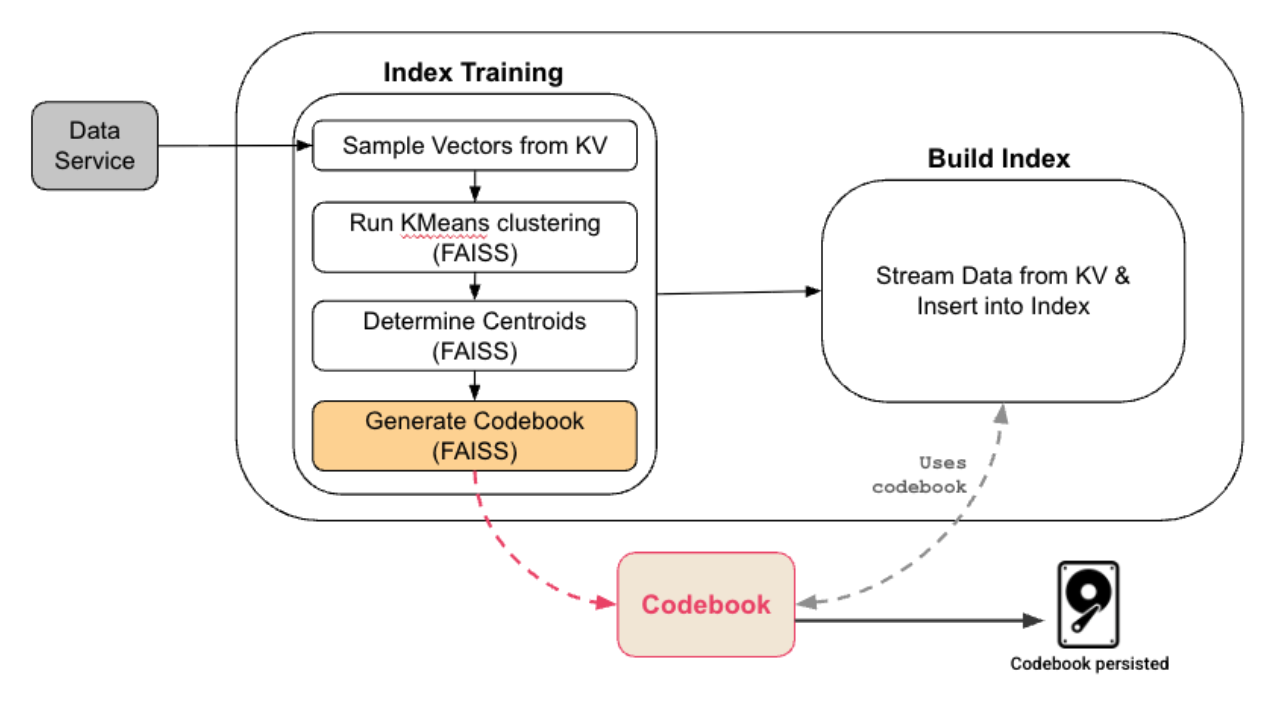

Create and Build Flow

- Provided enough vectors are stored, GSI randomly samples the data to create a representative dataset.

- This representative dataset is used to train the FAISS index. After training, GSI persists the codebook, which includes the IVF inverted list and the PQ codebooks:

  - IVF splits the vector space into nlist partitions, and each partition is represented by a centroid.

  - PQ or SQ quantization is used per partition to compress the vectors.

  - An HNSW graph of the centroids is created; it is useful when the number of centroids is very large.

- Data is streamed from the Data Service using the DCP protocol, and for each document received:

  - The document is assigned to the partition whose centroid is nearest to its vector, for example centroid C1.

  - During indexing, each document’s vector is assigned to its nearest centroid, stored in a compact encoded form optimized for fast distance computation, and tracked efficiently so future updates only reprocess the vector if it actually changes.
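The centroid assignment step can be sketched in a few lines. This is an illustration of nearest-centroid assignment under L2 distance, not the indexer's code: the centroids and documents are made-up two-dimensional values, and the real index stores quantized codes rather than raw vectors.

```python
def squared_l2(a, b):
    """Squared Euclidean distance; ordering matches true L2 distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(vector, centroids):
    """Return the id (list position) of the centroid closest to vector."""
    return min(range(len(centroids)), key=lambda cid: squared_l2(vector, centroids[cid]))


# Hypothetical centroids learned during training.
centroids = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]

assignments = {}
for doc_id, vec in [("a", [0.1, -0.2]), ("b", [0.9, 1.2]), ("c", [4.0, 6.0])]:
    assignments[doc_id] = nearest_centroid(vec, centroids)

print(assignments)  # {'a': 0, 'b': 1, 'c': 2}
```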

Note

If the underlying vector distribution changes significantly, the index must be rebuilt so that it is retrained on the new data. This can happen due to:

- A change in the embedding model (e.g., switching models or dimensions)

- Major shifts in the data distribution (new product categories, language changes, or domain drift)

- Re-embedding existing documents with updated embeddings

How Many Documents Are Needed to Train a Vector Index?

When building a vector index, Couchbase automatically samples vector data to train the index for efficient and accurate ANN search.

In general, the collection must contain at least as many documents as the number of centroids (nlist) configured for the index, since each centroid requires training data.

When product quantization (PQ) is used, this minimum becomes max(nlist, 2^nbits), where nbits is N from PQ{M}x{N}, to ensure sufficient data for quantizer training. For example, PQ32x8 requires at least max(nlist, 256) documents.

Couchbase handles training as follows:

- Small datasets (up to ~10,000 documents):

  - All vectors are used for training; no sampling is required.

- Larger datasets:

  - By default, Couchbase selects a training set by taking the larger of the following, while capping the final sample size at 1 million vectors:

    - 10% of the dataset, and

    - 10x the number of centroids (nlist)

  - This approach balances training quality with index build time.

Best practice: For stable, high-quality training, aim for at least 10 vectors per centroid, which allows the index to learn the underlying vector distribution effectively.

Advanced control: If needed, you can explicitly control the number of training vectors by specifying the train_list parameter when creating the index.

Couchbase manages the sampling and training process automatically. As a user, the key requirement is to ensure that a sufficient number of embedded documents exist before building the index.
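The sizing rules in this section can be turned into a short calculation. This is a sketch of the rules as stated here, not Couchbase's implementation: the function names are hypothetical, the 10,000 threshold is treated as exact although the text gives it as approximate, and clamping the sample to the number of available documents is an added assumption.

```python
from typing import Optional

SMALL_DATASET_LIMIT = 10_000       # "up to ~10,000 documents", treated as exact here
MAX_TRAINING_VECTORS = 1_000_000   # default cap on the training sample


def min_docs_for_training(nlist: int, pq_nbits: Optional[int] = None) -> int:
    """Minimum documents needed: nlist, or max(nlist, 2^nbits) with PQ."""
    if pq_nbits is None:
        return nlist
    return max(nlist, 2 ** pq_nbits)


def default_training_sample(num_docs: int, nlist: int) -> int:
    """Default training-set size following the rules described above."""
    if num_docs <= SMALL_DATASET_LIMIT:
        return num_docs  # small dataset: every vector is used
    wanted = max(num_docs // 10, 10 * nlist)
    # Assumption: the sample cannot exceed the documents that exist.
    return min(wanted, MAX_TRAINING_VECTORS, num_docs)


print(min_docs_for_training(100, pq_nbits=8))        # 256
print(default_training_sample(200_000, 1_000))       # 20000
print(default_training_sample(50_000_000, 10_000))   # 1000000
```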

Index Scan

```sql
SELECT product_name
FROM food
WHERE sugars_100g < 20 AND proteins_100g > 10
ORDER BY APPROX_VECTOR_DISTANCE(text_vector, [query_embedding], 'L2')
LIMIT 10;
```

Scan Flow

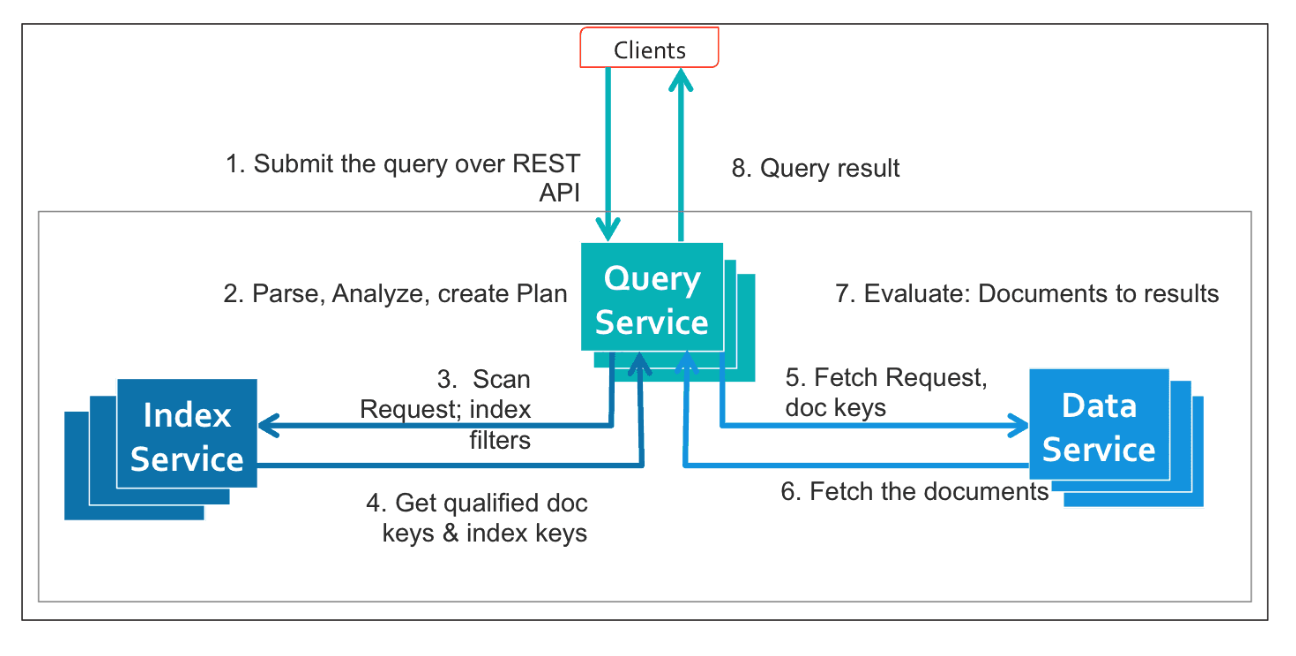

When a query containing a vector search arrives, Couchbase follows a well-defined sequence to route it through the right components and prepare the scan.

If sargable vector indexes have been created and are active, the Query Service can use them to serve filtered ANN queries faster.

The scan coordinator in the indexer process receives the following inputs, along with the index name and consistency parameters:

- Scalar filters on indexed fields

  - Equality or range predicates like sugars_100g < 20

- The query vector

  - Embedding of the user’s search intent

- The result size k

  - How many similar items the user wants back

  - This is given in the LIMIT clause

- The number of nearest centroids n

  - How much of the vector space we want to search

  - The nprobes value n is a parameter to APPROX_VECTOR_DISTANCE

After getting the above information, a scan request is formed, which goes through the following steps:

- An index snapshot is fetched based on the given consistency parameters.

  - If the user needs session consistency, the indexer fetches the latest timestamp from the Data Service and waits until it has caught up and generated a consistent snapshot that can serve the query.

  - If the user is fine with any consistency, the indexer uses its cached snapshot without waiting.

- The indexer waits for the storage readers needed to serve the query.

  - Because the indexer’s resources are limited, it is configured with a maximum number of readers that can run concurrently; on a busy system, scans must wait for readers to become free.

- The indexer fetches the codebooks for the partitions being scanned for the given query.

  - Note that the codebook (IVF inverted list and PQ codebooks) does not change after the initial training phase, so there are no snapshots for the codebook.

- Using the codebooks fetched above, the indexer finds the nprobes centroids nearest to the query vector in every partition that needs to be scanned.

- For a given index partition, scalar filters and nearest centroids are combined to form non-overlapping scan ranges to read data from the index.

- The indexer then sets up a dedicated scan pipeline. Think of this pipeline as a small, short-lived assembly line built specifically for that query. The pipeline stages (Read, Aggregate, and Write) run in parallel to speed up the query.
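Two of the steps above, picking the nprobes nearest centroids and combining them with a scalar filter into scan ranges, can be sketched as follows. This is a simplified illustration that assumes a vector-leading index with a single scalar range; the `(centroid_id, low, high)` tuple layout is hypothetical and does not reflect the indexer's internal key encoding.

```python
import heapq


def nearest_centroids(query, centroids, nprobes):
    """Return ids of the nprobes centroids closest to query (squared L2)."""
    def dist(cid):
        return sum((q - c) ** 2 for q, c in zip(query, centroids[cid]))
    return heapq.nsmallest(nprobes, range(len(centroids)), key=dist)


def scan_ranges(centroid_ids, low, high):
    """One (centroid_id, low, high) range per selected centroid.

    Ranges for different centroid ids cannot overlap, so each one can be
    handed to a separate scan worker. None marks an open bound.
    """
    return [(cid, low, high) for cid in sorted(centroid_ids)]


centroids = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [9.0, 9.0]]
probe = nearest_centroids([0.8, 0.9], centroids, nprobes=2)
print(probe)                          # [1, 0]
print(scan_ranges(probe, None, 20))   # [(0, None, 20), (1, None, 20)]
```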

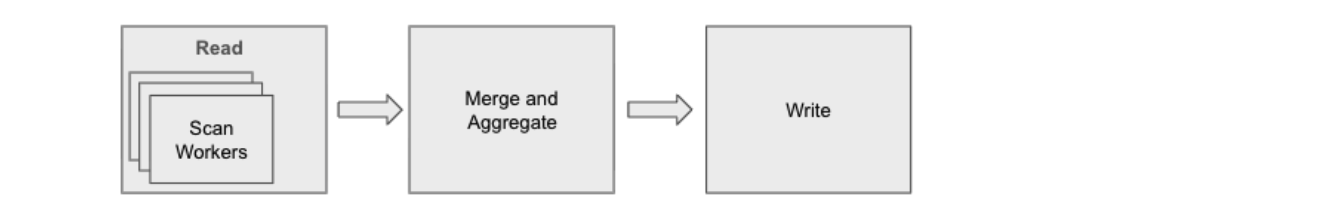

Scan Pipeline

The scan pipeline has three stages: read, aggregate, and write. The stages run concurrently, and each one streams its output into the next.

- Read Stage => Parallel Scanning + Scalar Filtering

  - The read stage fans out across multiple scan workers.

  - Each worker:

    - Scans and reads its assigned index range.

    - Applies scalar filters early to prune out irrelevant items.

    - Forwards only the qualifying documents to the next stage.

  - This early pruning is crucial; it prevents unnecessary vector distance calculations later.

- Aggregate Stage => Ordering + Vector Distance Computation

  - The aggregate stage collects results from all workers and performs the “heavy lifting.”

  - It merges and orders items if required.

  - It substitutes centroid-based approximate distances with actual computed distances.

  - It maintains a top-k heap to track the best candidates so far.

  - This stage is where ANN logic meets scalar filtering.

- Write Stage => Streaming Results Back to the GSI Client in Query Service

  - Finally, the write stage streams the aggregated results to the Query Service.

  - If the index is distributed across multiple indexer nodes, results from each node are merged.

  - From there, the query processor may perform additional operations such as:

    - Coarse-grained filtering

    - Joins

    - Projections or final sorting

The user ultimately receives a clean, ordered list of the nearest neighbors that satisfy all scalar conditions.
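The read and aggregate stages can be sketched as a generator feeding a bounded heap. This is a single-threaded illustration with made-up entries and raw vectors; the actual indexer runs the stages in parallel and computes distances from its encoded vectors, so treat the names and data layout as assumptions.

```python
import heapq


def read_stage(entries, scalar_filter):
    """Yield only the index entries that pass the scalar filter."""
    for entry in entries:
        if scalar_filter(entry):
            yield entry


def aggregate_stage(candidates, query, k):
    """Keep the k entries with the smallest squared L2 distance to query."""
    heap = []  # negated distances make heap[0] the worst candidate kept so far
    for seq, entry in enumerate(candidates):
        dist = sum((q - v) ** 2 for q, v in zip(query, entry["vector"]))
        item = (-dist, seq, entry)  # seq breaks ties so entries are never compared
        if len(heap) < k:
            heapq.heappush(heap, item)
        elif item > heap[0]:
            heapq.heapreplace(heap, item)
    # Sort so the nearest candidate comes first.
    return [(-neg, entry) for neg, _, entry in sorted(heap, reverse=True)]


entries = [
    {"name": "almond butter", "sugars_100g": 15, "proteins_100g": 20, "vector": [0.1, 0.2]},
    {"name": "candy bar", "sugars_100g": 55, "proteins_100g": 4, "vector": [0.1, 0.2]},
    {"name": "protein bar", "sugars_100g": 8, "proteins_100g": 30, "vector": [0.9, 0.8]},
    {"name": "greek yogurt", "sugars_100g": 4, "proteins_100g": 11, "vector": [0.3, 0.1]},
]


def passes(entry):
    return entry["sugars_100g"] < 20 and entry["proteins_100g"] > 10


top = aggregate_stage(read_stage(entries, passes), query=[0.0, 0.0], k=2)
names = [entry["name"] for _, entry in top]
print(names)  # ['almond butter', 'greek yogurt']
```

The candy bar is dropped in the read stage, so its distance is never computed, which is the early pruning described above.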

Advanced Query Behavior in Composite Vector Index Scans

Once you understand how a vector scan pipeline works, there are several deeper concepts that influence how efficiently your queries run. These behaviors are tied to how the index is defined and how scalar predicates interact with vector partitions.

Scan Parallelism

Each query has a natural limit on how much parallelism it can exploit. This inherent parallelism comes from how many non-overlapping index ranges can be generated from the scalar predicates and nprobes. More disjoint ranges mean more opportunities to scan in parallel.

But scalar constraints aren’t the only factor. The actual number of workers the system uses is the minimum of:

- How much inherent parallelism the query can expose

- How many centroids the query needs to search

- How many centroids the index contains

- The configured maximum workers per scan per partition

This prevents the system from oversubscribing CPU or creating unnecessary threads when there is no additional parallel work to do.
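As a worked example of the rule above, the worker count is simply the smallest of the four limits. The function and parameter names are hypothetical labels for the four factors listed and do not correspond to actual indexer settings.

```python
def scan_workers(inherent_parallelism: int,
                 centroids_to_search: int,
                 centroids_in_index: int,
                 max_workers_per_scan: int) -> int:
    """Number of scan workers: the minimum of the four limits."""
    return min(inherent_parallelism,
               centroids_to_search,
               centroids_in_index,
               max_workers_per_scan)


# 12 disjoint ranges, nprobes of 8, 1000 centroids, at most 4 workers allowed.
print(scan_workers(12, 8, 1000, 4))   # 4
# Only 2 disjoint ranges exist, so extra workers would have nothing to do.
print(scan_workers(2, 8, 1000, 16))   # 2
```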

Leading Key Predicates - Where Parallelism Begins

The predicates on the leading index keys (the first fields listed in the composite index definition) determine how much parallelism your query can exploit.

If a leading scalar key has multiple equality predicates, each becomes an independent scan range, allowing the system to fan out and execute them in parallel.

If the leading key uses a range predicate, the entire query collapses into one large scan range, which reduces parallelism.

In short:

- Equality on leading keys → maximum parallelism

- Range on leading keys → one big sequential scan

Choosing the right leading keys in the index definition has a direct impact on latency.

Vector predicates effectively behave like equality filters on centroid IDs, selecting a fixed set of clusters to scan.
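The difference between equality and range predicates on a leading key can be sketched as the number of scan ranges each one produces. The predicate encoding used here (`("eq", values)` and `("range", low, high)`) is invented for illustration and is not how the Query Service represents predicates.

```python
def leading_key_scan_ranges(predicate):
    """Return the (low, high) scan ranges produced by a leading-key predicate.

    ("eq", [v1, v2, ...]) -> one point range per distinct value
    ("range", low, high)  -> a single contiguous range
    """
    kind = predicate[0]
    if kind == "eq":
        return [(value, value) for value in sorted(set(predicate[1]))]
    if kind == "range":
        return [(predicate[1], predicate[2])]
    raise ValueError(f"unknown predicate kind: {kind!r}")


# Three equality values: three independent ranges that can be scanned in parallel.
print(leading_key_scan_ranges(("eq", [5, 10, 15])))   # [(5, 5), (10, 10), (15, 15)]
# One range predicate: a single scan range.
print(leading_key_scan_ranges(("range", 0, 20)))      # [(0, 20)]
```

Selecting centroids by nprobes behaves like the equality case, with one point range per centroid ID.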

Scalar Selectivity - How Much Work You Don’t Have to Do

Scalar selectivity measures how many data points can be eliminated by a scalar filter.

A highly selective filter removes a large portion of the dataset before vector distance calculations even begin.

For example:

- sugars_100g < 20 may eliminate 70% of items

- proteins_100g > 10 may eliminate 90% of items

The more selective the scalar, the fewer vectors the index must compare.

Composite vector index scan throughput increases proportionally with scalar selectivity, because the pipeline spends less time reading documents and computing distances.
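A quick calculation shows how the two example filters combine. It assumes the filters are statistically independent, which real data often is not, and the count of one million candidate vectors is a made-up figure.

```python
import math


def surviving_fraction(eliminated_fractions):
    """Fraction of items left after applying every filter.

    Assumes the filters are independent of one another.
    """
    return math.prod(1.0 - fraction for fraction in eliminated_fractions)


# sugars_100g < 20 eliminates 70%, proteins_100g > 10 eliminates 90%.
fraction = surviving_fraction([0.70, 0.90])
candidates = 1_000_000  # hypothetical vectors in the probed centroids

print(round(fraction, 4))            # 0.03
print(round(candidates * fraction))  # 30000
```

Under these assumptions only about 3% of the candidates reach the distance computation step.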

Pagination - Ordered Vector Results

Composite vector index scans produce a global order for the result set.

This happens because the index substitutes centroid IDs with actual vector distances, giving each candidate a precise distance metric.

You can use LIMIT and OFFSET to paginate through results as long as enough qualifying items exist within the selected n centroids.

This makes composite vector indexes suitable for UIs that require infinite scroll or page-by-page product browsing.
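A small sketch of how an application might turn a page number into LIMIT and OFFSET values for the query used in this post. The helper name `page_query` and the `$query_embedding` named parameter are assumptions for illustration; the embedding itself would be supplied as a query parameter at execution time.

```python
def page_query(page: int, page_size: int = 10) -> str:
    """Build the filtered ANN query for a 1-based page number."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    offset = (page - 1) * page_size
    return (
        "SELECT product_name FROM food "
        "WHERE sugars_100g < 20 AND proteins_100g > 10 "
        "ORDER BY APPROX_VECTOR_DISTANCE(text_vector, $query_embedding, 'L2') "
        f"LIMIT {page_size} OFFSET {offset}"
    )


print(page_query(3)[-18:])  # LIMIT 10 OFFSET 20
```

Deeper pages need more qualifying items, so the number of centroids searched may have to grow with the offset.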

Flexibility in Index Definition - Tailor the Index to the Workload

Composite vector index DDL gives you full control over the ordering of index keys.

This order directly affects how filtering is performed, which in turn impacts query performance.

This flexibility enables multiple pruning strategies:

- Vector-centric pruning

  - Start the index with the vector key, followed by scalars:

    - CREATE INDEX idx ON food(text_vector VECTOR, sugars_100g, proteins_100g)

  - Good for workloads driven primarily by vector similarity.

- Scalar-centric pruning

  - Place highly selective scalar fields first, then the vector key:

    - CREATE INDEX idx ON food(sugars_100g, proteins_100g, text_vector VECTOR)

  - This is the most common and most efficient pattern because selective scalars prune aggressively before vector evaluation begins.

- Vector-only pruning

  - Create an index only on the vector key:

    - CREATE INDEX idx ON food(text_vector VECTOR)

  - This is useful when scalar fields have poor selectivity and won’t help prune the search space.

- Scalar-only pruning

  - Create a traditional non-vector index for queries that don’t use vector similarity.

Scalar-Only Query Support and Covering Scans

When the scalar predicates in a query are sargable against the scalar keys of a composite vector index, the index can be used to serve purely scalar queries - even when no vector similarity condition is present. In such cases, the composite vector index behaves like a traditional secondary index and can act as a covering index, allowing the query to be satisfied entirely from the index without fetching documents from the Data Service.

The scalar-only query:

```sql
SELECT product_name
FROM food
WHERE sugars_100g < 20 AND proteins_100g > 10;
```

Is sargable for:

```sql
CREATE INDEX idx_vec_food
ON food(sugars_100g, proteins_100g, text_vector VECTOR, product_name);
```

And not for:

```sql
CREATE INDEX idx_bad
ON food(text_vector VECTOR, sugars_100g, proteins_100g);
```

This is because:

- The leading key is a vector

- GSI cannot seek or range-scan on it

- The index cannot be used efficiently for scalar predicates
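The reasoning above can be condensed into a simplified check. It is only a sketch of the leading-key rule and the covering condition as described in this section; the real query planner applies richer rules, and the function name and arguments are hypothetical.

```python
def classify_index(index_keys, vector_key, predicate_fields, projected_fields):
    """Return (sargable, covering) for a scalar-only query.

    Simplified rule: the leading key must be a scalar used in a predicate;
    the index covers the query if it holds every referenced field.
    """
    leading = index_keys[0]
    sargable = leading != vector_key and leading in predicate_fields
    referenced = set(predicate_fields) | set(projected_fields)
    covering = sargable and referenced <= set(index_keys)
    return sargable, covering


predicates = ["sugars_100g", "proteins_100g"]
projection = ["product_name"]

idx_vec_food = ["sugars_100g", "proteins_100g", "text_vector", "product_name"]
idx_bad = ["text_vector", "sugars_100g", "proteins_100g"]

print(classify_index(idx_vec_food, "text_vector", predicates, projection))  # (True, True)
print(classify_index(idx_bad, "text_vector", predicates, projection))       # (False, False)
```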

Limitations as of the Couchbase 8.0.0 Release

- If the distribution of vectors changes due to changes in the model that is being used - for example, data drift, feature drift, or quantization drift - users must drop and create the index again.

- GSI needs a minimum number of vectors in the dataset to create a representative dataset for training. Index creation during DDL or rebalance will fail if this criterion is not met.

- Only one vector field can be indexed.

- Array indexes are not supported in combination with a vector key.

Up to this point, we’ve seen how composite vector indexes combine scalar pruning with vector similarity efficiently. But similarity alone is rarely sufficient in real applications. What happens when you want results that are both semantically close to a query and ordered according to application-specific signals such as nutrition, price, or freshness, without pulling large result sets back to the query layer?

In the next part of this series, we’ll dive into Filtered ANN Search With Composite Vector Indexes and show how Couchbase pushes complex ORDER BY and LIMIT semantics directly into the indexer, allowing distance-based similarity and scalar ordering to be evaluated together in a single, efficient execution path.