Building real-time applications often requires connecting operational databases like Couchbase with data streaming platforms such as Confluent. But managing these integrations can be complex, requiring teams to deploy connectors, configure networking, manage schemas, and monitor performance. These challenges slow development and increase operational overhead.

We’re excited to announce the fully managed Couchbase Connector for Confluent Cloud, now available in both Sink and Source configurations. This launch eliminates the need to manage connector infrastructure, enabling seamless, bi-directional data movement between Confluent Cloud and Couchbase. It’s a significant step forward in reducing complexity and accelerating time to value for developers and platform teams building real-time, event-driven applications.

Why fully managed connectors matter

Managing your own Apache Kafka® connectors can be complex and time-consuming. It requires much more than downloading a plugin and pointing it at a topic. Users must:

-

- Deploy and scale Kafka Connect clusters.

- Monitor performance, errors, and retries.

- Ensure schema compatibility via Schema Registry.

- Maintain secure authentication and networking.

- Scale infrastructure to meet variable load conditions.

All of this adds up to operational overhead that distracts from core business goals. Fully managed connectors on Confluent Cloud eliminate these challenges. Just configure via the UI or API, and Confluent takes care of the rest, including deployment, scaling, error handling, and lifecycle management, enabling you to focus on building real-time apps.

Use cases

With real-time data movement now simplified, developers can unlock powerful use cases like:

-

- Retail: Stream clickstream or inventory updates into Couchbase to power user profile enrichment for real-time personalization.

- IoT: Ingest edge data into Couchbase for real-time analytics and dashboarding.

- Finance: Persist Kafka events from fraud detection or transaction systems into Couchbase for instant lookup and alerting.

- AI: By combining Confluent’s real-time data streaming with Couchbase vector store, organizations can seamlessly ingest and operationalize data to drive intelligent, real-time agentic and RAG applications.

Couchbase Sink Connector

The Couchbase Sink Connector allows you to stream data from Kafka topics directly into your Couchbase collections. It supports a wide variety of data formats, including AVRO, JSON, JSON Schema, PROTOBUF, and BYTES, whether you’re running a schemaless microservice architecture or have a strongly typed schema pipeline.

Key features

-

- At least once delivery semantics: Ensures reliability even during transient failures

- Granular topic-to-collection mapping: Route Kafka topics to specific Couchbase collections using

couchbase.topic.to.collection - Custom document ID logic: Override how document keys are generated via

couchbase.topic.to.document.id - Flexible handlers: Customize how records are transformed using built-in or custom sink handlers

- Secure authentication: Connects securely with password-based authentication

Step-by-step setup: Couchbase Sink Connector

Prerequisites

Before configuring the Couchbase Sink Connector in Confluent Cloud, ensure the following prerequisites are met:

-

- Confluent Cloud: A running Kafka cluster in Confluent Cloud (on AWS, Azure, or GCP). Not yet a Confluent customer? Start your free trial of Confluent Cloud today—new signups receive $400 to spend during their first 30 days.

- Couchbase Database: A Couchbase Server or Capella cluster accessible from Confluent Cloud.

- Networking:

- Kafka and Couchbase clusters should reside in the same cloud region for the lowest cost and best latency.

- Ensure network connectivity via Public IP, VPC Peering, or Private Endpoints such as AWS PrivateLink.

- Authentication Credentials:

- Couchbase username and password with write access to the target bucket and collection.

- Kafka service account or API key/secret for the connector.

- Schema Registry (if using typed formats):

- Enable Schema Registry for AVRO, JSON Schema, or PROTOBUF message formats.

- Kafka Topics:

- At least one existing Kafka topic to stream from, or create a new one during setup.

Setup in Confluent Cloud Console

Step 1: Launch a Confluent Cloud Kafka cluster

Create a Kafka cluster in the cloud provider and region that aligns with your Couchbase deployment.

Step 2: Navigate to the Connectors tab

Go to the Confluent Cloud Console and click on the Connectors tab. Click + Add connector.

Step 3: Select the Couchbase Sink Connector

Search for and select the Couchbase Sink connector card from the catalog.

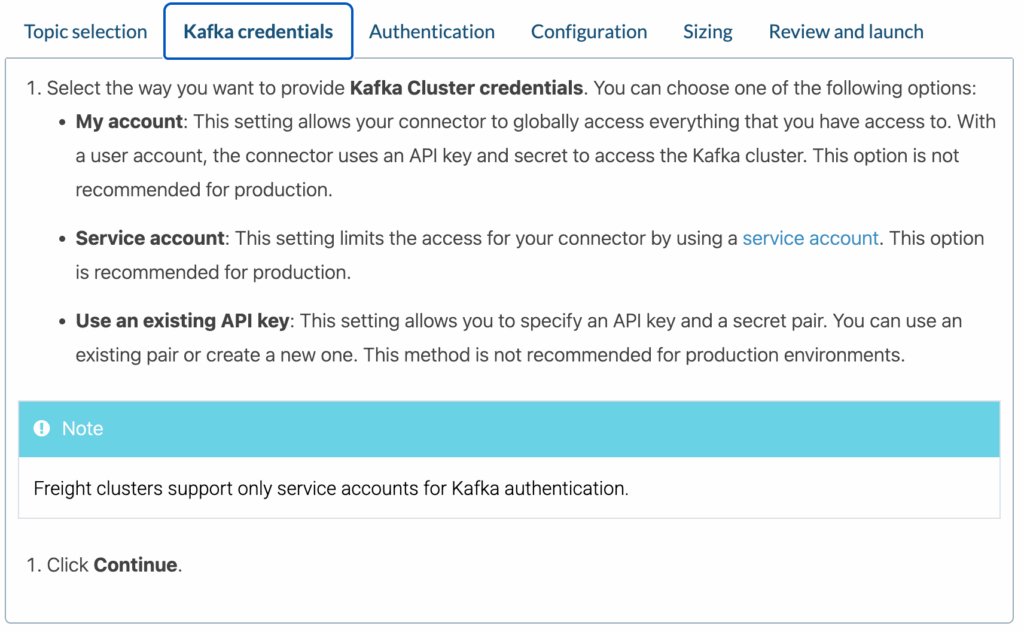

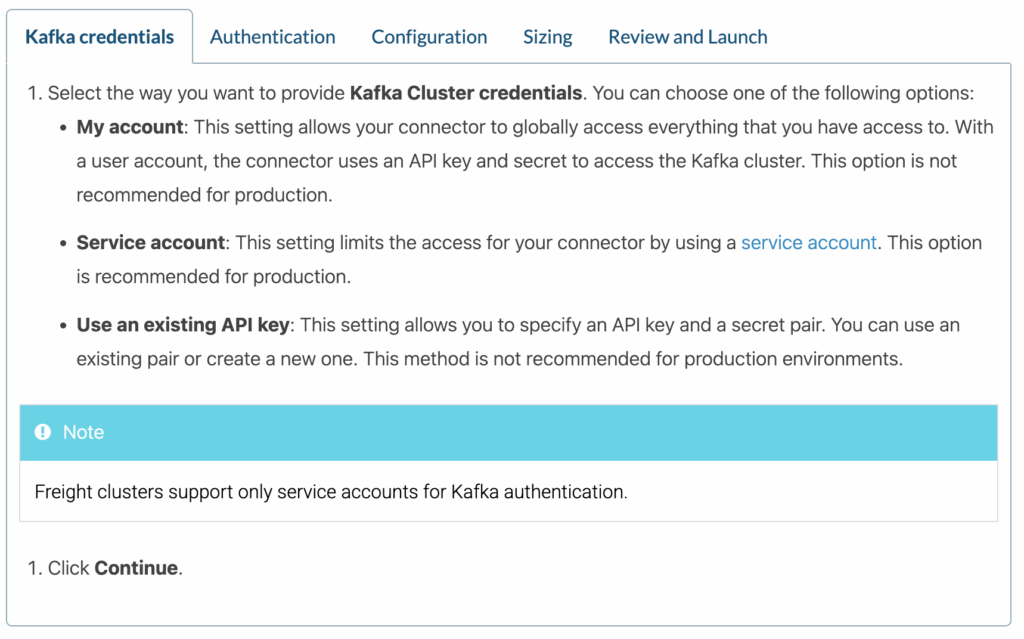

Step 4: Configure connector settings

You’ll be prompted to enter the following:

-

- Kafka topics to consume from

- Couchbase connection details:

- Hostname or connection string

- Bucket, scope, and collection names

- Username and password for authentication

- Input data format (AVRO, JSON, etc.)

- Schema Registry configuration (if applicable)

- Sink behavior parameters, such as:

couchbase.topic.to.collectioncouchbase.topic.to.document.idcouchbase.sink.handler

- Delivery semantics and error handling options

- Sizing: Select capacity or auto-scaling options

Step 5: Review and launch the connector

After reviewing all configurations, click Launch. The connector will be deployed and begin ingesting records from Kafka into Couchbase.

Step 6: Verify data ingestion

Once the connector is running, verify that data is landing in your Couchbase collections as expected. Use Couchbase UI or query tools to inspect ingested documents.

Couchbase Source Connector

In addition to writing to Couchbase, the Couchbase Source Connector will allow users to ingest data from Couchbase into Kafka, enabling powerful event-driven architectures and AI/ML pipelines fueled by operational data.

Key features

-

- Change Data Capture (CDC) support: Leverages Couchbase’s Database Change Protocol (DCP) to stream inserts, updates, and deletes from Couchbase collections into Kafka.

- Per-collection to topic mapping: Automatically maps Couchbase collections to Kafka topics using

couchbase.collection.to.topic. - Flexible read strategies: Supports streaming the full bucket, timestamp-based incremental reads, and real-time streaming using DCP.

- Schema-aware serialization: Supports AVRO, JSON, PROTOBUF, and JSON Schema formats with optional Confluent Schema Registry integration.

- Custom filtering and projection: Allows configuring filters to include or exclude documents based on attributes, types, or custom logic.

- Durable offset tracking: Tracks read progress per document and collection, ensuring continuity and minimizing reprocessing on restarts.

- Secure authentication: Uses password-based authentication with support for TLS connections to Couchbase.

- Auto-generated Kafka topics: Dynamically creates Kafka topics based on collection mappings if not predefined.

Step-by-step setup: Couchbase Source Connector

Prerequisites

-

- Confluent Cloud cluster with available source connector slots

- A Couchbase cluster with DCP enabled (Enterprise Edition) or a fully managed Couchbase Capella deployment

- Network accessibility from Confluent Cloud to Couchbase (via public IP or PrivateLink)

- Kafka topics pre-created or dynamically defined via topic mapping

- Schema Registry enabled for typed message formats

Setup in Confluent Cloud Console

Step 1: Launch your Confluent cluster

Ensure your Confluent Cloud cluster is running and in the same region as Couchbase

Step 2: Navigate to the Connectors page

Click + Add connector from the Confluent Cloud UI

Step 3: Select Couchbase Source Connector

Choose the Couchbase Source connector

Step 4: Configure connection parameters

-

- Couchbase host and port

- Bucket, scope, collection settings

- Polling mode: full scan, incremental with timestamp, or DCP-based CDC

- Document filtering options

- Data format: JSON, AVRO, PROTOBUF

- Kafka topic naming strategy (e.g.,

couchbase.collection.to.topic)

Step 5: Authentication & security

-

- Enter credentials for Couchbase access

- Configure any required TLS or encryption settings

- Set up network connectivity (VPC peering, PrivateLink, or public IP)

Step 6: Launch and monitor

After review, deploy the connector. Monitor record flow into Kafka topics using Confluent Cloud’s monitoring tools or topic viewers.

Once live, the connector will stream events from your Couchbase database. Infrastructure is managed, scaled and debugged for you.

Ensure that your Couchbase service and Kafka cluster are in the same cloud region for optimal cost and performance. For even better performance and lower cost consider configuring Private Endpoints or VPC peering as needed.

Watch our tutorial video to learn how to set up and configure the fully managed Couchbase Connector for Confluent Cloud. You’ll see how to connect Kafka topics, configure Couchbase settings, and start streaming data in just a few clicks.

Try it today

With the fully managed Couchbase Connector now available on Confluent Cloud, you can rapidly build modern, event-driven applications with minimal setup and maximum operational efficiency.

Ready to get started?

-

- Log in to Confluent Cloud or start your free trial of Confluent Cloud

- Launch the Couchbase Sink or Source Connector in just minutes

- Follow the guides for configuration: