Eventing, Simple Yet Powerful:

Eventing allows small scripts to overcome hard-to-solve problems.

If you are already familiar with both Couchbase and Eventing, feel free to skip the brief overview and jump ahead to the examples.

Overview:

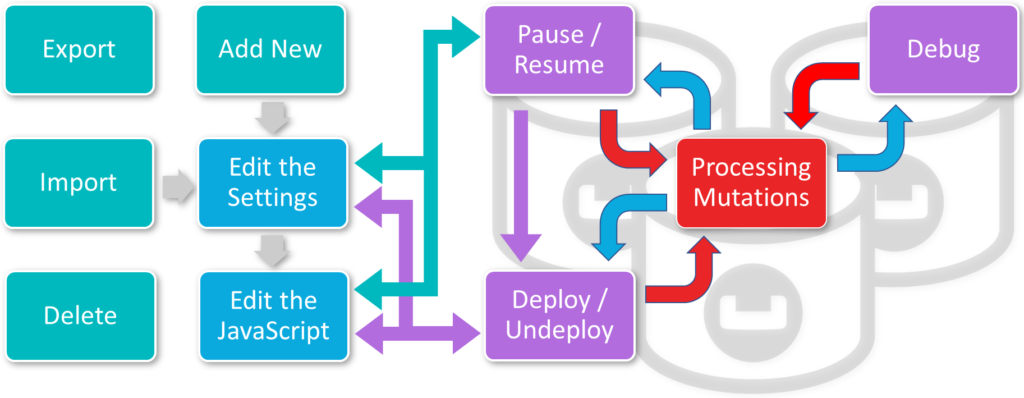

First off, let’s look at the basic Eventing Lifecycle.

The steps below show how easy it is to write and use an Eventing Function:

- Add (or import) an Eventing Function via Couchbase Server’s UI.

- Assign a data source, a scratchpad bucket, and some bindings to manipulate documents or communicate with the outside world.

- Implement some JavaScript code to process the received mutation.

- Save your new Function and hit “Deploy”.

That’s it: you now have a distributed function responding to mutations in your data set in real time across your entire cluster.
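To make the lifecycle concrete, here is a minimal sketch of the shape of an Eventing Function. As in Couchbase Server 6.5, the service calls `OnUpdate(doc, meta)` for each inserted or updated document and `OnDelete(meta)` for each deletion or expiry. The `replayFeed` helper and the sample mutations are hypothetical: they exist only to fake a mutation feed so the handlers can be exercised outside Couchbase.

```javascript
// Handler names and parameters follow the Couchbase Eventing 6.5 convention.
function OnUpdate(doc, meta) {
    // Called for each inserted or updated document. Eventing ignores the return
    // value; it is returned here only so the local harness can observe the call.
    return "update " + meta.id;
}

function OnDelete(meta) {
    // Called for each deleted or expired document; only metadata is available.
    return "delete " + meta.id;
}

// Hypothetical local harness (not part of Couchbase): replays mutations in order,
// the way the Eventing service would deliver them to the handlers above.
function replayFeed(mutations) {
    return mutations.map(m => m.deleted
        ? OnDelete({ id: m.id })
        : OnUpdate(m.doc, { id: m.id, expiration: 0 }));
}

const events = replayFeed([
    { id: "demo::1", doc: { type: "demo" } },
    { id: "demo::1", deleted: true }
]);
```

In the real service there is no harness: deploying the Function is what connects these handlers to the live mutation stream of the source bucket.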

The Eventing service provides an infrastructure-less platform that scales your Eventing Functions as your business grows, whether through a one-time spike or a monthly increase in data stored or clients served, without you having to worry about how your JavaScript-based Eventing Functions run in a robust, reliable, parallel, distributed fashion. To learn more about Couchbase Eventing, please refer to the Eventing Overview in our documentation.

The examples in this article show that in some cases Eventing can act like a drop of oil that frees up the moving parts of your applications.

Couchbase, a database you build to order:

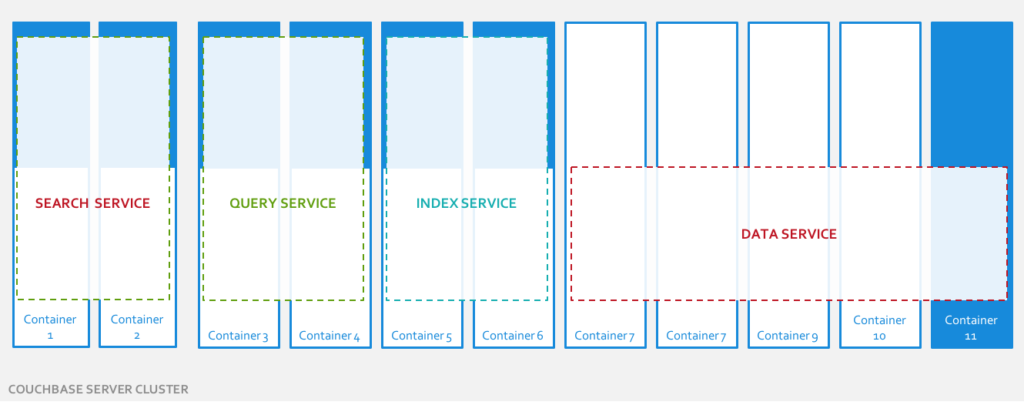

I like to think of a Couchbase cluster as a set of scalable interoperable “micro-services”. These services can be wired together and sized to meet a specific set of operational and business needs.

Couchbase provides Multi-Dimensional Scaling, or MDS, across six key services with minimal resource interference between them. Furthermore, each Couchbase service is independently scalable, simply by adding more nodes. This allows customers to create the ideal multi-node cluster at the lowest possible TCO for the task(s) at hand.

- The Data nodes easily scale to provide JSON-aware KV operations at scale, and single and multi-record lookups are extremely fast. Did I just mention that single and multi-record lookups are extremely fast? Yes, I did, but I really want to stress this.

- The Query nodes easily scale to provide N1QL, an extension of SQL that works natively with JSON documents and lets programmers get up and running quickly without having to learn a new way of thinking.

- The Index nodes easily scale to provide flexible indexes to speed up some or all N1QL queries.

- The Search nodes easily scale to provide full-text search for natural-language querying, featuring language-aware searching, scoring of results, and fast FTS-based indexes.

- The Analytics nodes easily scale to provide parallel data management capability that efficiently runs complex queries: large ad hoc join, set, aggregation, and grouping operations across large datasets.

- The Eventing nodes easily scale to provide a computing paradigm that developers can use to handle data changes (or mutations) and react to them in real time.

Like all product lines, Couchbase’s newer services (Query, Search, Eventing, and Analytics) have a few warts, but taken as a whole the complete basket provides a unified suite, a one-stop shop to solve a myriad of problems. Seriously, if you don’t care about a unified product and all you are going to do is use FTS, you might consider Elasticsearch; but once you need to integrate your FTS results with N1QL (SQL for JSON), you would have been much better off starting with Couchbase.

Today we are going to use just two services: 1) the primary KV service provided by the Data nodes and 2) the Eventing service. Through a few tiny JavaScript functions, I will highlight how you can overcome some hard at-scale problems by leveraging the Eventing service.

Prerequisites / Learning about Eventing:

In this article we will be using the latest GA version, i.e. Couchbase Server 6.5.1.

However, if you are not familiar with Couchbase or the Eventing service, please walk through GET STARTED and one Eventing example; specifically, refer to the following:

- Set up a working Couchbase 6.5.1 server as per the directions in Start Here!

- Understand how to deploy a basic Eventing Function as per the directions in the Data Enrichment example, specifically “Case 2”, where we use only one bucket, the ‘source’ bucket.

Enriching Data via Eventing:

A typical customer problem

A live production system has stored billions of documents. A new business need has arisen that requires the existing data to be enriched. This additional data requirement affects the entire document set: both old (historic) data and new (mutating) data. The business needs to keep the production system running non-stop and continuously respond to new real-time data.

Consider a somewhat contrived example application: a GeoIP lookup service. This utility needs a dataset that enables looking up countries by IPv4 address ranges. The initial implementation stored records as follows:

```json
{
  "type": "ip_country_map",
  "country": "RU",
  "ip_start": "7.12.60.1",
  "ip_end": "7.62.60.9"
}
```

Months later, business requirements changed. Engineering needed the JSON documents to be enriched with new fields: the numeric representations of the two existing IPv4 addresses.

```json
{
  "type": "ip_country_map",
  "country": "RU",
  "ip_end": "7.62.60.9",
  "ip_start": "7.12.60.1",
  "ip_num_start": 118242305,
  "ip_num_end": 121519113
}
```
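The new fields follow the usual rule A.B.C.D → A×256³ + B×256² + C×256 + D. For 7.12.60.1 that is 117440512 + 786432 + 15360 + 1 = 118242305, matching "ip_num_start" above. The sketch below is a stricter variant of that conversion; `ipv4ToNumber` is a hypothetical name chosen for this example, and returning `null` for malformed input is our own design choice, not something the original Function does.

```javascript
// Convert a dotted IPv4 string to its numeric form:
//   A.B.C.D  ->  A*256^3 + B*256^2 + C*256 + D
// Returns null (a choice made for this sketch) when the input is not a valid
// dotted-quad address, instead of silently producing NaN.
function ipv4ToNumber(ip) {
    if (typeof ip !== "string") return null;
    const parts = ip.split(".");
    if (parts.length !== 4) return null;
    let value = 0;
    for (const part of parts) {
        if (!/^\d{1,3}$/.test(part)) return null;
        const octet = Number(part);
        if (octet > 255) return null;
        // Multiply rather than bit-shift: JavaScript bitwise operators work on
        // 32-bit signed integers, so "<< 24" would go negative for 128.0.0.0 and up.
        value = value * 256 + octet;
    }
    return value;
}

const ipStart = ipv4ToNumber("7.12.60.1"); // 118242305
const ipEnd = ipv4ToNumber("7.62.60.9");   // 121519113
```

Accumulating with `value * 256 + octet` is the same sum as the four-term formula, just evaluated left to right.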

Eventing to the rescue

A simple fourteen (14) line JavaScript (2 of which are comments) Eventing Function can be written and deployed to solve the issue with minimal resources.

```javascript
function OnUpdate(doc, meta) {
    if (doc.type !== "ip_country_map") return;
    doc["ip_num_start"] = get_numip_first_3_octets(doc["ip_start"]);
    doc["ip_num_end"] = get_numip_first_3_octets(doc["ip_end"]);
    // src is a bucket alias to the source bucket in settings, write back to it
    src[meta.id] = doc;
}

function get_numip_first_3_octets(ip) {
    if (!ip) return 0;
    var parts = ip.split('.');
    // IP Number = A x (256*256*256) + B x (256*256) + C x 256 + D
    return (parts[0]*(256*256*256)) + (parts[1]*(256*256)) + (parts[2]*256) + parseInt(parts[3]);
}
```

By deploying the above Function with a “Feed boundary” set to “Everything”, all documents of type “ip_country_map” are processed and enriched.

The Eventing Function is left deployed, reacting to all new inserts and updates (mutations) in real time: new items are enriched, and existing items are re-enriched whenever “ip_start” or “ip_end” changes, so that “ip_num_start” and “ip_num_end” always hold the proper numeric representations.

Because the documents are enriched (the old fields are still present) the existing production applications will still work. All new or changed data is updated in real-time to the new schema. The GeoIP application components are decoupled via this simple Eventing Function such that they can be upgraded one at a time.
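Why bother with numeric fields? Because a range lookup becomes two integer comparisons. The sketch below assumes a hypothetical in-memory array of enriched documents standing in for the bucket (in production the lookup would more likely be a KV or N1QL range query); `toNum` and `countryFor` are illustrative names, not part of the GeoIP application.

```javascript
// Hypothetical in-memory sample of enriched ip_country_map documents; a real
// deployment would read these from the bucket instead.
const ranges = [
    { type: "ip_country_map", country: "RU", ip_start: "7.12.60.1", ip_end: "7.62.60.9",
      ip_num_start: 118242305, ip_num_end: 121519113 }
];

// Same arithmetic as the Eventing Function, written compactly for this sketch.
function toNum(ip) {
    return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Numeric comparison gets the ordering right; comparing the dotted strings would
// not (as strings, "7.9.0.0" sorts after "7.12.0.0" even though it is lower).
function countryFor(ip) {
    const n = toNum(ip);
    const hit = ranges.find(r => r.ip_num_start <= n && n <= r.ip_num_end);
    return hit ? hit.country : null;
}
```

Older components can keep reading “ip_start” and “ip_end” as before, while upgraded components switch to the numeric fields at their own pace.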

When all production components have been updated the Eventing Function can be undeployed and decommissioned.

Purging Stale Data via Eventing:

A typical customer problem

A live production system has stored over 7 billion documents. All documents have an automatic expiration (a TTL, or time to live) set. The production environment constantly receives new data and constantly expires old data.

An operational mistake was made resulting in 2 billion documents being created without an expiration.

The customer didn’t have the resources (nor want to pay for them) to build the large index needed to use N1QL to identify, select, and purge the documents that lacked an active (non-zero) TTL once they were no longer useful.

Eventing to the rescue

A simple six (6) line JavaScript (2 of which are comments) Eventing Function was deployed and solved the issue with minimal resources.

```javascript
function OnUpdate(doc, meta) {
    if (meta.expiration !== 0) return;
    // delete all items that have TTL or expiration of 0
    // src is a bucket alias to the source bucket in settings, delete from it.
    delete src[meta.id];
}
```

By deploying the above Function with a “Feed boundary” set to “Everything”, the entire document set in the source bucket was scanned. All documents with a non-zero TTL (meaning they already had an expiration) were ignored. Only the matching documents with a TTL of zero (meaning they had no expiration) were deleted.

Once the source bucket was cleaned, the Eventing Function was undeployed, as it was used only as an administrative tool.

Note that we could replace the expiration !== 0 test in our JavaScript with any filter needed to purge data for another purpose.
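Before pointing a destructive handler at billions of documents, it can be worth rehearsing it locally. In this sketch a plain object stands in for the `src` bucket alias, and the three sample documents and their metadata are hypothetical. A `meta.expiration` of 0 means the document has no expiry; the handler body is the one from the article.

```javascript
// Hypothetical stand-in for the "src" bucket alias: a plain object keyed by
// document id, holding each document together with the metadata Eventing passes.
const src = {
    "k1": { doc: { v: 1 }, meta: { id: "k1", expiration: 0 } },          // no TTL
    "k2": { doc: { v: 2 }, meta: { id: "k2", expiration: 1893456000 } }, // has a TTL
    "k3": { doc: { v: 3 }, meta: { id: "k3", expiration: 0 } }           // no TTL
};

// The handler from the article, unchanged apart from running against the stand-in.
function OnUpdate(doc, meta) {
    if (meta.expiration !== 0) return;
    delete src[meta.id];
}

// Replay a "Feed boundary" of "Everything": each existing document is delivered once.
// Object.keys() takes a snapshot first, so deleting entries during the loop is safe.
for (const id of Object.keys(src)) {
    const { doc, meta } = src[id];
    OnUpdate(doc, meta);
}
```

Only "k2", the document that already carries a TTL, survives the replay.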
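One way to keep such a purge reusable is to leave the handler fixed and move the test into its own function. In the sketch below, `shouldPurge` and the session documents with a `last_seen` field are hypothetical examples, and a plain object again stands in for the `src` bucket alias.

```javascript
// Stand-in for the "src" bucket alias (a plain object, for local testing only).
const src = {
    "session::1": { type: "session", last_seen: "2019-01-01" },
    "session::2": { type: "session", last_seen: "2020-05-01" },
    "customer::1": { type: "customer", active: true }
};

// Hypothetical policy: the only function you edit to change what gets purged.
// Here: sessions last seen before a cutoff (YYYY-MM-DD strings compare in date order).
function shouldPurge(doc, meta) {
    return doc.type === "session" && doc.last_seen < "2020-01-01";
}

// The handler itself stays the same; all filtering lives in shouldPurge().
function OnUpdate(doc, meta) {
    if (!shouldPurge(doc, meta)) return;
    delete src[meta.id];
}

// Local replay of every existing document, as "Everything" would do.
for (const id of Object.keys(src)) OnUpdate(src[id], { id: id, expiration: 0 });
```

Because `shouldPurge` receives both `doc` and `meta`, the original TTL test fits the same shape: `return meta.expiration === 0;`.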

It is pretty easy to guess what we are doing below:

```javascript
function OnUpdate(doc, meta) {
    if (!(doc.type === "customer" && doc.active === false)) return;
    // archive the customer to the bucket alias arc and remove it from the bucket alias src
    arc[meta.id] = doc;
    delete src[meta.id];
}
```

In fact, we could easily extend the above to perform a cascade archive and delete, not only of the customer but also of any related information such as orders, returns, and shipping addresses. Refer to the Cascade Delete example in the Eventing documentation.
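A minimal sketch of such a cascade follows. It is not the documentation’s Cascade Delete example: it assumes each customer document lists the keys of its related documents in a hypothetical `related_ids` array, and plain objects stand in for the `src` and `arc` bucket aliases. We also assume that reading a missing key through a bucket alias yields `undefined`, which is how the stand-in behaves.

```javascript
// Stand-ins for the "src" and "arc" bucket aliases (plain objects, local testing only).
const src = {
    "customer::7": { type: "customer", active: false, related_ids: ["order::7::1", "address::7"] },
    "order::7::1": { type: "order", customer: "customer::7" },
    "address::7": { type: "address", customer: "customer::7" }
};
const arc = {};

function OnUpdate(doc, meta) {
    if (!(doc.type === "customer" && doc.active === false)) return;
    // Archive and remove related documents first. related_ids is a hypothetical
    // field listing the keys of this customer's orders, returns, addresses, ...
    for (const relId of (doc.related_ids || [])) {
        const related = src[relId];          // undefined if it is already gone
        if (related === undefined) continue;
        arc[relId] = related;
        delete src[relId];
    }
    // Then archive and remove the customer itself, as in the handler above.
    arc[meta.id] = doc;
    delete src[meta.id];
}

OnUpdate(src["customer::7"], { id: "customer::7", expiration: 0 });
```

Handling the related documents before the customer means that if processing stops midway, the customer document (and its list of related keys) is still there for a retry.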

Stripping sensitive data via Eventing:

A typical customer problem

A company running Couchbase on-premises in production needed to share customer profile information (150M profiles and growing) with a business partner that also runs Couchbase, but in a cloud provider, AWS.

Given a typical profile record like the following:

```json
{
  "type": "master_profile",
  "first_name": "Peter",
  "last_name": "Chang",
  "id": 80927079070,
  "basic_profile": {
    "partner_id": 80980221,
    "services": [
      { "music": true },
      { "radio": true },
      { "video": false }
    ]
  },
  "sensitive_profile": {
    "ssn": "111-11-1111",
    "credit_card": {
      "number": "3333-333-3333-3333",
      "expires": "01/09",
      "ccv": "111"
    }
  },
  "address": {
    "home": {
      "street": "4032 Kenwood Drive",
      "city": "Boston",
      "zip": "02102"
    },
    "billing": {
      "street": "541 Bronx Street",
      "city": "Boston",
      "zip": "02102"
    }
  },
  "phone": {
    "home": "800-555-9201",
    "work": "877-123-8811",
    "cell": "878-234-8171"
  },
  "locale": "en_US",
  "timezone": -7,
  "gender": "M"
}
```

They couldn’t just share the entire profile since it contained sensitive data on user preferences and payment methods. They only needed to share a limited subset like the following:

```json
{
  "type": "shared_profile",
  "first_name": "Peter",
  "id": 80927079070,
  "basic_profile": {
    "partner_id": 80980221,
    "services": [
      { "music": true },
      { "radio": true },
      { "video": false }
    ]
  },
  "timezone": -7
}
```

The customer wanted to replace a middleware Spark solution, which took hours to reinitialize after a failure and provided only slow batch processing (hours to reflect updates), with one that syncs the profile information in real time.

Eventing to the rescue

A simple nine (9) line JavaScript (3 of which are comments) Eventing Function was deployed and solved the issue with minimal resources.

```javascript
function OnUpdate(doc, meta) {
    // only process our profile documents
    if (doc.type !== "master_profile") return;
    // aws_bkt is a bucket alias to the target bucket to replicate to AWS via
    // XDCR. Write the minimal (non-sensitive) profile doc to the bucket for AWS.
    aws_bkt["shared_profile::" + doc.id] = { "type": "shared_profile",
        "first_name": doc.first_name, "id": doc.id,
        "basic_profile": doc.basic_profile, "timezone": doc.timezone };
}
```

By deploying the above Function with a “Feed boundary” set to “Everything”, the entire document set in the source bucket was scanned, all documents of type “master_profile” were processed, and only a subset of each profile, without the sensitive information, was copied to the shared destination bucket.
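The Function above builds the shared document field by field, which is effectively a whitelist. The sketch below makes that explicit with a hypothetical helper, `toSharedProfile`, and a trimmed sample profile. Listing what may be shared, rather than deleting what must be hidden, means a field added to master profiles later stays private until someone deliberately adds it to the list. The list lives inside the function because Eventing restricts global state in handler code.

```javascript
// Hypothetical helper: copy only whitelisted top-level fields into a shared profile.
function toSharedProfile(doc) {
    // Fields allowed to leave the on-premises cluster.
    const SHARED_FIELDS = ["first_name", "id", "basic_profile", "timezone"];
    const shared = { type: "shared_profile" };
    for (const field of SHARED_FIELDS) {
        if (field in doc) shared[field] = doc[field];
    }
    return shared;
}

// Trimmed version of the master_profile example, for local testing only.
const master = {
    type: "master_profile", first_name: "Peter", last_name: "Chang", id: 80927079070,
    basic_profile: { partner_id: 80980221, services: [{ music: true }] },
    sensitive_profile: { ssn: "111-11-1111" }, timezone: -7, gender: "M"
};
const shared = toSharedProfile(master);
```

Inside the handler, the assignment would then become `aws_bkt["shared_profile::" + doc.id] = toSharedProfile(doc);`.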

The Eventing Function is always left deployed reacting to all user profile changes (or mutations) in real-time and forwarding each and every mutation to the AWS destination bucket.

In this use case, the final bucket-to-bucket synchronization is performed via the Couchbase XDCR feature. At no time does the sensitive data leave the on-premises cluster; it is never transmitted to AWS.

Resources

- Download: Download Couchbase Server 6.5.1

References

- Couchbase Eventing documentation: https://docs.couchbase.com/server/current/eventing/eventing-overview.html

- Couchbase Server 6.5 What’s New: https://docs.couchbase.com/server/6.5/introduction/whats-new.html

- Couchbase blogs on Eventing: https://www.couchbase.com/blog/tag/eventing/