Now it’s easier than ever to build and deploy microservices and multi-tenant applications on Couchbase. The 7.0 release introduces a new data organization feature called Scopes and Collections.

Scopes and Collections allow logical isolation of different types of data, independent lifecycle management and security control at multiple levels of granularity. Application developers use them to organize and isolate their data. DevOps and admins find the multiple levels of role-based access control (RBAC) – at the Bucket, Scope and Collection level – a powerful option to host microservices and tenants at scale.

The full functionality of this feature is now available in Couchbase Server 7.0. A limited functionality Developer Preview was already available in release 6.5, and some of you may have already kicked the tires on that.

What Are Couchbase Scopes and Collections?

Scopes and Collections are logical containers within a Couchbase Bucket.

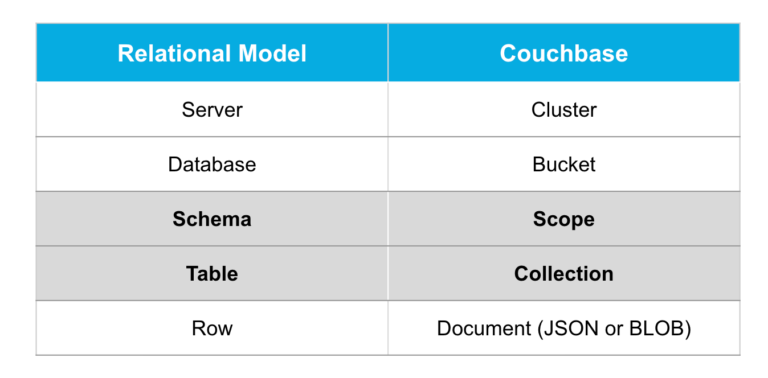

It’s helpful to think of Collections as tables within a relational database but without the data rigidity. Scopes are a set of related Collections, making them similar to an RDBMS schema. But in both cases, Scopes and Collections are more flexible since they store JSON documents.

The table below shows the mapping of familiar RDBMS constructs to Couchbase:

Mapping RDBMS concepts to Couchbase

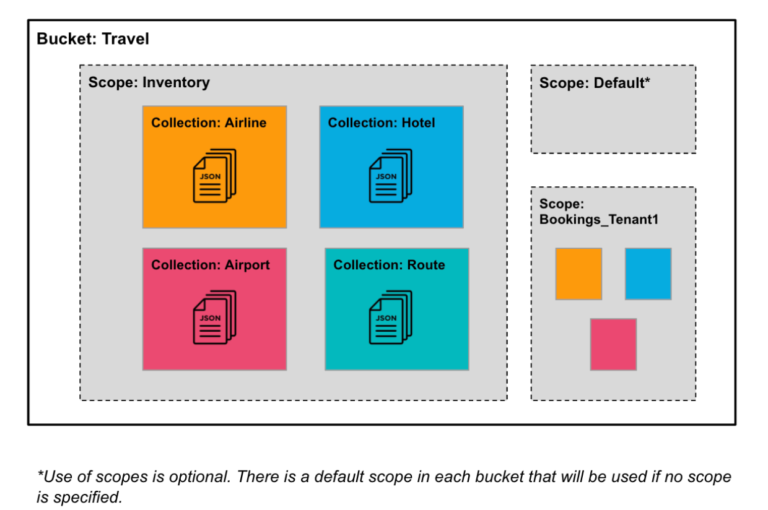

Here is an example of organizing the Couchbase Travel App data with Scopes and Collections:

An example of Scopes and Collections with an example dataset

How to Create and Use Scopes & Collections

You can create Scopes and Collections from any of the Couchbase SDKs, couchbase-cli, REST APIs, N1QL or from the UI.

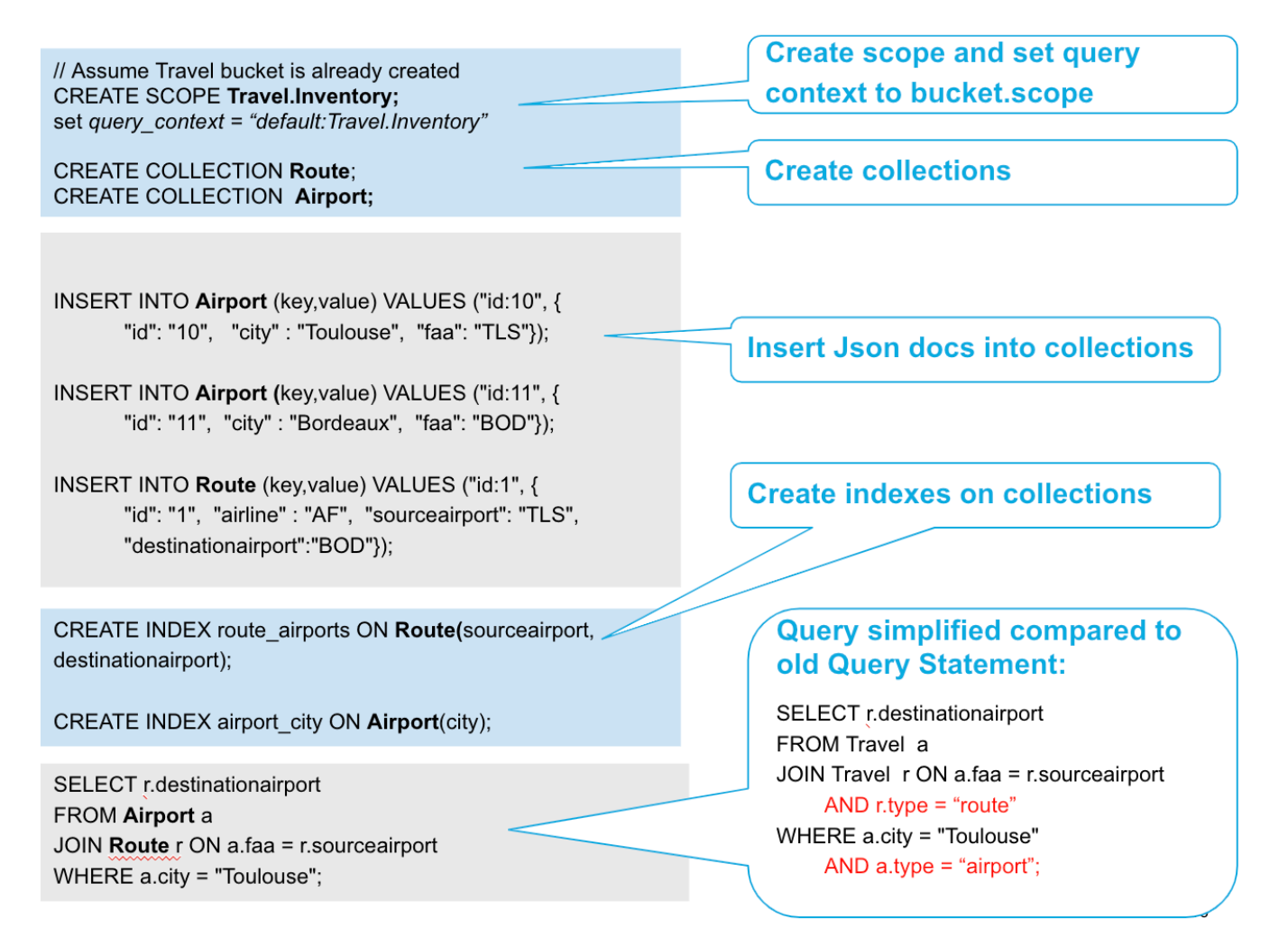

In particular, N1QL queries become simpler and more concise because you no longer need to qualify different document types using where type = xxx. Instead, you refer to the Collection directly.
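To make the difference concrete, here is a small sketch that contrasts the two query shapes. The bucket, Scope, Collection and field names are loosely modeled on the travel sample and are assumptions for illustration; the statements are only built and printed, not sent to a cluster.

```python
# Illustrative names only; adjust them to your own bucket layout.
BUCKET = "travel-sample"

# Before Collections: all documents share the bucket, so the query
# has to filter on a "type" field to pick out airline documents.
query_with_type_filter = (
    f"SELECT a.name FROM `{BUCKET}` AS a "
    "WHERE a.type = 'airline' AND a.country = 'France'"
)

# With Collections: the keyspace path (bucket.scope.collection)
# already identifies the kind of document, so the filter disappears.
query_with_collection = (
    f"SELECT a.name FROM `{BUCKET}`.inventory.airline AS a "
    "WHERE a.country = 'France'"
)

print(query_with_type_filter)
print(query_with_collection)
```

The second statement is shorter, and the Collection name carries the meaning that the type predicate used to carry.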

In the example shown below, we use N1QL (I executed the N1QL statements using cbq shell) to create a Scope, a couple of Collections and some indexes. Then we run a simple query that joins two Collections.

A N1QL query to create a Scope, Collections and indexes
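As a rough stand-in for that walkthrough, the sketch below generates the kind of N1QL statements involved: one Scope, two Collections, a couple of indexes and a join across the two Collections. The Scope name (demo), the index name and the field names are hypothetical, and the statements have not been run against a cluster here; print them and paste them into cbq to try them.

```python
def keyspace(bucket: str, scope: str, collection: str) -> str:
    """Return a backtick-quoted bucket.scope.collection path."""
    return f"`{bucket}`.`{scope}`.`{collection}`"


BUCKET = "travel-sample"  # assumed bucket name
SCOPE = "demo"            # hypothetical Scope name

airline = keyspace(BUCKET, SCOPE, "airline")
route = keyspace(BUCKET, SCOPE, "route")

statements = [
    # Containers first: the Scope, then the Collections inside it.
    f"CREATE SCOPE `{BUCKET}`.`{SCOPE}`",
    f"CREATE COLLECTION {airline}",
    f"CREATE COLLECTION {route}",
    # Indexes are defined per Collection.
    f"CREATE PRIMARY INDEX ON {airline}",
    f"CREATE INDEX idx_route_source ON {route}(sourceairport)",
    # Lookup join: each route document stores the key of its airline.
    "SELECT a.name, r.destinationairport "
    f"FROM {route} AS r JOIN {airline} AS a ON KEYS r.airlineid "
    "WHERE r.sourceairport = 'SFO' LIMIT 5",
]

for statement in statements:
    print(statement + ";")
```

Note that no type predicate appears anywhere; the join simply names the two Collections.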

The Scale of Scopes & Collections

Couchbase currently allows you to have 30 Buckets in a single cluster. By contrast, you can create orders of magnitude more Scopes and Collections.

Since Collections actually contain the data and Scopes organize related Collections, you typically have fewer Scopes than Collections. Global Secondary Indexes (GSIs) are created on Collections, and a Collection commonly has multiple indexes.

Keeping these requirements in mind, here is the scale of these entities that you can create in Couchbase 7.0 (a lot of them!):

- Number of Collections allowed per cluster: 1,000

- Number of Scopes allowed per cluster: 1,000

- Number of Global Secondary Indexes allowed per cluster: 10,000
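Because these limits apply to the cluster as a whole, it can help to check a planned layout against them before deploying. The following is a minimal sketch using the three numbers listed above; the Plan class and its field names are hypothetical, and the sketch does not model whether default or system entities count toward the limits.

```python
from dataclasses import dataclass
from typing import Dict, List

# Cluster-wide limits quoted above for Couchbase 7.0.
LIMITS = {"scopes": 1_000, "collections": 1_000, "indexes": 10_000}


@dataclass
class Plan:
    """A uniform layout: every Scope has the same shape (a simplification)."""
    scopes: int
    collections_per_scope: int
    indexes_per_collection: int

    def totals(self) -> Dict[str, int]:
        collections = self.scopes * self.collections_per_scope
        return {
            "scopes": self.scopes,
            "collections": collections,
            "indexes": collections * self.indexes_per_collection,
        }


def violations(plan: Plan) -> List[str]:
    """List every planned total that exceeds its cluster-wide limit."""
    return [
        f"{name}: planned {count}, limit {LIMITS[name]}"
        for name, count in plan.totals().items()
        if count > LIMITS[name]
    ]


# 2 Scopes x 500 Collections exactly reaches the Collection limit.
print(violations(Plan(scopes=2, collections_per_scope=500, indexes_per_collection=10)))
# 200 tenants with 10 Collections each would need 2,000 Collections.
print(violations(Plan(scopes=200, collections_per_scope=10, indexes_per_collection=3)))
```

A plan that fails this check has to be split across clusters or reshaped, for example by giving each tenant fewer Collections.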

Enabling Multi-Tenancy at Scale

Modern applications are often written as microservices, with each application consisting of several (and sometimes hundreds of) microservices.

With the advent of SaaS and all its advantages, many of these applications are multi-tenant. For enterprise teams, it’s important to keep the cost low for hosting multiple applications and tenants (i.e., TCO), while providing the required isolation and flexibility.

Couchbase Buckets, Scopes and Collections now provide a three-level containment hierarchy to help you map your applications, tenants and microservices.

Key Features for Multi-Tenancy & Microservice Consolidation

Let’s dive into the capabilities offered by Scopes and Collections that make it possible to consolidate tenants and microservices.

Logical Isolation & Indexing

Collections allow you to isolate your data by type while enjoying the flexibility of a JSON document database model that evolves with your application.

As shown in the Travel example further above, the Airline documents go into the Airline Collection, the Hotel documents go into the Hotel Collection and so forth.

Each Collection can also be indexed individually. Different types of documents typically have different indexing needs, and Collections enable you to define different indexes for each type.

Lifecycle Management at Multiple Levels

Scopes and Collections allow you to manage and monitor your data at two new levels in addition to the existing Bucket level.

The provided functionality includes:

- Create and drop individual Collections or Scopes

- Set expiration (maximum time to live, a.k.a., Max TTL) at the Collection level

- Monitor statistics at the Scope and Collection level. Although the full set of statistics is available only at the Bucket level, a subset is available at the Collection and Scope levels (e.g., item count, memory used, disk space used, operations per second).
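As an illustration of the first two items, the sketch below builds the REST request for creating a Collection with a Max TTL. It assumes the Collection-management endpoint under /pools/default/buckets/ and the maxTTL form field; check both against the REST API documentation for your server version. The bucket and Collection names are hypothetical, and no HTTP call is made.

```python
from typing import Optional, Tuple
from urllib.parse import quote, urlencode


def create_collection_request(
    bucket: str,
    scope: str,
    collection: str,
    max_ttl_seconds: Optional[int] = None,
) -> Tuple[str, str]:
    """Return (path, form_body) for a POST that creates a Collection.

    The endpoint path and the maxTTL field name are assumptions to
    verify against the Couchbase REST API reference.
    """
    path = (
        f"/pools/default/buckets/{quote(bucket, safe='')}"
        f"/scopes/{quote(scope, safe='')}/collections"
    )
    form = {"name": collection}
    if max_ttl_seconds is not None:
        form["maxTTL"] = str(max_ttl_seconds)
    return path, urlencode(form)


# Documents in this Collection would expire at most one hour after being written.
path, body = create_collection_request("sales", "_default", "orders", 3600)
print("POST", path)
print(body)
```

Sending the request (for example with curl or an HTTP client, against port 8091 with admin credentials) is left out so the sketch stays runnable without a cluster.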

Fine-Grained Access Control with RBAC

One of the most powerful aspects of Scopes and Collections is the ability to control security at multiple levels – Bucket, Scope, Collection – using role-based access control (RBAC). This is the key to consolidating hundreds of microservices and/or tenants in a single Couchbase cluster.

Now you can give a user access to a whole Bucket, a Scope or a Collection. Several out-of-the-box user roles are available; see the RBAC documentation for details.
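The three levels of granularity can be pictured as role strings that name a Bucket, a Bucket and Scope, or a Bucket, Scope and Collection. The sketch below assumes the role[bucket:scope:collection] notation used by the user-management REST API; treat that notation, and the choice of the data_reader role, as assumptions to verify against the RBAC documentation. The bucket, Scope and Collection names are hypothetical.

```python
from typing import Optional


def scoped_role(
    role: str,
    bucket: str,
    scope: Optional[str] = None,
    collection: Optional[str] = None,
) -> str:
    """Format a role restricted to a Bucket, Scope or Collection.

    Assumed notation: role[bucket], role[bucket:scope],
    role[bucket:scope:collection].
    """
    if collection is not None and scope is None:
        raise ValueError("a Collection can only be named together with its Scope")
    parts = [part for part in (bucket, scope, collection) if part is not None]
    return f"{role}[{':'.join(parts)}]"


# Widest to narrowest grant for the same role.
print(scoped_role("data_reader", "sales"))
print(scoped_role("data_reader", "sales", "cust1"))
print(scoped_role("data_reader", "sales", "cust1", "orders"))
```

A microservice user would typically get the narrowest form, while a tenant administrator might get a Scope-level role.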

Fine-Grained Replication Control with XDCR

Cross Data Center Replication (XDCR) allows you to control replication at the Bucket, Scope or Collection level.

You can choose to replicate a whole Bucket, a Scope or just a Collection. You can map to a similarly named entity on the destination or remap to a different entity. You also have the flexibility of advanced filtering, which was already introduced for XDCR in release 6.5 of Couchbase.

The flexibility provided by XDCR is crucial to mapping microservices and/or tenants to Collections and Scopes as it allows you to control each microservice and tenant individually.
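A remapping can be thought of as a set of source-to-target rules. The sketch below builds such rules as JSON, assuming the "scope" or "scope.collection" key format used by XDCR explicit mapping; the exact replication setting names and rule format should be confirmed in the XDCR documentation. All Scope and Collection names are hypothetical.

```python
import json
from typing import Iterable, Tuple


def mapping_rules(pairs: Iterable[Tuple[str, str]]) -> str:
    """Build a JSON object of XDCR-style mapping rules.

    Each pair is (source, target), written either as "scope" or as
    "scope.collection". The rule format is an assumption; verify it
    against the XDCR documentation before use.
    """
    rules = {}
    for source, target in pairs:
        # Keep levels aligned: Scope to Scope, Collection to Collection.
        if source.count(".") != target.count("."):
            raise ValueError(f"level mismatch: {source!r} -> {target!r}")
        rules[source] = target
    return json.dumps(rules, sort_keys=True)


rules_json = mapping_rules([
    ("cust1", "cust1"),                   # replicate a whole tenant Scope as-is
    ("cust2.orders", "archive.orders"),   # remap a single Collection
])
print(rules_json)
```

Keeping one rule per tenant or per microservice is what allows each of them to be replicated, paused or remapped independently.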

Backup/Restore at Multiple Levels

Continuing the theme of lifecycle management at multiple levels, Couchbase 7.0 allows you to control backup and restore options at each of these levels as well.

Hence, you can back up (and similarly restore) an individual Bucket, Scope or Collection, and you can filter the data and remap it during restore.

Watch out for more detailed how-to blogs on each of these above themes (RBAC, XDCR and Backup/Restore) in the coming weeks!

Microservices Deployment Using Collections

A typical microservices architecture recommends that each microservice be simple (ideally single-function), loosely coupled to other microservices (which means it has its own data store) and independently scalable.

Prior to Couchbase Server 7.0, you could host each microservice either in a separate cluster or in a separate Bucket. These deployment options have inherent limits on application density and may not always result in full hardware utilization. Now with Couchbase 7.0, you can map each microservice to one or more Collections. And since you can have up to a thousand Collections, you can have up to a thousand microservices in a single cluster(!).

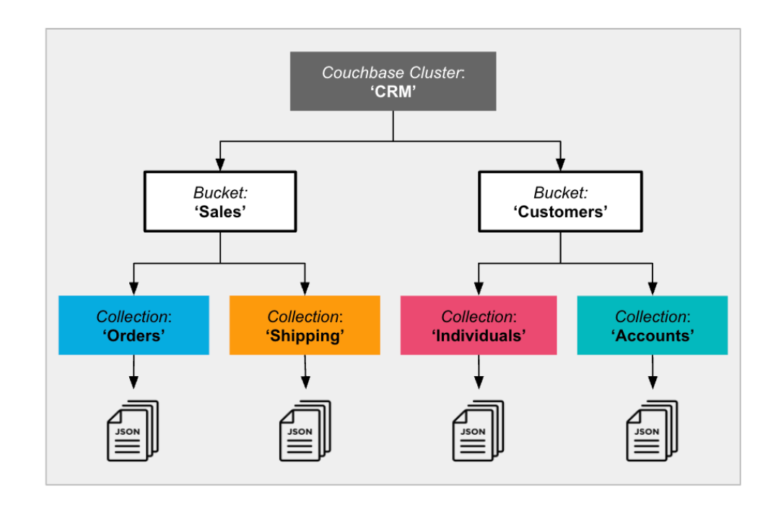

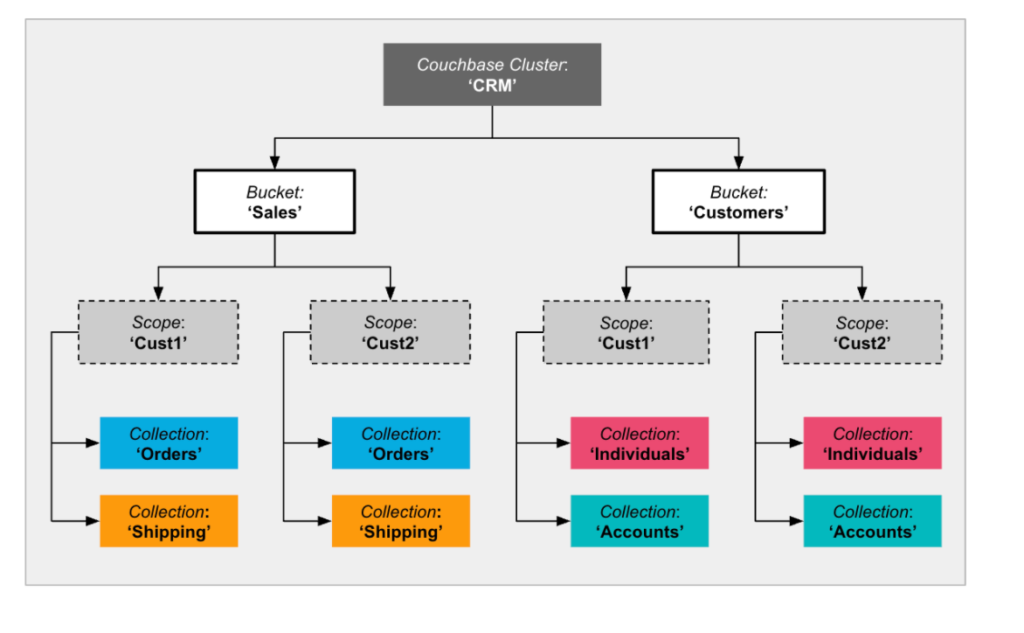

Let’s look at the example microservice deployment shown below:

Microservices consolidation using Couchbase Scopes and Collections

The above example shows two CRM applications (Sales and Customers) deployed in a single cluster. Each of the two applications is mapped to a Bucket allowing you to control the overall resource allocation to each application.

The Sales application has two microservices, Orders and Shipping, and the Customers application has two microservices, Individuals and Accounts. We map each microservice to its own Collection. In this example, we didn’t use Scopes. You don’t have to define Scopes if you don’t need the extra level of organization (the default Scope is used instead).

Multi-Tenant Deployment Using Scopes

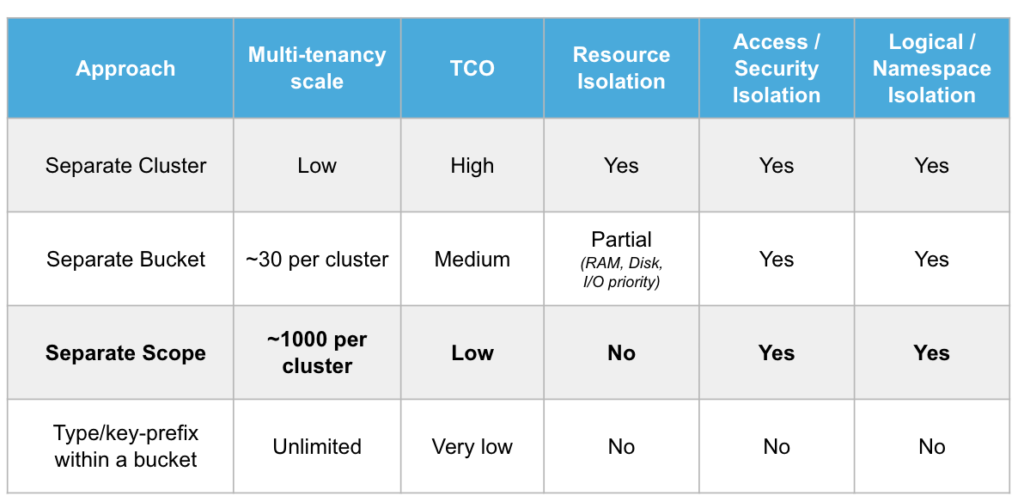

Multi-tenant applications require varying levels of isolation between tenants and varying levels of resource sharing of the underlying infrastructure. The chosen deployment architecture is a trade-off between isolation and total cost of ownership (TCO).

Some tenants may require complete physical isolation, in which case a separate cluster for each tenant may be the right architecture, albeit at a high TCO. Couchbase Buckets provide partial physical isolation together with full security and logical isolation. They can be used to separate tenants, though the number of Buckets is limited and each Bucket carries overhead.

Logical and security isolation across tenants is often sufficient. With Scopes, you get security and logical isolation at more granular levels within a Bucket. You can have up to 1,000 Scopes in a cluster, enabling you to host as many as a thousand co-operative tenants (those not requiring physical isolation) in a single cluster.

The trade-offs between TCO and isolation levels between different multi-tenant architectures are captured in the table below.

Multi-tenant architecture choices using Couchbase Scopes and Collections

Imagine that the CRM applications in the example above need to become multi-tenant. You can easily do so by mapping each tenant to a separate Scope as shown below. (Cust1 and Cust2 are the two different tenants in this example.)

Multi-tenancy with Scopes in Couchbase

Achieving multi-tenancy with Scopes is fairly straightforward (without requiring application changes as you can pass a Scope context around). It’s also a scalable and cost-effective solution. Collection names need to be unique only within a Scope. Hence, you can easily deploy multiple Scopes with the same Collection names as shown above.
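One way to picture "passing a Scope context around" is a small object that carries the tenant's Bucket and Scope and resolves Collection names against them. This is a minimal sketch, not an SDK API: the TenantContext class, the bucket name and the query are hypothetical, and only the statement text is produced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantContext:
    """Hypothetical per-request context: one Scope per tenant."""
    bucket: str
    scope: str

    def keyspace(self, collection: str) -> str:
        # Same Collection name, different Scope per tenant.
        return f"`{self.bucket}`.`{self.scope}`.`{collection}`"


def open_orders_query(ctx: TenantContext) -> str:
    """Application code names only the Collection; the tenant comes from ctx."""
    return (
        f"SELECT o.* FROM {ctx.keyspace('orders')} AS o "
        "WHERE o.status = $status"
    )


cust1 = TenantContext(bucket="sales", scope="cust1")
cust2 = TenantContext(bucket="sales", scope="cust2")

print(open_orders_query(cust1))
print(open_orders_query(cust2))
```

The query function is identical for every tenant; only the context differs, which is why the same Collection names can be reused across Scopes.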

Next Steps

I hope you enjoy the new Scopes and Collections functionality in Couchbase. Below is a list of resources for you to get started and we look forward to your feedback on the Couchbase Forums.

Documentation & Articles

Great article.. but I’m wondering when Couchbase operator for kubernetes will be ready to try it?

@Shivani, is the number of scopes limited at the cluster level or at the bucket level? Similarly for collections, is the number limited at the scope level or the cluster level?

If it is at the cluster level, it is up to the application to manage how collections and scopes are allocated in a given cluster, which doesn’t feel right to me.

Kindly confirm.

The maximum number of scopes and of collections is at the cluster level. So if you have 2 scopes in a bucket and each scope has 500 collections, you have reached the limit with a total of 1000 collections in the cluster.

The deployment strategy (whether for single application or for microservices and/or SaaS application) has to take these limits into account. Microservices and/or tenants can be split across multiple clusters of course.

Thanks Shivani for this & the other how-to-migrate article. It is very helpful.

Docs (https://docs.couchbase.com/server/current/developer-preview/collections/collections-overview.html) say that the limit is 1000 collections per bucket. Can you pls check and if that is true, then get this and the article corrected?

Regards

Glad you found the articles helpful. The documentation page incorrectly states the limit as ‘1000 per bucket’. It is indeed 1000 collections per cluster. We will fix the documentation, thanks for pointing out!

[…] highlights of Couchbase 7.0 include rounding out ACID transaction processing support; adding a new Scopes construct to add a relational skin to the document database; and various performance […]

From this article, I understand with the usage of Bucket, Scope and Collections, the number of microservices from different domains can be increased.

But the article starts with logical isolation. Does that mean that Couchbase 7.0 or prior versions support isolation for other Couchbase services (the Index service especially)?

What I mean is, let’s say all the domains within a given business start using a single Couchbase cluster. Can I control and assign the resources of the Index service and Data service at the bucket level?

Couchbase services such as Index service and Data service (via buckets) have always allowed for resource isolation e.g. as you mention you can control the resources for index service separately from data service. That is still the case and does not change with 7.0.

With 7.0, what you get is two additional levels within a bucket called scopes and collections. These provide logical and security isolation but not resource isolation as they share the resources allocated to the bucket. As mentioned for many microservices this is sufficient and hence they can be mapped to collections instead of using up a separate bucket for each microservice.

I could have a 100-node or a 1000-node cluster, so how do you explain the hard constraint of 30 buckets or 1000 collections? Is the limit per Couchbase node or per cluster? Can someone explain?

You are right – the limits are the same whether your cluster is 100 nodes or 1000 nodes. As you are hinting, yes, there are resources used at every node for each collection and/or bucket. That is why the limit really comes from what we can handle at the node level. What each node can handle determines what the cluster can handle, as each node in the cluster has to hold all the buckets and collections – Couchbase distributes everything uniformly across all nodes.

Hello Shivani,

Thank you for your article.

I’m working on a feature to turn an app into a multi-tenant app. The whole system has a backend on Spring Boot 2.5.5 and a mobile app. The database is Couchbase 6.6.

With Couchbase 7.0, scopes and collections seem to be the answer to a lot of my questions but (there is always a but … :)), Spring Data Couchbase 4.2.5 is not compatible with named scopes or collections, nor is SyncGateway 2.8 or 3.0 (https://docs.couchbase.com/sync-gateway/3.0/server-compatibility-collections.html#using-collections)

Do you know when Spring Data Couchbase 4.3.0 will be released (version 4.3.0-M3 seems to work) ?

Do you know when a Syncgateway compatible with named scopes or collections will be released ?

Regards,

Matthieu

hello,

How many scopes can I create in one bucket?