Autonomous Operator versions prior to 1.2.0 had limited ability to interact with clients. The Couchbase data platform could only be used by SDKs located in the same Kubernetes cluster. Cross datacenter replication (XDCR) could only be used within the same Kubernetes cluster or over a secure tunnel, such as a virtual private network (VPN). Furthermore, the user interface (UI) could only be accessed through Kubernetes port forwarding.

The Autonomous Operator version 1.2.0 introduces new network features to address these limits. The end goal is to widen our audience with support for more diverse network architectures. For example, your application may run as a serverless function and therefore need to talk across the internet to the Couchbase server. This type of use case is exactly what we are supporting.

Kubernetes Network Architecture

The options available for Kubernetes networking are diverse. Kubernetes provides only the interface, so third parties provide their own implementations. The result is that each solution has its strengths and weaknesses. The networking choices that we make must work across all providers, so we will describe the common network types before looking at solutions.

Routed Networks

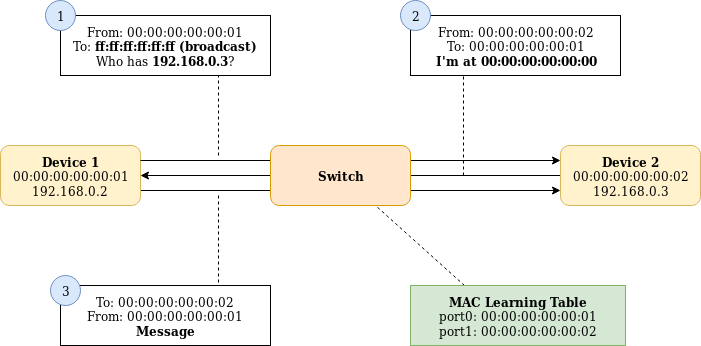

Routed networks are the simplest to describe in terms of Kubernetes. Hosts are able to talk to one another because they run within the same subnet and can address the destination's hardware address directly. This is known as layer 2 networking.

Pods usually run in a separate subnet from the hosts they run on. This subnet is further subdivided between each host.
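To make the subdivision concrete, here is a minimal sketch using Python's standard `ipaddress` module. The pod network range, the per-host prefix length and the host names are all assumptions chosen for illustration; a real network provider picks its own values.

```python
import ipaddress

# Hypothetical cluster-wide pod network (an assumption for illustration).
pod_network = ipaddress.ip_network("10.244.0.0/16")


def per_host_subnets(network, hosts, new_prefix=24):
    """Hand each host its own slice of the pod network."""
    subnets = network.subnets(new_prefix=new_prefix)
    return {host: next(subnets) for host in hosts}


allocation = per_host_subnets(pod_network, ["host-a", "host-b", "host-c"])
for host, subnet in allocation.items():
    print(host, subnet)
```

Each host ends up owning a distinct /24, so a pod's address alone is enough to tell which host it lives on.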

A message from a pod on one host has no way of finding its destination pod on another host because the destination does not exist on the same subnet. Messages move from one subnet to another via a router. A host typically sends a message to a router when the destination address is not on a directly connected subnet.

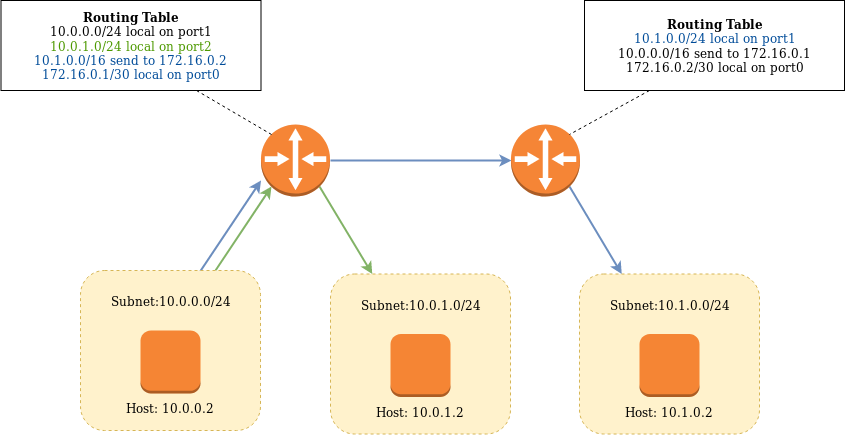

Routing

Routers handle messages in one of two ways. For directly connected subnets, messages can just be sent to the correct network port, and layer 2 networking handles the rest. For subnets not directly connected, messages can be forwarded to another router that does have the subnet directly connected. This is known as layer 3 networking.

The router manages this routing information in a routing table. A routing table is simply a list of mappings from a subnet to either a physical interface or another router on a directly connected network.
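A routing table lookup can be sketched in a few lines of Python. The subnets, interface name and next-hop addresses below are made up for illustration. The lookup uses longest-prefix matching, which is how routers commonly choose between overlapping entries.

```python
import ipaddress

# Each entry maps a subnet to a next hop: a local interface or another router.
# All addresses here are hypothetical.
ROUTING_TABLE = [
    (ipaddress.ip_network("10.244.0.0/24"), "via 192.168.0.10"),  # pods on host A
    (ipaddress.ip_network("10.244.1.0/24"), "via 192.168.0.11"),  # pods on host B
    (ipaddress.ip_network("192.168.0.0/24"), "dev eth0"),         # directly connected
    (ipaddress.ip_network("0.0.0.0/0"), "via 192.168.0.1"),       # default route
]


def lookup(destination, table=ROUTING_TABLE):
    """Return the next hop for a destination, preferring the most specific subnet."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in table if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(lookup("10.244.1.7"))    # a pod on host B
print(lookup("192.168.0.42"))  # a host on the directly connected subnet
```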

A message to another pod would first be caught by the host the sending pod is running on. The destination address would be unknown to that host, so the message is forwarded to the router. The router does have the destination subnet in its routing table, so it forwards the message to the correct host. Finally, the message is sent to the destination pod. Messages from outside the Kubernetes cluster can reach their destination pod simply by being sent to the router.

Routed networks are very simple. However, they may suffer a slight performance penalty by hopping to the router and then onto the destination host. More intelligent solutions exist that use iBGP and route reflectors to remove this extra hop. Isolating pods from one another must be done with firewall rules.

Overlay Networks

Overlay networks encapsulate pod to pod messages in protocols such as GRE or VXLAN. Each host advertises its pod subnet. Hosts can then subscribe to these advertisements and know which host to send the message to directly in order to reach the destination pod. Although this is faster than sending via a router, the encapsulation overhead may negate any benefits.
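One visible part of the encapsulation cost is the space the extra headers take in every packet. The following rough sketch assumes an IPv4 underlay and headers without optional fields. It covers only header bytes and says nothing about the CPU cost of encapsulating and decapsulating.

```python
# Per-packet header bytes added by each encapsulation (IPv4 underlay, no options).
OVERHEAD_BYTES = {
    "vxlan": 20 + 8 + 8 + 14,  # outer IPv4 + UDP + VXLAN header + inner Ethernet
    "gre": 20 + 4,             # outer IPv4 + basic GRE header
}


def pod_mtu(underlay_mtu, encapsulation):
    """Largest packet a pod can send without fragmenting on the underlay."""
    return underlay_mtu - OVERHEAD_BYTES[encapsulation]


for name in OVERHEAD_BYTES:
    mtu = pod_mtu(1500, name)
    print(f"{name}: pod MTU {mtu}, {OVERHEAD_BYTES[name] / 1500:.1%} of each full frame is overhead")
```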

Encapsulation also allows different pods to be associated with different virtual networks. This provides a strong security model where groups of pods can be logically segregated from one another. It does, however, mean you still need routers to cross between overlay networks. These routers will need their own firewall rules.

Addressing a pod from outside the cluster is very difficult. With routed networks, we can simply forward to the router and it will handle the rest. With overlays, we have no such simple mechanism to tunnel into the overlay.

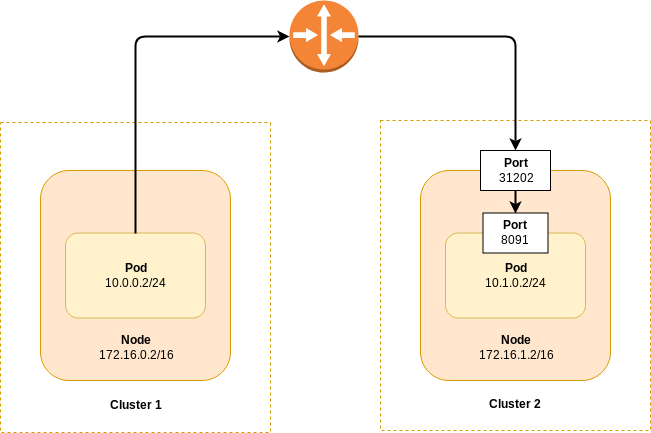

Node Ports

The one common mechanism for externally communicating with pods in both network types is Kubernetes node ports. For every pod the Autonomous Operator creates, we also create a node port service for it. Every port we define for the service will have a random port assigned to the underlying host.

Node ports must be random as two pods may be exposing the same port number. This would lead to a conflict if this port was used as the node port by both pods. We can address a port on a specific pod by addressing the underlying host and host port. The host will handle message redirection to the correct destination.

Node ports give us a generic mechanism to address a pod regardless of whether we are using an overlay network. Because we are addressing the underlying node, connecting from outside Kubernetes is simply a case of routing packets onto the routed network the Kubernetes nodes reside on.
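The reason for random node ports can be shown with a toy model. This is not how Kubernetes itself allocates ports. The class, the method names and the pod names are hypothetical, and the only real-world detail is the default node port range of 30000 to 32767.

```python
import random

NODE_PORT_RANGE = range(30000, 32768)  # Kubernetes' default node port range


class NodePortAllocator:
    """Toy model: every (pod, port) pair gets its own unused node port."""

    def __init__(self, seed=None):
        self._rng = random.Random(seed)
        self._by_node_port = {}

    def expose(self, pod, pod_port):
        free = [p for p in NODE_PORT_RANGE if p not in self._by_node_port]
        if not free:
            raise RuntimeError("node port range exhausted")
        node_port = self._rng.choice(free)
        self._by_node_port[node_port] = (pod, pod_port)
        return node_port

    def resolve(self, node_port):
        """What the host does on receipt: map the node port back to a pod and port."""
        return self._by_node_port[node_port]


allocator = NodePortAllocator(seed=1)
first = allocator.expose("cb-0000", 8091)
second = allocator.expose("cb-0001", 8091)  # same pod port, different node port
print(first, allocator.resolve(first))
print(second, allocator.resolve(second))
```

Both pods expose the same port number, yet each is reachable through a distinct port on the host, so there is no conflict.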

Establishing XDCR

Node ports form the basis of establishing an XDCR connection between two Couchbase clusters in two different Kubernetes clusters. When node ports are allocated, the Autonomous Operator informs the Couchbase server. Clients connecting externally connect to the node port and the Couchbase server responds with a map of node IP addresses and node ports per service. The XDCR client uses these maps to access individual vBuckets when streaming documents.

The only thing the Autonomous Operator cannot do is provide layer 3 connectivity between the two Kubernetes host networks. Creating a peering (VPN) between the two networks, adding in routes and firewall rules is all that is required for most cloud providers.

New Autonomous Operator Enhancements

We have a solid foundation, working within the confines of the Kubernetes network model. It is secure, with a VPN, but there are improvements that can be made.

External Client Access

Utilizing a VPN tunnel to access a remote Kubernetes host network may be impossible or undesirable. This is especially so for managed services where you may have no control over the network.

To address this we need to place the pods on the internet. OpenShift routes and generic ingresses only work for HTTP traffic and are difficult to configure. Instead, we opt for creating a load balancer service per Couchbase server pod. Any TCP connection can be supported and, furthermore, as the load balancer IP address is unique per pod, we can use the default port numbers. All major cloud providers provide load balancer services.

Existing node port-based XDCR connections are still supported, and are the default.

External UI Access

The UI, like individual pods, can now be publicly addressed with a load balancer service. This makes managing your Couchbase data platform deployments far simpler.

End-to-End Encryption

Placing anything on the public internet is not without risks. This is especially so with a database, hence we mandate the use of TLS. No plain text ports can be exposed. This keeps your user credentials and data secure from eavesdroppers.

When a server certificate is presented to a connecting client, the client verifies that the address it connected to is valid for that certificate. This is done with subject alternative names. We only support wildcard DNS based subject alternative names, and not IP based ones. Furthermore, we cannot use IP based addressing of load balancer services as the address is not stable across recovery.

A valid name for a cluster could be *.my-cluster.example.com. This is a wildcard, so it is valid for both server0.my-cluster.example.com and server1.my-cluster.example.com. The certificate will be valid for any nodes the Autonomous Operator creates in the cluster lifecycle.
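The wildcard rule can be sketched as follows. This is a simplified version of the check a TLS client performs, in which the wildcard stands in for exactly one leftmost label. Real TLS libraries apply further rules, so treat the function below as an illustration rather than a replacement for library verification.

```python
def matches_wildcard(pattern, hostname):
    """Return True if hostname is covered by a (possibly wildcard) DNS name."""
    pattern_labels = pattern.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    if len(pattern_labels) != len(host_labels):
        return False  # a wildcard never spans more than one label
    if pattern_labels[0] == "*":
        return bool(host_labels[0]) and pattern_labels[1:] == host_labels[1:]
    return pattern_labels == host_labels


name = "*.my-cluster.example.com"
print(matches_wildcard(name, "server0.my-cluster.example.com"))    # True
print(matches_wildcard(name, "a.server0.my-cluster.example.com"))  # False
```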

DNS resource records need to be created mapping DNS names to load balancer IP addresses so they are visible to the client. The required DNS names are annotated onto the load balancer services by the Autonomous Operator. They are synchronized to a cloud DNS provider by a third party controller.

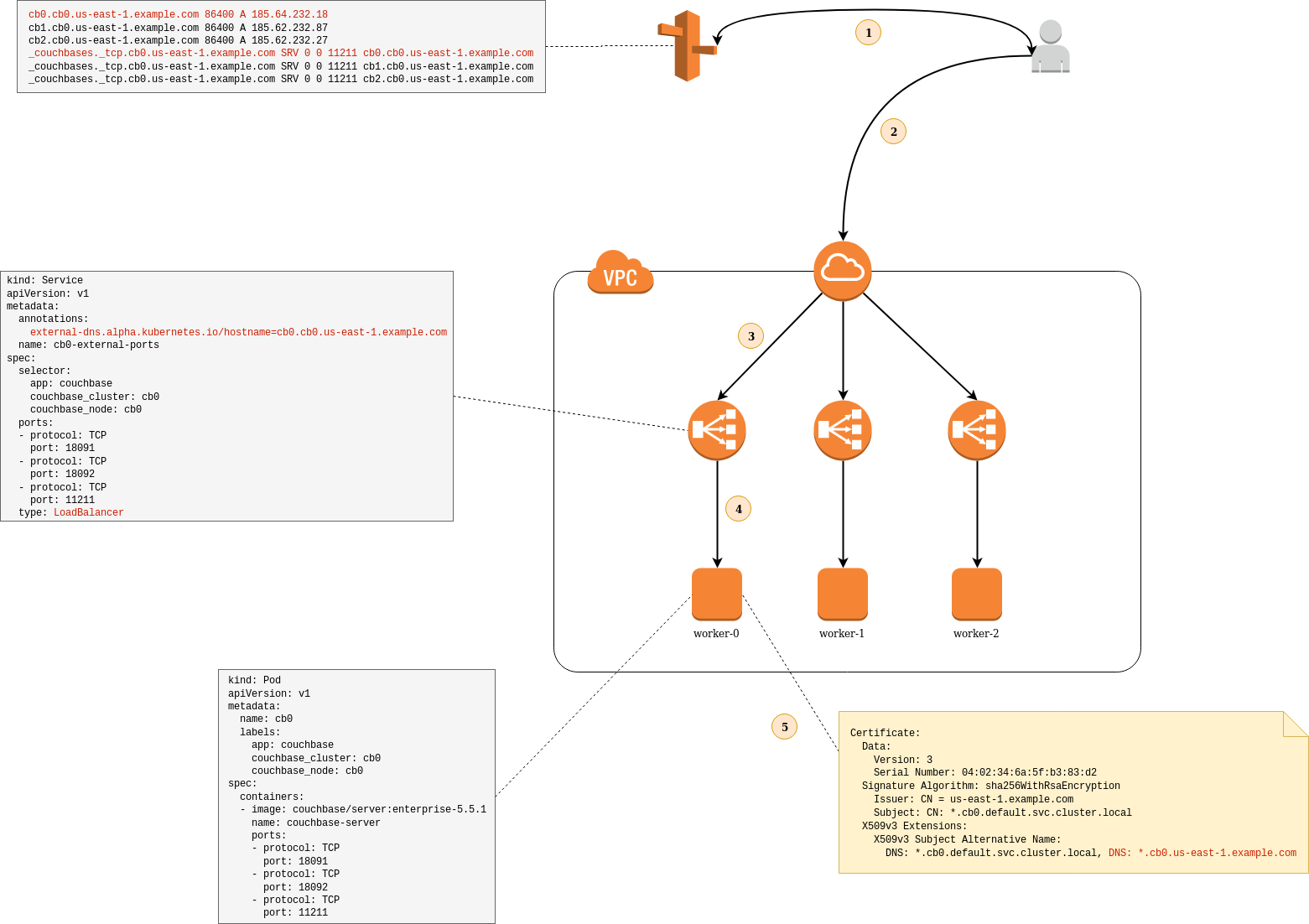

Example

The diagram depicts how all these features are interlinked.

- A client, be it an SDK or XDCR, initializes a connection using the DNS address https://cb0.cb0.us-east-1.example.com:18091. This resolves to the load-balancer IP address at 185.64.232.18. This DNS address is created from the load-balancer service specification and its public IP address.

- The client then opens a TCP connection to the Couchbase cluster. This first makes it across the internet to the customer edge router.

- The packet is then forwarded on to the load-balancer at 185.64.232.18.

- The load-balancer then DNATs the packet to the node port corresponding to port 18091 on the target pod. The node then DNATs the packet to port 18091 on the pod itself.

- Once the TCP connection is established, the client initiates a TLS handshake with the server. The server returns its certificate to the client. The client verifies that the certificate is valid for the address it connected to. The address cb0.cb0.us-east-1.example.com matches the wildcard subject alternative name *.cb0.us-east-1.example.com. Client bootstrap continues as per usual.

While not included on the diagram, it is worth mentioning that the Couchbase server refers to the target pod as cb0.cb0.default.svc, its internal DNS name. External clients cannot resolve this name when they receive a mapping of cluster members to member addresses. The Operator therefore populates alternate member addresses that are resolvable.
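A minimal sketch of how an alternate-address-aware client might choose which address to dial follows. The dictionary layout and field names are assumptions made for illustration and are not a description of the Couchbase wire format. Only the two host names come from the example above.

```python
def pick_address(member, network="external"):
    """Prefer the alternate address for the requested network, if one exists."""
    alternate = member.get("alternateAddresses", {}).get(network)
    if alternate:
        # Alternate ports, when present, override the internal ones.
        ports = {**member["ports"], **alternate.get("ports", {})}
        return alternate["hostname"], ports
    return member["hostname"], member["ports"]


# Hypothetical cluster map entry for a single member.
member = {
    "hostname": "cb0.cb0.default.svc",
    "ports": {"mgmtSSL": 18091},
    "alternateAddresses": {
        "external": {"hostname": "cb0.cb0.us-east-1.example.com"},
    },
}

print(pick_address(member))                      # what an external client dials
print(pick_address(member, network="internal"))  # falls back to the internal name
```

Because the load balancer exposes the default port numbers, the alternate entry in this sketch only needs to override the host name.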

Clients must be aware of alternate addresses. Compatible SDKs are documented in the Operator documentation. XDCR is supported in Couchbase server versions 5.5.3+ and 6.0.1+.

Conclusion

The ability to publicly address a Couchbase server instance is a powerful tool. It simplifies network configuration: setting up a VPN tunnel between cloud providers may be difficult or impossible, and public addressing avoids that entirely. It simplifies the process of accessing the UI console. It also allows clients, including XDCR, to connect from anywhere in the world.

This gives rise to exciting new possibilities of integrating your Kubernetes based Couchbase deployments with any external service. Examples include function-as-a-service platforms where you have no control over the underlying network architecture.

- Try it out: https://www.couchbase.com/downloads

- Support forums: https://www.couchbase.com/forums/c/couchbase-server/Kubernetes

- Documentation: https://docs.couchbase.com/operator/1.2/whats-new.html

Read More

Autonomous Operator 1.2.0 Deep Dive: https://www.couchbase.com/blog/deep-dive-couchbase-autonomous-operator-1-2-0
