Big data analytics uses advanced analytic techniques on massive volumes of complex data to gain actionable insights that can help reduce operating costs, increase revenue, and improve customer engagement within a company.

This page will cover:

- What is big data?

- Types of big data analytics

- Benefits of big data analytics

- Use cases for big data analytics

- Challenges with big data analytics

- Big data analytics tools

- How Couchbase helps with big data analytics

- Conclusion

What is big data?

The term “big data” refers to the collection and processing of large amounts of diverse data that could be structured, semi-structured, or unstructured and, in many cases, is a combination of all three types. Organizations typically collect the data from internal sources like operational business systems and external sources like news, weather, and social media. Because of its diversity and volume, big data comes with inherent complexity.

Types of big data analytics

By examining big data with statistical techniques to find trends, patterns, and correlations, you can uncover insights that help your organization make informed business decisions. Machine learning algorithms can build on those insights to predict likely outcomes and recommend what to do next. While there are many ways to use big data analytics, they generally fall into four types:

Descriptive analytics

Descriptive analytics determines “what happened” by measuring areas such as finances, production, and sales. Determining “what happened” is typically the first step in broader big data analytics. After descriptive analytics identifies trends, you can use other types of analytics to discern causes and recommend appropriate action.
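
As a rough illustration of the "what happened" step, the sketch below totals revenue by month and by region. The `sales` records and the `revenue_by` helper are hypothetical and exist only for this example; a real system would read from operational data at far greater scale.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales records; real data would come from an operational system.
sales = [
    {"month": "2024-01", "region": "east", "revenue": 1200.0},
    {"month": "2024-01", "region": "west", "revenue": 800.0},
    {"month": "2024-02", "region": "east", "revenue": 1500.0},
    {"month": "2024-02", "region": "west", "revenue": 700.0},
]

def revenue_by(records, key):
    """Sum revenue grouped by the given field (hypothetical helper)."""
    totals = defaultdict(float)
    for row in records:
        totals[row[key]] += row["revenue"]
    return dict(totals)

monthly = revenue_by(sales, "month")      # revenue per month
regional = revenue_by(sales, "region")    # revenue per region
average_sale = mean(row["revenue"] for row in sales)
```

Summaries like `monthly` and `regional` describe past performance only; explaining or forecasting it is left to the other types of analytics.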

Diagnostic analytics

Diagnostic analytics strives to determine “why it happened,” that is, whether a causal relationship in the data explains the insights uncovered by descriptive analytics.
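
One common first step in this kind of investigation is checking how strongly two measures move together. The sketch below computes a Pearson correlation coefficient over made-up `ad_spend` and `revenue` figures (both hypothetical). Note that a high correlation only suggests where to look; it does not by itself establish a causal relationship.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Hypothetical weekly figures, invented for illustration.
ad_spend = [10, 20, 30, 40, 50]
revenue = [120, 150, 210, 230, 300]

r = pearson(ad_spend, revenue)  # values near 1 indicate a strong positive relationship
```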

Predictive analytics

Predictive analytics techniques leverage machine learning algorithms and statistical modeling on historical and real-time data to determine “what will happen next,” meaning the most likely outcomes, results, or behaviors for a given situation or condition.
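
To make the idea of statistical modeling on historical data concrete, here is a minimal sketch that fits a straight line by ordinary least squares and extrapolates one period ahead. Simple linear regression stands in for the more sophisticated models a production system would use, and the `months` and `units` values are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical history: units sold in months 1 through 5.
months = [1, 2, 3, 4, 5]
units = [100, 110, 120, 130, 140]

slope, intercept = fit_line(months, units)
forecast = slope * 6 + intercept  # predicted units for month 6
```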

Prescriptive analytics

Prescriptive analytics uses complex simulation algorithms to determine “what is the next best action” based on descriptive and predictive analytics results. Ideally, prescriptive analytics produces recommendations for business optimizations.
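
At its simplest, "next best action" means scoring each candidate action against predicted outcomes and picking the best one. The sketch below does this by exhaustive comparison over three candidate prices, which is a deliberate simplification of the simulation techniques described above. The demand figures, `UNIT_COST`, and `expected_profit` are all hypothetical.

```python
# Hypothetical predicted demand (units) at each candidate price,
# for example as produced by a predictive model.
predicted_demand = {8.0: 500, 10.0: 420, 12.0: 300}

UNIT_COST = 6.0  # assumed cost to produce one unit

def expected_profit(price, demand):
    """Profit if `demand` units sell at `price`."""
    return (price - UNIT_COST) * demand

# Recommend the price with the highest expected profit.
best_price = max(
    predicted_demand,
    key=lambda price: expected_profit(price, predicted_demand[price]),
)
```

Real prescriptive systems typically search a much larger action space and account for uncertainty in the predictions, but the recommend-by-scoring structure is similar.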

Benefits of big data analytics

The insights from big data analytics can enable an organization to better interact with its customers, offer more personalized services, provide better products, and ultimately be more competitive and successful. Some of the benefits of using big data analytics include:

- Understanding and using historical trends to predict future outcomes for strategic decision-making

- Optimizing business processes and making them more efficient to drive down costs

- Engaging customers better by understanding their traits, preferences, and sentiment for more personalized offers and recommendations

- Driving down corporate risk through improved awareness of business operations

Use cases for big data analytics

Because of its ability to determine historical trends and provide recommendations based on situational awareness, big data analytics holds tremendous value for organizations of any size in any industry, but especially for larger enterprises with huge data footprints. Some practical use cases for big data analytics include:

- Retailers using big data to provide hyper-personalized recommendations

- Manufacturing companies monitoring supply chain or assembly operations to predict failures or disruptions before they happen, avoiding costly downtime

- Utility companies running real-time sensor data through machine learning models to identify issues and adjust operations on the fly

- Consumer goods companies monitoring social media for sentiment toward their products to inform marketing campaigns and product direction

These are just a few examples of how you can use actionable insights to reduce operating costs, increase revenue, and improve customer engagement within a company.

Challenges with big data analytics

Because it involves immense volumes of data in various formats, big data analytics brings significant complexity and specific challenges that an organization must consider, including timeliness, accessibility of data, and choosing the right approach for its goals. Keep these challenges in mind when planning big data analytics initiatives for your organization:

Long time to insight

Getting operational insights as quickly as possible is the ultimate goal of any analytics effort. However, big data analytics typically involves copying data from disparate sources and loading it into an analytic system using ETL processes that take time: the more data you have, the longer it takes. As a result, analysis can’t begin until all data has been transferred to the analytic system and verified, making it nearly impossible to gain insights in real time. While updates after an initial load may be incremental, they still incur delays as changes propagate from source systems to the analytic system, eroding time to insight.
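
One common way to implement incremental updates is a "watermark": each run copies only the rows changed since the previous run. The sketch below assumes every source row carries an `updated_at` value; the field name, the `incremental_extract` function, and the sample rows are hypothetical.

```python
def incremental_extract(source_rows, last_watermark):
    """Return rows changed after the watermark, plus the new watermark."""
    changed = [row for row in source_rows if row["updated_at"] > last_watermark]
    new_watermark = max(
        (row["updated_at"] for row in changed), default=last_watermark
    )
    return changed, new_watermark

# Hypothetical source table with integer change timestamps.
source = [
    {"id": 1, "updated_at": 100},
    {"id": 2, "updated_at": 205},
    {"id": 3, "updated_at": 310},
]

# Only rows changed after timestamp 200 are copied on this run.
batch, watermark = incremental_extract(source, last_watermark=200)
```

Even with this approach, the analytic system is only as fresh as the most recent run, which is the propagation delay described above.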

Data organization and quality

Big data should be stored and organized in a way that’s easily accessible. Because it involves high volumes of data in various formats from various sources, organizations must invest significant time, effort, and resources to implement data quality management.

Data security and privacy

Big data systems can present security and privacy issues because of the potential sensitivity of the data elements they contain – and the bigger the system gets, the bigger this challenge becomes. Data storage and transfer must be encrypted, and access must be fully auditable and controlled through user credentials, but you must also consider how the data is analyzed. For example, you might wish to analyze patient data in a healthcare system. However, privacy regulations may require you to anonymize it before copying it to another location or using it for advanced analysis. Addressing security and privacy for a big data analytics effort can be complicated and time-consuming.
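
As a sketch of what preparing patient data for analysis might look like, the example below replaces the patient identifier with a keyed hash and drops direct identifiers before the record leaves the source system. Strictly speaking this is pseudonymization, which may not satisfy every regulation's definition of anonymization. The field names, `SECRET_KEY`, and the `pseudonymize` function are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would live in a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(record, id_field="patient_id", drop_fields=("name", "address")):
    """Replace the identifier with a keyed hash and remove direct identifiers."""
    token = hmac.new(
        SECRET_KEY, str(record[id_field]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key != id_field and key not in drop_fields
    }
    cleaned["patient_token"] = token
    return cleaned

# Hypothetical record, invented for illustration.
patient = {
    "patient_id": 1234,
    "name": "Jane Doe",
    "address": "1 Main St",
    "diagnosis": "J45",
}
safe = pseudonymize(patient)
```

Because the same input always yields the same token, records for one patient can still be joined during analysis without exposing the original identifier.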

Finding the right technologies for big data analytics

Technologies for storing, processing, and analyzing big data have been available for years, and there are many options and potential architectures to employ. Organizations must determine their goals and find the best technologies for their infrastructure, requirements, and level of expertise. Organizations should consider future requirements and ensure their chosen tech stack can evolve with their needs.

Big data analytics tools

Big data analytics is a process supported by various tools that work together, each handling a specific part of collecting, processing, cleansing, and analyzing big data. A few common technologies include:

Hadoop

Hadoop is an open source Apache framework designed specifically for storing and processing big data. Its processing engine implements MapReduce, a programming model popularized by Google. Hadoop grew out of the Nutch search project, which began in 2002, and was spun out under its own name in 2006, so it can be considered the elder statesman of the big data tech landscape. The framework can handle large amounts of structured and unstructured data but can be slow compared to newer big data technologies like Spark.
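
The MapReduce model that Hadoop is associated with can be illustrated on a single machine. The sketch below counts words in three phases (map, shuffle, reduce) using plain Python. It does not use Hadoop's actual API; the function names and sample documents are hypothetical. In a real cluster, the map and reduce phases run in parallel across many nodes.

```python
from collections import defaultdict

def map_phase(document):
    """Emit a (word, 1) pair for every word in the document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Group emitted values by key, as the framework would between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Combine the values for each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}

# Hypothetical input documents.
documents = ["big data big insights", "data drives insights"]

pairs = [pair for doc in documents for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle(pairs))
```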

Spark

Spark is an open source cluster computing framework from the Apache Software Foundation that provides an interface for programming across clusters. Spark can handle batch and stream processing and is generally faster than Hadoop MapReduce because it keeps intermediate data in memory where possible instead of writing it to and reading it back from disk.

NoSQL databases

NoSQL databases are non-relational databases, many of which store data as JSON documents. JSON documents are flexible and do not require a fixed schema, making them a great option for storing and processing raw, unstructured big data. Most NoSQL databases are also distributed, running across clusters of nodes to ensure high availability and fault tolerance. Some NoSQL databases support running in-memory, which makes query response times exceptionally fast.
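
To show what schema flexibility means in practice, the sketch below parses three JSON documents with different shapes and aggregates over them without declaring a schema first. The documents and field names are hypothetical, and plain Python stands in for a database query engine.

```python
import json

# Hypothetical documents with differing fields, as a document store might hold them.
raw_documents = [
    '{"type": "order", "id": 1, "total": 42.5, "items": ["a", "b"]}',
    '{"type": "review", "id": 2, "rating": 4, "text": "Works well"}',
    '{"type": "order", "id": 3, "total": 10.0}',
]

docs = [json.loads(line) for line in raw_documents]

# Missing fields are handled at read time rather than rejected at write time.
order_total = sum(
    doc.get("total", 0.0) for doc in docs if doc.get("type") == "order"
)
```

The trade-off is that the reading code, not the database, must cope with absent or inconsistent fields, which ties back to the data quality challenge discussed earlier.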

Kafka

Apache Kafka is an open source distributed event streaming platform that streams data from publisher sources like web and mobile apps, databases, logs, message-oriented middleware, and more. Kafka is useful for real-time streaming and big data analytics.

Machine learning tools

Big data analytics systems typically leverage machine learning algorithms to forecast results, make predictions, provide recommendations, or recognize patterns in the data. Machine learning tools often come bundled with a library of algorithms you can use for various analyses, and free, open source options are plentiful, such as scikit-learn, PyTorch, TensorFlow, KNIME, and more.

Data visualization and business intelligence tools

You can communicate insights from big data analytics through data visualizations such as charts, graphs, tables, and maps. Data visualization and business intelligence tools represent results from analyses succinctly, and many specialize in creating dashboards that monitor key indicators and provide alerts for issues.

How Couchbase helps with big data analytics

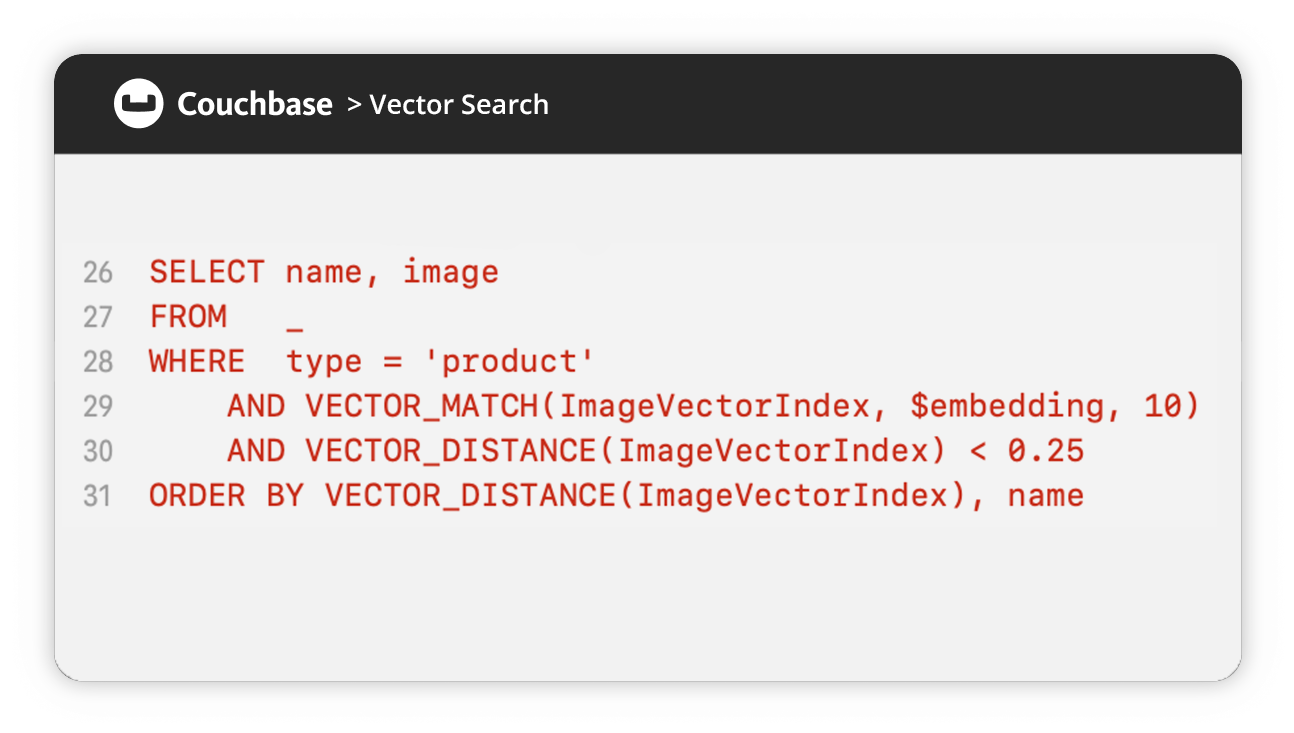

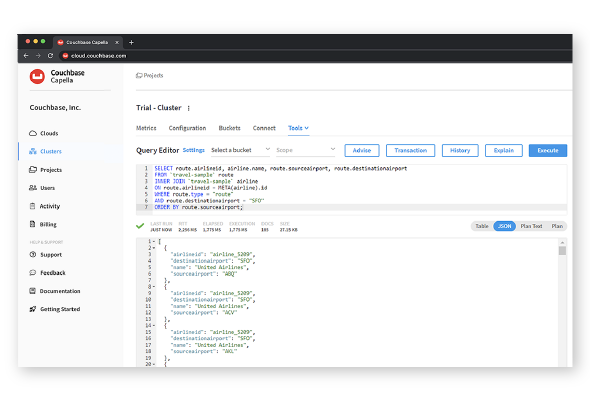

Couchbase Capella™ is a distributed cloud database that fuses the strengths of relational databases, such as SQL and ACID transactions, with JSON flexibility and scale.

Capella offers multi-model capabilities such as in-memory processing for speed, automatic data replication for high availability and failover, built-in full-text search for adding search to apps, and eventing to trigger actions based on changes in the data. It even comes with an AI-based coding assistant called Capella iQ to help with writing queries and data manipulation, making it easy to adopt.

Thanks to its JSON document storage model and memory-first architecture, Capella is ideal for big data analytics systems because it can store massive amounts of diverse semi-structured and unstructured data and quickly run queries against it.

Capella can also natively work with other big data analytics tools using connectors for Spark, Kafka, and Tableau for data visualization, allowing an organization to create highly scalable and efficient analytics data pipelines.

Best of all, Capella includes built-in analytics, a service that allows analysis of operational data without needing to move it via time-consuming ETL processes. By eliminating the need to copy operational data before analyzing it, the analytics service enables near-real-time analysis. The service can ingest, consolidate, and analyze JSON data from Capella clusters, AWS S3, and Azure Blob Storage.

Conclusion

Big data analytics promises to enable a more efficient, competitive, and customer-centric organization through its ability to uncover problem areas, provide recommendations for improvement, and predict likely behaviors that can inform consumer engagement.

Learn how Domino’s creates personalized marketing campaigns with unified real-time analytics using Couchbase in this customer case study.

And be sure to try Couchbase Capella FREE and check out our Concepts Hub to learn about other analytics-related topics.