How serverless architecture works

Serverless architecture abstracts server management away from developers and relies on cloud providers to handle the underlying infrastructure. Here’s how it usually works:

1. Function creation: Developers write code as individual functions, with each function designed to perform a specific task or service. This compute model is commonly called Function-as-a-Service (FaaS), a term often used interchangeably with serverless.

2. Function deployment: The functions are packaged and deployed to a serverless platform provided by a cloud service provider. The most common serverless platforms are AWS Lambda, Azure Functions, and Google Cloud Functions.

3. Event triggers: Functions are configured to execute in response to specific events or triggers. Events can include HTTP requests (e.g., through an API gateway), changes in data (e.g., database updates), timers, file uploads, and messages from other services. The cloud provider manages the event sources and automatically invokes the associated functions.

4. Auto-scaling: As events occur, the serverless platform automatically scales the underlying resources to accommodate the workload. If your function experiences a sudden spike in requests, the cloud provider will provision more resources.

5. Execution: When an event triggers a function, the serverless platform initializes a container or runtime environment for that function. The code within the function is executed and can access any required resources or data. After the function completes its task, the container may remain warm for a short period, allowing subsequent requests to execute more quickly.

6. Billing: Billing is based on the actual execution time and resources used by the functions. You’re charged per execution and for the compute resources, such as CPU and memory, that are allocated during execution.

7. Statelessness: Serverless functions are typically stateless, meaning they don’t retain information between invocations. Any required state or data must be stored externally, often in a database or storage service.

8. Logs and monitoring: Serverless platforms usually provide built-in logging and monitoring tools, allowing developers to track performance and troubleshoot issues in their functions.
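The steps above can be sketched in a few lines of Python. The `handler(event, context)` signature follows the convention used by AWS Lambda's Python runtime; the event shape, the `name` field, and the local invocation at the bottom are assumptions made for illustration, not any provider's exact payload format.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per triggering event."""
    # Hypothetical event shape: an HTTP-style request with a JSON body.
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")

    # The function keeps no state between invocations; everything it
    # needs arrives in the event or comes from an external service.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local stand-in for the platform: build a fake event and invoke once.
    fake_event = {"body": json.dumps({"name": "serverless"})}
    print(handler(fake_event, context=None))
```

In a real deployment, the platform rather than the `__main__` block would construct the event and call the function, and scaling, logging, and billing would happen around that call.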

Key concepts in serverless architecture

Because serverless development is an alternative to traditional development, you should familiarize yourself with the following terms and concepts for a clear understanding of how to design, deploy, and manage serverless applications:

Invocation: An event that triggers the execution of a serverless function. Examples are an HTTP request, database update, or scheduled timer.

Duration: The amount of time a serverless function takes to execute, which is a factor in calculating the cost of execution.

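Cold start: An execution for which no prepared runtime environment is available, such as the first invocation of a function or one that follows a period of inactivity. The cloud provider must provision resources and set up the runtime environment first, so cold starts introduce additional latency compared to warm starts.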

Warm start: Subsequent executions of a serverless function when the runtime environment is already prepared, resulting in faster response times compared to cold starts.

Concurrency limit: The maximum number of simultaneous function executions allowed by the serverless platform. This limit can impact the ability to handle concurrent requests or events.

Timeout: The maximum allowable duration for a serverless function’s execution. If a function exceeds this limit, it is forcibly terminated, and its result might not be returned.

Event source: The origin of an event that triggers a serverless function. Examples of event sources include Amazon S3 buckets, API gateways, message queues, and database updates.

Statelessness: Serverless functions are typically stateless, meaning they don’t retain data between invocations. Any necessary state should be stored externally in databases or storage services.

Resource allocation: The specification of compute resources such as CPU or memory for a serverless function. These resources are often chosen by developers when defining the function.

Auto-scaling: The automatic adjustment of serverless resources by the cloud provider to accommodate varying workloads and ensure optimal performance.

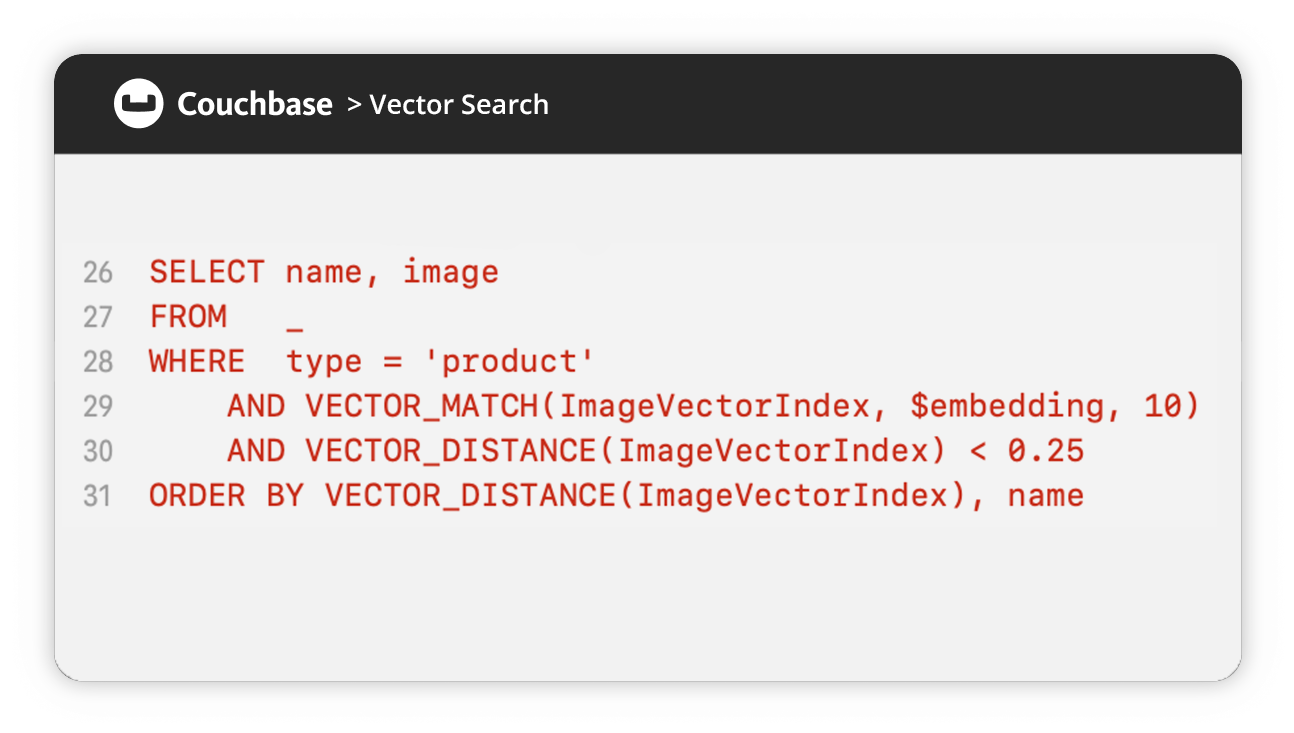

Serverless database: Serverless databases are elastically scaling databases that don’t expose the infrastructure they operate on. Couchbase Capella™ DBaaS is an example of a fully managed serverless database.
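The difference between a cold start and a warm start can be illustrated with a small Python sketch. It assumes a platform that keeps the module loaded between invocations of the same execution environment, which is how module-level state typically gets reused; `_expensive_setup` and its 50 ms delay are hypothetical stand-ins for real initialization work such as opening a database connection.

```python
import time

# Module-level state survives for as long as the platform keeps this
# execution environment alive, so it is set up only on a cold start.
_connection = None


def _expensive_setup():
    """Hypothetical stand-in for loading config or opening a DB connection."""
    time.sleep(0.05)  # simulate initialization latency
    return {"connected": True}


def handler(event, context):
    global _connection
    started = time.perf_counter()

    if _connection is None:  # cold start: nothing has been initialized yet
        _connection = _expensive_setup()
        kind = "cold"
    else:  # warm start: reuse what a previous invocation left behind
        kind = "warm"

    elapsed_ms = (time.perf_counter() - started) * 1000
    return {"start": kind, "elapsed_ms": round(elapsed_ms, 1)}


if __name__ == "__main__":
    print(handler({}, None))  # first call in this process pays the setup cost
    print(handler({}, None))  # second call reuses the cached connection
```

Keeping expensive setup outside the per-request path is a common way to reduce warm-start latency, but the cached value should be treated as an optimization only, since the platform may discard the environment at any time.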

When to use serverless architecture

Although serverless architecture is versatile, it’s not the best choice for every use case – applications with long-running tasks, high computation requirements, or consistent workloads often benefit more from traditional server-based architectures. Be sure to consider the specific requirements and the unique strengths of serverless when deciding whether it’s the right choice for your application.

Serverless architecture use cases

Some of the most common and best-suited use cases for serverless architecture include:

Web and mobile apps: Handle web and mobile app backends, serve content, process user requests, and manage user authentication.

APIs: Auto-scale your RESTful and GraphQL APIs and easily integrate them with other services.

IoT: Efficiently manage data processing and analysis from IoT devices that trigger events with sensor data.

Real-time data processing: Process real-time data streams such as clickstream analysis, log processing, and event-driven analytics.

Batch processing: Run periodic or on-demand batch jobs like data ETL (extract, transform, load), report generation, and data cleansing.

File and data storage tasks: Interact with cloud storage services to manage file uploads, downloads, and data manipulation.

User authentication and authorization: Identity and access management (IAM) services for user authentication and authorization are a good fit for serverless functions.

Notification services: Send notifications and alerts like email, SMS, or push notifications in response to specific events or triggers.

Chatbots and virtual assistants: Build conversational interfaces where functions process natural language requests and generate responses.

Data and image processing: Perform tasks like image resizing, format conversion, and data transformation that require minimal user interaction.

Scheduled tasks: Automate periodic tasks such as data backups, report generation, and database maintenance.

Microservices: Create and manage individual microservices within a larger application, allowing for easy scaling and independent deployment.

Security and compliance services: Implement security-related functions like intrusion detection, monitoring, and compliance auditing.

Serverless vs. containers

At first glance, serverless architecture is sometimes confused with container architecture or with microservices architecture because it shares certain similarities with each of them. In fact, serverless is quite distinct from both, and we’ll explain what makes them different.

What containers and serverless have in common is that both allow developers to deploy application code by abstracting away the host environment. However, one of the key differences is that serverless abstracts server management entirely, while containers allow developers to manage their own server environments with more control over infrastructure.

As a lightweight form of virtualization, containers package applications and their dependencies in isolated, consistent environments that run as independent instances on a shared operating system. Containers provide a way to ensure that applications work consistently across various environments, from development to production, and they offer a standardized way to package and distribute software. Containers are typically long-running and can include multiple processes within a single container.

In short, serverless computing abstracts away server management and is ideal for event-driven, short-duration tasks, whereas containers provide more control over the server environment and are better suited for long-running processes and consistent workloads. The choice between them depends on the specific requirements of your application and your level of control over the underlying infrastructure. In some cases, a combination of both technologies is used within a single application for different components.

Serverless vs. microservices

Microservices are a software architecture pattern that structures an application as a collection of small, independently deployable services that communicate over APIs and work together to provide complex, modular functionality. The confusion between microservices and serverless architecture often arises due to their shared emphasis on modularity and scalability. Blurring the line further is that they’re often used together, with serverless functions acting as microservices within a larger microservices-based application.

Despite their similarities, serverless and microservices have unique characteristics in the following areas that set them apart:

Infrastructure management

- Microservices – developers retain control over server and container orchestration.

- Serverless – server management is abstracted away entirely, and developers do not deal with the underlying infrastructure.

Execution model

- Microservices – typically run continuously as long-lived services on provisioned servers or containers.

- Serverless – functions execute in response to events or triggers. This distinction can lead to a difference in response times as serverless applications may experience cold starts.

Cost model

- Microservices – require you to provision and maintain server resources. This may lead to continuous costs even during periods of low usage.

- Serverless – follows a pay-as-you-go model based on actual function execution. This can be more cost-efficient for sporadic workloads.

Modularity

- Microservices – an application is divided into small independent services.

- Serverless – developers write code as individual units of functionality.

Scalability

- Microservices – allow for independent scaling of each service.

- Serverless – automatically scales individual functions.
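The cost model difference described above can be made concrete with a rough calculation. The sketch below is only an illustration: every rate in it (per-request price, per-GB-second price, hourly instance price) is a hypothetical placeholder rather than any provider's actual pricing, and the function names are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerlessPricing:
    # Hypothetical rates for illustration only; check your provider's price list.
    per_million_requests: float = 0.20
    per_gb_second: float = 0.0000167


def serverless_monthly_cost(invocations, avg_duration_s, memory_gb,
                            pricing=ServerlessPricing()):
    """Pay-per-use: cost grows with invocations, duration, and memory."""
    request_cost = invocations / 1_000_000 * pricing.per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * pricing.per_gb_second
    return request_cost + compute_cost


def always_on_monthly_cost(instances, hourly_rate, hours_per_month=730):
    """Provisioned servers: cost is the same whether traffic arrives or not."""
    return instances * hourly_rate * hours_per_month


if __name__ == "__main__":
    fixed = always_on_monthly_cost(instances=2, hourly_rate=0.05)  # hypothetical rate
    for invocations in (100_000, 10_000_000, 100_000_000):
        usage = serverless_monthly_cost(invocations, avg_duration_s=0.2, memory_gb=0.5)
        cheaper = "serverless" if usage < fixed else "always-on"
        print(f"{invocations:>11,} invocations: "
              f"serverless ${usage:,.2f} vs always-on ${fixed:,.2f} -> {cheaper}")
```

With these placeholder numbers, the pay-per-use option is cheaper at low and moderate volumes, while the always-on option wins once traffic is sustained and high, which matches the guidance that serverless suits sporadic workloads.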

Benefits of serverless architecture

Serverless architecture offers a wide range of benefits that make it an attractive choice for many applications and use cases. The most compelling advantages are:

Automatic scaling: Serverless platforms automatically scale resources up or down based on the incoming workload. This ensures that your application can handle varying levels of traffic, providing high availability and performance without manual intervention.

Cost-efficiency: With serverless, you only pay for the actual compute resources used during function execution. There are no costs associated with idle time, making it cost-effective, particularly for workloads with unpredictable or sporadic traffic.

Reduced operational overhead: Serverless abstracts server management tasks, allowing developers to focus on code rather than infrastructure maintenance. This reduces the need for DevOps efforts and simplifies deployment and scaling.

Faster development: Serverless accelerates the development process by eliminating the need to manage servers and infrastructure. Developers can quickly iterate and deploy code, resulting in faster time-to-market for applications.

Resilience: Serverless functions are typically stateless, promoting a design that relies on external storage services or databases for data persistence. This can lead to more resilient and fault-tolerant applications.

Built-in logging and monitoring: Serverless platforms often provide built-in tools for monitoring and logging, enabling developers to track performance, troubleshoot issues, and gain insights into application behavior.

Reduced vendor lock-in: Many functions can be designed to be relatively vendor-agnostic, making it easier to migrate them or to integrate services from different cloud providers. This is not always the case, as you’ll see in the next section on serverless limitations.

High availability: Serverless platforms are designed to be highly available, with redundancy and failover mechanisms built in. This helps ensure that your application remains accessible and responsive even in the face of failures.

Energy and resource efficiency: The automatic scaling and resource management of serverless platforms can lead to improved energy efficiency and resource utilization, reducing environmental impact.

Limitations of serverless architecture

While serverless architecture offers many advantages, it also has its limitations. Certain characteristics of serverless may manifest as benefits or challenges. When evaluating serverless for a specific application, consider your requirements or constraints related to the following:

Cold starts: Serverless functions may experience a delay when the function is first invoked because the cloud provider needs to initialize a new execution environment. This latency can be problematic for applications that require consistently fast response times.

Resource constraints: Serverless platforms impose resource constraints, such as memory and execution time limits. These constraints can be limiting for compute-intensive tasks or applications that require long-running processes.

Statelessness: Serverless functions are typically stateless, meaning they don’t retain data between invocations. While this can help improve resilience (as explained above), using external databases or storage services for data persistence can add complexity to some applications.

Vendor lock-in: While many functions can be designed to be relatively vendor-agnostic, your application may have some platform-specific configurations and integrations that make it challenging to move to a different cloud provider.

Complex debugging: Debugging and troubleshooting can be more challenging in a serverless architecture because the distributed nature of functions and the lack of direct server access make it difficult to identify and resolve issues.

Limited local testing: Developing and testing serverless functions locally can be challenging because local testing may not fully replicate the execution environment in the cloud. Developers often need to deploy functions to the serverless platform for thorough testing.
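The statelessness limitation above is easier to see in a small simulation. This is a sketch under stated assumptions, not a real platform API: `ExternalStore` is a hypothetical in-memory stand-in for a database or storage service, and `ExecutionEnvironment` is an invented class that models one short-lived runtime environment.

```python
class ExternalStore:
    """Hypothetical stand-in for a database or key-value service."""

    def __init__(self):
        self._data = {}

    def increment(self, key):
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class ExecutionEnvironment:
    """Simulates one short-lived runtime environment for a function."""

    def __init__(self, store):
        self.store = store      # external: shared by every environment
        self.local_visits = 0   # in-memory: lost when this environment is recycled

    def handle(self, event):
        self.local_visits += 1
        durable_visits = self.store.increment(event["user_id"])
        return {"local": self.local_visits, "durable": durable_visits}


if __name__ == "__main__":
    store = ExternalStore()
    first_env = ExecutionEnvironment(store)
    print(first_env.handle({"user_id": "u1"}))
    print(first_env.handle({"user_id": "u1"}))

    # The platform recycles the environment; a fresh one serves the next event.
    second_env = ExecutionEnvironment(store)
    print(second_env.handle({"user_id": "u1"}))
```

The in-memory counter restarts whenever a new environment takes over, while the externally stored counter keeps growing. That is the added complexity the limitation refers to: any state that matters has to make a round trip to an external service.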

Serverless platforms and tools

There are numerous serverless computing platforms and tools that enable developers to build, deploy, and manage serverless applications using their favorite coding languages and cloud service providers. Here are some of the most popular ones:

Platforms

Amazon’s AWS Lambda supports various programming languages and integrates with other AWS services. AWS also provides Amazon API Gateway for creating RESTful APIs and triggering Lambda functions.

Microsoft’s Azure Functions is a serverless offering within the Azure cloud ecosystem. It supports multiple languages and offers integration with Azure services, making it a strong choice for Windows-based applications.

Google’s Cloud Functions supports multiple programming languages and integrates well with other Google Cloud services, making it suitable for building applications within the Google Cloud ecosystem.

IBM Cloud Functions is based on the Apache OpenWhisk framework and allows you to integrate with IBM Cloud services using various languages.

Alibaba Cloud Function Compute allows developers to build applications in the Alibaba Cloud ecosystem and integrate with other Alibaba Cloud services using multiple languages.

Tools

Netlify is a platform best known for hosting static websites, but it also offers serverless functions for building backend services, APIs, and workflows.

OpenFaaS is an open source serverless framework for container-based functions. It allows you to build and run serverless functions using Docker containers.

Kubeless is an open source Kubernetes-native serverless framework that allows you to deploy functions as native Kubernetes pods.

Fission is another open source Kubernetes-native serverless framework that supports multiple languages and is designed for easy deployment on Kubernetes clusters.

Conclusion

Serverless architecture is popular for web and mobile applications, IoT, real-time data processing, and other common use cases because it allows developers to focus on writing code rather than managing servers. The management responsibility is offloaded to cloud platforms like AWS Lambda, Azure Functions, or Google Cloud Functions, which handle the underlying infrastructure and scale resources automatically to accommodate changes in workload. Serverless is not ideal for all use cases, however, and compute-intensive workloads or long-running tasks may be better suited for traditional server-based approaches.

To learn more about serverless architecture and related technologies, check out these resources:

Serverless Architecture With Cloud Computing

Couchbase 2023 Predictions – Edge Computing, Serverless, and More

Capella App Services (BaaS)

Visit our Concepts Hub to learn about other topics related to databases.