Learn about performance testing for durable writes with SDK 3.0+ and setting up infrastructure using Couchbase’s cbc-pillowfight.

Performance-testing durability with any database can be subjective and challenging because many other factors influence performance. Durability is the ability to write to the active, replica, and persisted copies of the data. Couchbase offers different levels of durability to suit the use case and SLAs. But ultimately network speed, disk write speed, and available CPU resources influence performance as much as the database itself.

What defines performance (or high performance) of durable writes? Is it latency of the operation? Is it throughput (operations per second)? It depends on the use case. The popular answer is usually…BOTH!
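Since both numbers usually matter, it helps to report them together. The following is a minimal Python sketch, not part of pillowfight or the Couchbase SDK, that turns a list of per-operation latencies into a throughput figure and latency percentiles. The function names and sample values are hypothetical, and it assumes a single writer issuing operations one after another, so throughput is simply the operation count divided by the total time.

```python
import math

def percentile(sorted_values, pct):
    """Nearest-rank percentile of an ascending list (pct in 0..100)."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]

def summarize(latencies_ms):
    """Summarize per-operation latencies (milliseconds) from one sequential writer."""
    ordered = sorted(latencies_ms)
    total_seconds = sum(ordered) / 1000.0
    return {
        "ops": len(ordered),
        "ops_per_sec": len(ordered) / total_seconds,
        "p50_ms": percentile(ordered, 50),
        "p99_ms": percentile(ordered, 99),
        "max_ms": ordered[-1],
    }

if __name__ == "__main__":
    # Hypothetical samples: mostly fast writes with two slow outliers.
    samples = [2.0] * 98 + [10.0, 40.0]
    print(summarize(samples))
```

With samples like these the median looks healthy while the tail is many times slower, which is why a durability test should state which number its SLA actually cares about.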

Durability updates in Couchbase SDK

Earlier versions of the Couchbase SDK (pre-3.0) support durable writes through the parameters replicateTo and persistTo. replicateTo waits for the write to reach from one to three replicas. persistTo additionally waits for the active copy to be written to disk. Developers wanted finer control and better performance for durable writes to replicas and disk.

Couchbase SDK 3.0 was released about a year ago. A fundamental change in the new SDK version is a durability model designed with both latency and throughput in mind.

See the definitions in the documentation:

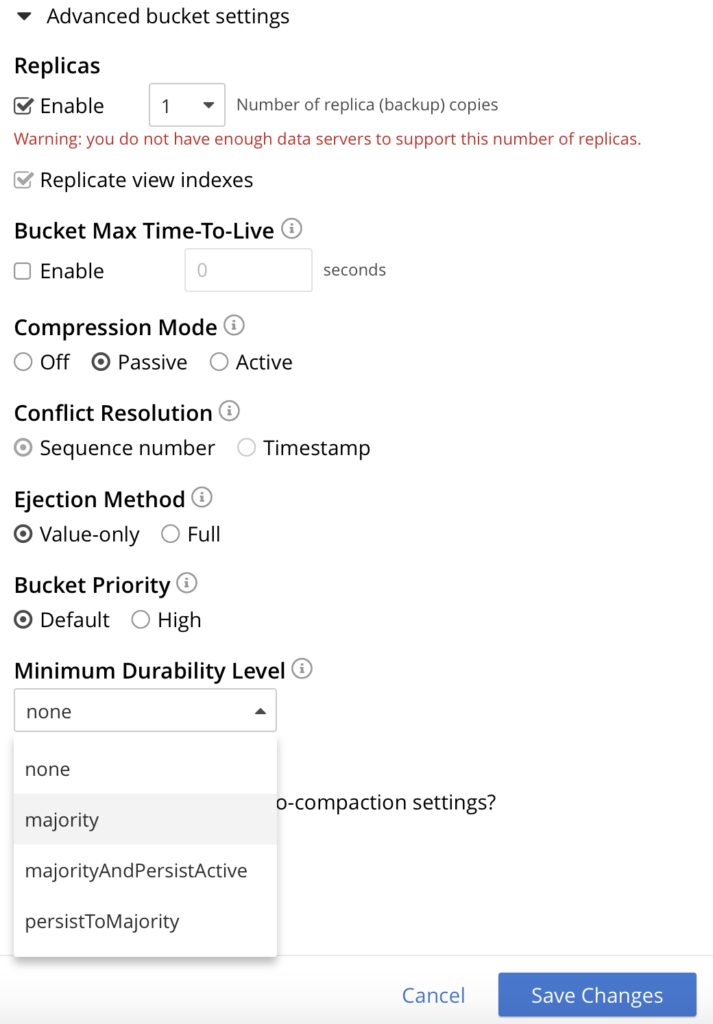

There are three levels of durability:

- Majority – The server will ensure that the change is available in memory on the majority of configured replicas.

- MajorityAndPersistToActive – Majority level, plus persisted to disk on the active node.

- PersistToMajority – Majority level, plus persisted to disk on the majority of configured replicas.

Each durability level affects performance because the SDK waits until the write has reached a majority, has been persisted to disk, or both.
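To make "majority" concrete, here is a rough sketch. It assumes, as I understand the server documentation, that the majority is counted over the active copy plus its configured replicas and that durable writes are not supported on buckets with three replicas; verify both points for your server version. The helper name `majority_copies` is hypothetical.

```python
def majority_copies(num_replicas):
    """Copies (active + replicas) that must acknowledge a durable write.

    Assumption: majority is taken over the active copy plus the configured
    replicas, and durable writes are unsupported with 3 replicas.
    """
    if num_replicas not in (0, 1, 2):
        raise ValueError("durable writes assume 0, 1, or 2 replicas")
    total_copies = num_replicas + 1
    return total_copies // 2 + 1

if __name__ == "__main__":
    for replicas in (0, 1, 2):
        print(replicas, "replica(s) ->", majority_copies(replicas), "copies")
```

Under these assumptions, a bucket with one replica needs both copies to acknowledge every durable write, so the network round trip to the replica node is always part of the measured latency.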

In my experience working with customers who come from a relational database background, the knee-jerk decision is to go with the highest durability level. But their experience is quite different, because an RDBMS has durability built into its architecture and disallows reads before writes are done.

Couchbase, by contrast, offers finer-grained control over durability settings than traditional RDBMS users may expect. For example, Couchbase also has a bucket-level durability setting.

Furthermore, the durability choice is specific to the use case, application, and SLAs. If bucket-level durability is not enough control, Couchbase supports durability on a write-by-write basis: each individual write can be configured with a specific durability level, or durability can be set at the bucket level so that all writes follow the same level.

Server Setup Considerations for Testing

When testing the performance of durable writes, the specifications of the hosting machines matter even more than the durability mechanism within the database architecture. Couchbase never places an active copy and its replica on the same disk: data is automatically sharded, and a replica is guaranteed to reside on a different node from its active copy.

This means that writing to replicas is affected by the network speed and latency. The faster the network, the faster the durable write to replica, i.e., to majority. The most influential hosting machine factor for durable writes is disk latency and throughput. Couchbase recommends local SSDs for persistence, not only for speed but for quick and efficient recovery of a node from failure.

Public Cloud Considerations

Today, more customers are conducting testing and POCs in public cloud environments for speed, simplicity, and temporary investment of resources.

Storage/disk selection

In the public cloud, every provider offers different tiers of disk storage for machine instances, from EBS-type storage, which is typically the slowest, to high-performance NVMe SSDs.

Any storage type will work, but the evaluation must take into account that the performance of the volume type is, arguably, the bigger factor for durable writes no matter which database is being evaluated. For example, this AWS documentation link shows how complicated storage types can be in the public cloud.

Instance type selection

Customers that have successfully tested Couchbase durability try to match the testing environment to their production hosted environment. However, customers also deploy t3.large or t3.small for testing and then ask why it’s not performing.

Network scenarios

Make sure the client is on the same network as the server environment for best performance. Poor-quality tests are often run from a laptop over wireless to a publicly exposed hostname in a public cloud. There are so many network hops (wireless to router, public network, hosted network, and so on) that the latencies are huge.

In summary, with event handling for durable writes, Couchbase delivers very fast writes. Durable writes complete as fast as the hosting environment allows and is set up for.

Testing Couchbase Durability

Durability can be tested using any application that you have already created with the Couchbase SDK. However, to simplify and standardize testing, one of our tools may help.

Included in Couchbase Server is a command-line performance measurement tool called cbc-pillowfight. Pillowfight has been a part of Couchbase for years, so it is rock solid for performance testing. Pillowfight is based on the Couchbase C SDK, so it is highly performant. It is very fast, to the point of being too fast! What do I mean by “too fast”? I will get to that in a moment.

Setup and configuration information is presented in this earlier blog regarding testing Couchbase with pillowfight and some sample scenarios are laid out below.

Options for performance testing using pillowfight

The default pillowfight settings may not be optimal for the type of application that you’ll be using with Couchbase. There are many ways to adjust pillowfight to better fit your use cases. For the full list of options, type cbc-pillowfight --help at the command line.

Here are some notable options you might want to try out:

- -I or --num-items with a number, to specify how many documents you want to operate on.

- --json to use JSON payloads in the documents. By default, documents are created with non-JSON payloads, but you may want to have real JSON documents in order to test other aspects of performance while the pillow fight is running.

- -e to expire documents after a certain period of time. If you are using Couchbase as a cache or short-term storage, you will want to use this setting to monitor the effect of documents expiring.

- --subdoc to use the subdocument API. Not every operation will need to be on an entire document.

- -M or --max-size to set a ceiling on the size of the documents. You may want to adjust this to tailor a more realistic document size for your system. There is a corresponding -m and --min-size too.

Here’s an example using the above options:

cbc-pillowfight.exe -U couchbase://localhost/pillow -u Administrator -P password -I 10000 --json -e 10 --subdoc -M 1024

This will start a pillowfight using 10000 JSON documents that expire after 10 seconds, use the sub-document API, and have a maximum document size of 1024 bytes.

Note: There is a -t or --num-threads option. Currently, if you’re using Windows (like me), you are limited to a single thread (see this code).

Pillowfight can be tuned for specific operations, in particular for performance-testing durable writes.

Let’s review this pillowfight command:

cbc-pillowfight -U <hostname>/<bucket> -u <login> -P <password> -I 2000 --set-pct=100 --min-size=100 --max-size=250 -t 10 --durability majority_and_persist_to_active --no-population

What is this command doing?

-I 2000 sets the number of items to 2000.

--set-pct=100 makes 100% of the operations writes. If set to 50, the mix is 50/50 writes to reads.

--min-size=100 sets the minimum document size to 100 bytes.

--max-size=250 sets the maximum document size to 250 bytes. Pillowfight will randomize the document sizes between 100 and 250 bytes.

-t 10 runs 10 threads.

--durability majority_and_persist_to_active is the durability setting. Each write must reach a majority of the replicas (if there is more than one) and must also be persisted to disk on the active copy before it is acknowledged.

--no-population skips the initial population phase, in which pillowfight would otherwise load all of the items into the bucket before starting the workload.
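To compare durability levels under otherwise identical settings, the same command can be generated once per level. The sketch below only builds and prints the argument lists, using the flags explained above. The connection string and credentials are placeholders, and the level names other than majority_and_persist_to_active (`none`, `majority`, `persist_to_majority`) are assumptions to verify against cbc-pillowfight --help for your version.

```python
import shlex

# Assumed level names; only majority_and_persist_to_active appears in the command above.
DURABILITY_LEVELS = [
    "none",
    "majority",
    "majority_and_persist_to_active",
    "persist_to_majority",
]

def pillowfight_args(conn_str, user, password, level, items=2000, threads=10):
    """Build the argv for one write-only pillowfight run at a given durability level."""
    return [
        "cbc-pillowfight",
        "-U", conn_str,
        "-u", user,
        "-P", password,
        "-I", str(items),
        "--set-pct=100",
        "--min-size=100",
        "--max-size=250",
        "-t", str(threads),
        "--durability", level,
        "--no-population",
    ]

if __name__ == "__main__":
    for level in DURABILITY_LEVELS:
        args = pillowfight_args(
            "couchbase://localhost/pillow", "Administrator", "password", level
        )
        # Print rather than execute, so the commands can be reviewed first.
        print(shlex.join(args))
```

Keeping every other flag fixed means any difference between runs can be attributed to the durability level and the infrastructure underneath it, rather than to a change in the workload.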

Managing threads/buffers

This command will keep creating documents and writing them into Couchbase as fast as possible. Pillowfight creates a list of keys as an array and writes through it in a loop. Once it reaches the end, it goes back to the top of the array and starts writing the same keys again.

Remember my “too fast” comment earlier? When testing durability, especially in slow hosting environments with slow networks and mass storage (EBS, S3), pillowfight will be much faster than the infrastructure. This means that one pillowfight thread can still be waiting for its durable write to finish while another thread is already waiting to write the same key.

How do we create a test that truly tests durability? Make the buffer of keys large and don’t go back to the top.

cbc-pillowfight -U <hostname>/<bucket> -u <login> -P <password> -I 2000000 --set-pct=100 --min-size=100 --max-size=250 -t 10 --durability majority_and_persist_to_active --batch-size 1 --no-population

What’s the difference between these commands? The command above creates a list of 2 million keys and only goes through it once. This guarantees that one thread won’t be waiting for a previous thread’s write to the same document to complete. This truly tests the durable-write capability of Couchbase and the hosting environment. It is possible to remove --batch-size; with this many keys, most likely no threads will be waiting to write.
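A back-of-the-envelope model shows why the key count matters. The sketch below assumes each in-flight write picks its key uniformly at random, which is a simplification rather than pillowfight's actual key sequencing, and computes the chance that at least two concurrent writes target the same document (the classic birthday problem). The batch size of 100 in the first scenario is an assumed default; check cbc-pillowfight --help for your version.

```python
def collision_probability(num_keys, in_flight):
    """Probability that at least two of `in_flight` uniformly chosen keys coincide."""
    if in_flight > num_keys:
        return 1.0
    p_all_distinct = 1.0
    for i in range(in_flight):
        p_all_distinct *= (num_keys - i) / num_keys
    return 1.0 - p_all_distinct

if __name__ == "__main__":
    threads = 10
    # (number of keys, operations per batch) for three hypothetical scenarios.
    for num_keys, batch in [(2000, 100), (2000, 1), (2_000_000, 1)]:
        in_flight = threads * batch
        p = collision_probability(num_keys, in_flight)
        print(f"{num_keys} keys, {in_flight} in flight: {p:.6f}")
```

Under this model, 10 threads with batches of 100 against 2,000 keys are practically certain to have two writes contending for the same document at any moment; dropping to one operation per batch lowers that to roughly 2%, and raising the key count to 2 million makes it negligible.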

Even if the test doesn’t use pillowfight, the application and test criteria should account for the fact that if a buffer of documents is reused in a loop across threads, one write is likely to end up waiting for another write to the same document to complete.

Conclusions

Couchbase is quite capable of high-performance writes, but every use case and set of test conditions is unique. Setting up the right testing environment is critical to any durable write test.

Pillowfight is a great tool for testing Couchbase, with many features already built in, such as setting the ratio of reads to writes, threading, document size, and durability levels.

If you are writing your own test application, it is important to design tests that truly exercise the database and the infrastructure. The results depend not only on the database but also on the environment in which it was tested.

If you have any questions, reach out to me at james.powenski@couchbase.com. I would be more than happy to help.