Hi,

I am using the Couchbase Operator to create a Couchbase cluster on Kubernetes. If I set the server size to 1 in the default operator.yaml, the cluster runs fine and creates a bucket. But if I increase the server size to more than 1 (let's say 3), the cluster nodes go into an unready state and the bucket is not created.

I am attaching the operator logs as well as Couchbase Cluster logs.

Looking forward to your assistance.

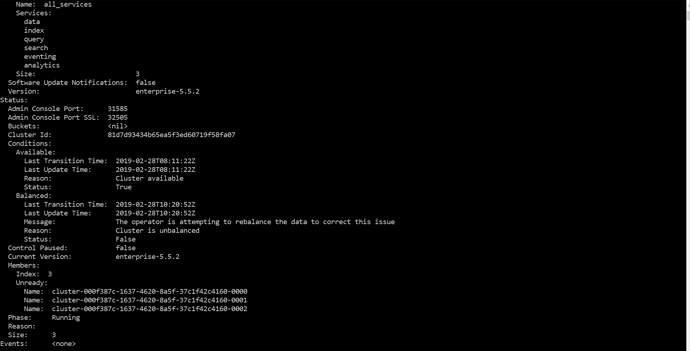

Cluster Logs:

Operator logs:

time=“2019-02-28T07:07:55Z” level=info msg=“couchbase-operator v1.1.0 (release)” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“Obtaining resource lock” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“Starting event recorder” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“Attempting to be elected the couchbase-operator leader” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“I’m the leader, attempt to start the operator” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“Creating the couchbase-operator controller” module=main

time=“2019-02-28T07:07:55Z” level=info msg=“Event(v1.ObjectReference{Kind:“Endpoints”, Namespace:“cb-create”, Name:“couchbase-operator”, UID:“924cf1d9-3b27-11e9-b2ba-0260905e6e12”, APIVersion:“v1”, ResourceVersion:“42394617”, FieldPath:”"}): type: ‘Normal’ reason: ‘LeaderElection’ couchbase-operator-8c554cbc7-r2dc8 became leader" module=event_recorder

time=“2019-02-28T07:07:55Z” level=info msg=“CRD initialized, listening for events…” module=controller

time=“2019-02-28T07:07:55Z” level=info msg="starting couchbaseclusters controller"

time=“2019-02-28T08:10:40Z” level=info msg=“Watching new cluster” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Janitor process starting” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Setting up client for operator communication with the cluster” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Cluster does not exist so the operator is attempting to create it” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Creating headless service for data nodes” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Creating NodePort UI service (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-ui) for data nodes” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:40Z” level=info msg=“Creating a pod (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000) running Couchbase enterprise-5.5.2” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:49Z” level=warning msg=“node init: failed with error [Post http://cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/rename: dial tcp: lookup cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc on 172.20.0.10:53: no such host] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:54Z” level=info msg=“Operator added member (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000) to manage” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:54Z” level=info msg=“Initializing the first node in the cluster” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:54Z” level=warning msg=“node init: failed with error [Post http://cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/rename: dial tcp: lookup cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc on 172.20.0.10:53: no such host] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:10:59Z” level=warning msg=“node init: failed with error [Post http://cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/rename: dial tcp: lookup cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc on 172.20.0.10:53: no such host] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:04Z” level=warning msg=“node init: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/rename): [error - Could not resolve the hostname: nxdomain]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:09Z” level=warning msg=“cluster init: failed with error [Post http://cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/setupServices: dial tcp: lookup cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc on 172.20.0.10:53: no such host] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:14Z” level=info msg=“start running…” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“server config all_services: cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“Cluster status: balanced” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“Node status:” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“┌───────────────────────────────────────────────────┬──────────────┬────────────────┐” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“│ Server │ Class │ Status │” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“├───────────────────────────────────────────────────┼──────────────┼────────────────┤” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“│ cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000 │ all_services │ managed+active │” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info msg=“└───────────────────────────────────────────────────┴──────────────┴────────────────┘” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:22Z” level=info cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:26Z” level=info msg=“Creating a pod (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001) running Couchbase enterprise-5.5.2” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:35Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Prepare join failed. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:40Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach erlang port mapper. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:45Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Prepare join failed. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:50Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach erlang port mapper. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:11:55Z” level=warning msg=“add node: failed with error [Server Error 500 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): []] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:02Z” level=info msg=“added member (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001)” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:02Z” level=info msg=“Creating a pod (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002) running Couchbase enterprise-5.5.2” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:15Z” level=warning msg=“node init: failed with error [Post http://cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/node/controller/rename: dial tcp: lookup cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc on 172.20.0.10:53: no such host] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:20Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Prepare join failed. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]], [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach otp port 21101 for node [” “,\n <<“Failed to resolve address for \“cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc\”. The hostname may be incorrect or not resolvable.”>>].ns_1@cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc This can be firewall problem.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:25Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach erlang port mapper. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]], [Server Error 500 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): []] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:30Z” level=warning msg=“add node: failed with error [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach erlang port mapper. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]], [Server Error 400 (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc:8091/controller/addNode): [error - Failed to reach erlang port mapper. Failed to resolve address for “cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002.cluster-000f387c-1637-4620-8a5f-37c1f42c4160.cb-create.svc”. The hostname may be incorrect or not resolvable.]] …retrying” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:37Z” level=info msg=“added member (cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002)” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:38Z” level=info msg=“Rebalance progress: 0.000000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:42Z” level=info msg=“Rebalance progress: 40.000000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:46Z” level=info msg=“Rebalance progress: 40.000000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:50Z” level=info msg=“Rebalance progress: 40.000000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:12:54Z” level=info msg=“Rebalance progress: 40.000000” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:13:02Z” level=info msg=“reconcile finished” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:02Z” level=error msg="failed to reconcile: Unable to create bucket named - bucket-cd7a368e-4dc7-4951-b6a4-7aeee9f58ca7: still failing after 36 retries: " cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg="server config all_services: " cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“Cluster status: balanced” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“Node status:” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“├───────────────────────────────────────────────────┼──────────────┼────────────────┤” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“│ cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0000 │ all_services │ managed+warmup │” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“│ cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0001 │ all_services │ managed+warmup │” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster

time=“2019-02-28T08:16:10Z” level=info msg=“│ cluster-000f387c-1637-4620-8a5f-37c1f42c4160-0002 │ all_services │ managed+warmup │” cluster-name=cluster-000f387c-1637-4620-8a5f-37c1f42c4160 module=cluster
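Most of the warnings above report that a pod hostname could not be resolved. A minimal sketch of how that could be checked from inside the cluster, assuming Python 3 is available in a pod in the same namespace. The helper names `hosts_in_log` and `resolves` are hypothetical and not part of the operator:

```python
import re
import socket

# Matches the per-pod DNS names seen in the operator warnings,
# i.e. <pod>.<cluster>.<namespace>.svc
HOST_RE = re.compile(r"[a-z0-9-]+(?:\.[a-z0-9-]+)+\.svc")


def hosts_in_log(line):
    """Return the distinct *.svc hostnames mentioned in one log line."""
    return sorted(set(HOST_RE.findall(line)))


def resolves(host, port=8091):
    """Return True if the local resolver can look up host, else False."""
    try:
        socket.getaddrinfo(host, port)
        return True
    except socket.gaierror:
        return False
```

Feeding each warning line through `hosts_in_log` and printing `resolves(host)` for every name found would show whether the names still fail to resolve once the pods are up, or only during the first few seconds after creation.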

Thanks and Regards,

Harshit

However, because I’m nice, we have password rotation planned for the 2.1 release, so it’ll happen automatically soon! Hopefully in the next few months.
