Hi,

Operator 2 is not creating the cluster with 3 nodes. The node shows a not-ready state due to phase: Failed.

In the cluster configuration I specified 3 servers, but only one is being created. When I check the operator logs, they show the following:

{"level":"info","ts":1589872054.5801156,"logger":"cluster","msg":"Upgrading resource","cluster":"cb-op2-test/cb-example","kind":"CouchbaseCluster","name":"cb-example","version":"2.0.0"}

{"level":"error","ts":1589872054.5804846,"logger":"cluster","msg":"Cluster setup failed","cluster":"cb-op2-test/cb-example","error":"failed to lookup /spec/cluster/autoCompaction in add: unexpected kind invalid in lookup","stacktrace":"github.com/couchbase/couchbase-operator/vendor/github.com/go-logr/zapr.(*zapLogger).Error\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/github.com/go-logr/zapr/zapr.go:128\ngithub.com/
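When reading operator logs like these, it can help to keep only the error-level entries and drop the long stack traces. Below is a minimal Python sketch, assuming the operator writes one JSON object per line with the `level`, `ts`, `msg`, `cluster`, and `error` fields seen above. The function name `summarize_errors` is made up for illustration, and lines that are truncated or contain typographic quotes are simply skipped.

```python
import json
from datetime import datetime, timezone


def summarize_errors(lines):
    """Return (utc_time, cluster, msg, error) for each error-level log line."""
    results = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            # Truncated or non-JSON lines are skipped rather than treated as fatal.
            continue
        if not isinstance(entry, dict) or entry.get("level") != "error":
            continue
        ts = entry.get("ts")
        # "ts" is assumed to be seconds since the Unix epoch.
        when = (
            datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()
            if isinstance(ts, (int, float))
            else None
        )
        results.append((when, entry.get("cluster"), entry.get("msg"), entry.get("error")))
    return results


# Two entries shortened from the log above (stack trace omitted).
sample = [
    '{"level":"info","ts":1589872054.5801156,"logger":"cluster",'
    '"msg":"Upgrading resource","cluster":"cb-op2-test/cb-example"}',
    '{"level":"error","ts":1589872054.5804846,"logger":"cluster",'
    '"msg":"Cluster setup failed","cluster":"cb-op2-test/cb-example",'
    '"error":"failed to lookup /spec/cluster/autoCompaction in add: '
    'unexpected kind invalid in lookup"}',
]

for when, cluster, msg, error in summarize_errors(sample):
    print(f"{when} {cluster}: {msg}: {error}")
```

The same function could be fed the saved output of `kubectl logs` for the operator pod, one line at a time.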

Below are the pod details:

| NAME | READY | STATUS | RESTARTS | AGE |
| --- | --- | --- | --- | --- |
| cb-example-0000 | 0/1 | Running | 0 | 50m |
| couchbase-operator-admission-7ccbd85455-2qs5t | 1/1 | Running | 0 | 89m |
| couchbase-operator-b6496564f-v2xqt | 1/1 | Running | 9 | 89m |
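The pod listing already hints at two problems: the Couchbase pod is not ready (0/1) and the operator pod has restarted 9 times. Here is a small sketch for flagging such pods from the plain-text output of `kubectl get pods`. The function name `find_unhealthy_pods` and the `max_restarts` threshold are assumptions for illustration, and the code assumes the default column order NAME, READY, STATUS, RESTARTS, AGE.

```python
def find_unhealthy_pods(table_text, max_restarts=0):
    """Return names of pods that are not Running, not fully ready, or restarted."""
    unhealthy = []
    for row in table_text.splitlines():
        cols = row.split()
        # Skip blank lines, short rows, and the header row.
        if len(cols) < 5 or cols[0] == "NAME":
            continue
        name, ready, status, restarts = cols[0], cols[1], cols[2], cols[3]
        ready_now, _, ready_total = ready.partition("/")
        not_ready = ready_now != ready_total
        restarted = restarts.isdigit() and int(restarts) > max_restarts
        if status != "Running" or not_ready or restarted:
            unhealthy.append(name)
    return unhealthy


pods = """\
NAME READY STATUS RESTARTS AGE
cb-example-0000 0/1 Running 0 50m
couchbase-operator-admission-7ccbd85455-2qs5t 1/1 Running 0 89m
couchbase-operator-b6496564f-v2xqt 1/1 Running 9 89m
"""

for name in find_unhealthy_pods(pods):
    print(name)
```

Note that this simple check also flags pods in states such as `Completed`, which may be fine for jobs; adjust the condition if that matters.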

With operator 1.2.2 the cluster runs fine, but I am unable to enable LDAP authentication using the manifest file, which is why I am trying to update the operator to 2. Could anyone please help me solve this?

This looks like not all of the upgrade steps were followed. Is this from an upgrade?

No, I tried to install a new cluster using Operator 2.0.0. Below is the log I am getting from operator version 2.0.0. Operator 1.2.2 works fine, but I need to enable LDAP using the Helm chart, which is why I am trying to create a new cluster using operator 2.0.0.

{"level":"info","ts":1590051590.8475218,"logger":"cluster","msg":"Watching new cluster","cluster":"couchbase-test/my-couchbase-couchbase-cluster"}

{"level":"info","ts":1590051590.847752,"logger":"cluster","msg":"Janitor starting","cluster":"couchbase-test/my-couchbase-couchbase-cluster"}

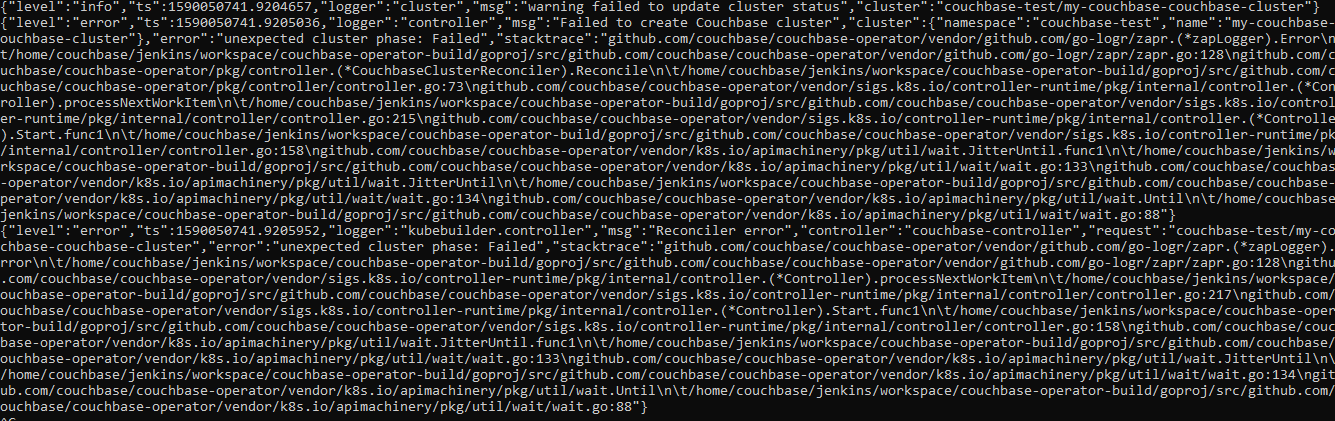

{"level":"info","ts":1590051590.9090197,"logger":"cluster","msg":"warning failed to update cluster status","cluster":"couchbase-test/my-couchbase-couchbase-cluster"}

{"level":"error","ts":1590051590.9090579,"logger":"cluster","msg":"Cluster setup failed","cluster":"couchbase-test/my-couchbase-couchbase-cluster","error":"unexpected cluster phase: Failed","stacktrace":

{"level":"error","ts":1590050741.9205952,"logger":"kubebuilder.controller","msg":"Reconciler error","controller":"couchbase-controller","request":"couchbase-test/my-couchbase-couchbase-cluster","error":"unexpected cluster phase: Failed","stacktrace":"github.com/couchbase/couchbase-operator/vendor/github.com/go-logr/zapr.(*zapLogger).

This issue has been resolved. I was able to deploy the latest operator with a cluster successfully using the Helm repo.

Could you please share the detailed steps for how you fixed it?

Hi, I used the Helm chart below, which fixed my issue.

I also ran into some issues when using the traditional administrator secret for cluster login, so also try the direct username and password option.

Thank you for your reply. I will follow your suggestion.

The same or a very similar issue occurred while trying to do a K8S upgrade against an existing CB cluster. The key message appears below. Any idea how to recover the cluster? The operator is not willing to operate.

2020-09-24T21:21:59.331803724Z {"level":"error","ts":1600982519.3316486,"logger":"cluster","msg":"Cluster setup failed","cluster":"default/couchbase-bddh","error":"unexpected kind invalid in add","stacktrace":"github.com/couchbase/couchbase-operator/vendor/github.com/go-logr/zapr.(*zapLogger).Error\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/github.com/go-logr/zapr/zapr.go:128\ngithub.com/couchbase/couchbase-operator/pkg/cluster.New\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/pkg/cluster/cluster.go:143\ngithub.com/couchbase/couchbase-operator/pkg/controller.(*CouchbaseClusterReconciler).Reconcile\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/pkg/controller/controller.go:71\ngithub.com/couchbase/couchbase-operator/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:215\ngithub.com/couchbase/couchbase-operator/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func1\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/sigs.k8s.io/controller-runtime/pkg/internal/controller/controller.go:158\ngithub.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntil.func1\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:133\ngithub.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait.JitterUntil\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:134\ngithub.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait.Until\n\t/home/couchbase/jenkins/workspace/couchbase-operator-build/goproj/src/github.com/couchbase/couchbase-operator/vendor/k8s.io/apimachinery/pkg/util/wait/wait.go:88"}
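A stack trace packed into a single JSON string like this is hard to read. The sketch below, in Python, splits the `stacktrace` field into (function, file:line) pairs and hides vendored frames, which for this log leaves `pkg/cluster.New` (cluster.go:143) and the reconciler as the operator's own frames. The helper names `stack_frames` and `non_vendor_frames` are hypothetical, and the code assumes the trace alternates a function line with a tab-indented location line, as it does here.

```python
import json


def stack_frames(log_line):
    """Split the "stacktrace" field of one log line into (function, location) pairs."""
    start = log_line.find("{")  # tolerate a timestamp prefix before the JSON
    if start == -1:
        return []
    try:
        entry = json.loads(log_line[start:])
    except json.JSONDecodeError:
        # A truncated line cannot be parsed; report no frames.
        return []
    frames = []
    func = None
    for part in str(entry.get("stacktrace", "")).split("\n"):
        if part.startswith("\t"):
            # Location lines are tab-indented and belong to the preceding function.
            if func is not None:
                frames.append((func, part.strip()))
                func = None
        elif part:
            func = part
    return frames


def non_vendor_frames(frames):
    """Keep only frames whose function is not under a vendor/ directory."""
    return [(f, loc) for f, loc in frames if "/vendor/" not in f]


def print_trace(log_line):
    """Print the non-vendored frames of one log line, innermost first."""
    for func, location in non_vendor_frames(stack_frames(log_line)):
        print(f"{func}\n    {location}")
```

Calling `print_trace` on the full log line above (with straight quotes and no line break in the middle) should list only the frames under `pkg/`.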