Hello,

we’re currently running CSG 3.0.9 on a bucket with 35 million docs, and we’d like to upgrade to CSG 3.1.12. To verify that nothing breaks, we restored our production database on a staging server that runs the same software versions as production (CSG 3.0.9, Couchbase Server 7.2.4). We ensured that all indexes were built and that the staging system behaved normally after importing the production data.

Then we upgraded CSG to 3.1.12 and saw very high load on the machine. No other service was running, so the load must have come from CSG or Couchbase Server. In the Couchbase web interface we saw that roughly 6000 operations per second were performed on the bucket. This high load continued for about 5 hours before it stopped. My initial hunch was that CSG might be performing a compaction, so I checked the compaction status with the REST API, but no compaction was running. I couldn’t find anything about this in the logs or in the docs, so I’m wondering:

- Is this behavior intended or a bug? What is CSG doing after the upgrade?

- Is it safe to upgrade our production database, or does this behavior indicate that something’s wrong?
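One way to script the compaction status check on the Sync Gateway side is a `GET` on the Admin REST API's `/{db}/_compact` endpoint. This minimal sketch assumes the admin interface on `localhost:4985` (as in the startup log later in this thread), the database name `data`, and that the JSON response has a `"status"` field reading `"running"` while a compaction is in progress; please check those details against the Admin REST API reference for your version. Credentials are optional and only needed if admin authentication is enabled.

```python
import base64
import json
import urllib.request
from typing import Optional

# Assumption: Sync Gateway admin API on localhost:4985, as in the startup log.
ADMIN_URL = "http://localhost:4985"


def compaction_status_url(db: str, kind: str = "tombstone", base: str = ADMIN_URL) -> str:
    """URL reporting the most recent compaction of the given type for `db`."""
    return f"{base}/{db}/_compact?type={kind}"


def is_running(status: dict) -> bool:
    """Assumption: an in-progress compaction reports "status": "running"."""
    return status.get("status") == "running"


def fetch_compaction_status(db: str, kind: str = "tombstone",
                            user: Optional[str] = None,
                            password: Optional[str] = None) -> dict:
    """GET the compaction status; adds basic auth only when a user is given."""
    req = urllib.request.Request(compaction_status_url(db, kind))
    if user is not None:
        creds = f"{user}:{password or ''}".encode("utf-8")
        req.add_header("Authorization", "Basic " + base64.b64encode(creds).decode("ascii"))
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

For example, `is_running(fetch_compaction_status("data"))` would be `True` only while a tombstone compaction is active; `kind="attachment"` checks attachment compaction instead.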

Thank you very much

Hello @hermitdemschoenenleb,

- Could you please provide more details on the steps you followed for the upgrade?

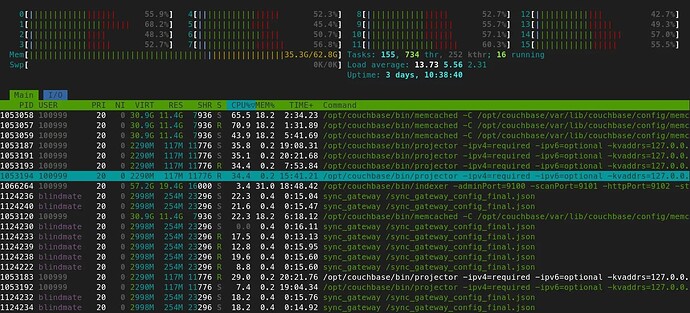

- I can see that Couchbase Server and Sync Gateway are running on the same node, and the htop output shows that most of the CPU is consumed by Couchbase Server processes, not by Sync Gateway.

- The high number of operations could be due to Sync Gateway importing the documents, processing them, and attaching mobile metadata to them.

- You can check the import count by reading the metrics endpoint (`/metrics`, port 4986 by default).
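A minimal Python sketch of that check, assuming the default metrics address `localhost:4986` (which the Sync Gateway startup log later in this thread also shows). It parses the Prometheus text format and keeps only samples whose metric name contains `import`, similar to piping the output through `grep import`:

```python
import urllib.request
from typing import Dict

# Assumption: default metrics address, matching the startup log in this thread.
METRICS_URL = "http://localhost:4986/metrics"


def import_metrics(text: str) -> Dict[str, float]:
    """Collect import-related samples from Prometheus text-format output.

    '# HELP' / '# TYPE' comment lines are skipped; each remaining line is
    assumed to be '<name>{<labels>} <value>' with no trailing timestamp.
    Samples are keyed by metric name plus labels.
    """
    samples: Dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        metric_name = series.split("{", 1)[0]
        if "import" in metric_name:
            samples[series] = float(value)
    return samples


def fetch_import_metrics(url: str = METRICS_URL) -> Dict[str, float]:
    """Download the metrics page and return only the import-related samples."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return import_metrics(resp.read().decode("utf-8"))
```

Calling `fetch_import_metrics()` before and after the upgrade and comparing the two dictionaries would show which import counters moved.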

> is this behavior intended or a bug? What is CSG doing after the upgrade?

The hypothesis of documents being imported by Sync Gateway applies if the documents were loaded into the environment without mobile metadata. Could you please confirm whether the documents had the metadata before the upgrade was started?

> In couchbase web interface we saw that roughly 6000 operations per second were performed on the bucket. This high load continued over something like 5 hours, before it stopped.

Could you please confirm that no other application was connected to Couchbase Server that could have been performing operations?

Hello @ritesh.kumar

Thanks a lot for the quick reply!

> I can see that Couchbase Server and Sync Gateway are running on the same node […]

> Could you please confirm if there was no other application connected to Couchbase Server that could be performing any operations?

Yes, I ran the tests on our staging server, which ran only Couchbase Server and Sync Gateway and nothing else. Also, the staging server is on a different network than our other servers, so nothing else could have accessed it.

> The hypothesis of documents being imported by Sync Gateway applies if the documents loaded to the environment and they do not contain mobile metadata. So could you please confirm if the documents had the metadata before the upgrade was started?

We perform all writes to the database through Sync Gateway and don’t operate on the Couchbase bucket directly → all docs should have Sync Gateway metadata.

> Could you please provide more details into the steps followed for the upgrade?

Sure! I just reran the whole procedure and the same problem appeared again. This is what I did:

- Created a fresh backup on our production servers

- Reset the staging server completely

- Moved the backup to the staging server

- Started Couchbase Server with an empty data directory

- Created a new cluster on the staging server

- Restored the backup on the staging server

- Built all the indexes required for Sync Gateway

- Started Sync Gateway 3.0.9: everything normal, no problems, no load on the server, and the Couchbase Server web interface showed 0 operations per second

- I verified that all docs do indeed have Sync Gateway metadata (I hope the query I used is correct):

  ```sql
  SELECT COUNT(*) FROM `data` WHERE (meta().`xattrs`).`_sync` IS NOT VALUED AND (not ((meta().`id`) like "\\_sync:%"));
  ```

  - → result: 121
  - I also ran a `SELECT *` on these docs: all 121 had the JSON body `{ "_purged": true }`
  - I’m not sure how these 121 docs were created, but I suppose such a small number of docs shouldn’t keep Couchbase Server busy for several hours

- I stopped Sync Gateway 3.0.9

- You suspected that, on upgrade, Sync Gateway imports documents and that this causes the high load. Therefore, I set `"import_docs": false` in my configuration

- I started Sync Gateway 3.1.12: no high load, everything working normally

- I stopped Sync Gateway, switched `import_docs` back to `true`, and restarted Sync Gateway 3.1.12: high load for 3 hours

- → Apparently, Sync Gateway does indeed run an import on upgrade. I don’t understand why, though, since nearly all the documents in the bucket already have sync metadata

- During the period of high load, the Couchbase Server UI showed 6500 Set operations per second on the bucket

- After 3 hours, the high load stopped. I checked the output of the metrics API as you suggested:

  ```
  root@pod:/blindmate/server# curl localhost:4986/metrics | grep import
  # HELP sgw_collection_import_count import_count
  # TYPE sgw_collection_import_count counter
  sgw_collection_import_count{collection="_default._default",database="data"} 0
  # HELP sgw_shared_bucket_import_import_cancel_cas import_cancel_cas
  # TYPE sgw_shared_bucket_import_import_cancel_cas counter
  sgw_shared_bucket_import_import_cancel_cas{database="data"} 0
  # HELP sgw_shared_bucket_import_import_count import_count
  # TYPE sgw_shared_bucket_import_import_count counter
  sgw_shared_bucket_import_import_count{database="data"} 0
  # HELP sgw_shared_bucket_import_import_error_count import_error_count
  # TYPE sgw_shared_bucket_import_import_error_count counter
  sgw_shared_bucket_import_import_error_count{database="data"} 1
  # HELP sgw_shared_bucket_import_import_high_seq import_high_seq
  # TYPE sgw_shared_bucket_import_import_high_seq counter
  sgw_shared_bucket_import_import_high_seq{database="data"} 0
  # HELP sgw_shared_bucket_import_import_partitions import_partitions
  # TYPE sgw_shared_bucket_import_import_partitions gauge
  sgw_shared_bucket_import_import_partitions{database="data"} 0
  # HELP sgw_shared_bucket_import_import_processing_time import_processing_time
  # TYPE sgw_shared_bucket_import_import_processing_time gauge
  sgw_shared_bucket_import_import_processing_time{database="data"} 0
  ```

  → Apparently, no docs were actually imported

- Sync Gateway logs:

  ```
  2025-08-13T16:45:58.610+02:00 ==== Couchbase Sync Gateway/3.1.12(2;afacff6) CE ====
  2025-08-13T16:45:58.610+02:00 [INF] Loading content from [/sync_gateway_config_final.json] ...
  2025-08-13T16:45:58.641+02:00 [INF] Found unknown fields in startup config. Attempting to read as legacy config.
  2025-08-13T16:45:58.642+02:00 [INF] Loading content from [/sync_gateway_config_final.json] ...
  2025-08-13T16:45:58.682+02:00 [WRN] Running in legacy config mode -- rest.legacyServerMain() at main_legacy.go:25
  2025-08-13T16:45:58.682+02:00 [INF] Loading content from [/sync_gateway_config_final.json] ...
  2025-08-13T16:45:58.736+02:00 [INF] Logging: Console to stderr
  2025-08-13T16:45:58.736+02:00 [INF] Logging: Files disabled
  2025-08-13T16:45:58.736+02:00 [ERR] No log_file_path property specified in config, and --defaultLogFilePath command line flag was not set. Log files required for product support are not being generated.
  2025-08-13T16:45:58.736+02:00 [INF] Logging: Console level: trace
  2025-08-13T16:45:58.736+02:00 [INF] Logging: Console keys: []
  2025-08-13T16:45:58.736+02:00 [INF] Logging: Redaction level: partial
  2025-08-13T16:45:58.736+02:00 [DBG] requestedSoftFDLimit < currentSoftFdLimit (5000 <= 250000) no action needed
  2025-08-13T16:45:58.736+02:00 [INF] Logging stats with frequency: &{1m0s}
  2025-08-13T16:45:58.736+02:00 [INF] Initializing server connections...
  2025-08-13T16:45:58.820+02:00 [INF] Finished initializing server connections
  2025-08-13T16:45:58.820+02:00 [INF] Opening db /data as bucket "data", pool "default", server <couchbase://localhost>
  2025-08-13T16:45:58.820+02:00 [INF] Opening Couchbase database data on <couchbase://localhost> as user "<ud>data</ud>"
  2025-08-13T16:45:58.820+02:00 [INF] Setting query timeouts for bucket data to 1m15s
  2025-08-13T16:45:58.853+02:00 [INF] Setting max_concurrent_query_ops to 256 based on query node count (1)
  2025-08-13T16:45:58.853+02:00 [INF] b:data._default._default Initializing indexes with numReplicas: 0...
  2025-08-13T16:45:58.866+02:00 [DBG] b:data._default._default Skipping index: users ...
  2025-08-13T16:45:58.866+02:00 [DBG] b:data._default._default Skipping index: roles ...
  2025-08-13T16:45:58.876+02:00 [INF] b:data._default._default Verifying index availability...
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_access_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_allDocs_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_channels_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_roleAccess_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_syncDocs_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Index sg_tombstones_x1 is online
  2025-08-13T16:45:58.880+02:00 [INF] b:data._default._default Indexes ready
  2025-08-13T16:45:58.880+02:00 [INF] delta_sync enabled=false with rev_max_age_seconds=86400 for database data
  2025-08-13T16:45:58.880+02:00 [WRN] Deprecation notice: setting database configuration option "allow_conflicts" to true is due to be removed. In the future, conflicts will not be allowed. -- rest.dbcOptionsFromConfig() at server_context.go:1212
  2025-08-13T16:45:58.886+02:00 [INF] c:CleanAgedItems db:data Created background task: "CleanAgedItems" with interval 1m0s
  2025-08-13T16:45:58.890+02:00 [INF] db:data Waiting for database init to complete...
  2025-08-13T16:45:58.890+02:00 [INF] db:data Database init completed, starting online processes
  2025-08-13T16:45:58.913+02:00 [INF] c:InsertPendingEntries db:data Created background task: "InsertPendingEntries" with interval 2.5s
  2025-08-13T16:45:58.913+02:00 [INF] c:CleanSkippedSequenceQueue db:data Created background task: "CleanSkippedSequenceQueue" with interval 30m0s
  2025-08-13T16:46:00.789+02:00 [INF] db:data Using metadata purge interval of 3.00 days for tombstone compaction.
  2025-08-13T16:46:00.789+02:00 [INF] c:Compact db:data Created background task: "Compact" with interval 24h0m0s
  2025-08-13T16:46:00.791+02:00 [INF] Starting metrics server on 127.0.0.1:4986
  2025-08-13T16:46:00.791+02:00 [INF] Starting admin server on 127.0.0.1:4985
  2025-08-13T16:46:00.791+02:00 [INF] Starting server on :4984 ...
  ```

- Sync Gateway configuration:

  ```json
  {
    "disable_persistent_config": true,
    "use_tls_server": false,
    "logging": {
      "console": {
        "log_level": "trace",
        "color_enabled": true
      }
    },
    "databases": {
      "data": {
        "num_index_replicas": 0,
        "username": "data",
        "password": "PASSWORD",
        "server": "http://localhost:8091",
        "allow_conflicts": true,
        "bucket": "data",
        "enable_shared_bucket_access": true,
        "import_docs": true,
        "users": {
          USERS
        },
        "event_handlers": {
          "document_changed": EVENT_HANDLERS
        },
        "sync": SYNCFUNCTION
      }
    },
    "CORS": {
      "origin": [],
      "loginOrigin": [
        "*"
      ],
      "headers": [
        "Content-Type",
        "Authorization"
      ],
      "maxAge": 1728000
    }
  }
  ```
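The metadata check from the steps above can also be rerun from a script, for example to compare counts between runs. This is a minimal Python sketch, assuming the Query service REST endpoint on its default port 8093 (`/query/service`) and a user with query permissions on the `data` bucket. The statement is the one used above, with the trailing semicolon dropped since the endpoint takes a single statement.

```python
import base64
import json
import urllib.parse
import urllib.request

# Assumption: Query service REST endpoint on its default port 8093.
QUERY_URL = "http://localhost:8093/query/service"

# The metadata check used above, minus the trailing semicolon.
MISSING_SYNC_XATTR = (
    r'SELECT COUNT(*) FROM `data` '
    r'WHERE (meta().`xattrs`).`_sync` IS NOT VALUED '
    r'AND (not ((meta().`id`) like "\\_sync:%"))'
)


def build_query_request(statement: str, user: str, password: str,
                        url: str = QUERY_URL) -> urllib.request.Request:
    """POST request carrying the statement as a form parameter, with basic auth."""
    body = urllib.parse.urlencode({"statement": statement}).encode("utf-8")
    req = urllib.request.Request(url, data=body, method="POST")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Content-Type", "application/x-www-form-urlencoded")
    return req


def run_count(statement: str, user: str, password: str) -> int:
    """Run a COUNT(*) statement and return the single number it produces."""
    # Generous timeout: a predicate on xattrs may need to scan the whole bucket.
    with urllib.request.urlopen(build_query_request(statement, user, password),
                                timeout=600) as resp:
        payload = json.load(resp)
    # An un-aliased projection such as COUNT(*) is returned under the key "$1".
    return payload["results"][0]["$1"]
```

If nothing changed in between, `run_count(MISSING_SYNC_XATTR, user, password)` would be expected to return the same 121 reported above.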

Overall, it looks like Sync Gateway runs an import when upgraded. What I find strange is:

- Why does Sync Gateway run an import even though nearly all the docs are already imported?

- Why does Couchbase Server see 6500 Set operations per second for hours, while `sgw_shared_bucket_import_import_count` is 0?
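A rough back-of-envelope check puts the second question in proportion. It uses only the approximate figures reported in this thread: about 6500 Sets/s for about 3 hours on the rerun, roughly 6000 ops/s for something like 5 hours on the first run, and 35 million docs.

$$
\frac{6500 \times 3 \times 3600}{35 \times 10^{6}} = \frac{70.2 \times 10^{6}}{35 \times 10^{6}} \approx 2.0,
\qquad
\frac{6000 \times 5 \times 3600}{35 \times 10^{6}} = \frac{108 \times 10^{6}}{35 \times 10^{6}} \approx 3.1
$$

So on both runs the bucket saw on the order of two to three write operations per document. That is the scale of a pass over the whole bucket, not over the 121 docs without `_sync` metadata, even though the import counter stayed at 0.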

If you need anything else for debugging, I’m happy to rerun the whole backup, restore, and upgrade procedure.

@ritesh.kumar do you have any idea what’s going on during the upgrade? Do you consider this to be normal, i.e. can I do the upgrade on production as well, or might there be something wrong?
