This is a universal truth! A reliable reproduction is often half or more of the battle.

I’ll try to get it done by Monday!

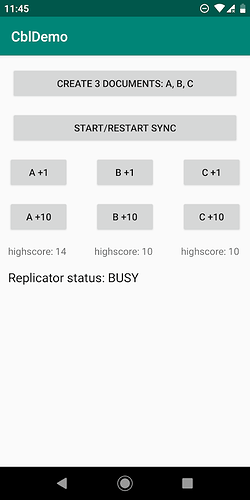

I also observed another interesting detail. I posted above that sync stops for the device (A) that has the CBL status BUSY. That is incorrect. Device (A) shows that status after a local document update, and that update never syncs up. But if another device (B) updates a different document, it is received and displayed by device (A). So pull replication keeps working.

I created a demo app but was never able to reproduce the issue.

Here are all my findings so far:

- unable to reproduce with demo app in dev cluster

- unable to reproduce with production app in dev cluster

- no issues with the production app in the production cluster during the beta test phase (~100 unique users per day)

- Load balancer server, web app server, SG servers and Couchbase Server servers have plenty of unused compute, network and storage resources

- I easily run into the issue with the production app in the production cluster, but I cannot reproduce it on demand. Once the issue has appeared on one device, it’s easy to see it on a different device, too. As far as I can tell, enough time just needs to pass since the SG service was last restarted. Yesterday it took around an hour; today it has been running fine for more than half a day and still is.

- issue appears when:

  - app was in the background and came to the foreground

  - app was plugged into a wall socket with the screen kept on. After some time without using the app, the issue appeared after updating a document on the device

- Restarting the SG service fixes the issue immediately

Important to note

I have been using SG 2.6 for a while already. The described issue only appeared after most users updated to the production app with CBL 2.6 / CBL 2.7 SNAPSHOT.

Workaround until the issue is fixed

I could restart the SG service via a cron job every so often. Any other recommendations?

Looping in @humpback_whale and @fatmonkey45

Are you still seeing the issues you described? In development only? If so, are you able to reproduce them in a minimal app you could share? Or did you find a workaround you would like to share?

I also tested @humpback_whale’s issue but could not reproduce it in production. I can edit documents in the Couchbase Server dashboard; after turning airplane mode off, the change is pulled down and the replicator status goes back to IDLE.

@benjamin_glatzeder

We are still experiencing the issue with CBL 2.5.3. Changing it to CBL 2.6 or 2.7 did not resolve the problem, so we kept it on 2.5.3.

On the infrastructure side, everything seems to have plenty of compute and storage resources, just as @benjamin_glatzeder observes.

We have around 30 users, mostly up to 8 per day, and 2 buckets in Couchbase with around 200k documents in each.

The Android app has two replicators (one per bucket) connecting to the same SG endpoint.

If we reinstall the app on the device, the initial sync takes a while (as expected, given the number of documents). But once the replicators go IDLE and we leave the app running for a few minutes, or disconnect and reconnect the network, the issue appears.

This happens in our Development and Production environments.

Is there any chance that this has something to do with a firewall/gateway of some kind? It really does sound like something that could happen when some kind of port/address mapping times out…

My current fix is:

- Create a user account with only 1 document

- In a loop:

  - Update the document periodically

  - Wait several seconds

  - Check if the replicator status is still BUSY

  - If it is still BUSY, ssh into the server and restart the SG service
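The watchdog loop above could be scripted roughly like this. It is a minimal Python sketch, not the actual script used here: the callables for updating the probe document and reading the replicator status are hypothetical placeholders for whatever the test device exposes, and the restart command assumes Sync Gateway runs as a systemd unit named `sync_gateway` that the ssh user may restart via `sudo` without a password prompt.

```python
import subprocess
import time
from typing import Callable, List


def ssh_restart_command(host: str, service: str = "sync_gateway") -> List[str]:
    """Build the ssh command that restarts Sync Gateway on `host`.

    Assumption: SG runs as a systemd unit called `sync_gateway`.
    """
    return ["ssh", host, "sudo", "systemctl", "restart", service]


def restart_via_ssh(host: str) -> None:
    """Run the restart command; raises CalledProcessError on failure."""
    subprocess.run(ssh_restart_command(host), check=True)


def watchdog_step(
    update_probe_doc: Callable[[], None],
    replicator_is_busy: Callable[[], bool],
    restart_sync_gateway: Callable[[], None],
    wait: Callable[[float], None] = time.sleep,
    settle_seconds: float = 10.0,
) -> bool:
    """One iteration: write the probe doc, wait, restart SG if still BUSY.

    Returns True if a restart was triggered.
    """
    update_probe_doc()          # local change the replicator must push
    wait(settle_seconds)        # give the replicator time to return to IDLE
    if replicator_is_busy():
        restart_sync_gateway()
        return True
    return False


def run_watchdog(
    step: Callable[[], bool],
    interval_seconds: float,
    iterations: int,
    wait: Callable[[float], None] = time.sleep,
) -> int:
    """Run `step` repeatedly and return how many restarts it triggered."""
    restarts = 0
    for _ in range(iterations):
        if step():
            restarts += 1
        wait(interval_seconds)
    return restarts
```

Injecting `wait` and the three actions keeps the loop testable without a live cluster; in a real deployment `restart_sync_gateway` might be `lambda: restart_via_ssh("sg-host")`, where `sg-host` is a placeholder.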

I tested the setup without automatic service restarts for a day or two and could confirm that the sync status was BUSY (and syncing had stopped) on my other devices running the production app. The service is restarted between 1 and 3 times per day. I wasn’t able to find any firewall/port timeouts. I think my issue is that the write queue on the SG server grew too big, as pointed out by @bbrks in this post.

The issues I experienced have been gone since upgrading to SG 2.7. Hopefully this will solve your issues, too, @humpback_whale and @fatmonkey45.

Great work all around!

Thanks @benjamin_glatzeder for the update!

I have updated both SG and CBL to 2.7; however, of the two buckets I am replicating, one still has the same issue:

V:NETWORK: WebSocketListener received data of 127 bytes

V:NETWORK: {N8litecore4blip10ConnectionE#11} Finished receiving 'norev' REQ #114341 N

D:NETWORK: C4Socket.received @2919712968: 127

D:NETWORK: C4Socket.completedReceive @2919712968: com.couchbase.lite.internal.replicator.CBLWebSocket@a8b0850

I:REPLICATOR: {N8litecore4repl6PullerE#18} activityLevel=busy: pendingResponseCount=0, _caughtUp=0, _pendingRevMessages=193, _activeIncomingRevs=0

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4blip6BLIPIOE#15} Received frame: REQ #114342 --N-, length 123

V:NETWORK: {N8litecore4blip10ConnectionE#11} Receiving 'norev' REQ #114342 N

V:NETWORK: {N8litecore4blip10ConnectionE#11} Finished receiving 'norev' REQ #114342 N

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

D:NETWORK: C4Socket.completedReceive @2919712968: com.couchbase.lite.internal.replicator.CBLWebSocket@a8b0850

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4blip6BLIPIOE#15} Received frame: REQ #114343 --N-, length 123

V:NETWORK: {N8litecore4blip10ConnectionE#11} Receiving 'norev' REQ #114343 N

V:NETWORK: {N8litecore4blip10ConnectionE#11} Finished receiving 'norev' REQ #114343 N

D:NETWORK: C4Socket.completedReceive @2919712968: com.couchbase.lite.internal.replicator.CBLWebSocket@a8b0850

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4blip6BLIPIOE#15} Received frame: REQ #114344 --N-, length 123

V:NETWORK: {N8litecore4blip10ConnectionE#11} Receiving 'norev' REQ #114344 N

V:NETWORK: {N8litecore4blip10ConnectionE#11} Finished receiving 'norev' REQ #114344 N

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

I:REPLICATOR: {N8litecore4repl6PullerE#18} activityLevel=busy: pendingResponseCount=0, _caughtUp=0, _pendingRevMessages=192, _activeIncomingRevs=0

I:REPLICATOR: {N8litecore4repl6PullerE#18} activityLevel=busy: pendingResponseCount=0, _caughtUp=0, _pendingRevMessages=191, _activeIncomingRevs=0

I:REPLICATOR: {N8litecore4repl6PullerE#18} activityLevel=busy: pendingResponseCount=0, _caughtUp=0, _pendingRevMessages=190, _activeIncomingRevs=0

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

V:NETWORK: WebSocketListener received data of 127 bytes

D:NETWORK: C4Socket.received @2919712968: 127

V:NETWORK: {N8litecore4repl12C4SocketImplE#14} Received 127-byte message

@fatmonkey45: That log seems to show a replicator successfully pulling 127-byte messages. To analyze your issue, we’ll need something that actually shows the replicator state and the number of bytes that are still outstanding…
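One way to see whether the puller is draining or sitting still is to pull the `activityLevel` lines out of a verbose log and compare them over time; in the excerpt above, `_pendingRevMessages` goes 193, 192, 191, 190. The Python sketch below is a hypothetical helper, not part of Couchbase Lite, and its regular expression assumes the exact Puller line format quoted in this thread, which may differ between CBL versions.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Matches Puller status lines in the (assumed) format quoted above, e.g.
# "{N8litecore4repl6PullerE#18} activityLevel=busy: pendingResponseCount=0, ..."
PULLER_LINE = re.compile(
    r"PullerE#\d+\} activityLevel=(?P<level>\w+): "
    r"pendingResponseCount=(?P<pending_responses>\d+), "
    r"_caughtUp=(?P<caught_up>\d+), "
    r"_pendingRevMessages=(?P<pending_revs>\d+), "
    r"_activeIncomingRevs=(?P<active_revs>\d+)"
)


@dataclass
class PullerStatus:
    level: str
    pending_responses: int
    caught_up: int
    pending_revs: int
    active_revs: int


def parse_puller_status(line: str) -> Optional[PullerStatus]:
    """Return the Puller counters from one log line, or None for other lines."""
    m = PULLER_LINE.search(line)
    if m is None:
        return None
    return PullerStatus(
        level=m["level"],
        pending_responses=int(m["pending_responses"]),
        caught_up=int(m["caught_up"]),
        pending_revs=int(m["pending_revs"]),
        active_revs=int(m["active_revs"]),
    )


def pending_rev_trend(lines: Iterable[str]) -> List[int]:
    """_pendingRevMessages values in log order, skipping non-Puller lines."""
    statuses = (parse_puller_status(line) for line in lines)
    return [s.pending_revs for s in statuses if s is not None]


def is_draining(trend: List[int]) -> bool:
    """True if the pending-revision count went down across the samples."""
    return len(trend) >= 2 and trend[-1] < trend[0]
```

This only summarizes the Puller counters that the log already prints; it does not report bytes outstanding, which would still have to come from the replicator status itself.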

I have a similar issue with SG 2.6 and CB 6.0.3 Enterprise Edition; my clients use the latest Android and iOS CBL. We use one PushAndPull replicator in our clients. We have only a few hundred clients, and SG and CB have more than enough resources.

Every day we have clients complaining about the following scenario:

- Two or more devices at one client location push and pull data with SG; the customer is satisfied and replication between the devices is fast.

- Every day some customers complain that some of their devices don’t get replicated while the other devices are replicating. Restarting the app doesn’t help.

- The only workaround so far is restarting Sync Gateway; after that, all devices get replicated.

I’m running into this stuck BUSY state myself. I’m testing replication recovery after a network outage, and the replicator doesn’t get unstuck, even after the network is restored.

CBL 2.8.1 on Android 10

I’m updating a local document every 30 seconds and logging the replicator status every 30 seconds…

Status{activityLevel=BUSY, progress=Progress{completed=12897, total=13083}, error=null}

I’m thinking about restarting the replicator if `completed < total` and `completed` hasn’t changed in a few minutes.

-Tim
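The restart rule Tim describes could be expressed as a small stall detector fed with the 30-second status samples. This Python sketch is an illustration under assumptions: the 180-second threshold merely stands in for "a few minutes", and the caller is expected to supply `completed`/`total` from the replicator status (on Android, presumably the status progress's `getCompleted()`/`getTotal()`) and to perform the actual restart, for example by stopping and starting the replicator.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StallDetector:
    """Flags a replicator whose progress has stopped moving.

    Rule: restart when completed < total and `completed` has not changed
    for `stall_seconds`. The 180 s default is an arbitrary illustration.
    """
    stall_seconds: float = 180.0
    _last_completed: Optional[int] = field(default=None, init=False)
    _since: float = field(default=0.0, init=False)

    def observe(self, completed: int, total: int, now: float) -> bool:
        """Feed one status sample; return True if a restart is warranted."""
        if completed >= total:
            # Caught up: nothing outstanding, forget any earlier stall.
            self._last_completed = None
            return False
        if completed != self._last_completed:
            # Progress moved (or first sample): restart the stall timer.
            self._last_completed = completed
            self._since = now
            return False
        if now - self._since >= self.stall_seconds:
            # Stalled long enough; reset the timer so the next sample
            # does not immediately request another restart.
            self._since = now
            return True
        return False
```

Resetting the timer after a positive result means a replicator that stays stuck is flagged again only after another full `stall_seconds`, rather than on every sample.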

Did you ever find a solution to this? I am stuck on a similar problem.

Not really, but things are more stable than in the past. I am not sure if it was network-related.

Hi, there is more information about this issue over here: Replicator pull continous stop after network error in couchbase lite 2.8.x android - #2 by Giallon

Upgrading to the latest CBL might help resolve the issue, as we have fixed issues related to the replicator hanging in the past few releases. If that still doesn’t solve it, please attach the full verbose logs here. A full thread dump taken while the replicator is hanging would also be very useful.